Eli Pariser argues that “filter bubbles” are bad for us and bad for democracy:

As web companies strive to tailor their services (including news and search results) to our personal tastes, there’s a dangerous unintended consequence: We get trapped in a “filter bubble” and don’t get exposed to information that could challenge or broaden our worldview.

Filter bubbles are close cousins of confirmation bias, groupthink, polarization and other cognitive and social pathologies.

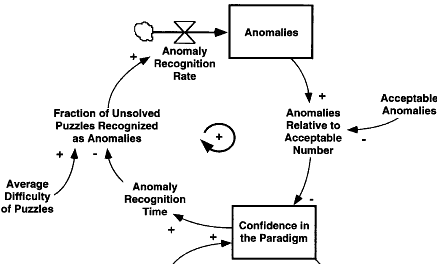

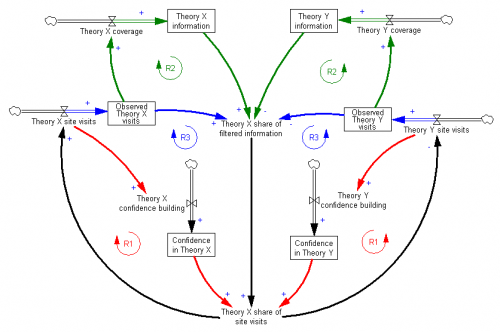

A key feedback is this reinforcing loop, from Sterman & Wittenberg’s model of path dependence in Kuhnian scientific revolutions:

As confidence in an idea grows, the delay in recognition (or frequency of outright rejection) of anomalous information grows larger. As a result, confidence in the idea (flat earth, the 100 mpg carburetor) can grow far beyond the level that would be considered reasonable if contradictory information were recognized.
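To make the loop concrete, here is a minimal sketch in Python. It is not the Sterman & Wittenberg model; the function name, the parameters (`support`, `anomaly`) and the assumption that the recognized fraction of anomalies is simply `1 - confidence` are all illustrative choices of mine.

```python
def simulate_confidence(discount_anomalies, support=0.1, anomaly=0.1,
                        c0=0.1, steps=200):
    """Toy confidence model (hypothetical, for illustration only).

    Confidence c lies in [0, 1]. Supporting evidence pushes c up;
    recognized anomalies push it down. When discount_anomalies is True,
    the fraction of anomalies that is recognized shrinks as c grows,
    which closes the reinforcing loop described above.
    """
    c = c0
    for _ in range(steps):
        # Assumed form: recognition falls linearly with confidence.
        recognized = (1.0 - c) if discount_anomalies else 1.0
        gain = support * (1.0 - c)          # supporting evidence
        loss = anomaly * recognized * c     # recognized contrary evidence
        c += gain - loss
    return c


if __name__ == "__main__":
    print("anomalies fully recognized:", round(simulate_confidence(False), 3))
    print("anomalies discounted:      ", round(simulate_confidence(True), 3))
```

With equal amounts of supporting and contrary evidence, the run that recognizes every anomaly settles at a middling confidence, while the run that discounts anomalies keeps climbing toward certainty.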

The dynamics resulting from this and other positive feedbacks play out in many spheres. Wittenberg & Sterman give an example:

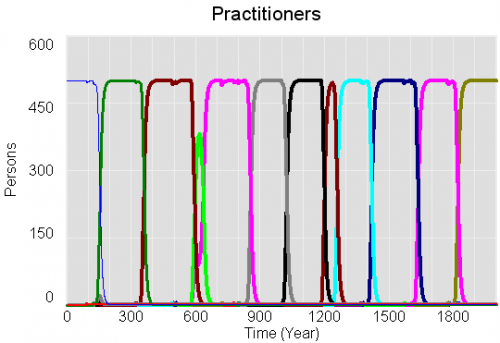

The dynamics generated by the model resemble the life cycle of intellectual fads. Often a promising new idea rapidly becomes fashionable through excessive optimism, aggressive marketing, media hype, and popularization by gurus. Many times the rapid influx of poorly trained practitioners, or the lack of established protocols and methods, causes expectations to outrun achievements, leading to a backlash and disaffection. Such fads are commonplace, especially in (quack) medicine and most particularly in the world of business, where “new paradigms” are routinely touted in the pages of popular journals of management, only to be displaced in the next issue by what many business people have cynically come to call the next “flavor of the month.”

Typically, a guru proposes a new theory, tool, or process promising to address persistent problems facing businesses (that is, a new paradigm claiming to solve the anomalies that have undermined the old paradigm.) The early adopters of the guru’s method spread the word and initiate some projects. Even in cases where the ideas of the guru have little merit, the energy and enthusiasm a team can bring to bear on a problem, coupled with Hawthorne and placebo effects and the existence of “low hanging fruit” will often lead to some successes, both real and apparent. Proponents rapidly attribute these successes to the use of the guru’s ideas. Positive word of mouth then leads to additional adoption of the guru’s ideas. (Of course, failures are covered up and explained away; as in science there is the occasional fraud as well.) Media attention further spreads the word about the apparent successes, further boosting the credibility and prestige of the guru and stimulating additional adoption.

As people become increasingly convinced that the guru’s ideas work, they are less and less likely to seek or attend to disconfirming evidence. Management gurus and their followers, like many scientists, develop strong personal, professional, and financial stakes in the success of their theories, and are tempted to selectively present favorable and suppress unfavorable data, just as scientists grow increasingly unable to recognize anomalies as their familiarity with and confidence in their paradigm grows. Positive feedback processes dominate the dynamics, leading to rapid adoption of those new ideas lucky enough to gain a sufficient initial following. …

The wide range of positive feedbacks identified above can lead to the swift and broad diffusion of an idea with little intrinsic merit because the negative feedbacks that might reveal that the tools don’t work operate with very long delays compared to the positive loops generating the growth. …

For filter bubbles, I think the key positive loops are as follows:

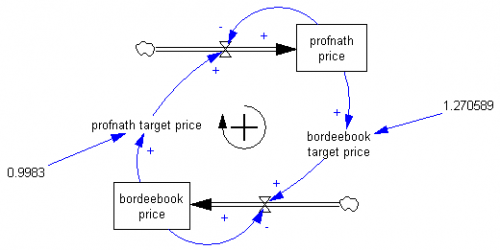

Loops R1 are the user’s well-worn path. We preferentially visit sites presenting information (theory x or y) in which we have confidence. In doing so, we consider only a subset of all information, building our confidence in the visited theory. This is a built-in part of our psychology, and to some extent a necessary part of the process of winnowing the world’s information fire hose down to a usable stream.

Loops R2 involve the information providers. When we visit a site, advertisers and other observers (Nielsen) notice, and this provides the resources (ad revenue) and motivation to create more content supporting theory x or y. This has also been a part of the information marketplace for a long time.

R1 and R2 are stabilized by some balancing loops (not shown). Users get bored with an all-theory-y diet, and seek variety. Providers seek out controversy (real or imagined) and sensationalize x-vs-y battles. As Pariser points out, there’s less scope for the positive loops to play out in an environment with a few broad media outlets, like city newspapers. The front page of the Bozeman Daily Chronicle has to work for a wide variety of readers. If the paper let the positive loops run rampant, it would quickly lose half its readership. In the online world, with information customized at the individual level, there’s no such constraint.

Individual filtering introduces R3. The filter observes site visit patterns and preferentially serves up information consistent with past preferences. This introduces a third set of reinforcing feedback processes: as users begin to see what they prefer, they also learn to prefer what they see. In addition, on Facebook and other social networking sites every person is essentially a site, and people include one another in networks preferentially. This is another mechanism implementing loop R1: birds of a feather flock together and share information consistent with their mutual preferences, potentially following one another down conceptual rabbit holes.
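A rough way to see how R3 shifts the balance is a toy simulation of one user choosing between theories x and y. Everything here is an assumption made for illustration: the function names, the linear exposure rule, and the `learn` and `variety` parameters. It is a sketch of the loops as described, not a calibrated model.

```python
def seen_share(p, filter_strength):
    """Share of theory-x content the user ends up reading.

    p is the user's current preference for x (0..1). filter_strength is
    0 for a broad outlet that shows x and y equally, and 1 for a filter
    that fully matches exposure to the user's preference.
    """
    exposure_x = 0.5 + filter_strength * (p - 0.5)
    # Self-selection (R1): the user clicks x in proportion to p.
    x = exposure_x * p
    y = (1.0 - exposure_x) * (1.0 - p)
    return x / (x + y)


def simulate_preference(filter_strength, p0=0.55, learn=0.5,
                        variety=0.1, steps=500):
    """Iterate the preference; all parameter values are hypothetical."""
    p = p0
    for _ in range(steps):
        # R3: the user learns to prefer what they see.
        drift = learn * (seen_share(p, filter_strength) - p)
        # Balancing loop: boredom pulls the user back toward a mixed diet.
        boredom = variety * (p - 0.5)
        p = min(max(p + drift - boredom, 0.0), 1.0)
    return p


if __name__ == "__main__":
    for strength in (0.0, 0.5, 1.0):
        print(strength, round(simulate_preference(strength), 3))
```

In this sketch, a user who starts with a slight lean toward x drifts back to an even mix when there is no filter, because variety-seeking dominates. With full personalization the same user ends up strongly committed to x, because the reinforcing loop overwhelms the balancing one.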

The result of the social web and algorithmic filtering is to upset the existing balance of positive and negative feedback. The question is, were things better before, or are they better now?

I’m not exactly sure how to tell. Presumably one could observe trends in political polarization and duration of fads for an indication of the direction of change, but that still leaves open the question of whether we have more or less than the “optimal” quantity of pet rocks, anti-vaccine campaigns and climate skepticism.

My suspicion is that we now have too much positive feedback. This is consistent with Wittenberg & Sterman’s insight from the modeling exercise, that the positive loops are fast, while the negative loops are weak or delayed. They offer a prescription for that:

The results of our model suggest that the long-term success of new theories can be enhanced by slowing the positive feedback processes, such as word of mouth, marketing, media hype, and extravagant claims of efficacy by which new theories can grow, and strengthening the processes of theory articulation and testing, which can enhance learning and puzzle-solving capability.

In the video, Pariser implores the content aggregators to carefully ponder the consequences of filtering. I think that also implies more negative feedback in algorithms. It’s not clear that providers have an incentive to do that, though. The positive loops tend to reward individuals for successful filtering, while the risks (e.g., catastrophic groupthink) accrue partly to society. At the same time, it’s hard to imagine a regulatory fix that does not flirt with censorship.

Absent a global fix, I think it’s incumbent on individuals to practice good mental hygiene, by seeking diverse information that stands some chance of refuting their preconceptions once in a while. If enough individuals demand transparency in filtering, as Pariser suggests, it may even be possible to gain some local control over the positive loops we participate in.

I’m not sure that goes far enough, though. We need tools that serve the social equivalent of “strengthening the processes of theory articulation and testing” to improve our ability to think and talk about complex systems. One such attempt is the “collective intelligence” behind Climate Colab. It’s not quite Facebook-scale yet, but it’s a start. Semantic web initiatives are starting to help by organizing detailed data, but we’re a long way from having a “behavioral dynamic web” that translates structure into predictions of behavior in a shareable way.

Update: From Tech Review, technology for breaking the bubble