I’m still attracted to the idea of objective measurements of teaching performance.* But I’m wary of what appear to be some pretty big limitations in current implementations.

It’s interesting reading the teacher comments on the LA Times’ teacher value added database, because many teachers appear to have a similar view – conceptually supportive, but wary of caveats and cognizant of many data problems. (Interestingly, the LAT ratings seem to have higher year-on-year and cross-subject reliability, which is much more like what I would expect from a useful metric. I can only browse incrementally though, so seeing the full dataset rather than individual samples might reveal otherwise.)

My takeaways on the value added measurements:

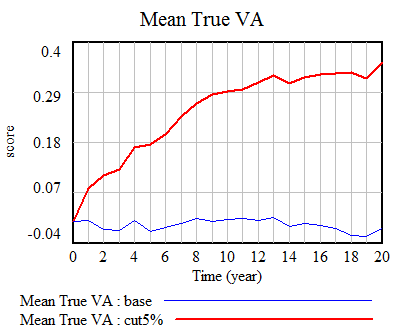

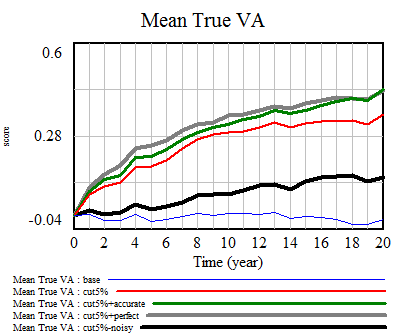

- Within reason, high measurement error by itself is not an obstacle to getting some performance benefit from value added rankings.

- From released data, I can’t satisfy myself that the NYC value added methods have measurement error “within reason.”

- High measurement error is rough on individual teachers.

- This makes current use of VAM for tenure decisions suspect.

- It makes it hard for teachers to learn from their better-performing peers, because identification of high performers becomes somewhat random.

- Feedback effects severely limit the potential for widespread use of VAM to improve education performance across the board.
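A toy simulation illustrates how the first and third points can both be true. This is only a minimal sketch under assumptions of my own, not the NYC model: true teacher quality is drawn from a standard normal distribution, the observed score adds independent normal noise, and the names `simulate_selection`, `noise_sd`, and `top_fraction` are hypothetical. It picks the top-scoring teachers by the noisy measurement, then checks how good that group really is on average and how many of its members are actually below average.

```python
import random
import statistics


def simulate_selection(n_teachers=10_000, noise_sd=2.0, top_fraction=0.2, seed=0):
    """Select teachers by a noisy score; report the true quality of the selected group.

    Assumptions (illustrative only): true quality ~ N(0, 1), and the observed
    score equals true quality plus independent N(0, noise_sd) measurement error.
    """
    rng = random.Random(seed)
    true_quality = [rng.gauss(0.0, 1.0) for _ in range(n_teachers)]
    observed = [q + rng.gauss(0.0, noise_sd) for q in true_quality]

    # Rank teachers by the noisy observed score and keep the top fraction.
    ranked = sorted(range(n_teachers), key=lambda i: observed[i], reverse=True)
    n_top = int(n_teachers * top_fraction)
    selected = ranked[:n_top]

    # Group-level benefit: mean true quality of the selected teachers
    # (the population mean is about 0 by construction).
    mean_selected = statistics.fmean([true_quality[i] for i in selected])

    # Individual-level error: share of "top" teachers who are truly below average.
    share_below_average = sum(1 for i in selected if true_quality[i] < 0) / n_top
    return mean_selected, share_below_average


if __name__ == "__main__":
    mean_selected, share_below_average = simulate_selection()
    print(f"mean true quality of selected group: {mean_selected:.2f}")
    print(f"share of selected who are truly below average: {share_below_average:.2f}")
```

With noise twice as large as the real spread in quality, the selected group still comes out better than average, so the ranking is useful in aggregate. At the same time, a sizable share of the individuals labeled as top performers are below average in truth, which is why the same measurement is rough on individuals.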

I think the bigger issues have more to do with the content of the value added measurements rather than their precision. There’s nothing mysterious about what teacher value added measures. It’s very explicitly the teacher-level contribution to year-on-year improvement in student standardized test scores. Any particular measurement might contain noise and bias, but if you could get rid of those, there are still some drawbacks to the metric.

- Testing typically emphasizes only math and English, maybe science, and not art, music, and a long list of other things. This is broadly counterproductive for life, but also even narrowly for learning math and English, because you need some real-world subject matter in order to have interesting problems to solve and things to write about.

- Life is a team sport. Teaching is, or should be, too. (If you doubt this, watch a few episodes of a reality show like American Chopper and ponder whether performance would be more enhanced by better algebra skills, or better cooperation, communication and project management skills. Then ponder whether building choppers is much different from larger enterprises, like the Chunnel.) We should be thinking about performance accordingly.

- A focus at the student and teacher level ignores the fact that school system-level dynamics are most likely the biggest opportunity for improvement.

- A focus on single-subject year-on-year improvements means that teachers are driven to make decisions with a 100% time discount rate, and with a similar total disregard for the needs of other teachers’ classes.**

- Putting teachers in a measurement-obsessed command-and-control environment is surely not the best way to attract high-quality teachers.

- It’s hard to see how putting every student through the same material at the same pace can be optimal.

- It doesn’t make sense to put too much weight on standardized test scores, when the intersection between those and more general thinking/living skills is not well understood.

If no teachers are ever let go for poor performance, that probably signals a problem. In fact, it’s likely a bigger problem if teacher performance measurement (generally, not just VAM) is noisy, because bad teachers can get tenure by luck. If VAM helps with the winnowing process, that might be a useful function.

But it seems to me that the power of value added modeling is being wasted by this musical chairs*** mentality. The real challenge in teaching is not to decrease the stock of bad teachers. It’s to increase the stock of good ones, by attracting new ones, retaining the ones we have, and helping all of them learn to improve. Of course, that might require something more scarce than seats in musical chairs – money.

* A friend and school board member in semi-rural California was an unexpected fan of No Child Left Behind testing requirements, because objective measurements were the only thing that finally forced her district to admit that, well, they kind of sucked.

** A friend’s son, a math teacher, proposed to take a few days out of the normal curriculum to wrap up some loose ends from prior years. He thought this would help students cement their understanding of foundational topics that they’d imperfectly mastered. Management answered categorically that there could be no departures from the current-year material, which was needed to cover standardized test requirements. He defied them and did it, but only because he knew that it would take the district a year to fire him, and he was quitting anyway.

*** Musical chairs has to be one of the worst games you could possibly teach to children. We played it fairly regularly in elementary school.

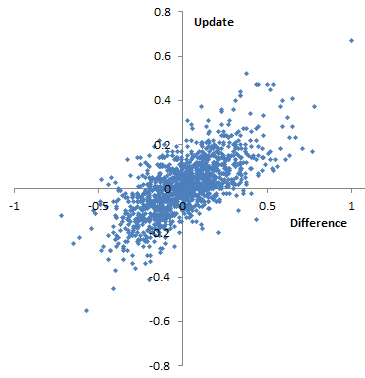

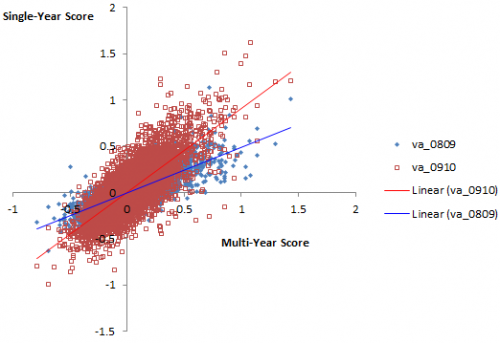

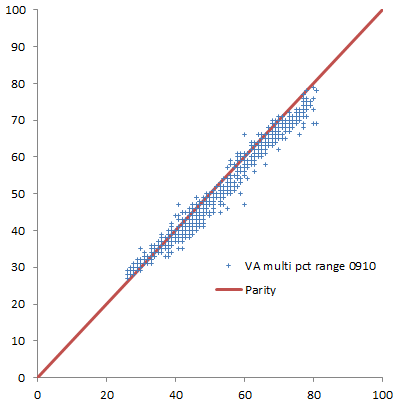

Plotting single-year scores for 08-09 and 09-10 against the 09-10 multi-year score, it appears that the multi-year score is much better correlated with 09-10, which would seem to indicate that 09-10 has greater leverage on the outcome. Again, this is 4th grade English, but the pattern generalizes.
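The leverage argument can be checked on synthetic data. The sketch below is an illustration under my own assumptions, not a reconstruction of the actual multi-year calculation, which I don't know: each single-year score is a stable teacher effect plus independent noise, and the multi-year score is a weighted average with a hypothetical `weight_recent` on the later year. If the later year gets more weight, the multi-year score correlates more strongly with it; with equal weights the two correlations come out about the same.

```python
import math
import random


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


def synthetic_scores(n=5000, weight_recent=0.8, seed=1):
    """Generate two single-year scores and a weighted multi-year score.

    Assumptions (illustrative only): a stable teacher effect ~ N(0, 1), plus
    independent N(0, 1) noise each year; the multi-year score is a simple
    weighted average with weight_recent on the later year.
    """
    rng = random.Random(seed)
    skill = [rng.gauss(0.0, 1.0) for _ in range(n)]
    year_0809 = [s + rng.gauss(0.0, 1.0) for s in skill]
    year_0910 = [s + rng.gauss(0.0, 1.0) for s in skill]
    multi_year = [
        weight_recent * later + (1.0 - weight_recent) * earlier
        for earlier, later in zip(year_0809, year_0910)
    ]
    return year_0809, year_0910, multi_year


if __name__ == "__main__":
    y0809, y0910, multi = synthetic_scores()
    print(f"corr(multi-year, 08-09) = {pearson(multi, y0809):.2f}")
    print(f"corr(multi-year, 09-10) = {pearson(multi, y0910):.2f}")
```

Running the same comparison on the released data, with the real single-year and multi-year columns in place of the synthetic lists, would give the correlations behind the plots.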

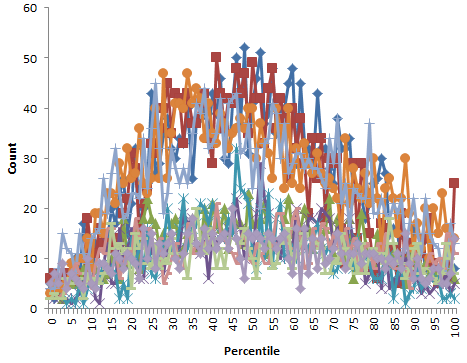

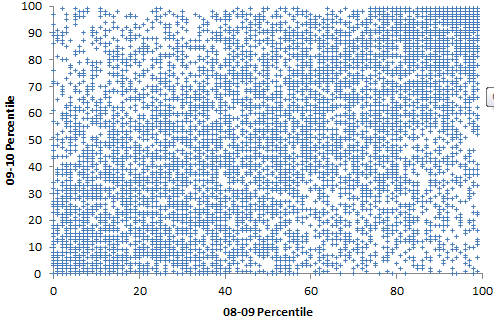

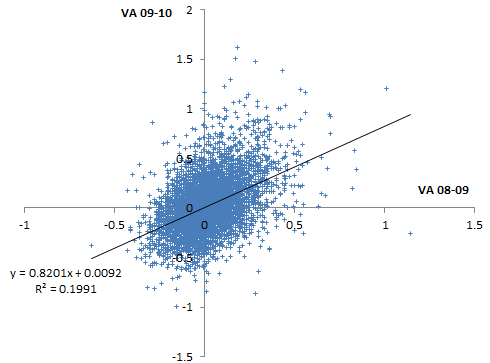

Percentiles are actually not the greatest measure here, because they throw away a lot of information about the distribution. Also, the points are integers and therefore overlap. Here are raw z-scores:

Some things to note here: