Education is a mess, and well-motivated policy changes are making it worse.

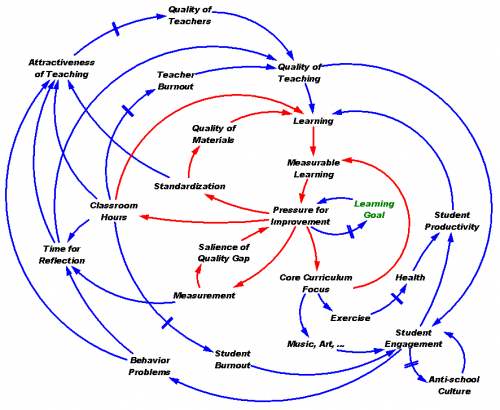

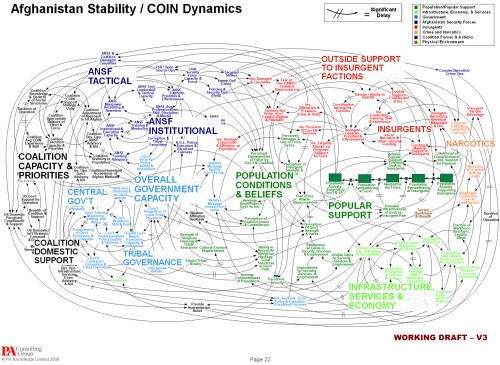

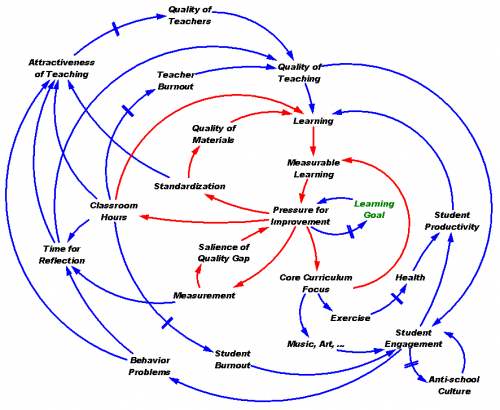

I was just reading this and this, which got the juices flowing, so my wife and I brainstormed this picture:


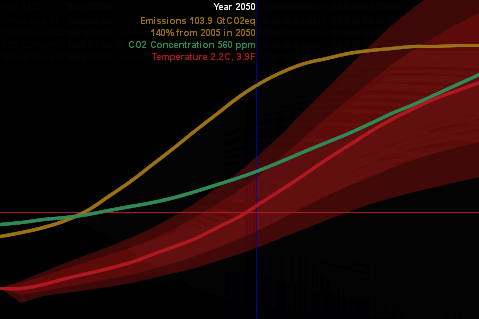

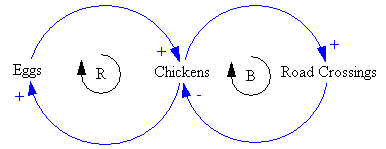

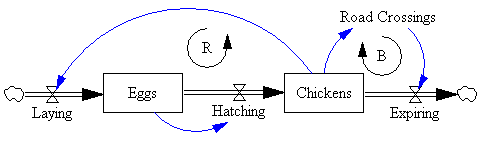

Yep, it’s spaghetti, like a lot of causal brainstorming efforts. The underlying problem space is very messy and hard to articulate quickly, but I think the essence is simple. Educational outcomes are substandard, creating pressure to improve. In at least some areas, outcomes slipped a lot because the response to that pressure was to erode learning goals rather than to improve (the blue loop through the green goal). One benefit of No Child Left Behind testing is that it offsets that loop by making actual performance salient and restoring the pressure to improve. Other intuitive responses (the red loops) also have some benefit: increasing school hours provides more time for learning; standardization yields economies of scale in materials and may improve the teaching of less-skilled teachers; and a core curriculum focus aligns learning with measured goals.

The problem is that these measures have devastating side effects, especially in the long run. Measurement obsession eats up time for reflection and learning. Core curriculum focus cuts out art and exercise, and the resulting drop in student engagement and health diminishes learning productivity. Low engagement means more sit-down-and-shut-up discipline, which eats up teacher time. Increased hours lead to burnout of both students and teachers. Discipline, long hours, and standardization all make teaching unattractive, and degrading the attractiveness of teaching makes it hard to attract quality teachers. Students aren’t mindless blank slates; they know when they’re being fed rubbish and check out. When a bad situation persists, an anti-intellectual culture of resistance to education evolves.

The nest of reinforcing feedbacks within education meshes with one in broader society. Poor education diminishes future economic opportunity, and thus the money and knowledge available to provide future schooling. Economic distress drives crime, and prison budgets eat up resources that could otherwise go to schools. Dysfunction reinforces the perception that government is incompetent, leading to reduced willingness to fund schools, which ensures future dysfunction. The flight of the rich and smart to private schools compounds this.

I’m far from having all the answers here, but it seems that standard system dynamics (SD) advice on the counterintuitive behavior of social systems applies. First, any single policy will fail, because it is defeated by other feedbacks in the system. Perhaps that’s why technology-led efforts haven’t lived up to expectations; high tech by itself doesn’t help if teachers have no time to reflect on and refine its use. Therefore intervention has to be multifaceted and targeted to activate key loops. Second, things get worse before they get better. Making progress requires more resources, or a redirection of resources away from things that produce the short-term measured benefits that people are watching.

I think there are reasons to be optimistic. All of the reinforcing feedback loops that currently act as vicious cycles can run the other way, if we can just get over the hump of the various delays and irreversibilities to start the process. There’s enormous slack in the system, in a variety of forms: time wasted on discipline and memorization, burned-out teachers who could be re-energized, and students with an unmet thirst for knowledge.
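The idea that a single reinforcing structure can run either as a vicious or a virtuous cycle, depending on which side of a hump the system starts from, can be sketched with a toy one-stock model. This is a hypothetical illustration, not a model derived from the diagram: `q` is an assumed 0-to-1 index of system health (say, teaching quality), and the cubic growth term and the `threshold` value are assumptions chosen only to produce a tipping point.

```python
def simulate_reinforcing_loop(q0, threshold=0.5, rate=1.0, dt=0.1, steps=200):
    """Euler-integrate the toy model dq/dt = rate * q * (1 - q) * (q - threshold).

    q is a hypothetical 0-1 index of system health. Below `threshold` the
    reinforcing loop acts as a vicious cycle, pulling q toward 0; above it,
    the same loop acts as a virtuous cycle, pushing q toward 1.
    """
    q = q0
    trajectory = [q]
    for _ in range(steps):
        # Net change is proportional to current health, remaining headroom,
        # and the distance from the assumed tipping point.
        q += dt * rate * q * (1.0 - q) * (q - threshold)
        trajectory.append(q)
    return trajectory


# Start just below and just above the assumed threshold; run 20 time units.
for start in (0.4, 0.6):
    final = simulate_reinforcing_loop(start)[-1]
    print(f"start={start:.1f} -> final={final:.3f}")
```

Starting just below the threshold, the loop drives `q` toward 0; starting just above it, the identical structure drives `q` toward 1. The threshold stands in, very loosely, for the hump of delays and irreversibilities that has to be overcome before the loops reverse direction.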

The key question is how to get started. I suspect that the conservative approach of privatization half-works: it successfully exploits reinforcing feedback to provide high quality for those who opt out of the public system. However, I don’t want to live in a two-class society, and there’s evidence that high inequality slows economic growth. Instead, my half-baked personal prescription (which we pursue as homeschooling parents) is to make schools more open, connecting students to real-world trades and research. Forget about standardized pathways through the curriculum, because children develop at different rates and have varied interests. Replace quantity of hours with quality, freeing teachers’ time for process improvement and for guiding self-directed learning. Suck it up, and spend the dough to hire better teachers. Recover some of that money, and avoid lengthy review after long breaks, by using schools year-round. I’m not sure how realistic all of this is as long as schools function as day care, so maybe we need some reform of work and parental attitudes to go along with it.

[Update: There are of course many good efforts that can be emulated, by people who’ve thought about this more deeply than I have. Pegasus describes some here. Two of note are the Waters Foundation and the Creative Learning Exchange. Reorganizing education around systems is a great way to improve productivity through learner-directed learning, make learning exciting and relevant to the real world, and convey skills that are crucial for society to confront its biggest problems.]