I’ve asserted here that the Global Warming Challenge is a sucker bet. I still think that’s true, but I may be wrong about the identity of the sucker. Here are the terms of the bet as of this writing:

The general objective of the challenge is to promote the proper use of science in formulating public policy. This involves such things as full disclosure of forecasting methods and data, and the proper testing of alternative methods. A specific objective is to develop useful methods to forecast global temperatures. Hopefully other competitors would join to show the value of their forecasting methods. These are objectives that we share and they can be achieved no matter who wins the challenge.

Al Gore is invited to select any currently available fully disclosed climate model to produce the forecasts (without human adjustments to the model’s forecasts). Scott Armstrong’s forecasts will be based on the naive (no-change) model; that is, for each of the ten years of the challenge, he will use the most recent year’s average temperature at each station as the forecast for each of the years in the future. The naïve model is a commonly used benchmark in assessing forecasting methods and it is a strong competitor when uncertainty is high or when improper forecasting methods have been used.

Specifically, the challenge will involve making forecasts for ten weather stations that are reliable and geographically dispersed. An independent panel composed of experts agreeable to both parties will designate the weather stations. Data from these sites will be listed on a public web site along with daily temperature readings and, when available, error scores for each contestant.

Starting at the beginning of 2008, one-year ahead forecasts then two-year ahead forecasts, and so on up to ten-year-ahead forecasts of annual ‘mean temperature’ will be made annually for each weather station for each of the next ten years. Forecasts must be submitted by the end of the first working day in January. Each calendar year would end on December 31.

The criteria for accuracy would be the average absolute forecast error at each weather station. Averages across stations would be made for each forecast horizon (e.g., for a six-year ahead forecast). Finally, simple unweighted averages will be made of the forecast errors across all forecast horizons. For example, the average across the two-year ahead forecast errors would receive the same weight as that across the nine-year-ahead forecast errors. This unweighted average would be used as the criterion for determining the winner.
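As I read those terms, the no-change forecast and the scoring rule can be sketched as follows. This is only my interpretation of the wording, not anything published by the challenge; the function names, the nested-dictionary layout, and the toy numbers are all hypothetical.

```python
from statistics import mean


def naive_forecasts(last_obs, horizons=10):
    """No-change forecast: repeat the latest annual mean for every horizon."""
    return [last_obs] * horizons


def challenge_score(abs_errors):
    """Score under my reading of the terms (an assumption, not an official rule).

    abs_errors[h][station] is a list of absolute forecast errors made at
    horizon h (in years) for that station.
    """
    per_horizon = []
    for h in sorted(abs_errors):
        # Average absolute error at each station, then across stations.
        station_means = [mean(errs) for errs in abs_errors[h].values()]
        per_horizon.append(mean(station_means))
    # Simple unweighted average across horizons decides the winner.
    return mean(per_horizon)


# Toy, made-up errors for two stations and two horizons.
toy = {
    1: {"A": [0.2, 0.4], "B": [0.1, 0.3]},
    2: {"A": [0.6], "B": [0.2]},
}
print(round(challenge_score(toy), 3))  # 0.325
```

Lower is better: each contestant gets one such score, and the smaller one wins.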

I previously noted several problems with the bet:

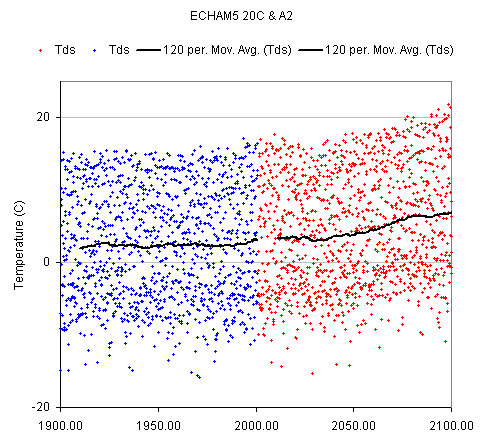

The Global Warming Challenge is indeed a sucker bet, with terms slanted to favor the naive forecast. It focuses on temperature at just 10 specific stations over only 10 years, thus exploiting the facts that (a) GCMs do not have local resolution (their grids are typically several degrees); (b) GCMs, unlike weather models, do not have infrastructure for real-time updating of forcings and initial conditions; (c) ten stations is a pathetically small sample, and thus a low signal-to-noise ratio is expected under any circumstances; and (d) the decadal trend in global temperature is small compared to natural variability.

It’s actually worse than I initially thought. I assumed that Armstrong would determine the absolute error of the average across the 10 stations, rather than the average of the individual absolute errors. By the triangle inequality, the latter is always greater than or equal to the former, so this approach further worsens the signal-to-noise ratio and enhances the advantage of the naive forecast. In effect, the bet is 10 replications of a single-station test. But wait, there’s still more: the procedure involves simple, unweighted averages of errors across all horizons, yet there will be only one 10-year forecast, two 9-year forecasts, and so on down to ten 1-year forecasts. If the temperature and forecast are stationary, the errors at various horizons have the same magnitude, and the average horizon, weighted by the number of forecasts at each horizon (55 per station), is only four years. Even with other plausible assumptions, the average horizon of the experiment is much less than 10 years, further reducing the value of an accurate long-term climate model.
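Both points are easy to check with a few lines of arithmetic. The sketch below counts the forecasts available at each horizon over a ten-year challenge, then compares the two ways of aggregating station errors. The station errors are synthetic draws (a hypothetical 0.1 degree signal buried in 0.5 degree noise), not real data, and the helper names are mine.

```python
import random

YEARS = 10

# Forecasts issued each January for every remaining year:
# ten 1-year forecasts, nine 2-year forecasts, ..., one 10-year forecast.
counts = {h: YEARS - h + 1 for h in range(1, YEARS + 1)}
total = sum(counts.values())
weighted_avg_horizon = sum(h * n for h, n in counts.items()) / total
print(total, weighted_avg_horizon)  # 55 4.0


def abs_error_of_mean(errors):
    """Absolute error of the station-average forecast."""
    return abs(sum(errors) / len(errors))


def mean_of_abs_errors(errors):
    """Average of the individual station absolute errors."""
    return sum(abs(e) for e in errors) / len(errors)


# Extreme case: offsetting errors vanish in the average but not in the
# average of absolute values.
print(abs_error_of_mean([0.5, -0.5]), mean_of_abs_errors([0.5, -0.5]))  # 0.0 0.5

# Synthetic errors for 10 stations (made-up signal and noise levels).
random.seed(0)
station_errors = [random.gauss(0.1, 0.5) for _ in range(10)]
assert mean_of_abs_errors(station_errors) >= abs_error_of_mean(station_errors)
```

Averaging the stations first lets independent local noise cancel; taking absolute values first locks that noise into the score, which is why the second measure can never be the smaller one.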

However, there is a silver lining. I have determined, by playing with the GHCN data, that Armstrong’s procedure can be reliably beaten by a simple extension of a physical climate model published a number of years ago. I’m busy and I have a high discount rate, so I will happily sell this procedure for the best reasonable offer (remember, you stand to make $10,000).

Update: I’m serious about this, by the way. It can be beaten.