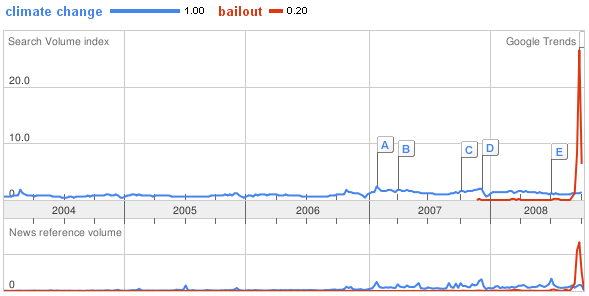

One reason long-term environmental issues like climate change are so hard to solve is that there’s always something else to do that seems more immediately pressing. War? Energy crisis? Financial meltdown? Those grab headlines, leaving the long-term problems for the slow news days.

In this case, I don’t think slow and steady wins the race. The financial sector gets a trillion dollars in one year, and climate policy gets the Copenhagen Consensus.

Ironically, inattention to long-term problems is partly responsible for the creation of crises. It was pretty obvious a few years in advance that New Orleans’ levees were inadequate, that housing prices were bubbly, that mortgage securities and derivatives weren’t transparent, that the US had no meaningful energy policy, etc. But as long as those pots hadn’t boiled over, attention was easily drawn to 9/11, Iraq, and other more immediate catastrophes. As a result, we get Katrina, bailouts, and energy insecurity.

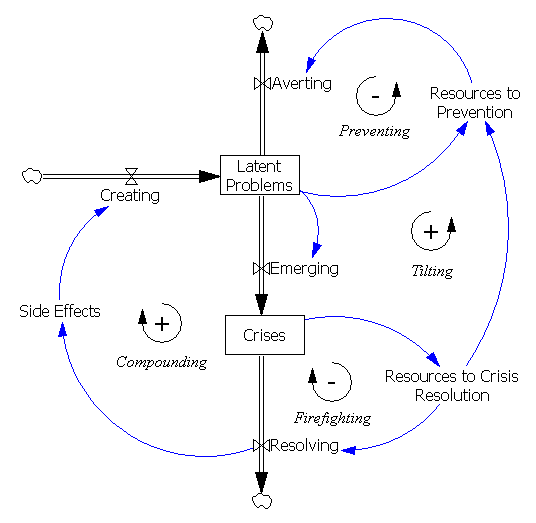

Laura Black and Nelson Repenning discuss one aspect of this problem in *Why Firefighting Is Never Enough: Preserving High-Quality Product Development*. They identify a “tilting” effect, a dynamic trap by which short-term pressures draw an organization into a self-reinforcing firefighting mode from which it is difficult to escape. As is often the case in complex systems, policies that appear to make things better in the short run can make things worse in the long run. Policies that really make things better over the long haul are hard to implement, because they require some short-term sacrifice, and present stakeholders are more vocal than future ones.

At the risk of carrying the project analogy too far, I’ve paraphrased their Figure 12 to reflect global problems, as follows:

In a perfect world, the balancing processes of preventing and firefighting extinguish most problems. As in product development, though, resource constraints create a problem. An increase in crises requires more resources for resolution, stealing resources from prevention. All else equal, fewer long-term problems get resolved, guaranteeing more crises in the future (the self-reinforcing tilting effect). To make matters worse, ill-conceived short-term solutions create more latent problems, another self-reinforcing compounding effect.
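To make the tilting effect concrete, here is a toy stock-and-flow simulation. It is a sketch under loudly stated assumptions, not Black and Repenning’s model: the two stocks (latent problems and crises), the rule that firefighting gets first claim on a fixed resource budget, the `side_effect` coefficient standing in for the compounding loop, and every parameter value are made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class Params:
    # All values are illustrative assumptions, not calibrated to anything real.
    resources: float = 10.0     # effort available per period
    new_problems: float = 5.0   # latent problems created per period
    erupt_frac: float = 0.2     # share of latent problems that become crises each period
    cost_crisis: float = 3.0    # effort to resolve one crisis
    cost_prevent: float = 1.0   # effort to resolve one latent problem early
    side_effect: float = 0.3    # latent problems seeded by each hasty crisis fix


def simulate(p: Params, steps: int = 100, shock_step: int = 20, shock: float = 0.0):
    """Return a list of (latent, crises) stock values, one pair per period."""
    latent, crises = 0.0, 0.0
    history = []
    for t in range(steps):
        if t == shock_step:
            crises += shock  # a sudden burst of crises (war, meltdown, ...)
        # Firefighting gets first claim on resources (the assumed priority rule).
        fought = min(crises, p.resources / p.cost_crisis)
        leftover = max(0.0, p.resources - fought * p.cost_crisis)
        # Some latent problems erupt; prevention handles what it can afford of the rest.
        erupted = p.erupt_frac * latent
        prevented = min(latent - erupted, leftover / p.cost_prevent)
        # Compounding loop: each crisis fixed in haste seeds new latent problems.
        latent += p.new_problems - erupted - prevented + p.side_effect * fought
        crises += erupted - fought
        history.append((latent, crises))
    return history


if __name__ == "__main__":
    for label, size in (("no shock", 0.0), ("small shock", 2.0), ("large shock", 20.0)):
        latent, crises = simulate(Params(), shock=size)[-1]
        print(f"{label:>11}: latent={latent:6.1f}, crises={crises:6.1f}")
```

With these made-up numbers, the undisturbed run and a small burst of crises both settle at about 1.06 crises outstanding. A large burst, however, consumes the entire budget on firefighting, prevention stops, latent problems pile up, and crises grow without limit. The same structure and the same priority rule produce two very different fates depending on how hard the system is pushed, which is the tilting effect in miniature.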

In addition to worse-before-better tradeoffs, Black and Repenning suggest another interesting reason why organizations persistently fall into the firefighting trap:

> There is an additional reason, so far not discussed, why organizations so often fail to do up-front work [paraphrased as the preventing loop above]: They don’t know how. Organizations that do not invest in up-front activities are unlikely ever to develop significant competence in executing them.

I think that’s an apt characterization of society as a whole. Our ignorance of long-term problem solving is profound. Recently, I saw the problem posed as, “why don’t we do what we know we have to do, when we know why we don’t do what we know we have to do?” We operate on the same landscape as firms developing products, with a basin of attraction around firefighting. Our chances of falling into that basin are compounded by a greater diversity of agents, goals, and paradigms; by mental models that (naively or wilfully) fail to correspond with reality; and by evolutionary pressure that tilts the table against us.

In the product development model, an attractive policy is to limit the stock of projects underway, so that early-stage development gets adequate resources and problems are caught before they spill over into crises. That limits the effect of the tilting loop. It isn’t obvious how to implement the societal analog, which would mean shedding latent problems. Still, we need to weaken the tilting loop and its companion, the compounding loop, while strengthening the preventing loop. That implies several possible strategies:
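One hypothetical way to express “protecting the preventing loop” in a toy model is to fence off a fixed share of resources for prevention, whatever the crisis load. The sketch below declares a minimal two-stock model (latent problems and crises) so that it runs on its own; the `reserve` knob, the firefighting-first priority rule, and all numbers are illustrative assumptions, and none of this says how a society would actually enforce such a reserve.

```python
from dataclasses import dataclass


@dataclass
class Params:
    # Illustrative assumptions only; nothing here is calibrated.
    resources: float = 10.0     # effort available per period
    new_problems: float = 5.0   # latent problems created per period
    erupt_frac: float = 0.2     # share of latent problems that become crises per period
    cost_crisis: float = 3.0    # effort to resolve one crisis
    cost_prevent: float = 1.0   # effort to resolve one latent problem early
    side_effect: float = 0.3    # latent problems seeded by each hasty crisis fix
    reserve: float = 0.0        # hypothetical share of resources fenced off for prevention


def simulate(p: Params, steps: int = 150, shock_step: int = 20, shock: float = 20.0):
    """Return the crisis stock after each period, with a burst of crises at shock_step."""
    latent, crises = 0.0, 0.0
    history = []
    for t in range(steps):
        if t == shock_step:
            crises += shock
        # Firefighting may spend only the non-reserved share of resources.
        fire_budget = p.resources * (1.0 - p.reserve)
        fought = min(crises, fire_budget / p.cost_crisis)
        # Prevention gets the reserve plus anything firefighting left unspent.
        leftover = max(0.0, p.resources - fought * p.cost_crisis)
        erupted = p.erupt_frac * latent
        prevented = min(latent - erupted, leftover / p.cost_prevent)
        latent += p.new_problems - erupted - prevented + p.side_effect * fought
        crises += erupted - fought
        history.append(crises)
    return history


if __name__ == "__main__":
    for share in (0.0, 0.4):
        run = simulate(Params(reserve=share))
        print(f"reserve={share:.1f}: crises just after shock={run[20]:.1f}, at end={run[-1]:.1f}")
```

In this toy run the reserve is a worse-before-better policy: just after the shock, more crises are left burning than without it, because firefighting has less to spend. But prevention keeps draining the stock of latent problems, so the crisis backlog eventually clears, while the unreserved system tilts into permanent firefighting.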

- Learn to predict the consequences of actions (and thus to choose actions that mitigate side-effects). Yay, we need more models!

- Focus more on identifying and resolving latent problems before they become crises. Again, this requires prediction of the consequences of actions. But it also takes a culture change: we need to recognize the building safety inspector who prevents a catastrophe as much of a hero as the firefighter who pulls a child out of the collapsing apartment.

- Perhaps sometimes, in crisis, we should just “let ’er burn,” to avoid creating more new problems than we solve. More importantly, we need to stop discounting the cost of creating latent problems in any context.