Tsar Nicholas I reportedly coined the term “Sick Man of Europe” to refer to the declining Ottoman Empire. Ironically, now it’s Russia that seems sick.

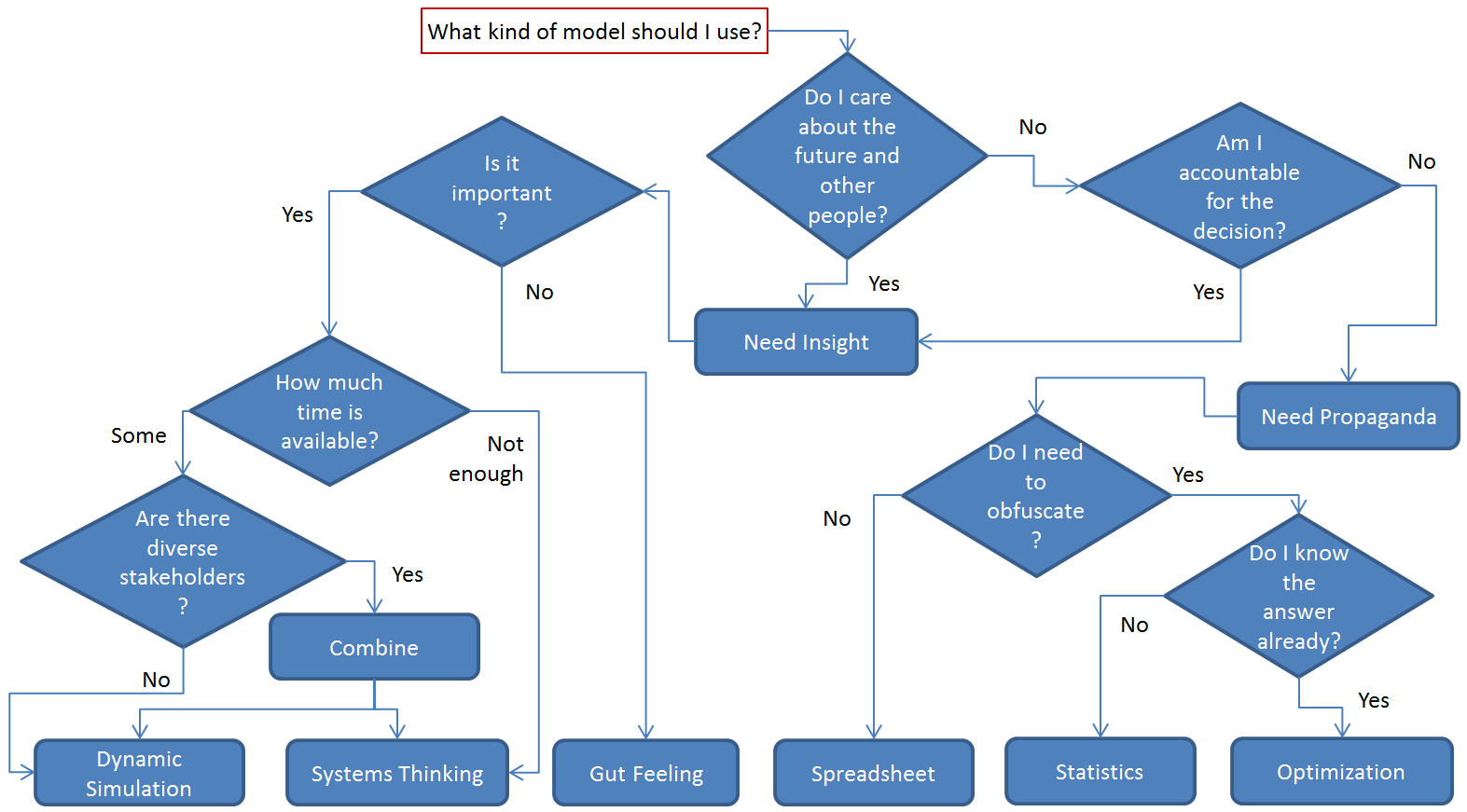

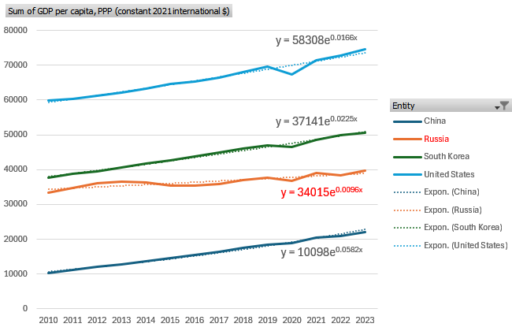

The charts above show GDP per capita (constant PPP, World Bank). Here’s how this works. GDP growth comes basically from two things: capital accumulation and technology. There are diminishing returns to capital, so in the long run technology is essentially the whole game. Countries that innovate grow, and those that don’t stagnate.
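As a rough illustration of the diminishing-returns argument, here is a minimal Solow-style sketch. The model form (Cobb-Douglas output with labor-augmenting technology), the function names `simulate_solow` and `growth_rate`, and every parameter value are assumptions chosen for illustration. None of it is fitted to the World Bank data. With technology frozen, capital accumulation produces growth that fades toward zero. With technology growing at 2%/yr, output growth converges to about 2%/yr.

```python
import math

def simulate_solow(years, s=0.25, delta=0.05, alpha=0.33, g=0.0, k0=1.0, a0=1.0, dt=0.1):
    """Euler-integrate a hypothetical one-sector Solow model.

    Output is Y = K**alpha * (A*L)**(1 - alpha) with labor L fixed at 1,
    so Y is also output per worker. Capital accumulates as dK/dt = s*Y - delta*K,
    and technology A grows at rate g. Returns output sampled once per year.
    """
    K, A, L = k0, a0, 1.0
    steps_per_year = round(1 / dt)
    annual_output = []
    for year in range(years + 1):
        annual_output.append(K**alpha * (A * L) ** (1 - alpha))
        for _ in range(steps_per_year):
            Y = K**alpha * (A * L) ** (1 - alpha)
            K += dt * (s * Y - delta * K)  # investment minus depreciation
            A += dt * g * A                # exogenous technical progress
    return annual_output

def growth_rate(series, year):
    """Continuous (log) growth rate between year - 1 and year."""
    return math.log(series[year] / series[year - 1])

no_tech = simulate_solow(300, g=0.0)
with_tech = simulate_solow(300, g=0.02)
for label, y in [("no technology growth", no_tech), ("2%/yr technology", with_tech)]:
    print(f"{label}: year 1 {growth_rate(y, 1):.2%}, year 300 {growth_rate(y, 300):.2%}")
```

In the no-technology run, early growth comes entirely from building capital. It dies out as each extra unit of capital adds less output than the last.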


However, when you’re the technology leader, innovation is hard. There’s a tension between making big technical leaps and falling off a cliff because you picked the wrong one. Since the Industrial Revolution, this has created a glass ceiling on growth of 1.5-2%/year for the leading countries. That rate has prevailed despite huge waves of technology, from railroads to microchips, and under both low and high tax rates. If you’re below the ceiling, you can grow faster, because you can imitate rather than innovate, and that entails much less risk of failure.
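The catch-up logic can be sketched with a simple convergence rule. This rule is an assumption of the example, not something the post specifies: a follower grows at the frontier rate plus an imitation bonus proportional to its log gap from the leader, so the bonus shrinks as the gap closes. The 1.7%/yr frontier rate echoes the US growth rate cited in the post. The convergence speed `lam` and the function names are hypothetical.

```python
import math

def follower_growth(relative_income, frontier_growth, lam):
    """Hypothetical rule: frontier growth plus a bonus that scales with the log gap."""
    return frontier_growth + lam * math.log(1 / relative_income)

def catch_up_path(relative_income, years, frontier_growth=0.017, lam=0.02):
    """Track a follower's income relative to the leader (1.0 = parity).

    Returns (relative_income, follower_growth) for year 0 through `years`.
    """
    path = [(relative_income, follower_growth(relative_income, frontier_growth, lam))]
    for _ in range(years):
        growth = path[-1][1]
        # The leader grows at frontier_growth, so the ratio moves by the difference.
        relative_income *= math.exp(growth - frontier_growth)
        path.append((relative_income, follower_growth(relative_income, frontier_growth, lam)))
    return path

for start in (0.2, 0.6):
    path = catch_up_path(start, 50)
    (r0, g0), (r50, g50) = path[0], path[50]
    print(f"start at {r0:.0%} of leader: growth {g0:.2%} -> {g50:.2%} by year 50 ({r50:.0%})")
```

In this rule the poorer starting point grows faster, and every follower's growth drifts down toward the frontier rate without ever exceeding parity. That is the "ceiling" behavior in miniature.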

If you’re below the glass ceiling, you can also fail to grow, because growth is essentially a function of MIN(rule of law, functioning markets, etc.), the conditions that enable innovation or imitation. If you don’t have some basic functioning human and institutional capital, you can’t grow. Unfortunately, human rights don’t seem to be a necessary part of the equation, as long as there’s some basic economic infrastructure. On the other hand, there’s some evidence that equity matters, probably because innovation is a bottom-up evolutionary process.
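The MIN framing is a limiting-factor idea, similar to Liebig’s law of the minimum. In the toy sketch below, the 0-to-1 capability scores, the 6% maximum, and the `growth_potential` function are all made up for illustration. It shows that improving a factor that isn’t binding does nothing until the weakest one improves.

```python
def growth_potential(factors, max_growth=0.06):
    """Hypothetical limiting-factor model: attainable growth scales with the
    weakest institutional factor, each scored from 0 to 1."""
    return max_growth * min(factors.values())

country = {"rule_of_law": 0.3, "functioning_markets": 0.7, "human_capital": 0.8}
base = growth_potential(country)

better_markets = dict(country, functioning_markets=0.9)  # improve a non-binding factor
better_law = dict(country, rule_of_law=0.6)              # improve the binding factor

print(f"baseline {base:.1%}, better markets {growth_potential(better_markets):.1%}, "
      f"better rule of law {growth_potential(better_law):.1%}")
```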

In the chart above, the US is doing pretty well lately at 1.7%/yr. China has also done very well, at 5.8%, which is actually below its peak growth rates, but that is substantially due to catch-up effects. South Korea, at about 60% of US GDP/cap, grows a little faster at 2.2%. (A couple of decades back, it was growing much faster, but it has now caught up.)

So why is Russia, poorer than South Korea, doing so poorly, at only 0.96%/year? The answer clearly isn’t resource endowment, because Russia is massively rich in that sense. I think the answer is rampant corruption and endless war, where the powerful steal the resources that could otherwise be used for innovation, and the state squanders the rest on conflict.

At current rates, China could surpass Russia in GDP per capita in just 12 years (though China’s growth will likely slow). At that point, with roughly ten times Russia’s population, China would be a vastly larger economy. So why are we throwing our lot in with a decrepit, underperforming nation that finds it easier to steal crypto from Americans than to borrow ideas? I think the US was already at risk from overconcentration of firms and equity effects that destroy education and community, but we’re now turning down a road that leads to a moribund oligarchy.
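The 12-year figure is compound-growth arithmetic. Assuming both growth rates stay constant, the time for the faster grower to close a gap is ln(gap) / ln((1 + g_fast) / (1 + g_slow)), where gap is the slower economy’s level divided by the faster one’s. The sketch below runs that calculation backwards from the quoted rates to show what starting gap a 12-year crossover implies. It uses no GDP levels from the charts, and the function names are hypothetical.

```python
import math

def years_to_overtake(gap, g_fast, g_slow):
    """Years until the faster grower catches up, where gap = slow_level / fast_level
    and both economies compound at constant annual rates."""
    return math.log(gap) / math.log((1 + g_fast) / (1 + g_slow))

def implied_gap(years, g_fast, g_slow):
    """Inverse of years_to_overtake: the starting ratio that closes in `years`."""
    return ((1 + g_fast) / (1 + g_slow)) ** years

china, russia = 0.058, 0.0096  # annual growth rates quoted in the post
gap = implied_gap(12, china, russia)
print(f"a 12-year crossover implies Russia starts at about {gap:.2f}x China's GDP per capita")
print(f"check: {years_to_overtake(gap, china, russia):.1f} years")
```

Because the gap enters through a logarithm, even a sizable head start buys the slower grower only a modest number of years.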

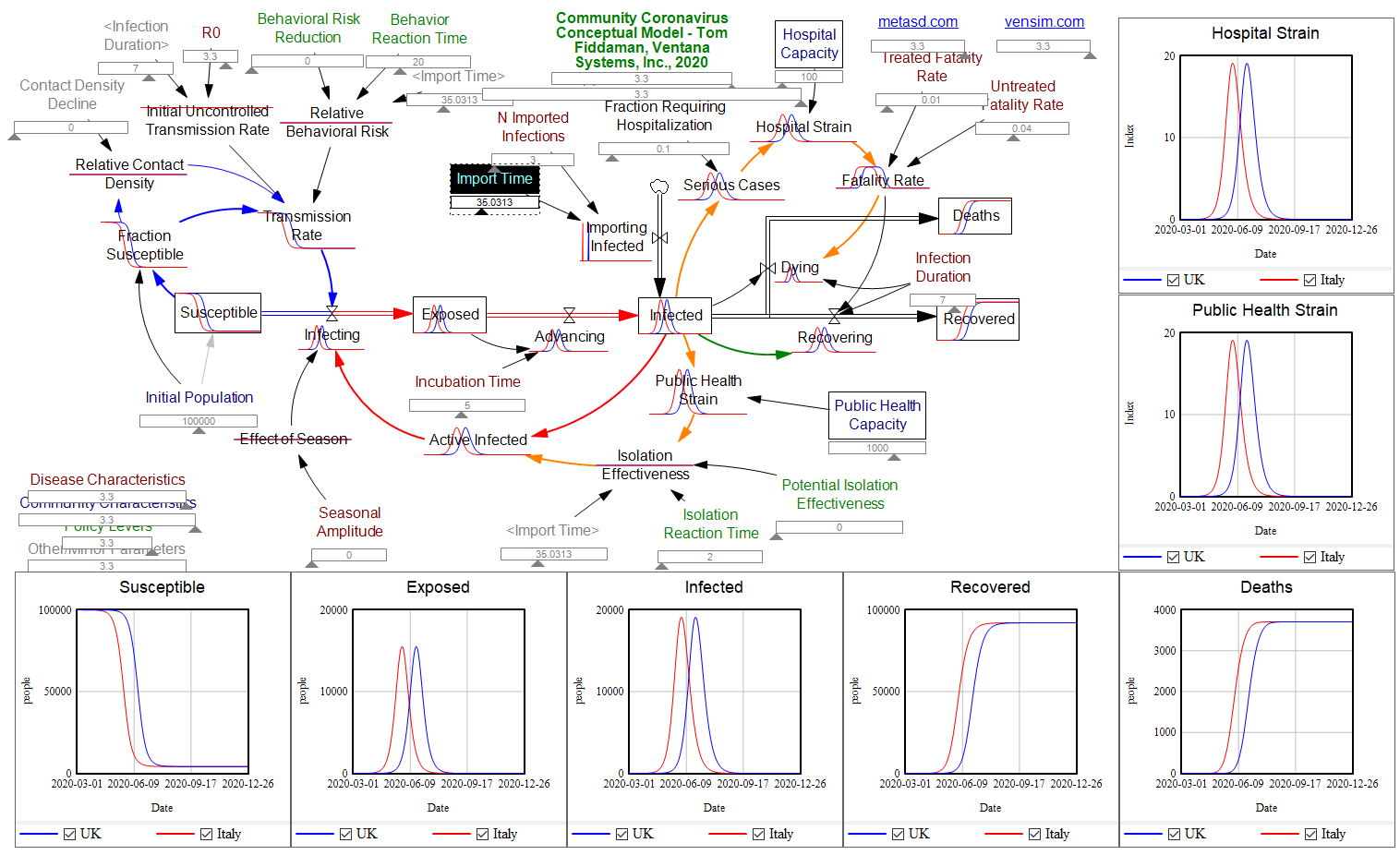

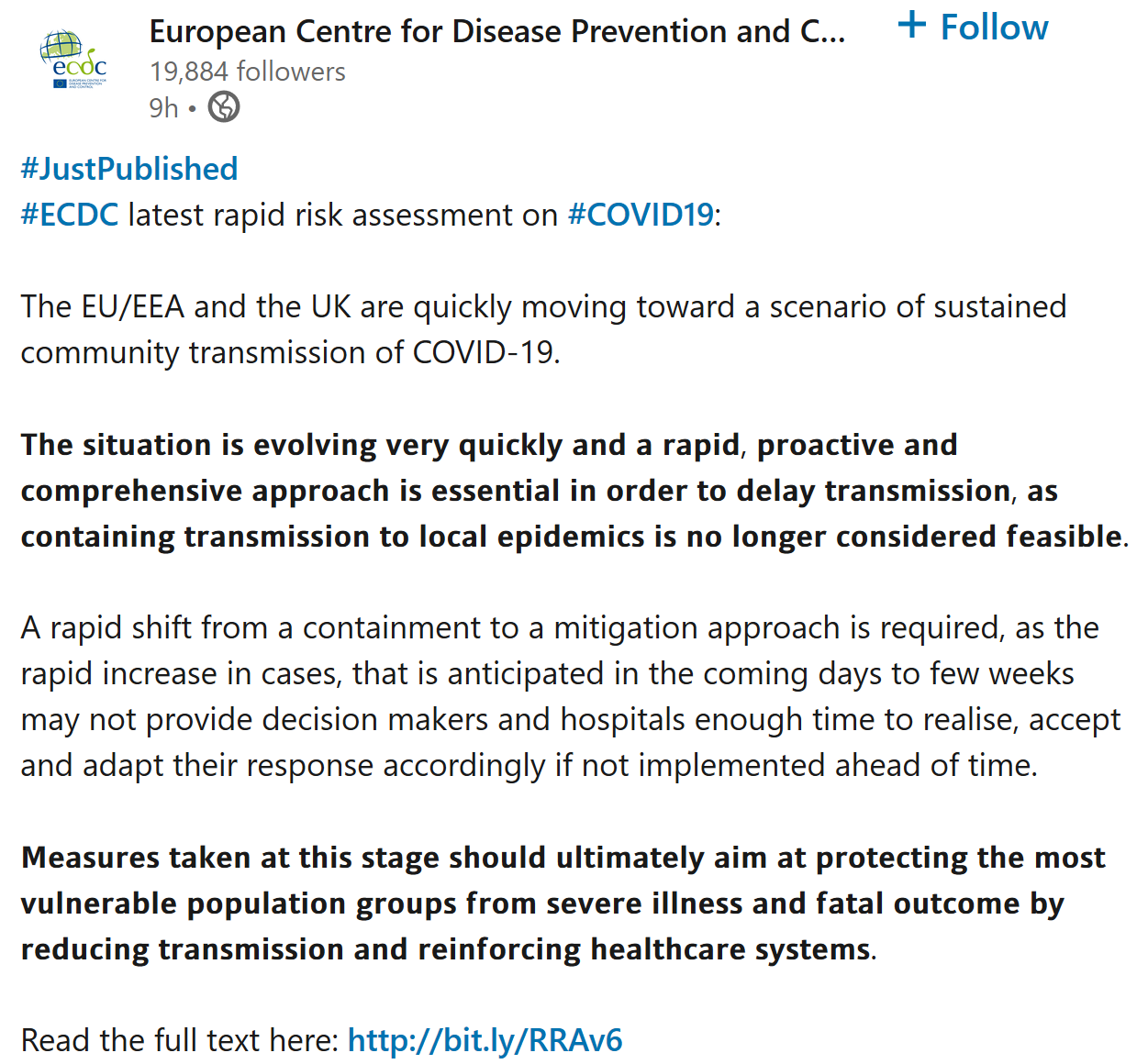

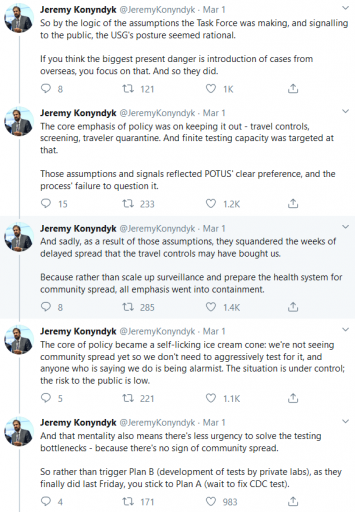

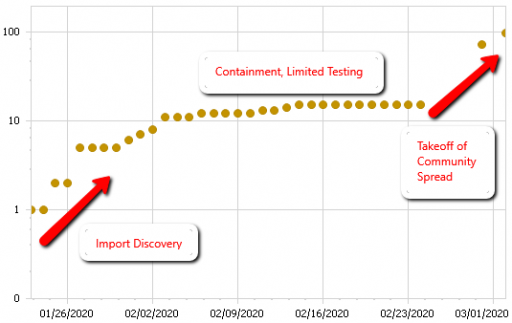

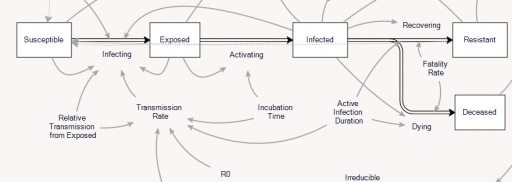

The problem is that containment alone doesn’t work, because the structure of the system defeats it. You can’t intercept every infected person, because some are exposed but not yet symptomatic, or have mild cases. As soon as a few of these people slip into the wild, the positive loops that drive infection operate as they always have. Once the virus is in the wild, it’s essential to change behavior enough to lower its reproduction below replacement.
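A minimal branching-process sketch shows why leaky containment fails. Its assumptions are illustrative rather than taken from the post: each case produces on average `r0` new cases per generation, containment removes a fixed fraction of cases before they transmit, behavior change cuts transmission by a fixed fraction, and generations are discrete. The value r0 = 2.5 and the scenario numbers are hypothetical. In this model, intercepting half of all cases only slows the positive loop. The outbreak shrinks only once the effective reproduction number drops below 1 (replacement).

```python
def expected_cases(initial_cases, r0, intercept_fraction, behavior_reduction, generations):
    """Expected cases per generation in a hypothetical branching model.

    r0: new infections per uncontained case with no behavior change.
    intercept_fraction: share of cases caught by containment before they transmit.
    behavior_reduction: fractional cut in transmission from distancing and similar measures.
    Returns the effective reproduction number and the list of expected cases.
    """
    r_eff = r0 * (1 - intercept_fraction) * (1 - behavior_reduction)
    cases = [float(initial_cases)]
    for _ in range(generations):
        cases.append(cases[-1] * r_eff)
    return r_eff, cases

scenarios = {
    "containment only": (0.5, 0.0),        # half of cases intercepted
    "containment + behavior": (0.5, 0.4),  # plus a 40% cut in transmission
}
for name, (intercept, behavior) in scenarios.items():
    r_eff, cases = expected_cases(10, 2.5, intercept, behavior, 10)
    print(f"{name}: R_eff = {r_eff:.2f}, cases after 10 generations = {cases[-1]:.1f}")
```

In this toy model, containment alone would need to catch more than 60% of cases (1 - 1/2.5) to succeed. That is hard when some cases are presymptomatic or mild.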
