The architect of Texas’ electricity market says it’s working as planned. Critics compare it to late Soviet Russia.

Yahoo – The Week

Who’s right? Both and neither.

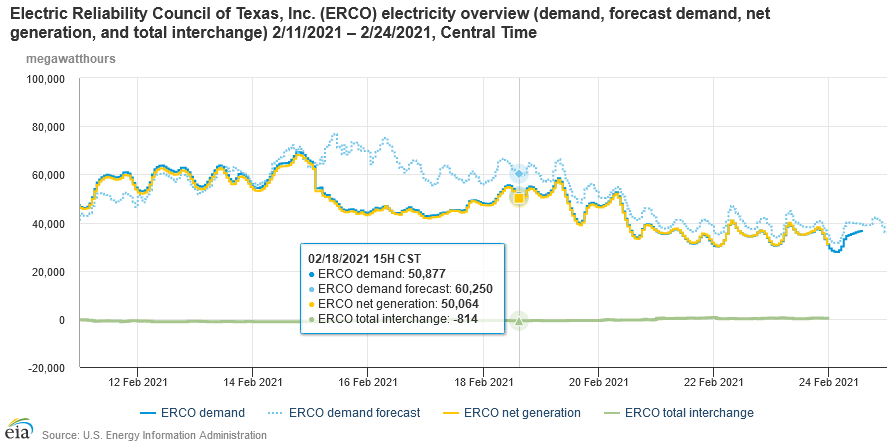

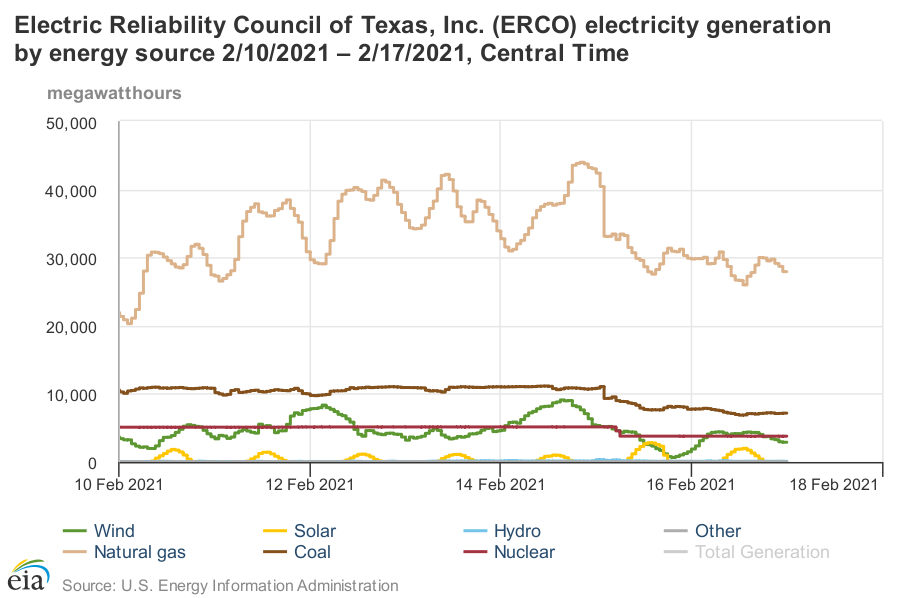

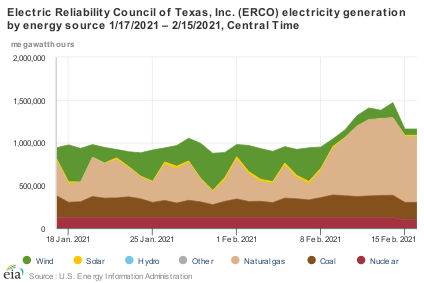

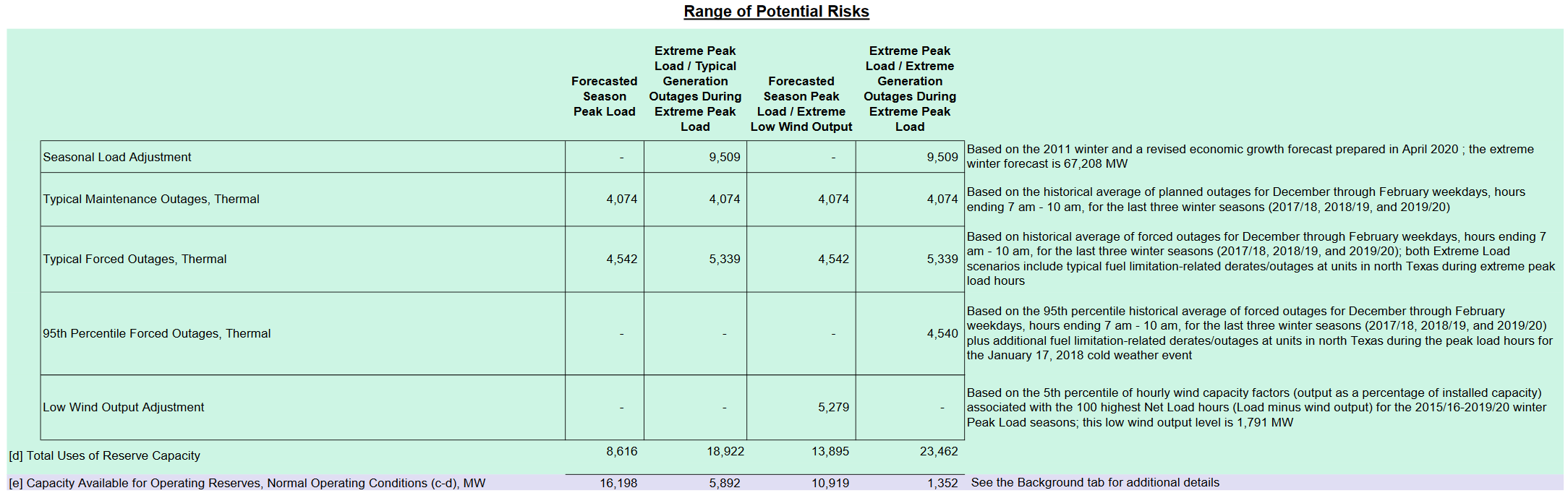

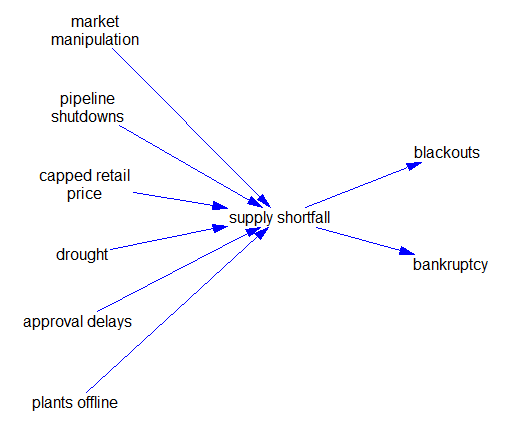

I think there’s little debate about what actually happened, though much probably remains to be discovered. The general features are known: bad weather hit, wind output was unusually low, gas plants and infrastructure failed in droves, and coal and nuclear generation also took a hit. Dependencies may have amplified the problems, for example when electrified gas infrastructure couldn’t deliver gas to power plants because of blackouts. Contingency plans were ready for low wind, but not for correlated failures of many thermal plants.

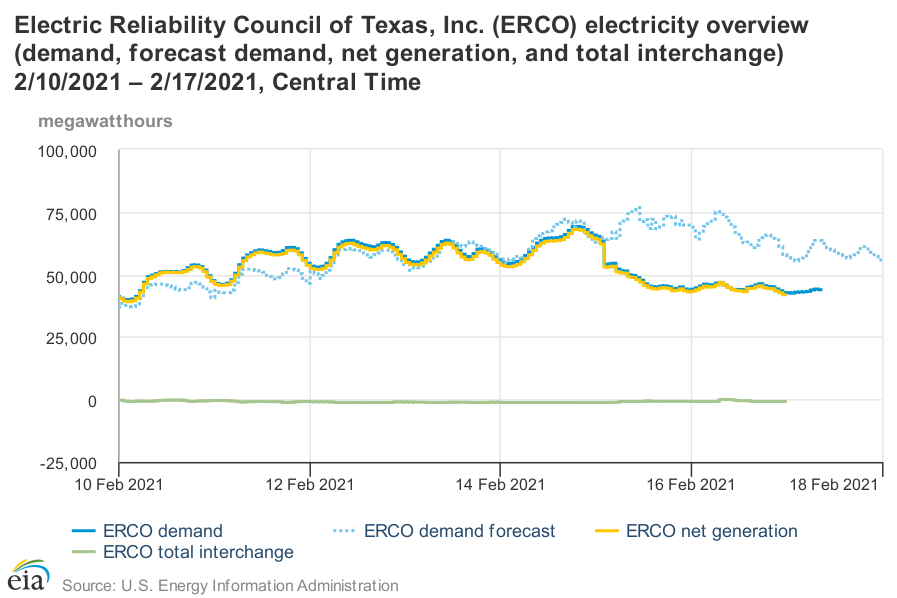

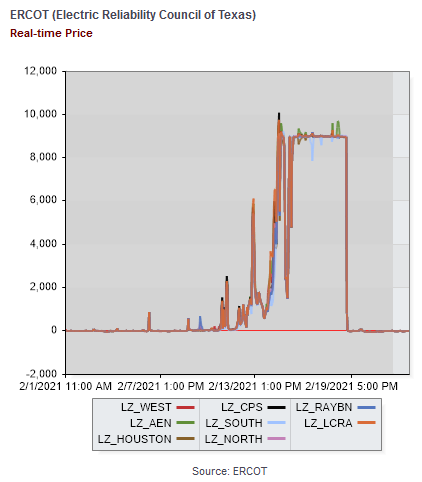

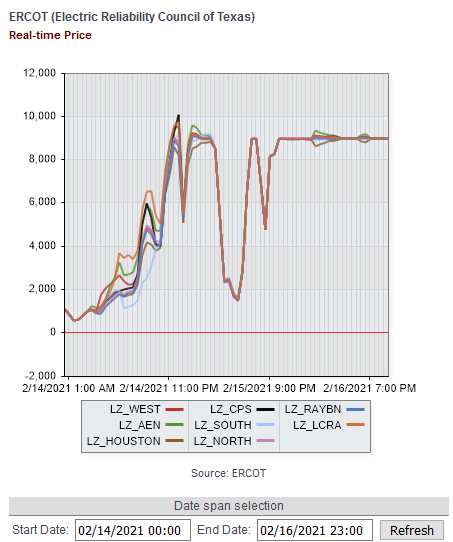

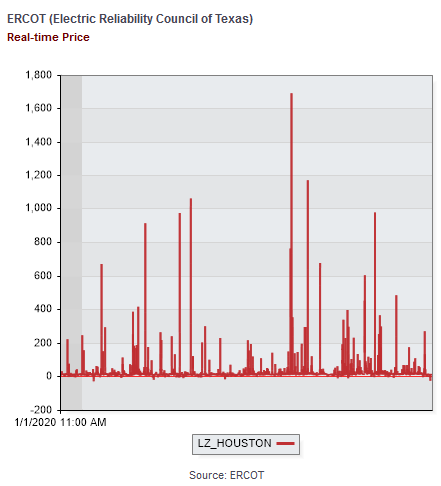

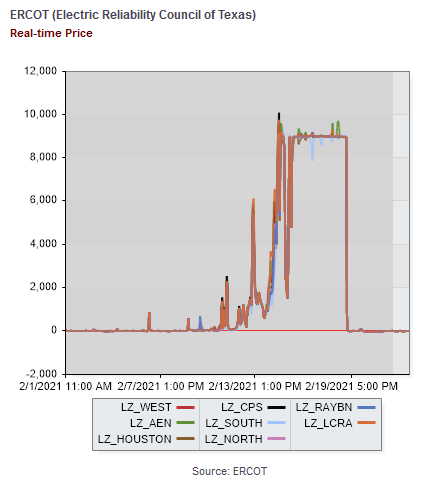

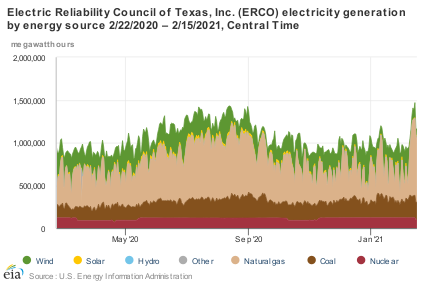

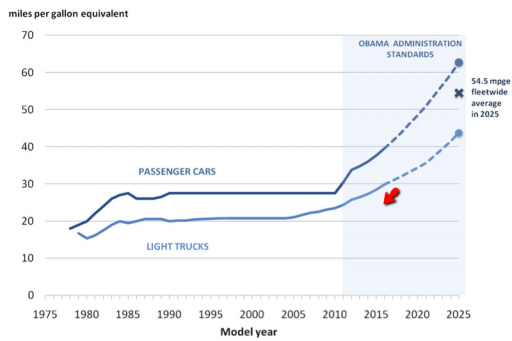

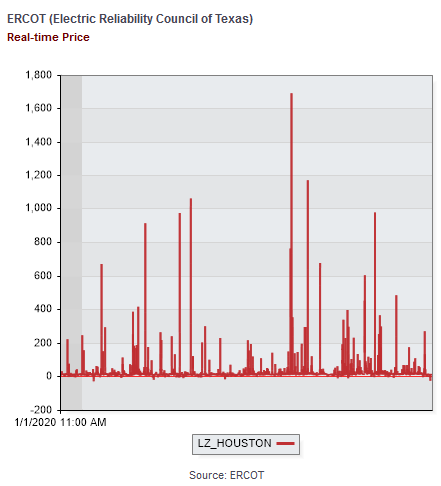

The failures led to a spectacular excursion in the market. Normally Texas grid prices are around $20/MWh (2 cents per kWh wholesale). Sometimes they’re negative (due to subsidized renewable abundance), and for a few hours a year they spike into the hundreds or thousands of dollars per MWh:

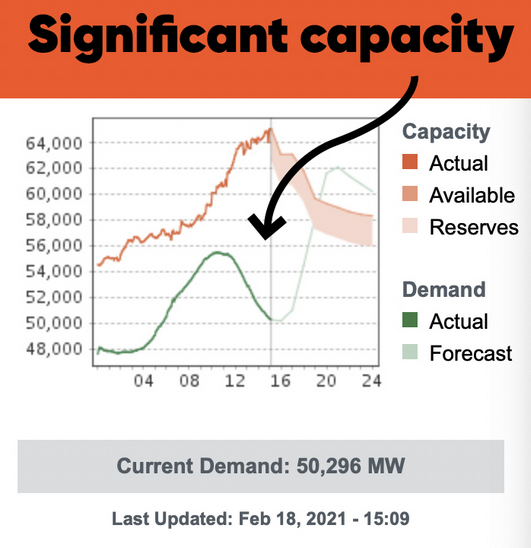

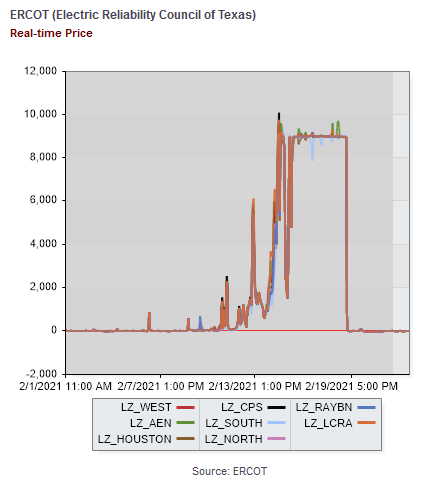

But last week, prices hit the market cap of $9,000/MWh and stayed there for days:

> “The year 2011 was a miserable cold snap and there were blackouts,” University of Houston energy fellow Edward Hirs tells the Houston Chronicle. “It happened before and will continue to happen until Texas restructures its electricity market.” Texans “hate it when I say that,” but the Texas grid “has collapsed in exactly the same manner as the old Soviet Union,” or today’s oil sector in Venezuela, he added. “It limped along on underinvestment and neglect until it finally broke under predictable circumstances.”

I think comparisons to the Soviet Union are misplaced. Yes, any large-scale collapse is going to have some common features, as positive feedbacks on a network lead to cascades of component failures. But that’s where the similarities end. Invoking the USSR invites thoughts of communism, which is not a feature of the Texas electricity market. It has a central operator out of necessity, but it doesn’t have central planning of investment, and it does have clear property rights, private ownership of capital, a transparent market, and rule of law. Until last week, most participants liked it the way it was.

The architect sees it differently:

> William Hogan, the Harvard global energy policy professor who designed the system Texas adopted seven years ago, disagreed, arguing that the state’s energy market has functioned as designed. Higher electricity demand leads to higher prices, forcing consumers to cut back on energy use while encouraging power plants to increase their output of electricity. “It’s not convenient,” Hogan told the Times. “It’s not nice. It’s necessary.”

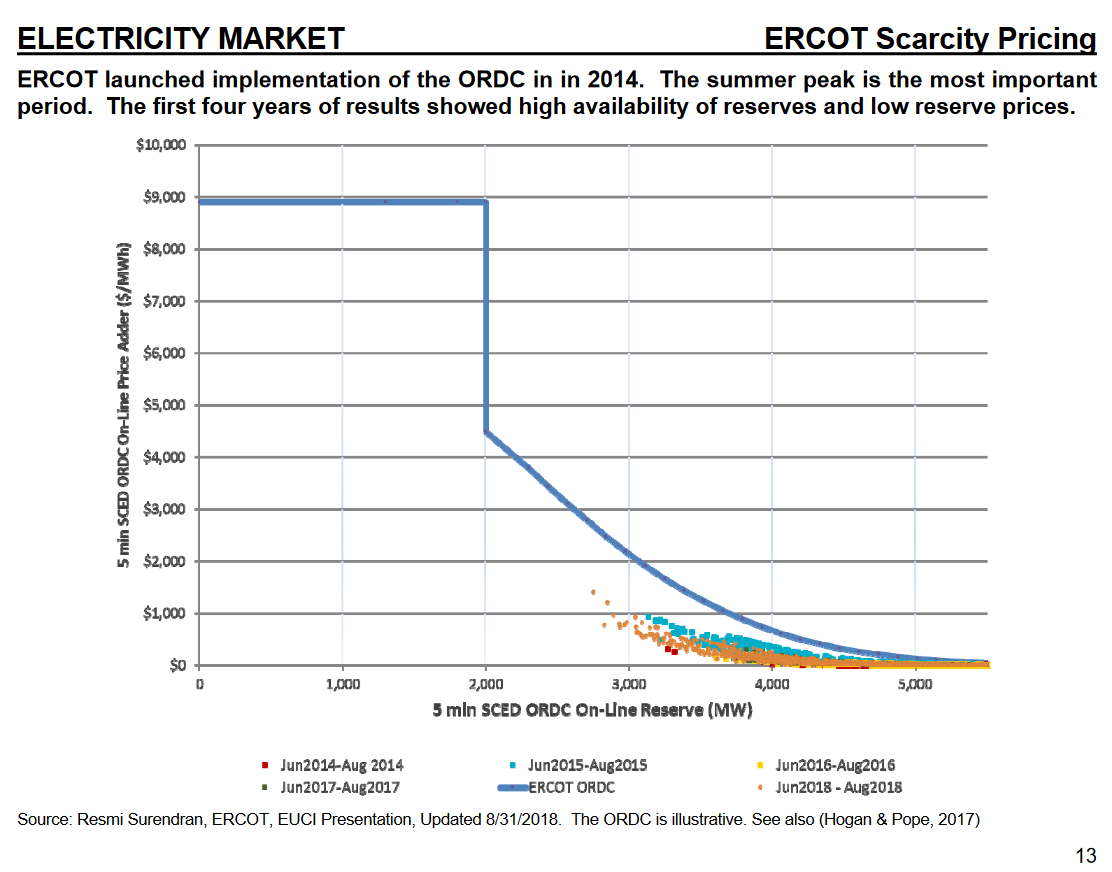

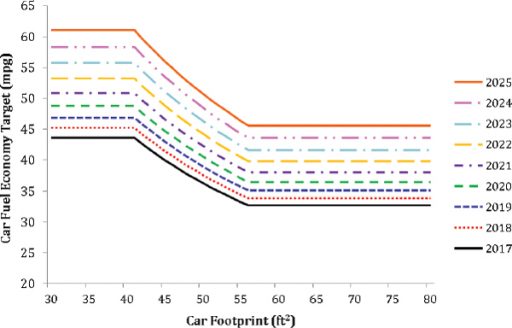

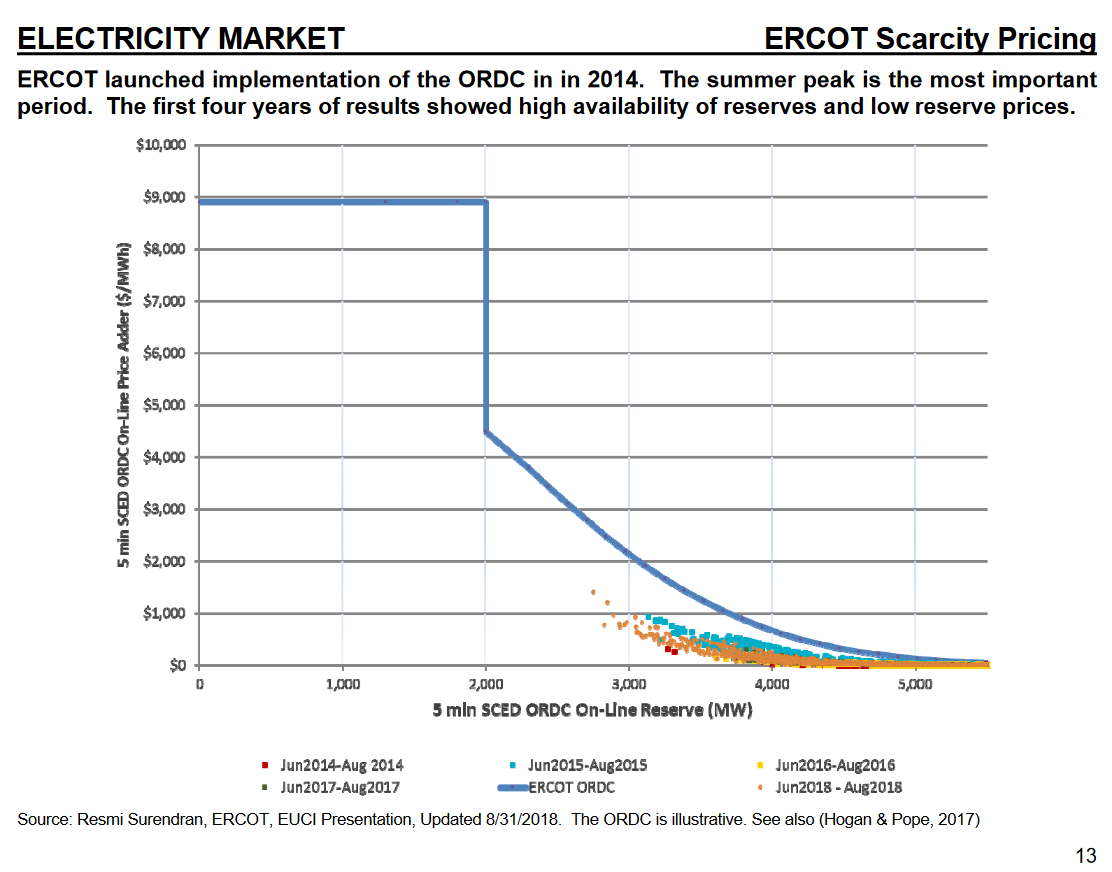

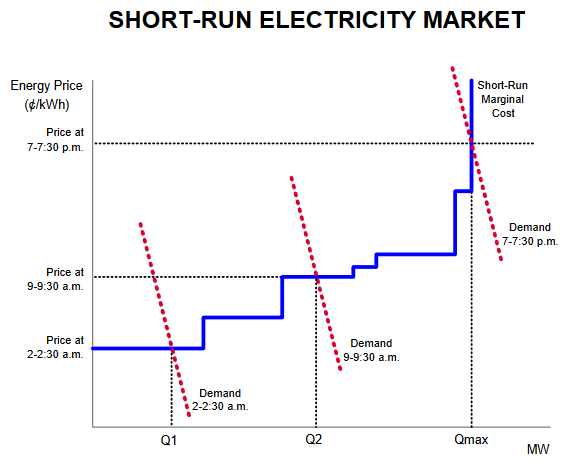

Essentially, he’s taking a short-term functional view of the market: for the set of inputs given (high demand, low capacity online), it produces exactly the output intended (extremely high prices). You can see the intent in ERCOT’s Operating Reserve Demand Curve (ORDC):

– W. Hogan, 2018

(This is a capacity reserve payment, but the same idea applies to regular pricing.)
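To make the shape of such a curve concrete, here is a minimal sketch of an ORDC-style price adder. It assumes the commonly described form in which the adder is the loss-of-load probability at the current reserve level, multiplied by the gap between the value of lost load and the prevailing energy price. The normal distribution of reserve errors, the 2,000 MW minimum contingency level, and every numeric parameter are illustrative assumptions of mine, not ERCOT’s actual settings.

```python
import math

def lolp(reserves_mw, min_contingency_mw=2000.0, mu_mw=0.0, sigma_mw=1200.0):
    """Hypothetical loss-of-load probability at a given reserve level.

    Assumes reserve shortfalls are normally distributed (an assumption of
    this sketch). At or below the minimum contingency level the probability
    is treated as 1, so this simplified curve has a step at that point.
    """
    usable = reserves_mw - min_contingency_mw
    if usable <= 0:
        return 1.0
    z = (usable - mu_mw) / sigma_mw
    # Upper-tail probability of a standard normal variable: P(Z > z)
    return 0.5 * math.erfc(z / math.sqrt(2.0))

def ordc_adder(reserves_mw, energy_price, voll=9000.0):
    """Reserve price adder in $/MWh: LOLP times (VOLL minus energy price)."""
    return max(voll - energy_price, 0.0) * lolp(reserves_mw)

# Illustrative reserve levels, from comfortable to scarce
for reserves in (8000, 5000, 3000, 2000):
    adder = ordc_adder(reserves, energy_price=30.0)
    print(f"reserves {reserves:>5} MW -> adder ${adder:,.2f}/MWh")
```

With plentiful reserves the adder is negligible, and it climbs toward the full value of lost load as reserves approach the minimum level, which is the "scarcity means maximum price" logic in miniature.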

In a technical sense, Hogan may be right. But I think this takes too narrow a view of the market. I’m reminded of something I heard from Hunter Lovins a long time ago: “markets are good servants, poor masters, and a lousy religion.” We can’t declare victory when the market delivers a designed technical result; we have to decide whether the design served any useful social purpose. If we fail to do that, we are the slaves, with the markets our masters. Looking at things more broadly, it seems like there are some big problems that need to be addressed.

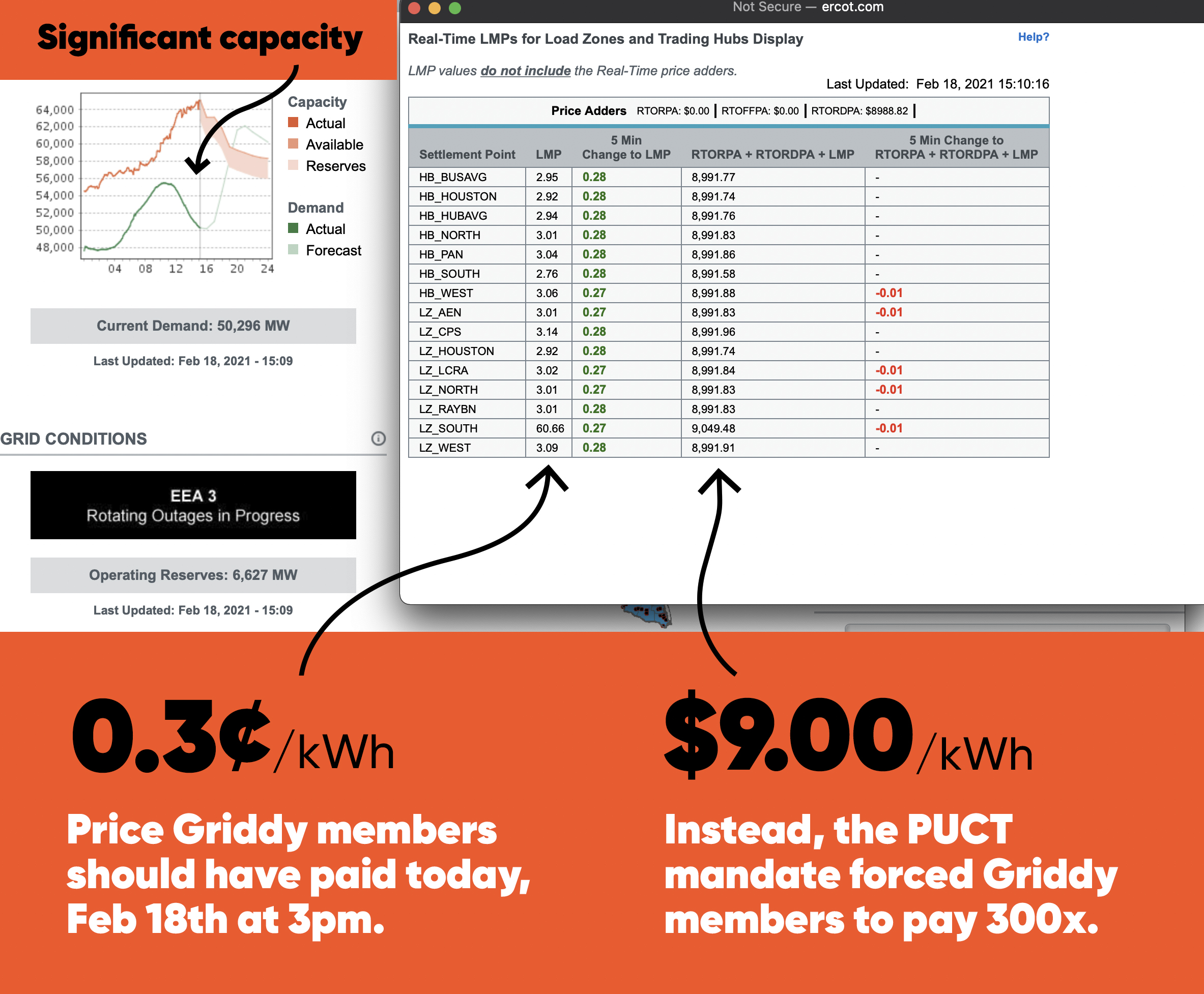

First, it appears that the high prices were not entirely a result of the market clearing process. According to Platts, the PUC put its finger on the scale:

> The PUC met Feb. 15 to address the pricing issue and decided to order ERCOT to set prices administratively at the $9,000/MWh systemwide offer cap during the emergency.
>
> “At various times today (Feb. 15), energy prices across the system have been as low as approximately $1,200[/MWh],” the order states. “The Commission believes this outcome is inconsistent with the fundamental design of the ERCOT market. Energy prices should reflect scarcity of the supply. If customer load is being shed, scarcity is at its maximum, and the market price for the energy needed to serve that load should also be at its highest.”
>
> The PUC also ordered ERCOT “to correct any past prices such that firm load that is being shed in [Energy Emergency Alert Level 3] is accounted for in ERCOT’s scarcity pricing signals.”
>
> – S&P Global Platts

Second, there’s some indication that exposure to the market was extremely harmful to some customers, who now face astronomical power bills. Exposing customers to almost-unlimited losses, in the face of huge information asymmetries between payers and utilities, strikes me as predatory and unethical. You can take a Darwinian view of that, but it’s hardly a libertarian triumph if PUC intervention in the market transferred a huge amount of money from customers to utilities.

Third, let’s go back to the point of good price signals expressed by Hogan above:

> Higher electricity demand leads to higher prices, forcing consumers to cut back on energy use while encouraging power plants to increase their output of electricity. “It’s not convenient,” Hogan told the Times. “It’s not nice. It’s necessary.”

It may have been necessary, but it apparently wasn’t sufficient in the short run, because demand was not curtailed much (except by blackouts), and high prices could not keep capacity online when it failed for technical reasons.

I think the demand-side problem is that there’s very little retail price exposure in the market. The customers of Griddy and other services with spot price exposure apparently didn’t have the tools to observe real-time prices and conserve before their bills went through the roof. Customers with fixed rates may soon find that their utilities are bankrupt, as happened in the California debacle.
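To get a feel for what spot exposure means at the meter, here is a rough back-of-the-envelope sketch. It converts wholesale prices to retail units and prices one day of household consumption at a typical wholesale level and at the offer cap. The 50 kWh/day usage figure is purely an assumption for illustration, and the calculation ignores delivery charges, fees, and taxes.

```python
def mwh_to_cents_per_kwh(price_per_mwh):
    """Convert $/MWh to cents/kWh (divide by 1000 for kWh, multiply by 100 for cents)."""
    return price_per_mwh / 10.0

def energy_cost(price_per_mwh, kwh):
    """Dollar cost of consuming `kwh` at a wholesale price given in $/MWh."""
    return price_per_mwh / 1000.0 * kwh

DAILY_KWH = 50.0  # assumed household usage in a cold snap; not from any data source

for label, price in (("typical", 20.0), ("offer cap", 9000.0)):
    cents = mwh_to_cents_per_kwh(price)
    daily = energy_cost(price, DAILY_KWH)
    print(f"{label:>9}: {cents:7.1f} cents/kWh, ${daily:,.2f} per day")
```

Under these assumptions, every day at the cap costs as much energy as more than a year at the typical price, which is why a few days without a way to see and react to prices can be ruinous.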

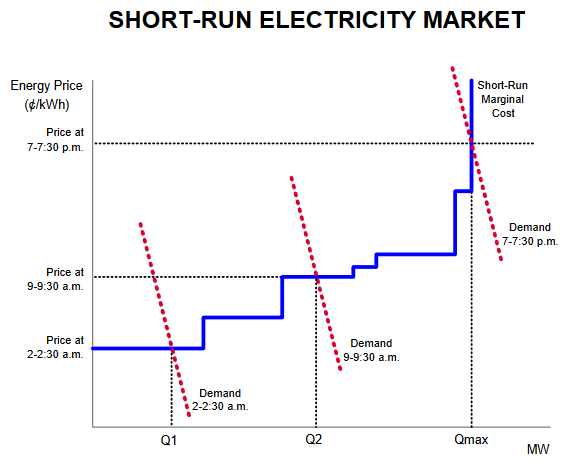

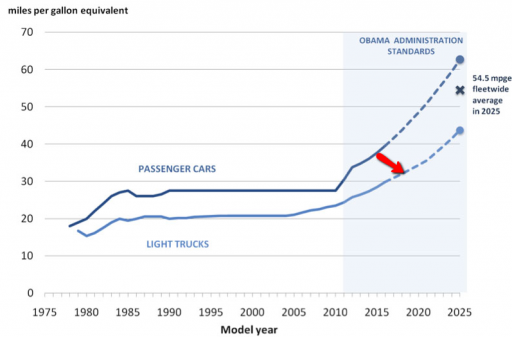

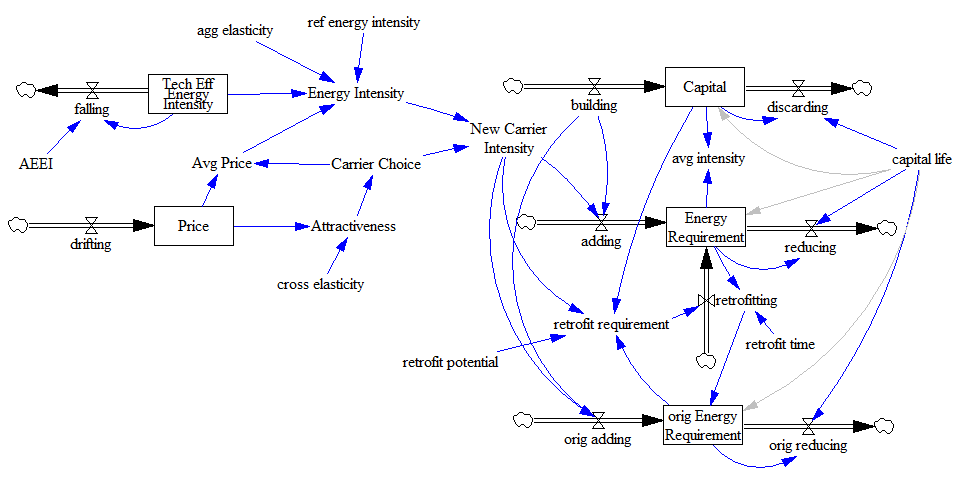

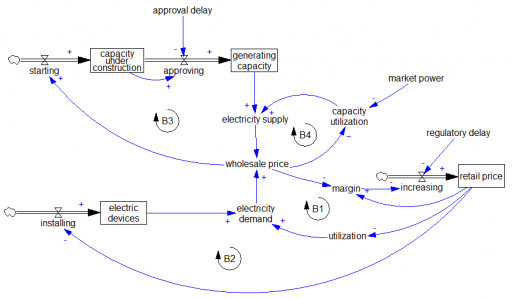

Hogan diagrams the situation like this:

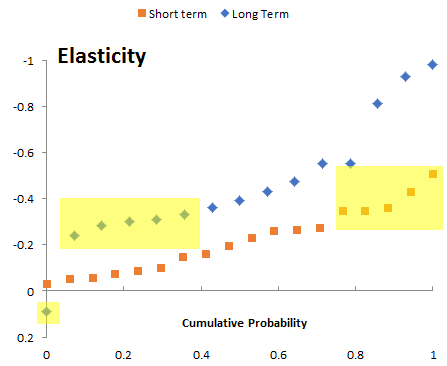

This is just a schematic, but in reality I think there are too many markets where the red demand curves are nearly vertical, because very few customers see real-time prices. That’s very destabilizing.
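A toy calculation shows why near-vertical demand is destabilizing. The sketch below finds the clearing price by bisection for a saturating supply curve and a constant-elasticity demand curve. Both functional forms and all the numbers are hypothetical choices of mine, picked only so the market clears near $20/MWh in normal conditions. They are not calibrated to ERCOT.

```python
def supply_mw(price, capacity_mw, k=5.0):
    """Hypothetical supply curve: rises with price and saturates at capacity."""
    return capacity_mw * price / (price + k)

def demand_mw(price, ref_mw, elasticity, ref_price=20.0):
    """Constant-elasticity demand; elasticity 0 gives a vertical demand curve."""
    return ref_mw * (price / ref_price) ** (-elasticity)

def clearing_price(capacity_mw, ref_mw, elasticity, cap=9000.0):
    """Bisect on price until supply meets demand, or return the offer cap."""
    def excess(p):
        return demand_mw(p, ref_mw, elasticity) - supply_mw(p, capacity_mw)

    if excess(cap) > 0:
        return cap  # demand exceeds supply even at the cap: load must be shed
    lo, hi = 0.01, cap
    for _ in range(100):
        mid = 0.5 * (lo + hi)
        if excess(mid) > 0:
            lo = mid
        else:
            hi = mid
    return hi

# Tight conditions: 50,000 MW available against 49,500 MW of reference demand
for eps in (0.0, 0.05, 0.1):
    price = clearing_price(50000.0, 49500.0, eps)
    print(f"elasticity {eps:.2f} -> clearing price ${price:,.0f}/MWh")

# Capacity short of fully inelastic demand: the price pins at the cap
print(clearing_price(49000.0, 49500.0, 0.0))
```

In this toy setup, even a small price elasticity cuts the scarcity price by roughly an order of magnitude, while fully inelastic demand either produces a large spike or pins the price at the cap with load left unserved.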

Strangely, the importance of retail price elasticity has long been known. In their seminal work on Spot Pricing of Electricity, Schweppe, Caramanis, Tabors & Bohn write, right in the introduction:

> Five ingredients for a successful marketplace are
>
> - A supply side with varying supply costs that increase with demand
>
> - A demand side with varying demands which can adapt to price changes
>
> - A market mechanism for buying and selling
>
> - No monopsonistic behavior on the demand side
>
> - No monopolistic behavior on the supply side

I find it puzzling that there isn’t more attention to creation of retail demand response. I suspect the answer may be that utilities don’t want it, because flat rates create cross-subsidies that let them sell more power overall, by spreading costs from high peak users across the entire rate base.

On the supply side, I think the question is whether the expectation that prices could one day go to the $9,000/MWh cap induced suppliers to do anything to provide greater contingency power, by investing in peakers or in the resiliency of their own operations. Certainly any generator who went offline on Feb. 15 due to failure to winterize left a huge amount of money on the table. Yet it appears that many did exactly that.

Presumably there are some good behavioral reasons for this. No one expected correlated failures across the system, and thus they underestimated the challenge of staying online in the worst conditions. There’s lots of evidence that people perceive the risk of rare events poorly. Even a sophisticated investor who understood the prospects would have had a hard time convincing financiers to invest in resilience: imagine walking into a bank and saying, “I’d like a loan for this piece of equipment, which will never be used, until one day in a couple of years when it will pay for itself in one go.”
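The banker’s dilemma can be put in rough numbers. This sketch computes the revenue a plant would earn by staying online through a cap-price event, along with the chance that no such event occurs during a loan term. The plant size, event duration, upgrade cost, loan term, and the one-in-ten annual event probability are all assumptions for illustration, and fuel and operating costs are ignored.

```python
def event_revenue(capacity_mw, hours, price_per_mwh):
    """Gross revenue from running at full output through a scarcity event."""
    return capacity_mw * hours * price_per_mwh

def prob_no_event(annual_probability, years):
    """Chance that no event occurs in `years`, assuming independent years."""
    return (1.0 - annual_probability) ** years

CAPACITY_MW = 100.0        # hypothetical plant size
EVENT_HOURS = 72.0         # hypothetical three days at the cap
CAP_PRICE = 9000.0         # $/MWh offer cap
ANNUAL_PROB = 0.1          # assumed: roughly one severe event per decade
UPGRADE_COST = 5_000_000.0 # hypothetical winterization cost
LOAN_YEARS = 5

revenue = event_revenue(CAPACITY_MW, EVENT_HOURS, CAP_PRICE)
expected_per_year = ANNUAL_PROB * revenue
idle_chance = prob_no_event(ANNUAL_PROB, LOAN_YEARS)

print(f"revenue if online during the event: ${revenue:,.0f}")
print(f"expected value per year:            ${expected_per_year:,.0f}")
print(f"upgrade cost:                       ${UPGRADE_COST:,.0f}")
print(f"chance of no event in {LOAN_YEARS} years:      {idle_chance:.0%}")
```

Under these made-up numbers the investment looks excellent in expectation, yet more often than not it earns nothing over the life of the loan, which is exactly the pitch that is hard to make to a lender.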

I think legislators and regulators have their work cut out for them. Hopefully they can resist the urge to throw the baby out with the bathwater. It’s wrong to indict communism, capitalism, renewables, or any single actor; this was a systemic failure, and similar events have happened under other regimes, and will happen again. ERCOT has been a pioneering design in many ways, and it would be a shame to revert to a regulated, average-cost-pricing model. The cure for ills like demand inelasticity is more market exposure, not less. The market may require more than a little tinkering around the edges, but catastrophes are rare, so there ought to be time to do that.