The Montana climate case, Held v. State of Montana, has just turned in a win for the youth plaintiffs.

The decision looks pretty strong. I think the bottom line is that the legislature’s MEPA exclusions, which prevent consideration of climate in state regulation, limit the environmental rights guaranteed by Montana’s constitution and therefore require strict scrutiny. The state failed to show that the MEPA Limitation serves a compelling government interest.

Not to diminish the accomplishments of the plaintiffs, but the state put forth a very weak case. The Montana Supreme Court tossed out AG Knudsen’s untimely efforts to send the case back to the drawing board. The state’s own attorney, Thane Johnson, couldn’t get the acronyms IPCC and RCP right. That’s perhaps not surprising, given that the Director of Montana’s alleged environmental agency admitted unfamiliarity with the largest scientific body related to climate:

Montana’s top witnesses — state employees who are responsible for permitting fossil fuel projects — however, acknowledged they are not well-versed in climate science and at times struggled with the many acronyms used in the case.

Chris Dorrington, director of the Montana Department of Environmental Quality, told an attorney for the youth that he had been unaware of the U.N. Intergovernmental Panel on Climate Change (IPCC) — which has issued increasingly dire assessments since it was established more than 30 years ago to synthesize global climate data.

“I attended this trial last week, when there was testimony relevant to IPCC,” Dorrington said. “Prior to that, I wasn’t familiar, and certainly not deeply familiar with its role or its work.”

As noted by Judge Seeley, the state left much of the plaintiffs’ evidence uncontested. It also declined to call its star witness on climate science, Judith Curry, who reflects:

MT’s lawyers were totally unprepared for direct and cross examination of climate science witnesses. This was not surprising, since this is a very complex issue that they apparently had not previously encountered. One lawyer who was cross-examining the Plaintiffs’ witnesses kept getting confused by ICP (IPCC) and RPC (RCP). The Plaintiffs were very enthusiastic about keeping witnesses in reserve to rebut my testimony, with several of the Plaintiffs’ witnesses who were leaving on travel presenting pre-buttals to my anticipated testimony during their direct questioning – all of this totally misrepresented what was in my written testimony, and can now be deleted from the court record since I didn’t testify. I can see that all of this would have turned the Hearing into a 3-ring climate circus, and at the end of all that I might not have managed to get my important points across, since I am only allowed to respond to questions.

On Thurs eve, I received a call from the lead Montana lawyer telling me that they were “letting me off the hook.” I was relieved to be able to stay home and recapture those 4 days I had scheduled for travel to and from MT.

The state’s team sounds pretty dysfunctional:

Montana’s approach to the case has evolved since 2020, has evolved rapidly in the last 6 months since a new legal team was brought in, and even evolved rapidly during the course of the trial. The lawyers I spoke to in Sept 2022 were gone by the end of Oct, with an interim team brought in from the private sector, and then a new team that was hired for the Montana’s State Attorney’s Office in Dec.

MT’s original expert witnesses were apparently tossed, and I and several other expert witnesses were brought on board in the 11th hour, around Sept 2022. Note: instructions for preparing our written reports were received from lawyers two generations removed from the actual trial lawyers. As per questioning during my Deposition, I gleaned that the state originally had a collection of witnesses that were pretty subpar (I don’t know who they were). The new set of witnesses was apparently much better.

If the state has such a compelling case, why can’t they get their act together?

In any case, I find one argument in all of this really disturbing. Suppose we accept Curry’s math:

With regards to Montana’s CO2 emissions, based on 2019 estimates Montana produces 0.63% of U.S. emissions and 0.09% of global emissions. For an anticipated warming of 2°C, Montana’s 0.09% of emissions would account for 0.0018°C of warming. There are other ways to frame this calculation (and more recent numbers), but any way you slice it, you can’t come up with a significant amount of global warming that is caused by Montana’s emissions.

Never mind that MT is also only 0.0135% of the global population. If you get granular enough, every region is a tiny fraction of the world in all things. So if we are to imagine that “my contribution is small” equates to “I don’t have to do anything about the problem,” then no one has to do anything about climate, or any other global problem for that matter. There’s no role for leadership, cooperation or enlightened self-interest. This is a circular firing squad for global civilization.
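To make the granularity point concrete, here is a minimal sketch of the arithmetic. It takes the quoted figures at face value (0.09% of global emissions, 2°C of anticipated warming) and assumes warming is attributed in simple proportion to emissions share, which is the framing of the quoted calculation, not a climate model. The equal-sized regions are purely hypothetical, and `attributed_warming` is a name I made up for illustration.

```python
# Figures quoted above; proportional attribution is an assumption of this sketch.
ANTICIPATED_WARMING_C = 2.0      # anticipated global warming, in degrees C
MONTANA_SHARE = 0.09 / 100       # Montana's share of global emissions


def attributed_warming(share, total_warming=ANTICIPATED_WARMING_C):
    """Warming attributed to an emitter under simple proportional attribution."""
    return share * total_warming


montana = attributed_warming(MONTANA_SHARE)
print(f"Montana alone: {montana:.4f} C")

# Hypothetical: carve the whole world into equal regions, each about as big
# an emitter as Montana. Every region can call its own share insignificant.
n_regions = round(1 / MONTANA_SHARE)
region_share = 1 / n_regions
per_region = attributed_warming(region_share)
total = sum(per_region for _ in range(n_regions))

print(f"{n_regions} regions at {per_region:.4f} C each add up to {total:.2f} C")
```

Each slice looks negligible on its own, yet the slices sum to the entire anticipated warming. The small number reflects how finely you cut the world, not how little the problem matters.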

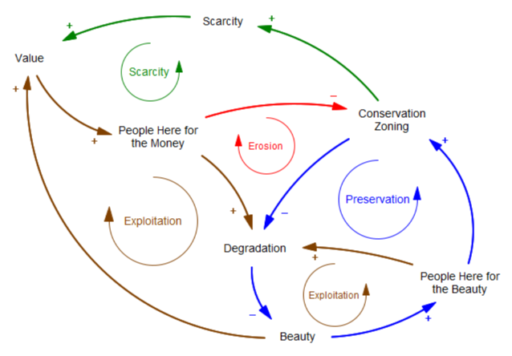

Fifty years ago, some farsighted residents realized that development would eventually ruin the area, so they created conservation zoning designed to limit density and preserve natural resources. For a long time, that structure attracted people who were more interested in beauty than money, and the rules enjoyed strong support.
