Following Bill Harris’ comment on Are causal loop diagrams useful? I went looking for Coyle’s hybrid influence diagrams. I didn’t find them, but instead ran across this interesting conversation in the SDR:

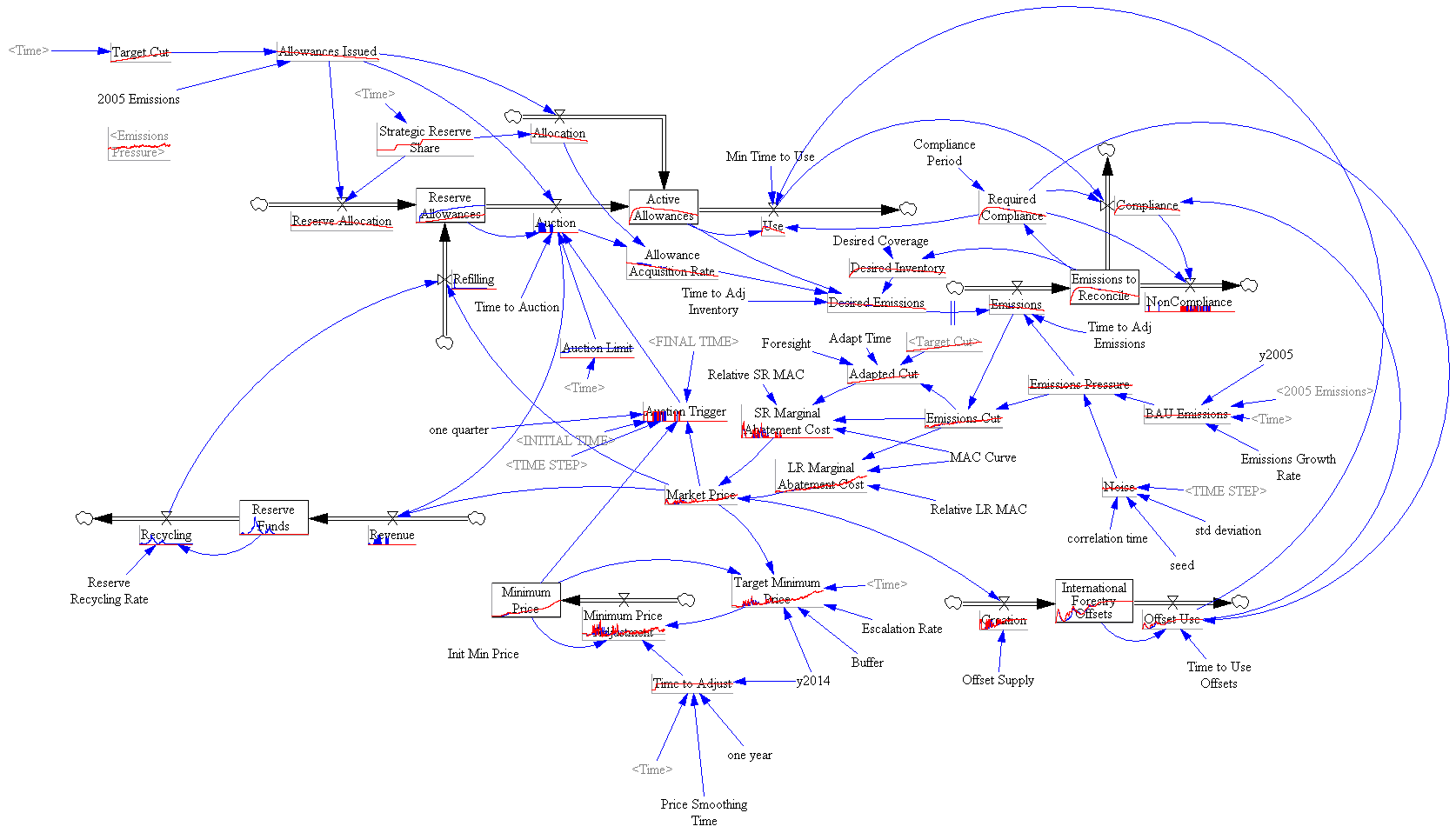

The tradition, one might call it the orthodoxy, in system dynamics is that a problem can only be analysed, and policy guidance given, through the aegis of a fully quantified model. In the last 15 years, however, a number of purely qualitative models have been described, and have been criticised, in the literature. This article briefly reviews that debate and then discusses some of the problems and risks sometimes involved in quantification. Those problems are exemplified by an analysis of a particular model, which turns out to bear little relation to the real problem it purported to analyse. Some qualitative models are then reviewed to show that they can, indeed, lead to policy insights and five roles for qualitative models are identified. Finally, a research agenda is proposed to determine the wise balance between qualitative and quantitative models.

… In none of this work was it stated or implied that dynamic behaviour can reliably be inferred from a complex diagram; it has simply been argued that describing a system is, in itself, a useful thing to do and may lead to better understanding of the problem in question. It has, on the other hand, been implied that, in some cases, quantification might be fraught with so many uncertainties that the model’s outputs could be so misleading that the policy inferences drawn from them might be illusory. The research issue is whether or not there are circumstances in which the uncertainties of simulation may be so large that the results are seriously misleading to the analyst and the client. … This stream of work has attracted some adverse comment. Lane has gone so far as to assert that system dynamics without quantified simulation is an oxymoron and has called it ‘system dynamics lite (sic)’. …

Coyle (2000) Qualitative and quantitative modelling in system dynamics: some research questions

Jack Homer and Rogelio Oliva aren’t buying it:

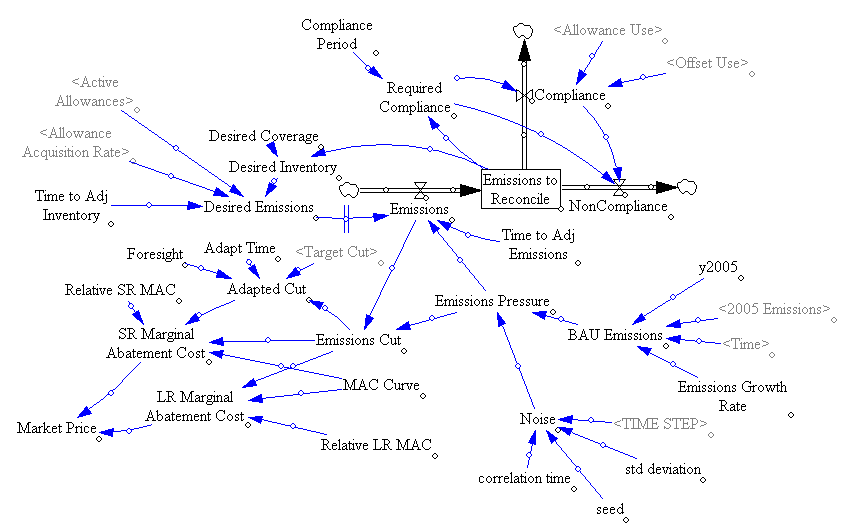

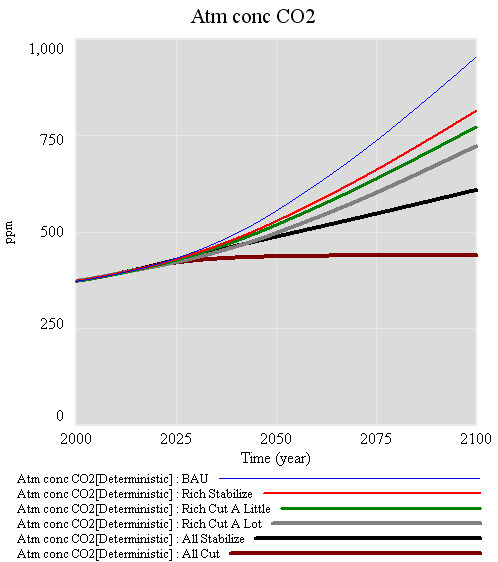

Geoff Coyle has recently posed the question as to whether or not there may be situations in which computer simulation adds no value beyond that gained from qualitative causal-loop mapping. We argue that simulation nearly always adds value, even in the face of significant uncertainties about data and the formulation of soft variables. This value derives from the fact that simulation models are formally testable, making it possible to draw behavioral and policy inferences reliably through simulation in a way that is rarely possible with maps alone. Even in those cases in which the uncertainties are too great to reach firm conclusions from a model, simulation can provide value by indicating which pieces of information would be required in order to make firm conclusions possible. Though qualitative mapping is useful for describing a problem situation and its possible causes and solutions, the added value of simulation modeling suggests that it should be used for dynamic analysis whenever the stakes are significant and time and budget permit.

Homer & Oliva (2001) Maps and models in system dynamics: a response to Coyle

Coyle rejoins:

This rejoinder clarifies that there is significant agreement between my position and that of Homer and Oliva as elaborated in their response. Where we differ is largely to the extent that quantification offers worthwhile benefit over and above analysis from qualitative analysis (diagrams and discourse) alone. Quantification may indeed offer potential value in many cases, though even here it may not actually represent ‘‘value for money’’. However, even more concerning is that in other cases the risks associated with attempting to quantify multiple and poorly understood soft relationships are likely to outweigh whatever potential benefit there might be. To support these propositions I add further citations to published work that recount effective qualitative-only based studies, and I offer a further real-world example where any attempts to quantify ‘‘multiple softness’’ could have lead to confusion rather than enlightenment. My proposition remains that this is an issue that deserves real research to test the positions of Homer and Oliva, myself, and no doubt others, which are at this stage largely based on personal experiences and anecdotal evidence.

My take: I agree with Coyle that qualitative models can often lead to insight. However, I don’t buy the argument that the risks of quantification of poorly understood soft variables exceeds the benefits. First, if the variables in question are really too squishy to get a grip on, that part of the modeling effort will fail. Even so, the modeler will have some other working pieces that are more physical or certain, providing insight into the context in which the soft variables operate. Second, as long as the modeler is doing things right, which means spending ample effort on validation and sensitivity analysis, the danger of dodgy quantification will reveal itself as large uncertainties in behavior subject to the assumptions in question. Third, the mere attempt to quantify the qualitative is likely to yield some insight into the uncertain variables, which exceeds that derived from the purely qualitative approach. In fact, I would argue that the greater danger lies in the qualitative approach, because it is quite likely that plausible-looking constructs on a diagram will go unchallenged, yet harbor deep conceptual problems that would be revealed by modeling.

I see this as a cost-benefit question. With infinite resources, a model always beats a diagram. The trouble is that in many cases time, money and the will of participants are in short supply, or can’t be justified given the small scale of a problem. Often in those cases a qualitative approach is justified, and diagramming or other elicitation of structure is likely to yield a better outcome than pure talk. Also, where resources are limited, an overzealous modeling attempt could lead to narrow focus, overemphasis on easily quantifiable concepts, and implementation failure due to too much model and not enough process. If there’s a risk to modeling, that’s it – but that’s a risk of bad modeling, and there are many of those.