It’s SD conference paper review time again. Last year I took notes while reviewing, in an attempt to capture the attributes of a good paper. A few additional thoughts:

- No model is perfect, but it pays to ask yourself, will your model stand up to critique?

- Model-data comparison is extremely valuable and too seldom done, but trivial tests are not interesting. Fit to data is a weak test of model validity; it’s often necessary, but never sufficient as a measure of quality. I’d much rather see the response of a model to a step input or an extreme conditions test than a model-data comparison. It’s too easy to match the model to the data with exogenous inputs, so unless I see a discussion of a multi-faceted approach to validation, I get suspicious. You might consider how your model meets the following criteria:

- Do decision rules use information actually available to real agents in the system?

- Would real decision makers agree with the decision rules attributed to them?

- Does the model conserve energy, mass, people, money, and other physical quantities?

- What happens to the behavior in extreme conditions?

- Do physical quantities always have nonnegative values?

- Do units balance?

- If you have time series output, show it with graphs – it takes a lot of work to “see” the behavior in tables. On the other hand, tables can be great for other comparisons of outcomes.

- If all of your graphs show constant values, linear increases (ramps), or exponentials, my eyes glaze over, unless you can make a compelling case that your model world is really that simple, or that people fail to appreciate the implications of those behaviors.

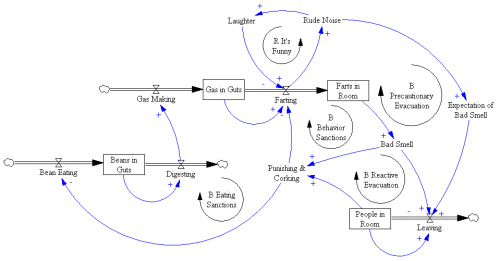

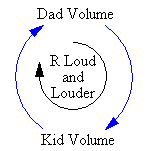

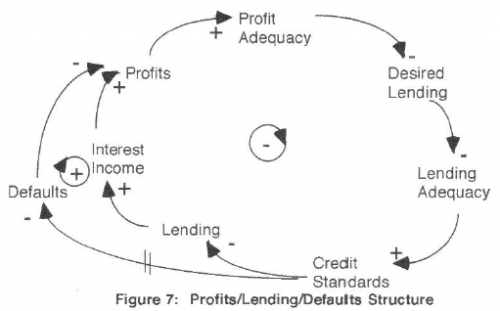

- Relate behavior to structure. I don’t care what happens in scenarios unless I know why it happens. One effective way to do this is to run tests with and without certain feedback loops or sectors of the model active.

- Discuss what lies beyond the boundary of your model. What did you leave out and why? How does this limit the applicability of the results?

- If you explore a variety of scenarios with your model (as you should), introduce the discussion with some motivation, i.e. why are the particular scenarios tested important, realistic, etc.?

- Take some time to clean up your model diagrams. Eliminate arrows that cross unnecessarily. Hide unimportant parameters. Use clear variable names.

- It’s easiest to understand behavior in deterministic experiments, so I like to see those. But the real world is noisy and uncertain, so it’s also nice to see experiments with stochastic variation or Monte Carlo exploration of the parameter space. For example, there are typically many papers on water policy in the ENV thread. Water availability is contingent on precipitation, which is variable on many time scales. A system’s response to variation or extremes of precipitation is at least as important as its mean behavior.

- Modeling aids understanding, which is intrinsically valuable, but usually the real endpoint of a modeling exercise is a decision or policy change. Sometimes, it’s enough to use the model to characterize a problem, after which the solution is obvious. More often, though, the model should be used to develop and test decision rules that solve the problem you set out to conquer. Show me some alternative strategies, discuss their limitations and advantages, and describe how they might be implemented in the real world.

- If you say that an SD model can’t predict or forecast, be very careful. SD practitioners recognized early on that forecasting was often a fool’s errand, and that insight into behavior modes for design of robust policies was a worthier goal. However, SD is generally about building good dynamic models with appropriate representations of behavior and so forth, and good models are a prerequisite to good predictions. An SD model that’s well calibrated can forecast as well as any other method, and will likely perform better out of sample than pure statistical approaches. More importantly, experimentation with the model will reveal the limits of prediction.

- It never hurts to look at your paper the way a reviewer will look at it.