This archive contains the FREE climate-economy model, as documented in my thesis.

Tag: feedback

A Titanic feedback reversal

Ever get in a hotel shower and turn the faucet the wrong way, getting scalded or frozen as a result? It doesn’t help when the faucet is unmarked or backwards. If a new account is correct, that’s what happened to the Titanic.

(Reuters) – The Titanic hit an iceberg in 1912 because of a basic steering error, and only sank as fast as it did because an official persuaded the captain to continue sailing, an author said in an interview published on Wednesday.

…

“They could easily have avoided the iceberg if it wasn’t for the blunder,” Patten told the Daily Telegraph.

“Instead of steering Titanic safely round to the left of the iceberg, once it had been spotted dead ahead, the steersman, Robert Hitchins, had panicked and turned it the wrong way.”

Patten, who made the revelations to coincide with the publication of her new novel “Good as Gold” into which her account of events is woven, said that the conversion from sail ships to steam meant there were two different steering systems.

Crucially, one system meant turning the wheel one way and the other in completely the opposite direction.

Once the mistake had been made, Patten added, “they only had four minutes to change course and by the time (first officer William) Murdoch spotted Hitchins’ mistake and then tried to rectify it, it was too late.”

It sounds like the steering layout violates most of Norman’s design principles (summarized here):

- Use both knowledge in the world and knowledge in the head.

- Simplify the structure of tasks.

- Make things visible: bridge the Gulfs of Execution and Evaluation.

- Get the mappings right.

- Exploit the power of constraints, both natural and artificial.

- Design for error.

- When all else fails, standardize.

Notice that these are really all about providing appropriate feedback, mental models, and robustness.
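To make the feedback point concrete, here is a minimal sketch of what a reversed mapping does to a correcting loop. It assumes a hypothetical helmsman who turns the wheel in proportion to the heading error at each step (`gain`), with a target heading of -10 standing in for “to the left”. Units are arbitrary, and real rudders, turn rates, and the Titanic’s actual steering gear are not modeled. `mapping_sign = -1` represents a wheel that moves the ship opposite to what the helmsman expects.

```python
def steer(mapping_sign: float, target: float = -10.0, gain: float = 0.5,
          steps: int = 12) -> list[float]:
    """Return the heading (arbitrary units) after each correction.

    The hypothetical helmsman turns the wheel in proportion to the heading error.
    mapping_sign = +1: the wheel turns the ship the way the helmsman expects.
    mapping_sign = -1: the wheel is effectively backwards (reversed mapping).
    """
    heading = 0.0
    history = [heading]
    for _ in range(steps):
        error = target - heading          # how far we are from the desired heading
        wheel = gain * error              # the correction the helmsman intends
        heading += mapping_sign * wheel   # what the ship actually does
        history.append(heading)
    return history


expected_mapping = steer(+1)
reversed_mapping = steer(-1)
print(round(expected_mapping[-1], 2), round(reversed_mapping[-1], 1))
```

With the expected mapping the heading settles at the target. With the reversed mapping the same well-intentioned corrections push the heading the wrong way, and the error grows by half again at every step: the negative (correcting) loop has become a positive (runaway) one.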

(This is a repost from Sep. 22, 2010, for the 100th anniversary.)

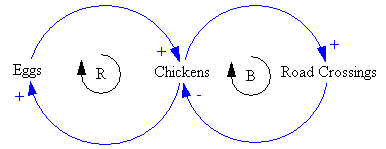

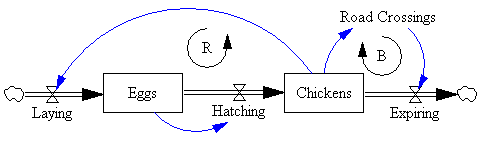

Are causal loop diagrams useful?

Reflecting on the Afghanistan counterinsurgency diagram in the NYTimes, Scott Johnson asked me whether I found causal loop diagrams (CLDs) to be useful. Some system dynamics hardliners don’t like them, and others use them routinely.

Here’s a CLD:

And here’s its stock-flow sibling:

My bottom line is:

- CLDs are very useful, if developed and presented with a little care.

- It’s often clearer to use a hybrid diagram that includes stock-flow “main chains”, though that places a higher burden on explaining the visual language.

- You can get into a lot of trouble if you try to mentally simulate the dynamics of a complex CLD, because CLDs are so underspecified (though you might still be better off than if you were just talking or making lists).

- You’re more likely to know what you’re talking about if you go through the process of building a model.

- A big, messy picture of a whole problem space can be a nice complement to a focused, high quality model.

Here’s why:

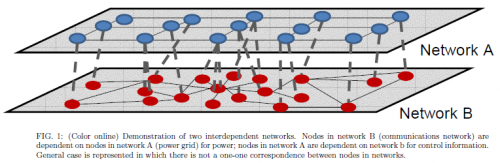
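The point about underspecified CLDs can be illustrated with a minimal stock-flow sketch. Assume a hypothetical population stock with a births inflow (a reinforcing loop) and a deaths outflow (a balancing loop). Drawn as a CLD, both parameter sets below give exactly the same diagram; only the quantified stock-flow version says which loop dominates. The function name, fractional rates, and Euler time step are illustrative choices, not taken from the diagrams above.

```python
def simulate_population(birth_fraction: float, death_fraction: float,
                        initial: float = 100.0, dt: float = 0.25,
                        years: float = 20.0) -> float:
    """Euler-integrate one stock with a reinforcing inflow and a balancing outflow."""
    stock = initial
    for _ in range(round(years / dt)):
        births = birth_fraction * stock   # reinforcing loop: more stock, more inflow
        deaths = death_fraction * stock   # balancing loop: more stock, more outflow
        stock += (births - deaths) * dt   # the stock accumulates its net flow
    return stock


# Same loop polarities, different parameters, opposite behavior.
print(simulate_population(0.03, 0.02))  # grows above the initial 100
print(simulate_population(0.02, 0.03))  # declines below the initial 100
```

Mentally simulating the CLD alone cannot distinguish these two cases; the answer lives in the parameters that the CLD leaves out.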

Cascading failures in interconnected networks

Wired covers a new article in Nature, investigating massive failures in linked networks.

The interesting thing is that feedback between the connected networks destabilizes the whole:

“When networks are interdependent, you might think they’re more stable. It might seem like we’re building in redundancy. But it can do the opposite,” said Eugene Stanley, a Boston University physicist and co-author of the study, published April 14 in Nature.

…

The interconnections fueled a cascading effect, with the failures coursing back and forth. A damaged node in the first network would pull down nodes in the second, which crashed nodes in the first, which brought down more in the second, and so on. And when they looked at data from a 2003 Italian power blackout, in which the electrical grid was linked to the computer network that controlled it, the patterns matched their models’ math.

Interestingly, the interconnection alters the relationship between network structure (degree distribution) and robustness:

Surprisingly, a broader degree distribution increases the vulnerability of interdependent networks to random failure, which is opposite to how a single network behaves.

Chalk one up for counter-intuitive behavior of complex systems.

What looks like last year’s version of the paper is on arXiv.
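The back-and-forth mechanism described in the quote can be sketched as a small simulation. This is not the authors’ code. It assumes two random (Erdős–Rényi) networks of equal size, a one-to-one dependency between node i in one network and node i in the other, and the rule that a node keeps working only if it sits in its own network’s largest connected cluster and its partner in the other network is also working. All function names and parameters are hypothetical.

```python
import random
from collections import deque


def random_graph(n: int, avg_degree: float, rng: random.Random) -> list[set[int]]:
    """Erdős–Rényi random graph as an adjacency list (illustrative test network)."""
    p = avg_degree / (n - 1)
    adj: list[set[int]] = [set() for _ in range(n)]
    for i in range(n):
        for j in range(i + 1, n):
            if rng.random() < p:
                adj[i].add(j)
                adj[j].add(i)
    return adj


def giant_component(adj: list[set[int]], alive: set[int]) -> set[int]:
    """Largest connected cluster among the nodes that are still alive (BFS)."""
    seen: set[int] = set()
    best: set[int] = set()
    for start in alive:
        if start in seen:
            continue
        seen.add(start)
        component = {start}
        queue = deque([start])
        while queue:
            u = queue.popleft()
            for v in adj[u]:
                if v in alive and v not in seen:
                    seen.add(v)
                    component.add(v)
                    queue.append(v)
        if len(component) > len(best):
            best = component
    return best


def interdependent_cascade(adj_a: list[set[int]], adj_b: list[set[int]],
                           initial_failures: list[int]) -> tuple[set[int], int]:
    """Pass failures back and forth between two mutually dependent networks."""
    alive = set(range(len(adj_a))) - set(initial_failures)
    rounds = 0
    while True:
        rounds += 1
        alive_a = giant_component(adj_a, alive)    # A nodes cut off from A's core fail
        alive_b = giant_component(adj_b, alive_a)  # their B partners fail; B fragments
        if alive_b == alive:                       # nothing changed: cascade is over
            return alive, rounds
        alive = alive_b                            # B failures knock out A partners


rng = random.Random(42)
n = 500
net_a = random_graph(n, 4.0, rng)
net_b = random_graph(n, 4.0, rng)
hit = rng.sample(range(n), n // 4)  # knock out 25% of nodes at random

isolated = giant_component(net_a, set(range(n)) - set(hit))
coupled, rounds = interdependent_cascade(net_a, net_b, hit)
print(len(isolated), len(coupled), rounds)
```

Comparing `isolated` (what would survive if network A stood alone) with `coupled` shows the extra damage the interdependence adds for the same initial hit, and `rounds` counts how many times failures passed between the networks before the cascade stopped.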

The Seven Deadly Sins of Managing Complex Systems

I was rereading The Fifth Discipline on the way to Boston the other day, and something got me started on this. Wrath, greed, sloth, pride, lust, envy, and gluttony are the downfall of individuals, but what about the downfall of systems? Here’s my list, in no particular order:

- Information pollution. Sometimes known as lying, but also common in milder forms, such as greenwash. Example: twenty years ago, the “recycled” symbol was redefined to mean “recyclable” – a big dilution of meaning.

- Elimination of diversity. Example: overconsolidation of industries (finance, telecom, …). As Jay Forrester reportedly said, “free trade is a mechanism for allowing all regions to reach all limits at once.”

- Changing the top-level rules in pursuit of personal gain. Example: the Starpower game. As long as we pretend to want to maximize welfare in some broad sense, the system rules need to provide an equitable framework, within which individuals can pursue self-interest.

- Certainty. Planning for it leads to fragile strategies. If you can’t imagine a way you could be wrong, you’re probably a fanatic.

- Elimination of slack. Normally this is regarded as a form of optimization, but a system without any slack can’t change (except catastrophically). How are teachers supposed to improve their teaching when every minute is filled with requirements?

- Superstition. Attribution of cause by correlation or coincidence, including misapplied pattern-matching.

- The four horsemen from classic SD work on flawed mental models: linear, static, open-loop, laundry-list thinking.

That’s seven (cheating a little). But I think there are more candidates that don’t quite make the big time:

- Impatience. Don’t just do something, stand there. Sometimes.

- Failure to account for delays.

- Abstention from top-level decision making (essentially not voting).

The very idea of compiling such a list only makes sense if we’re talking about the downfall of human systems, or systems managed for the benefit of “us” in some loose sense, but perhaps anthropocentrism is a sin in itself.

I’m sure others can think of more! I’d be interested to hear about them in comments.

Lindzen & Choi critique

A critique of Lindzen & Choi’s 2009 paper has just been published, debunking the notion of strong negative temperature feedback in the tropics. I had noticed that the paper’s statistical method for identifying intervals in a time series was flawed, and that the models cited sometimes appeared to lack volcanic forcings, rendering the correlations meaningless. I’m happy to see those observations confirmed, and a few other problems raised. (I’m happy that I was right, not that climate sensitivity is higher than Lindzen & Choi suggest; their low sensitivity would have been good for the planet.) I haven’t read the critique in detail, so I can’t say whether it really closes the book on the question, but it at least indicates that the original work was a bit sloppy. Since Lindzen is one of the few contrarians who knows what he’s doing, and it’s useful to have such people around, I wish he would focus less on WSJ editorials and more on scholarship.

Dynamic Drinking

Via ScienceDaily,

A large body of social science research has established that students tend to overestimate the amount of alcohol that their peers consume. This overestimation causes many to have misguided views about whether their own behaviour is normal and may contribute to the 1.8 million alcohol related deaths every year. Social norms interventions that provide feedback about own and peer drinking behaviours may help to address these misconceptions.

Erling Moxnes has looked at this problem from a dynamic perspective in Moxnes, E. and L. C. Jensen (in press), “Drunker than intended; misperceptions and information treatments,” Drug and Alcohol Dependence. From an earlier Athens SD conference paper:

Overshooting alcohol intoxication, an experimental study of one cause and two cures

Juveniles becoming overly intoxicated by alcohol is a widespread problem with consequences ranging from hangovers to deaths. Information campaigns to reduce this problem have not been very successful. Here we use a laboratory experiment with high school students to test the hypothesis that overshooting intoxication can follow from a misperception of the delay in alcohol absorption caused by the stomach. Using simulators with a short and a long delay, we find that the longer delay causes a severe overshoot in the blood alcohol concentration. Behaviour is well explained by a simple feedback strategy. Verbal information about the delay does not lead to a significant reduction of the overshoot, while a pre test mouse-simulator experience removes the overshoot. The latter policy helps juveniles lessen undesired consequences of drinking while preserving the perceived positive effects. The next step should be an investigation of simulator experience on real drinking behaviour.
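The hypothesized mechanism, a simple feedback strategy confronting an absorption delay, can be sketched in a few lines. This is not Moxnes and Jensen’s simulator or their estimated decision rule. It assumes a hypothetical drinker who drinks at a rate proportional to the gap between a desired level and the level currently felt in the blood, a first-order stomach delay, slow first-order elimination, time in minutes, and arbitrary units and parameter values.

```python
def peak_blood_alcohol(absorption_delay: float, target: float = 1.0,
                       gain: float = 0.05, elimination_time: float = 600.0,
                       dt: float = 0.25, minutes: float = 240.0) -> float:
    """Peak blood alcohol (arbitrary units) under a simple feedback drinking rule.

    Drinking rate is proportional to the gap between the desired level and the
    level currently felt in the blood; alcohol reaches the blood only after a
    first-order delay in the stomach.
    """
    stomach = 0.0
    blood = 0.0
    peak = 0.0
    for _ in range(round(minutes / dt)):
        drinking = max(0.0, gain * (target - blood))  # drinking can't be negative
        absorption = stomach / absorption_delay        # first-order stomach delay
        elimination = blood / elimination_time         # slow removal from the blood
        stomach += (drinking - absorption) * dt
        blood += (absorption - elimination) * dt
        peak = max(peak, blood)
    return peak


short = peak_blood_alcohol(absorption_delay=2.0)
long_ = peak_blood_alcohol(absorption_delay=30.0)
print(f"short delay peak: {short:.2f}, long delay peak: {long_:.2f}")
```

With the short delay the blood level creeps up toward the target without exceeding it. With the long delay the drinker keeps drinking while alcohol is still sitting in the stomach, so the blood level overshoots the target even though the decision rule is identical.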

Washboard Evolution

Via ScienceDaily,

Just about any road with a loose surface – sand or gravel or snow – develops ripples that make driving a very shaky experience. A team of physicists from Canada, France and the United Kingdom have recreated this “washboard” phenomenon in the lab with surprising results: ripples appear even when the springy suspension of the car and the rolling shape of the wheel are eliminated. The discovery may smooth the way to designing improved suspension systems that eliminate the bumpy ride.

“The hopping of the wheel over the ripples turns out to be mathematically similar to skipping a stone over water,” says University of Toronto physicist, Stephen Morris, a member of the research team.

“To understand the washboard road effect, we tried to find the simplest instance of it,” he explains. “We built lab experiments in which we replaced the wheel with a suspension rolling over a road with a simple inclined plow blade, without any spring or suspension, dragging over a bed of dry sand. Ripples appear when the plow moves above a certain threshold speed.”

“We analyzed this threshold speed theoretically and found a connection to the physics of stone skipping. A skipping stone needs to go above a specific speed in order to develop enough force to be thrown off the surface of the water. A washboarding plow is quite similar; the main difference is that the sandy surface ‘remembers’ its shape on later passes of the blade, amplifying the effect.”