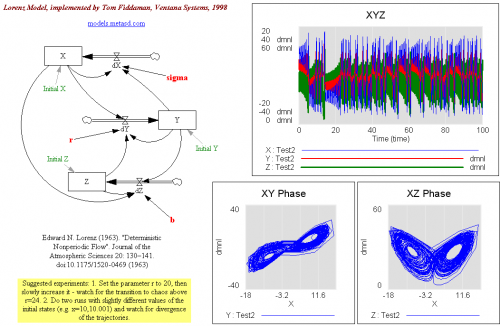

In a continuous-time dynamic model, representing noise as a random draw at every time step can be problematic. As the time step is decreased, the high-frequency power of the noise spectrum increases accordingly, potentially changing the model's behavior. In the limit of small time steps, the resulting white noise has infinite power, which is not physically realistic.

The solution is to use pink noise, which is essentially white noise filtered to cut off high frequencies. SD models from the bad old days typically employed a pink noise generating structure driven by uniformly distributed white noise, relying on the central limit theorem to yield a normally distributed output. Ed Anderson improved that structure to incorporate a normally distributed input, which works better, especially if the cutoff frequency is close to the inverse of the time step.

Two versions of the model are attached. One is for advanced versions of Vensim, which permit implementation as a :MACRO: for efficient reuse; the other works with Vensim PLE.

PinkNoise2010.mdl PinkNoise2010.vmf PinkNoise2010.vpm

PinkNoise2010-PLE.vmf PinkNoise2010-PLE.vpm

Contributed by Ed Anderson, updated by Tom Fiddaman

Notes (also in the model files):

Description: The pink noise molecule described here generates a simple random series with autocorrelation. This is useful in representing time series, like rainfall from day to day, in which today’s value has some correlation with what happened yesterday. This particular formulation will also have properties such as standard deviation and mean that are insensitive both to the time step and the correlation (smoothing) time. Finally, the output as a whole and the difference in values between any two days are guaranteed to be Gaussian (normal) in distribution.

Behavior: Pink noise series will have both a historical and a random component during each period. The relative “trend-to-noise” ratio is controlled by the length of the correlation time. As the correlation time approaches zero, the pink noise output will become more independent of its historical value and more “noisy.” On the other hand, as the correlation time approaches infinity, the pink noise output will approximate a continuous time random walk or Brownian motion. Displayed above are two time series with correlation times of 1 and 8 months. While both series have approximately the same standard deviation, the 1-month correlation time series is less smooth from period to period than the 8-month series, which is characterized by “sustained” swings in a given direction. Note that this behavior will be independent of the time-step. The “pink” in pink noise refers to the power spectrum of the output. A time series in which each period’s observation is independent of the past is characterized by a flat or “white” power spectrum. Smoothing a time series attenuates the higher or “bluer” frequencies of the power spectrum, leaving the lower or “redder” frequencies relatively stronger in the output.

Caveats: This assumes the use of Euler integration with a time step of no more than 1/4 of the correlation time. Very long correlation times should also be avoided, because the multiplication in the scaled white noise becomes progressively less accurate.
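
The scaling behind these caveats can be worked out under one assumption that the notes above do not state explicitly: that the molecule is a first-order smooth of scaled white noise $w_n$, integrated by Euler with time step $\Delta t$ and correlation time $\tau$. The symbols $x_n$, $w_n$, $a$, and $\sigma_w$ are introduced here for illustration and are not names from the model files.

$$
x_{n+1} = x_n + \frac{\Delta t}{\tau}\,(w_n - x_n), \qquad a = \frac{\Delta t}{\tau}
$$

If the draws $w_n$ are independent with variance $\sigma_w^2$, the stationary variance of the output satisfies

$$
\operatorname{Var}(x) = (1-a)^2 \operatorname{Var}(x) + a^2 \sigma_w^2
\quad\Longrightarrow\quad
\operatorname{Var}(x) = \frac{a}{2-a}\,\sigma_w^2 .
$$

Requiring $\operatorname{Var}(x) = \sigma^2$, the desired standard deviation squared, gives the scale of the white noise input:

$$
\sigma_w = \sigma \sqrt{\frac{2-a}{a}} .
$$

Under this reading, $\sigma_w$ grows roughly as $\sqrt{2\tau/\Delta t}$ when the correlation time is long, so very large draws are multiplied by a very small $a$ at each step, which is plausibly the loss of accuracy the caveat warns about.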

Technical Notes: This particular form of pink noise is superior to that of Britting presented in Richardson and Pugh (1981) because the Gaussian (Normal) distribution of the output does not depend on the Central Limit Theorem. (Dynamo did not have a Gaussian random number generator and hence R&P had to invoke the CLT to get a normal distribution.) Rather, this molecule’s normal output is a result of the observations being a sum of Gaussian draws. Hence, the series over short intervals should better approximate normality than the macro in R&P.

MEAN: This is the desired mean for the pink noise.

STD DEVIATION: This is the desired standard deviation for the pink noise.

CORRELATION TIME: This is the smooth time for the noise, or for the more technically minded, the inverse of the filter’s cut-off frequency in radians per unit time.
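
For readers without Vensim, the following Python sketch shows how the three parameters above might combine. It is not a transcription of the attached model files: it assumes the molecule is a first-order smooth of Gaussian white noise integrated by Euler, with the white noise scaled by `sqrt((2 - dt/tau) / (dt/tau))` so that the output standard deviation does not depend on the time step. The function name `pink_noise`, its arguments, and the choice to start the stock at the mean are all hypothetical.

```python
import math
import random


def pink_noise(mean, std_dev, corr_time, dt, n_steps, seed=0):
    """Return n_steps values of Euler-integrated, first-order filtered Gaussian noise.

    Assumed structure (not copied from the .mdl files):
        scaled white noise = mean + std_dev * sqrt((2 - a) / a) * N(0, 1), a = dt / corr_time
        d(pink noise)/dt   = (scaled white noise - pink noise) / corr_time
    """
    if dt > corr_time / 4:
        # Caveat from the notes: time step no more than 1/4 of the correlation time.
        raise ValueError("dt should be at most 1/4 of the correlation time")

    rng = random.Random(seed)
    a = dt / corr_time
    scale = std_dev * math.sqrt((2.0 - a) / a)

    level = mean  # assumption: the stock is initialised at the mean
    series = []
    for _ in range(n_steps):
        series.append(level)
        scaled_white = mean + scale * rng.gauss(0.0, 1.0)
        level += dt * (scaled_white - level) / corr_time  # Euler step
    return series


if __name__ == "__main__":
    # Two correlation times, same mean and standard deviation.
    short = pink_noise(mean=0.0, std_dev=1.0, corr_time=1.0, dt=0.125, n_steps=800)
    long_ = pink_noise(mean=0.0, std_dev=1.0, corr_time=8.0, dt=0.125, n_steps=800)
    print(short[:5])
    print(long_[:5])
```

Because the series starts exactly at the mean, the first few correlation times are a transient with less spread than the requested standard deviation; long runs should settle near it.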