Roger Pielke’s blog has an interesting guest post by Ryan Meyer, reporting on a paper that questions the meaning of claims about the robustness of conclusions from multiple models. From the abstract:

Climate modelers often use agreement among multiple general circulation models (GCMs) as a source of confidence in the accuracy of model projections. However, the significance of model agreement depends on how independent the models are from one another. The climate science literature does not address this. GCMs are independent of, and interdependent on one another, in different ways and degrees. Addressing the issue of model independence is crucial in explaining why agreement between models should boost confidence that their results have basis in reality.

Later in the paper, they outline the philosophy as follows:

In a rough survey of the contents of six leading climate journals since 1990, we found 118 articles in which the authors relied on the concept of agreement between models to inspire confidence in their results. The implied logic seems intuitive: if multiple models agree on a projection, the result is more likely to be correct than if the result comes from only one model, or if many models disagree. … this logic only holds if the models under consideration are independent from one another. … using multiple models to analyze the same system is a ‘‘robustness’’ strategy. Every model has its own assumptions and simplifications that make it literally false in the sense that the modeler knows that his or her mathematics do not describe the world with strict accuracy. When multiple independent models agree, however, their shared conclusion is more likely to be true.

I think they’re barking up the right tree, but one important clarification is in order. We don’t actually care about the independence of models per se. In fact, if we had an ensemble of perfect models, they’d necessarily be identical. What we really want is for the models to be right. To the extent that we can’t be right, we’d at least like to have independent systematic errors, so that (a) there’s some chance that mistakes average out and (b) there’s an opportunity to diagnose the differences.
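To make point (a) concrete, here is a minimal sketch of why independent errors can average out while shared ones cannot. Everything in it is an illustrative assumption rather than anything from the paper: the function names are hypothetical, each model's error is a shared bias plus Gaussian noise, and the numbers are arbitrary.

```python
import random

def ensemble_mean_error(n_models, shared_bias, noise_sd, rng):
    """Error of the ensemble mean when each model's error is
    a bias common to all models plus its own independent noise."""
    errors = [shared_bias + rng.gauss(0.0, noise_sd) for _ in range(n_models)]
    return sum(errors) / len(errors)

def rms_ensemble_error(n_models, shared_bias, noise_sd, trials=2000, seed=0):
    """Root-mean-square error of the ensemble mean over many simulated ensembles."""
    rng = random.Random(seed)  # fixed seed so runs are repeatable
    squared = [
        ensemble_mean_error(n_models, shared_bias, noise_sd, rng) ** 2
        for _ in range(trials)
    ]
    return (sum(squared) / trials) ** 0.5

if __name__ == "__main__":
    for n in (1, 4, 16):
        # Case 1: errors are purely independent (no shared bias).
        independent = rms_ensemble_error(n, shared_bias=0.0, noise_sd=1.0)
        # Case 2: every model makes the same mistake (no independent part).
        shared = rms_ensemble_error(n, shared_bias=1.0, noise_sd=0.0)
        print(f"n={n:2d}  independent RMS={independent:.3f}  shared RMS={shared:.3f}")
```

Under these assumptions, the independent case shrinks roughly as one over the square root of the ensemble size, while the shared case stays put no matter how many models agree.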

For example, consider three models of gravity, of the form F=G*m1*m2/r^b. We’d prefer an ensemble of models with b = {1.9,2.0,2.1} to one with b = {1,2,3}, even though some metrics of independence (such as the state space divergence cited in the paper) would indicate that the first ensemble is less independent than the second. This means that there’s a tradeoff: if b is a hidden parameter, it’s harder to discover problems with the narrow ensemble, but harder to get good answers out of the dispersed ensemble, because its members are more wrong.
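The gravity ensembles can be sketched in a few lines, assuming the true exponent is b = 2 and unit masses. The names `force` and `ensemble_stats` are hypothetical, and the "spread" here is just the max-minus-min range of predictions relative to the truth, a crude stand-in of my own rather than the state space divergence used in the paper.

```python
G = 6.674e-11  # gravitational constant in SI units

def force(r, b, m1=1.0, m2=1.0):
    """Model of the form F = G*m1*m2 / r^b."""
    return G * m1 * m2 / r ** b

def ensemble_stats(exponents, r, true_b=2.0):
    """Return (relative error of the ensemble mean, relative spread of members)
    at separation r, measured against the assumed true exponent."""
    truth = force(r, true_b)
    predictions = [force(r, b) for b in exponents]
    mean = sum(predictions) / len(predictions)
    rel_error = abs(mean - truth) / truth
    rel_spread = (max(predictions) - min(predictions)) / truth
    return rel_error, rel_spread

narrow = [1.9, 2.0, 2.1]
dispersed = [1.0, 2.0, 3.0]

if __name__ == "__main__":
    for r in (1.0, 2.0, 10.0):
        for name, ensemble in (("narrow", narrow), ("dispersed", dispersed)):
            err, spread = ensemble_stats(ensemble, r)
            print(f"r={r:5.1f}  {name:9s}  error={err:.3f}  spread={spread:.3f}")
```

At r = 1 every member of both ensembles gives the same answer, so b is completely hidden. As r moves away from 1, the dispersed ensemble shows a large spread (easy to diagnose) and a large error in its mean, while the narrow ensemble shows a small spread and a small error.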

For climate models, ensembles provide some opportunity to discover systematic errors from numerical schemes, parameterization of poorly understood sub-grid-scale phenomena, and program bugs, to the extent that models rely on different codes and approaches. As in my gravity example, differences would be revealed more readily by large perturbations, but I’ve never seen extreme-conditions tests on GCMs (although I understand that GCMs at least share a lot with models used to simulate other planets). I’d like to see more of that, plus an inventory of the major subsystems of GCMs and the extent to which they use different codes.

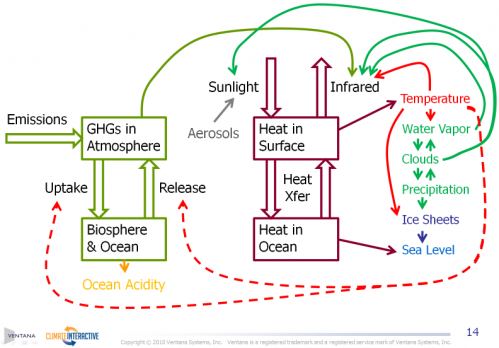

While GCMs are essentially the only source of regional predictions, which are a focus of the paper, it’s important to realize that GCMs are not the only evidence for the notion that climate sensitivity is nontrivial. For that, there are also simple energy balance models and paleoclimate data. That means that there are at least three lines of evidence (GCMs, energy balance models, and paleoclimate data), much more independent of one another than the members of a GCM ensemble, backing up the idea that greenhouse gases matter.

It’s interesting that this critique comes up with reference to GCMs, because it’s actually not GCMs we should worry most about. For climate models, there are vague worries about systematic errors in cloud parameterization and other phenomena, but there’s no strong a priori reason, other than Murphy’s Law, to think that they are a problem. Economic models in the climate policy space, on the other hand, nearly all embody notions of economic equilibrium and foresight which we can be pretty certain are wrong, perhaps spectacularly so. That’s what we should be worrying about.