There’s been a long-running debate about nuclear safety, which boils down to one question: what’s the probability of significant radiation exposure? That in turn has much to do with the probability of core meltdowns and other consequential events that could release radioactive material.

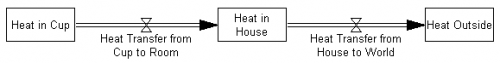

I asked my kids about an analogy to the problem: determining whether a die was fair. They concluded that it ought to be possible to simply roll the die enough times to observe whether the outcome was fair. Then I asked them how that would work for rare events – a thousand-sided die, for example. No one wanted to roll the dice that much, but they quickly hit on the alternative: use a computer. But then, they wondered, how do you know if the computer model is any good?

Those are basically the choices for nuclear safety estimation: observe real plants (slow, expensive), or use models of plants.
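The kids' thought experiment is easy to try. Here is a minimal sketch in Python, assuming a perfectly fair simulated die; the function name `estimate_face_probability` and the roll counts are my own illustrative choices. It shows how slowly the observed frequency of a single face settles down when that face is rare.

```python
import random


def estimate_face_probability(sides, rolls, face=1, seed=0):
    """Roll a simulated fair die `rolls` times; return the observed frequency of `face`."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    hits = sum(1 for _ in range(rolls) if rng.randint(1, sides) == face)
    return hits / rolls


if __name__ == "__main__":
    sides = 1000  # the thousand-sided die from the conversation
    for rolls in (100, 10_000, 200_000):
        est = estimate_face_probability(sides, rolls)
        print(f"{rolls:>7} rolls: observed {est:.5f}, fair value {1 / sides:.5f}")
```

With only 100 rolls, a given face of a thousand-sided die will most likely never show up at all, so the observed frequency says almost nothing. And of course this only tests the simulated die, which is fair by construction; it doesn't answer the question of whether the model matches the real thing.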

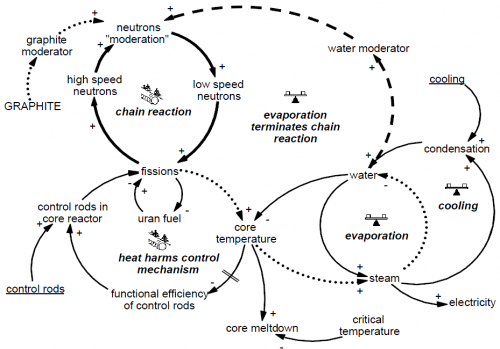

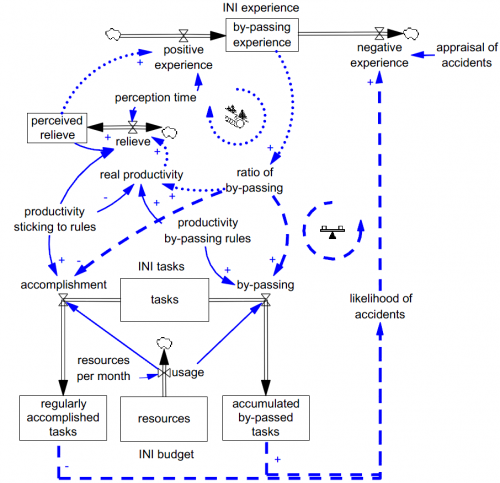

If you go the model route, you introduce an additional layer of uncertainty, because you have to validate the model, which in itself is difficult. It’s easy to misjudge reactor safety by doing six things:

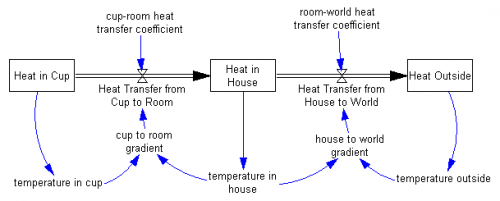

- Ignore the dynamics of the problem. For example, use a statistical model that doesn’t capture feedback. Presumably there have been a number of reinforcing feedbacks operating at the Fukushima site, causing spillovers from one system to another, or one plant to another:

  - Collateral damage (catastrophic failure of part A damages part B)

  - Contamination (radiation spewed from one reactor makes it unsafe to work on others)

  - Exhaustion of common resources (operators, boron)

- Ignore the covariance matrix. This can arise in part from ignoring the dynamics above. But there are other possibilities as well: common design elements, or colocation of reactors, that render failure events non-independent.

- Model an idealized design, not a real plant: ignore components that don’t perform to spec, nonlinearities in responses to extreme conditions, and operator error.

- Draw a narrow boundary around the problem. Over the last week, many commentators have noted that reactor containment structures are very robust, and explicitly designed to prevent a major radiation release from a worst-case core meltdown. However, that ignores spent fuel stored outside of containment, which is apparently a big part of the Fukushima hazard now.

- Ignore the passage of time. This can both help and hurt: newer reactor designs should benefit from learning about problems with older ones; newer designs might introduce new problems; life extension of old reactors introduces its own set of engineering issues (like neutron embrittlement of materials).

- Ignore the unknown unknowns (easy to say, hard to avoid).
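To see how much the covariance point in the list above can matter, here is a rough sketch in Python. It is loosely in the spirit of a beta-factor common-cause model, not a real plant analysis: the function names, the per-unit failure probability `p`, and the shared-cause fraction `beta` are all hypothetical values chosen for illustration.

```python
def p_all_fail_independent(p, n):
    """Probability that all n units fail if failures are fully independent."""
    return p ** n


def p_all_fail_common_cause(p, n, beta):
    """Probability that all n units fail when a fraction `beta` of each unit's
    failure probability `p` comes from one shared cause that takes out every unit.

    The remaining failures are treated as independent, with the per-unit
    probability adjusted so each unit's total failure probability stays at p.
    """
    c = beta * p  # probability of the shared (common-cause) event
    if c >= 1.0:
        return 1.0
    q = (p - c) / (1.0 - c)  # independent part, keeping the marginal equal to p
    return c + (1.0 - c) * q ** n


if __name__ == "__main__":
    p, n, beta = 1e-3, 2, 0.1  # hypothetical illustration values
    print("independent: ", p_all_fail_independent(p, n))
    print("common cause:", p_all_fail_common_cause(p, n, beta))
```

Under these made-up numbers, letting just a tenth of each unit's risk come from a shared cause raises the chance of a double failure by roughly two orders of magnitude over the independence assumption.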

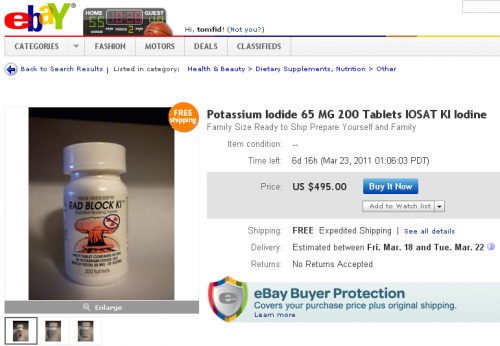

I haven’t read much of the safety literature, so I can’t say to what extent the above issues apply to existing risk analyses based on statistical models or detailed plant simulation codes. However, I do see a bit of a disconnect between actual performance and risk numbers that are often bandied about from such studies: the canonical risk of 1 meltdown per 10,000 reactor years, and other even smaller probabilities on the order of 1 per 100,000 or 1,000,000 reactor years.

I built myself a little model to assess the data, using WNA data to estimate reactor-years of operation and a wiki list of accidents. One could argue at length about which accidents should be included. Only light water reactors? Only modern designs? I tend to favor a liberal policy for including accidents. As soon as you start coming up with excuses to exclude things, you’re headed toward an idealized world view, where operators are always faithful, plants are always shiny and new, or at least retired on schedule, etc. Still, I was a bit conservative: I counted 7 partial or total meltdown accidents in commercial or at least quasi-commercial reactors, including Santa Susana, Fermi, TMI, Chernobyl, and Fukushima (I think I missed Chapelcross). Then I looked at maximum likelihood estimates of meltdown frequency over various intervals. Using all the data, and assuming Poisson arrivals of meltdowns, you get 0.6 failures per thousand reactor-years (95% confidence interval 0.3 to 1). That’s up from 0.4 [0.1, 0.8] before Fukushima. Even if you exclude the early incidents and Fukushima, you’re looking at 0.2 [0.04, 0.6] meltdowns per thousand reactor-years – twice the 1-per-10,000 target. For the different subsets of the data, the estimates translate to an expected meltdown frequency of about once to thrice per decade, assuming continuing operations of about 450 reactors. That seems pretty bad.
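For anyone who wants to play with this kind of estimate, here is a minimal sketch in Python using only the standard library. It is not the model described above. The reactor-years figure is a hypothetical placeholder rather than the WNA number, and the interval is an exact Poisson interval found by bisection, which may differ from the method behind the intervals quoted here. All function names are my own.

```python
import math


def poisson_cdf(k, mu):
    """P(N <= k) for N ~ Poisson(mu)."""
    if k < 0:
        return 0.0
    term = math.exp(-mu)
    total = term
    for i in range(1, k + 1):
        term *= mu / i
        total += term
    return total


def solve_decreasing(f, lo, hi, iters=200):
    """Bisection for the root of a function that decreases from positive to negative."""
    for _ in range(iters):
        mid = (lo + hi) / 2.0
        if f(mid) > 0:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2.0


def poisson_rate_interval(events, exposure, conf=0.95):
    """Return (mle, lower, upper) for a Poisson rate per unit of exposure."""
    alpha = 1.0 - conf
    top = events + 10.0 * math.sqrt(events + 1.0) + 10.0  # generous search bound
    if events == 0:
        lower_mu = 0.0
    else:
        lower_mu = solve_decreasing(
            lambda mu: poisson_cdf(events - 1, mu) - (1.0 - alpha / 2.0), 0.0, top)
    upper_mu = solve_decreasing(
        lambda mu: poisson_cdf(events, mu) - alpha / 2.0, 0.0, top)
    return events / exposure, lower_mu / exposure, upper_mu / exposure


def expected_events(rate_per_reactor_year, reactors=450, years=10):
    """Expected number of events for a fleet of `reactors` operating for `years`."""
    return rate_per_reactor_year * reactors * years


if __name__ == "__main__":
    HYPOTHETICAL_REACTOR_YEARS = 12_000  # placeholder, NOT the WNA figure
    mle, lo, hi = poisson_rate_interval(7, HYPOTHETICAL_REACTOR_YEARS)
    print(f"per 1000 reactor-years: {1000 * mle:.2f} [{1000 * lo:.2f}, {1000 * hi:.2f}]")
    for rate in (0.2e-3, 0.6e-3):  # the point estimates quoted above
        print(f"{1000 * rate:.1f} per 1000 reactor-years -> "
              f"{expected_events(rate):.1f} expected per decade")
```

The last loop is just the arithmetic behind "once to thrice per decade": the rate per reactor-year times about 450 reactors times 10 years.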

In other words, the actual experience of rolling the dice seems to be yielding a riskier outcome than risk models suggest. One could argue that most of the failing reactors were old, built long ago, or poorly designed. Maybe so, but will we ever have a fleet of young reactors, designed and operated by demigods? That’s not likely, but surely things will get somewhat better with the march of technology. So, the question is, how much better? Areva’s 10x improvement seems inadequate if it’s measured against the performance of existing plants, at least if we plan to grow the plant fleet by much more than a factor of 10 to replace fossil fuels. There are newer designs around, but they depart from the evolutionary path of light water reactors, which means that “past performance is no indication of future returns” applies – will greater passive safety outweigh the effects of jumping to a new, less mature safety learning curve?

It seems to me that we need models of plant safety that square with the actual operational history of plants, to reconcile projected risk with real-world risk experience. If engineers promote analysis that appears unjustifiably optimistic, the public will do what it always does: discount the results of formal models, in favor of mental models that may be informed by superstition and visions of mushroom clouds.