Cap & Trade is suspended in Europe and dead in the US, and the techno delusion may not be far behind. Some strange bedfellows have lined up behind the idea of R&D-driven climate policy. But now it appears that clean energy research is not a bipartisan no-brainer after all. Energy committee member Rand Paul’s bill would not only cut energy R&D funding by eliminating DOE altogether, it would cut our ability to even monitor the global environment by gutting NOAA and NASA. That only leaves one option:

13 In the otherwise dull year 2327, mankind successfully contacts aliens. Well, technically their answering machine, as the aliens themselves have gone to Alpha Centauri for the summer.

14 Desperate for help, humans leave increasingly stalker-y messages, turning off the aliens with how clingy our species is.

15 The aliens finally agree to equip Earth with a set of planet-saving carbon neutralizers, but work drags on as key parts must be ordered from a foreign supplier in the Small Magellanic Cloud.

16 The job comes in $3.7 quadrillion above estimate. Humanity thinks it is being taken advantage of but isn’t sure.

Seriously, where does that leave us? In terms of what we should do, I don’t think much has changed. As I wrote a while back, the climate policy table needs four legs:

- Prices

- Technology (the landscape of possibilities on which we make decisions)

- Institutional rules and procedures

- Preferences, operating within social networks

Preferences and technology are really the fundamentals among the four. Technology represents the set of options available to us for transforming energy and resources into life and play. Preferences guide how we choose among those options. Prices and rules are really just the information signals that allow us to coordinate those decisions.

However, neither preferences nor technology are as fundamental as they look. Models generally take preferences as a given, but in fact they’re endogenous. What we want on a day-to-day basis is far removed from our most existential needs. Instead, we construct preferences on the basis of technologies we know about, prices, rules, and the preferences and choices of others. That creates norms, fads, marketing, keeping-up-with-the-Joneses and other positive feedback mechanisms. Similarly, technology is more than discovery of principles and invention of devices. Those innovations don’t do anything until they’re woven into the fabric of society, guided by (you guessed it) prices, institutions, and preferences. That creates more positive feedbacks, like the chicken-egg problems of alternative fuel vehicle deployment.

If we could all get up in the morning and work out in our heads how to make Pareto-efficient decisions, we might not need prices and institutions, but we can’t, so we do. Prices matter because they’re a primary carrier of information through the economy. Not every decision is overtly economic, so we also have institutions, rules and routinized procedures to guide behavior. The key is that these signals should serve our values (the deeply held ones we’d articulate upon reflection, which might differ from the preferences revealed by transactions), not the other way around.

Preferences clearly can have a lot of direct leverage on behavior – if we all equated driving a big gas guzzler with breaking wind in a crowded elevator, we’d probably see different cars on the lot. However, most decisions are not so transparent. It’s already hard to choose “paper or plastic?” How about “desktop or server?” When you add multiple layers of supply chain and varied national origins to the picture, it becomes very hard to create a green information system paralleling the price system. It’s probably even harder to get individuals and firms to conform to such a system, when there are overwhelming evolutionary rewards to defection. Borrowing from Giraudoux, the secret to success is sustainability; once you can fake that, you’ve got it made.

Similarly, the sheer complexity of society makes it hard to predict which technologies constitute a winning combination for creating low-carbon happiness. A technology-led strategy runs the risk of failing in the attempt to recreate a high-carbon lifestyle with low-carbon inputs. I don’t think anyone has the foresight to select that portfolio. Even if we could do it, there’s no guarantee that, absent other signals, new technologies will be put to their intended uses, or that they will survive the “valley of death” between R&D and commercialization. It’s like airdropping a tyrannosaurus into an arctic ecosystem – sure, he’s got big teeth, but will he survive?

Complexity also militates against a rules-led approach. It’s simply too cumbersome to codify a rich set of tradeoffs in command-and-control regulations, which can become an impediment to innovation and are subject to regulatory capture. Also, systems like the CAFE standard create shadow prices of compliance, rather than explicit prices. This makes it hard to diagnose the effects of constraints and to coordinate them with other policies. There’s a niche for rules, but they shouldn’t be the big stick (on the other hand, eliminating the legacy of some past measures could be a win-win).

That’s why emissions pricing is really a keystone policy. Once you have prices aligned with the long-term value of a stable climate (and other resources), it’s easier to align the other legs of the table. Emissions prices create huge incentives for private R&D, leaving a smaller gap for government to fill – just the market failures in appropriation of benefits of technology. The points of pain where institutions are inadequate, or stand in the way of progress, will be more evident and easier to correct, and there will be less burden on policy-making institutions, because they won’t have to coordinate many small programs to do the job of one big signal. Preferences will start evolving in a low-carbon direction, with rewards to those who (through luck or altruism) have already done so. Most importantly, emissions pricing gets some changes moving now, not after a decade or two of delay.

Concretely, I still think an upstream, revenue-neutral carbon tax is a practical implementation route. If there’s critical mass among trade partners, it could even evolve into a harmonized global system through the pressure of border carbon adjustments. The question is, how to get started?

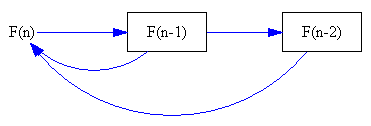

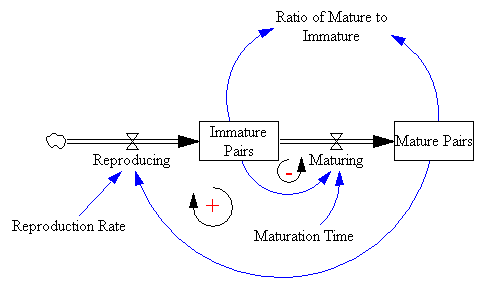

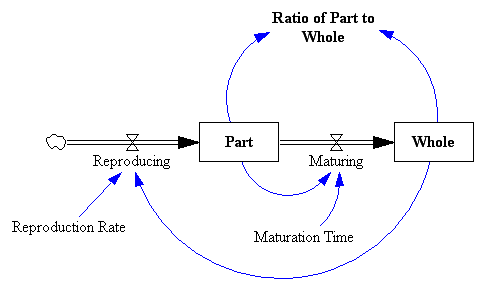

However, that representation is a little too abstract to immediately reveal the connection to rabbits. Instead, I prefer to revert to Fibonacci’s problem description to construct an operational representation:

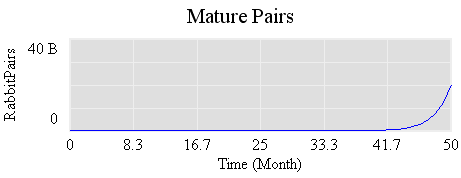

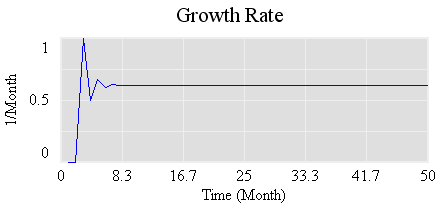

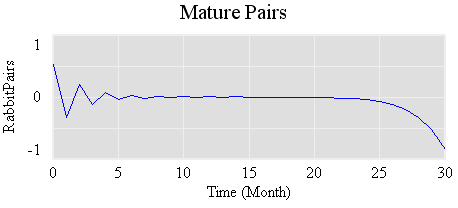

Its steady-state value is .61803… (61.8%/month), which is the
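
A rough way to check that figure is to step Fibonacci's rabbit problem forward month by month. The sketch below is an assumption-laden stand-in for the model in the diagrams, not a copy of it: it uses discrete monthly steps, two hypothetical stocks (`immature` and `mature` pairs), no deaths, and one new pair per mature pair per month. The function names are mine.

```python
def simulate_rabbits(months, immature=1, mature=0):
    """Step the rabbit population forward one month at a time.

    Assumptions (illustrative, not taken from the original model):
    immature pairs mature after one month, every mature pair produces
    one new pair per month, and no rabbits die.
    """
    history = [(immature, mature)]
    for _ in range(months):
        births = mature        # one new pair per mature pair
        maturing = immature    # all immature pairs mature this month
        immature, mature = births, mature + maturing
        history.append((immature, mature))
    return history


def fractional_growth_rates(totals):
    """Month-over-month fractional growth of the total population."""
    return [(after - before) / before
            for before, after in zip(totals, totals[1:])]


history = simulate_rabbits(30)
totals = [immature + mature for immature, mature in history]
rates = fractional_growth_rates(totals)

print(totals[:8])           # the familiar Fibonacci numbers
print(round(rates[-1], 5))  # settles near 0.61803 per month
```

Under these assumptions the total number of pairs traces out the Fibonacci sequence, and its fractional growth rate oscillates at first and then settles at about 0.618 per month.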

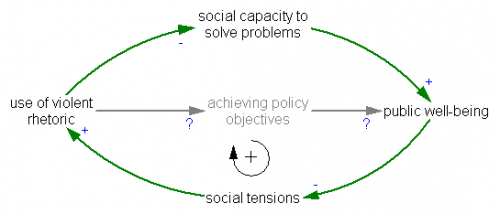

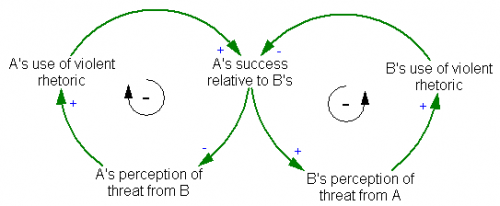

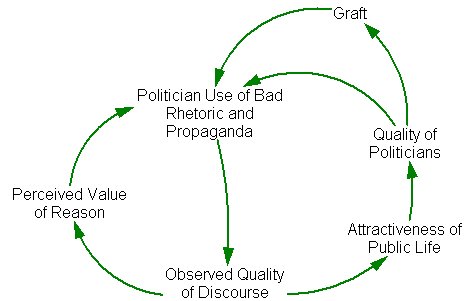

In the escalation archetype, two sides struggle to maintain an advantage over each other. This creates two inner negative feedback loops, which together create a positive feedback loop (a figure-8 around the two negative loops). It’s interesting to note that, so far, the use of violent rhetoric is fairly one-sided – the escalation is happening within the political right (candidates vying for attention?) more than between left and right.
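
To make the loop structure concrete, here is a minimal sketch of the archetype. Everything in it is hypothetical: the two sides `a` and `b`, the `desired_advantage` ratio, and the `adjustment` fraction are illustrative choices, not parameters from any model discussed here. Each side closes part of the gap between its own level and the advantage it wants over the other, which is a goal-seeking (negative) loop on its own.

```python
def simulate_escalation(steps, desired_advantage=1.2, adjustment=0.5,
                        a=1.0, b=1.0, b_responds=True):
    """Two sides each adjust toward a desired advantage over the other.

    All parameter values are illustrative assumptions. With
    b_responds=False, side b holds still, which isolates side a's
    goal-seeking loop.
    """
    history = [(a, b)]
    for _ in range(steps):
        # Gap between the level each side wants and the level it has.
        gap_a = desired_advantage * b - a
        gap_b = desired_advantage * a - b if b_responds else 0.0
        # Both sides close a fraction of their gap simultaneously.
        a, b = a + adjustment * gap_a, b + adjustment * gap_b
        history.append((a, b))
    return history


one_sided = simulate_escalation(20, b_responds=False)
two_sided = simulate_escalation(20)

print(one_sided[-1])  # a levels off near 1.2 while b stays at 1.0
print(two_sided[-1])  # both sides keep growing, with no equilibrium
```

When only one side responds, it settles at its target. When both respond and both want an advantage greater than parity, neither target can be met at once, so the pair of negative loops behaves like a single positive loop and both levels grow without limit.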

The positive feedbacks around violent rhetoric create a societal trap, from which it may be difficult to extricate ourselves. If there’s a general systems insight about vicious cycles, it’s that the best policy is prevention – just don’t start down that road (if you doubt this, play the