Mengers & Sirelli call for systems thinking in the nuclear industry in IEEE Xplore:

Need for Change Towards Systems Thinking in the U.S. Nuclear Industry

Until recently, nuclear has been largely considered as an established power source with no need for new developments in its generation and the management of its power plants. However, this idea is rapidly changing due to reasons discussed in this study. Many U.S. nuclear power plants are receiving life extensions decades beyond their originally planned lives, which requires the consideration of new risks and uncertainties. This research first investigates those potential risks and sheds light on how nuclear utilities perceive and plan for these risks. After that, it examines the need for systems thinking for extended operation of nuclear reactors in the U.S. Finally, it concludes that U.S. nuclear power plants are good examples of systems in need of change from a traditional managerial view to a systems approach.

In this talk from the MIT SDM conference, NRC commissioner George Apostolakis shows he's already thinking in systems terms:

Systems Issues in Nuclear Reactor Safety

This presentation will address the important role system modeling has played in meeting the Nuclear Regulatory Commission’s expectation that the risks from nuclear power plants should not be a significant addition to other societal risks. Nuclear power plants are designed to be fundamentally safe due to diverse and redundant barriers to prevent radiation exposure to the public and the environment. A summary of the evolution of probabilistic risk assessment of commercial nuclear power systems will be presented. The summary will begin with the landmark Reactor Safety Study performed in 1975 and continue up to the risk-informed Reactor Oversight Process. Topics will include risk-informed decision making, risk assessment limitations, the philosophy of defense-in-depth, importance measures, regulatory approaches to handling procedural and human errors, and the influence of safety culture as the next level of nuclear power safety performance improvement.

The presentation is interesting, in that it’s about 20% engineering and 80% human factors. Figuring out how people interact with a really complicated control system is a big challenge.

This thesis looks like an example of what Apostolakis is talking about:

Perfect plant operation with high safety and economic performance is based on both good physical design and successful organization. However, in comparison with the affection that has been paid to technology research, the effort that has been exerted to enhance NPP management and organization, namely human performance, seems pale and insufficient. There is a need to identify and assess aspects of human performance that are predictive of plant safety and performance and to develop models and measures of these performance aspects that can be used for operation policy evaluation, problem diagnosis, and risk-informed regulation. The challenge of this research is that: an NPP is a system that is comprised of human and physics subsystems. Every human department includes different functional workers, supervisors, and managers; while every physical component can be in normal status, failure status, or a being-repaired status. Thus, an NPP’s situation can be expressed as a time-dependent function of the interactions among a large number of system elements. The interactions between these components are often non-linear and coupled, sometime there are direct or indirect, negative or positive feedbacks, and hence a small interference input either can be suppressed or can be amplified and may result in a severe accident finally. This research expanded ORSIM (Nuclear Power Plant Operations and Risk Simulator) model, which is a quantitative computer model built by system dynamics methodology, on human reliability aspect and used it to predict the dynamic behavior of NPP human performance, analyze the contribution of a single operation activity to the plant performance under different circumstances, diagnose and prevent fault triggers from the operational point of view, and identify good experience and policies in the operation of NPPs.

The cool thing about this, from my perspective, is that it’s a blend of plant control with classic SD maintenance project management. It looks at the plant as a bunch of backlogs to be managed, and defines instability as a circumstance in which the rate of creation of new work exceeds the capacity to perform tasks. This is made operational through explicit work and personnel stocks, right down to the matter of who’s in charge of the control room. Advisor Michael Golay has written previously about SD in the nuclear industry.
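To make the backlog view of instability concrete, here's a minimal sketch of a single work stock. This is not ORSIM; the function name, the first-order completion rule, and all the numbers are my own assumptions for illustration. Work arrives at a constant rate and gets completed at the lesser of staff capacity and what's available in the backlog.

```python
def simulate_backlog(arrival_rate, capacity, initial_backlog=0.0,
                     min_delay=1.0, dt=0.25, weeks=52):
    """Euler-integrate one work backlog (tasks) over time (weeks).

    Hypothetical structure, not taken from ORSIM:
      completion = min(capacity, backlog / min_delay)
      d(backlog)/dt = arrival_rate - completion
    """
    backlog = initial_backlog
    history = [backlog]
    for _ in range(int(weeks / dt)):
        # Staff can't finish work faster than capacity allows,
        # nor faster than the backlog can supply tasks.
        completion = min(capacity, backlog / min_delay)
        backlog += dt * (arrival_rate - completion)
        history.append(backlog)
    return history


# Work creation below capacity: the backlog settles at a steady level.
stable = simulate_backlog(arrival_rate=80, capacity=100)

# Work creation above capacity: the backlog grows without bound.
unstable = simulate_backlog(arrival_rate=120, capacity=100)

print(f"stable final backlog:   {stable[-1]:.1f} tasks")
print(f"unstable final backlog: {unstable[-1]:.1f} tasks")
```

In the second run nothing ever brings the backlog back down, which is the sense of instability described above. A fuller model would add the feedbacks that make this dangerous, such as a growing backlog degrading staff productivity or generating new defects.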

Others in the SD community have looked at some of the “outer loops” operating around the plant, using group model building. Not surprisingly, this yields multiple perspectives and some counterintuitive insights – for example:

Regulatory oversight was initially and logically believed by the group to be independent of the organization and its activities. It was therefore identified as a policy variable.

However in constructing the very first model at the workshop it became apparent that for the event and system under investigation the degree of oversight was influenced by the number of event reports (notifications to the regulator of abnormal occurrences or substandard conditions) the organization was producing. …

The top loop demonstrates the reinforcing effect of a good safety culture, as it encourages compliance, decreases the normalisation of unauthorised changes, therefore increasing vigilance for any outlining unauthorised deviations from approved actions and behaviours, strengthening the safety culture. Or if the opposite is the case an erosion of the safety culture results in unauthorised changes becoming accepted as the norm, this normalisation disguises the inherent danger in deviating from the approved process. Vigilance to these unauthorised deviations and the associated potential risks decreases, reinforcing the decline of the safety culture by reducing the means by which it is thought to increase. This is however balanced by the paradoxical notion set up by the feedback loop involving oversight. As safety improves, the number of reportable events, and therefore reported events can decrease. The paradoxical behaviour is induced if the regulator perceives this lack of event reports as an indication that the system is safe, and reduces the degree of oversight it provides.
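A toy sketch of the two loops described in that passage, assuming made-up functional forms and parameters (this is not the workshop's model): safety culture reinforces itself, while oversight either stays fixed as a policy variable or tracks the flow of event reports, which falls as culture improves.

```python
def simulate_culture(endogenous_oversight, culture=0.6, oversight=0.8,
                     a=0.5, b=1.0, tau=1.0, dt=0.05, years=40):
    """Euler-integrate safety culture and regulatory oversight, both on a 0..1 scale.

    Hypothetical equations, chosen only to illustrate the loop structure:
      d(culture)/dt   = culture*(1-culture) * (a*(culture-0.5) + b*(oversight-0.5))
      d(oversight)/dt = (reports - oversight) / tau,  with reports = 1 - culture
    If endogenous_oversight is False, oversight stays at its initial value.
    """
    for _ in range(int(years / dt)):
        # Reinforcing loop: culture above 0.5 strengthens itself.
        # Oversight above 0.5 also supports culture; below 0.5 it erodes it.
        d_culture = culture * (1 - culture) * (
            a * (culture - 0.5) + b * (oversight - 0.5))
        if endogenous_oversight:
            # Balancing loop: fewer event reports lead to less oversight.
            reports = 1.0 - culture
            d_oversight = (reports - oversight) / tau
        else:
            d_oversight = 0.0
        culture += dt * d_culture
        oversight += dt * d_oversight
    return culture, oversight


fixed_culture, _ = simulate_culture(endogenous_oversight=False)
endo_culture, endo_oversight = simulate_culture(endogenous_oversight=True)

print(f"oversight as fixed policy: culture ends at {fixed_culture:.2f}")
print(f"oversight follows reports: culture ends at {endo_culture:.2f}")
```

With these assumed parameters, treating oversight as an independent policy lever lets culture climb toward its ceiling, while letting oversight respond to event reports pulls the system back toward a mediocre middle. The specific numbers mean nothing; the point is only that closing the oversight loop changes the qualitative behavior.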

Tsuchiya et al. reinforce the idea that change management can be part of the problem as well as part of the solution:

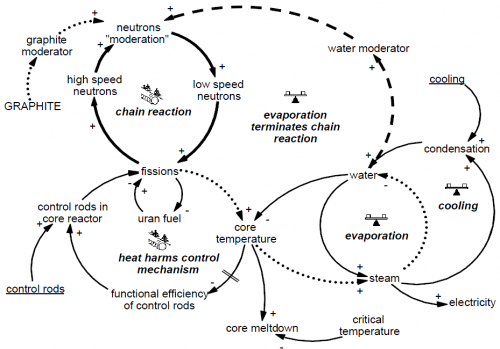

Markus Salge provides a nice retrospective on the Chernobyl accident, best summarized in pictures:

Key feedback structure of a graphite-moderated reactor like Chernobyl

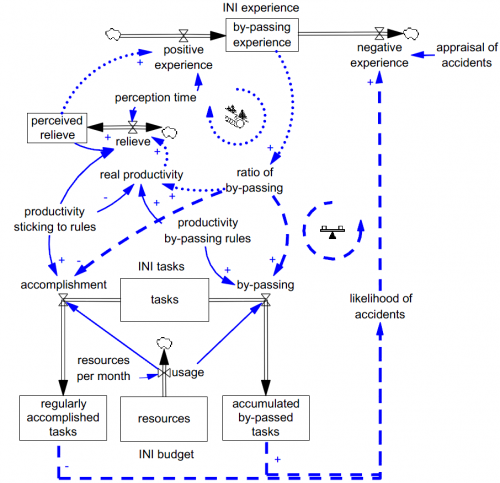

“Flirting with Disaster” dynamics

Others are looking at the nuclear fuel cycle and the role of nuclear power in energy systems.