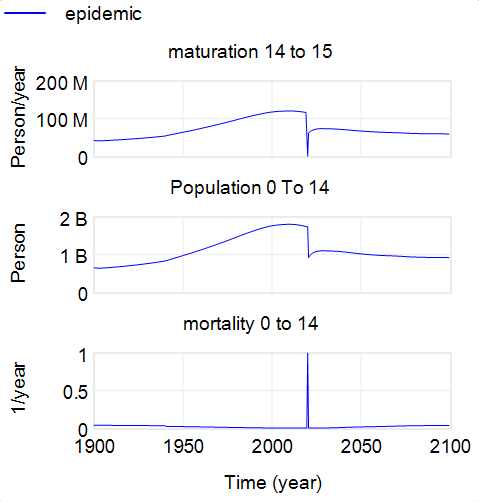

There’s a recent talk by Stefan Rahmstorf that gives a good overview of the tipping point in the Atlantic Meridional Overturning Circulation (AMOC), which has huge implications for climate.

I thought it would be neat to add the Stommel box model to my library, because it’s a nice low-order example of a tipping point. I turned to a recent update of the model by Wei & Zhang in GRL.

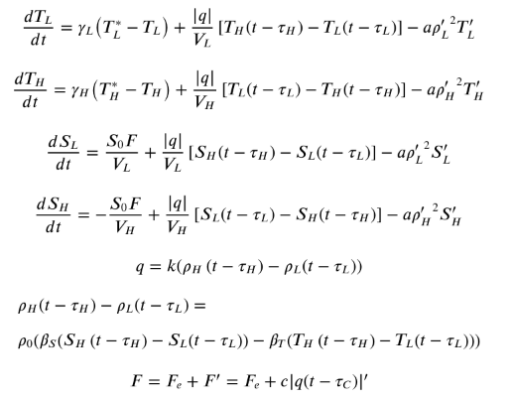

It’s an interesting paper, but it turns out that the documentation falls short of the standards we like to see in system dynamics (SD), making it a pain to replicate. The good news is that the equations are provided:

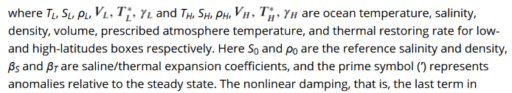

The bad news is that the explanation of these terms is brief to the point of absurdity:

This paragraph requires you to maintain a mental stack of no fewer than 12 items if you want to match the symbols to their explanations. You also have to read carefully to learn that the prime (′) means “anomaly” rather than “derivative”.

The supplemental material does at least include a table of parameters – but it’s incomplete. To find the delay times (the taus), for example, you have to consult the text and figure captions, because they vary from one experiment to the next. Initial conditions are also not conveniently specified.

I like the terse mathematical description of a system because you can readily take in the entirety of a state variable or even the whole system at a glance. But it’s not enough to check the “we have Greek letters” box. You also need to check the “serious person could reproduce these results in a reasonable amount of time” box.

Code would be a nice complement to the equations, though that comes with its own problems: tower-of-Babel language choices and extraneous cruft in the code. In this case, I’d be happy with just a more complete high-level description – at least:

- A complete table of parameters and units, with values used in various experiments.

- Inclusion of initial conditions for each state variable.

- Separation of terms in the RhoH-RhoL equation.
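To illustrate the first two items, here’s a minimal sketch of the classic two-box Stommel model in a commonly used nondimensional form. This is not the Wei & Zhang version, which adds delays and other terms. The parameter names (`eta1`, `eta2`, `eta3`), their values, and the initial conditions are all my own illustrative assumptions, not numbers from the paper. The point is only that every parameter, unit, and initial condition sits in one place.

```python
"""Classic two-box Stommel model in nondimensional form (illustrative sketch).

State variables: T (temperature difference), S (salinity difference).
Flow strength:   psi = T - S
    dT/dt = eta1 - T * (1 + |psi|)
    dS/dt = eta2 - S * (eta3 + |psi|)
"""

# Complete parameter table. All quantities are dimensionless.
# Values are illustrative assumptions, NOT taken from Wei & Zhang.
PARAMS = {
    "eta1": 3.0,  # thermal forcing strength
    "eta2": 1.1,  # freshwater (salinity) forcing strength
    "eta3": 0.3,  # salinity relaxation rate relative to temperature's
}

# Initial conditions (T, S) for each experiment, also dimensionless
# and also assumed for illustration.
INITIAL_CONDITIONS = {
    "strong": (2.0, 1.0),    # starts with temperature-dominated flow
    "reversed": (2.8, 2.9),  # starts with salinity-dominated flow
}


def derivatives(T, S, eta1, eta2, eta3):
    """Return (dT/dt, dS/dt) for the nondimensional two-box model."""
    psi = T - S
    dT = eta1 - T * (1.0 + abs(psi))
    dS = eta2 - S * (eta3 + abs(psi))
    return dT, dS


def simulate(T, S, t_end=100.0, dt=0.01, **params):
    """Integrate with fixed-step fourth-order Runge-Kutta; return final (T, S)."""
    for _ in range(int(round(t_end / dt))):
        k1T, k1S = derivatives(T, S, **params)
        k2T, k2S = derivatives(T + 0.5 * dt * k1T, S + 0.5 * dt * k1S, **params)
        k3T, k3S = derivatives(T + 0.5 * dt * k2T, S + 0.5 * dt * k2S, **params)
        k4T, k4S = derivatives(T + dt * k3T, S + dt * k3S, **params)
        T += dt * (k1T + 2.0 * k2T + 2.0 * k3T + k4T) / 6.0
        S += dt * (k1S + 2.0 * k2S + 2.0 * k3S + k4S) / 6.0
    return T, S


def final_flow(experiment):
    """Run one named experiment and return the final flow strength psi."""
    T, S = simulate(*INITIAL_CONDITIONS[experiment], **PARAMS)
    return T - S


if __name__ == "__main__":
    for name in INITIAL_CONDITIONS:
        print(name, "final psi =", round(final_flow(name), 4))
```

With these particular values, the two starting points settle onto different equilibria, one with positive flow and one with weakly reversed flow. That bistability is what makes the model a handy low-order example of a tipping point.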

A lot of these issues are things you wouldn’t even know are there until you attempt replication. Unfortunately, that is something reviewers seldom do. But electrons are cheap, so there’s really no reason not to do a more comprehensive documentation job.