I’d like to revisit Jay Forrester’s Next 50 Years article, with particular attention to a couple of things I think about every day: forecasting and prediction. I previously tackled Forrester’s view on data.

Along with unwise simplification, we also see system dynamics being drawn into attempting what the client wants even when that is unwise or impossible. Of particular note are two kinds of effort—using system dynamics for forecasting, and placing emphasis on a model’s ability to exactly fit historical data.

With regard to forecasting specific future conditions, we face the same barrier that has long plagued econometrics.

Aside from what Forrester is about to discuss, I think there’s also a key difference, as of the time this was written. Econometric models typically employed lots of data and fast computation, but suffered from severe restrictions on functional form (linearity or log-linearity, Normality of distributions, etc.). SD models had essentially unrestricted functional form, particularly with respect to integration and arbitrary nonlinearity, but suffered from insufficient computational power to do everything we would have liked. To some extent, the fields are converging due to loosening of these constraints, in part because the computer under my desk today is more powerful than the fastest supercomputer in the world was when I finished my dissertation years ago.

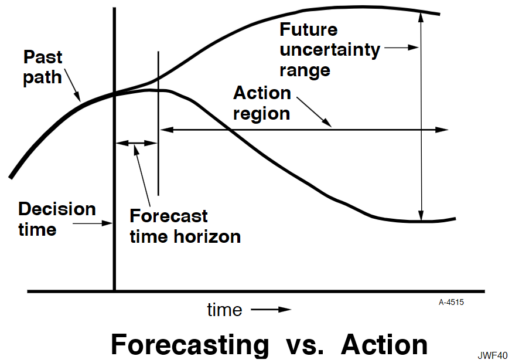

Econometrics has seldom done better in forecasting than would be achieved by naïve extrapolation of past trends. The reasons for that failure also afflict system dynamics. The reasons why forecasting future conditions fail are fundamental in the nature of systems. The following diagram may be somewhat exaggerated, but illustrates my point.

A system variable has a past path leading up to the current decision time. In the short term, the system has continuity and momentum that will keep it from deviating far from an extrapolation of the past. However, random events will cause an expanding future uncertainty range. An effective forecast for conditions at a future time can be made only as far as the forecast time horizon, during which past continuity still prevails. Beyond that horizon, uncertainty is increasingly dominant. However, the forecast is of little value in that short forecast time horizon because a responding decision will be defeated by the very continuity that made the forecast possible. The resulting decision will have its effect only out in the action region when it has had time to pressure the system away from its past trajectory. In other words, one can forecast future conditions in the region where action is not effective, and one can have influence in the region where forecasting is not reliable. You will recall a more complete discussion of this in Appendix K of Industrial Dynamics.

I think Forrester is basically right. However, I think there’s a key qualification. Some things – particularly physical systems – can be forecast quite well, not just because momentum permits extrapolation, but because there is a good understanding of the system. There’s a continuum of forecast skill, between “all models are wrong” and “some models are useful,” and you need to know where you are on that.

Fortunately, your model can tell you about the prospects for forecasting. You can characterize the uncertainty in the model parameters and environmental drivers, generate a distribution of outcomes, and use that to understand where forecasts will begin to fall apart. This is extremely valuable knowledge, and it may be key for implementation. Stakeholders want to know what your intervention is going to do to the system, and if you can’t tell them – with confidence bounds of some sort – they may have no reason to believe your subsequent attributions of success or failure.
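As a rough illustration of that workflow, here is a minimal Monte Carlo sketch. Everything in it is an assumption made up for the example: the one-stock growth model, the parameter distribution, the noise level, the 25% tolerance, and the function names `simulate`, `outcome_bands` and `forecast_horizon`. It samples an uncertain growth parameter and a noisy driver, builds a 5-95% outcome band at each time step, and reports the first step at which the band is wider than the chosen fraction of the median.

```python
import random
import statistics

def simulate(growth, noise_sd, steps, rng, dt=0.25, x0=100.0):
    """Euler-integrate one stock with fractional net growth and a noisy driver."""
    x = x0
    path = [x]
    for _ in range(steps):
        shock = rng.gauss(0.0, noise_sd)   # hypothetical environmental disturbance
        x += dt * x * (growth + shock)
        path.append(x)
    return path

def outcome_bands(n_runs=500, steps=80, seed=1):
    """Return (5th percentile, median, 95th percentile) of the stock at each step."""
    rng = random.Random(seed)
    runs = []
    for _ in range(n_runs):
        growth = rng.gauss(0.03, 0.01)     # assumed parameter uncertainty
        runs.append(simulate(growth, noise_sd=0.05, steps=steps, rng=rng))
    bands = []
    for t in range(steps + 1):
        values = sorted(run[t] for run in runs)
        lo = values[int(0.05 * (n_runs - 1))]
        hi = values[int(0.95 * (n_runs - 1))]
        bands.append((lo, statistics.median(values), hi))
    return bands

def forecast_horizon(bands, tolerance=0.25):
    """First step at which the 5-95% band is wider than `tolerance` times the median."""
    for t, (lo, mid, hi) in enumerate(bands):
        if (hi - lo) / mid > tolerance:
            return t
    return None

if __name__ == "__main__":
    bands = outcome_bands()
    print("forecast horizon (steps):", forecast_horizon(bands))
```

The tolerance is a judgment call about how much spread stakeholders can live with. The same bands also serve as the confidence bounds you would report for an intervention.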

In the hallways of SD, I sometimes hear people misconstrue Forrester as saying that “SD doesn’t predict.” This is balderdash. SD is all about prediction. We may not make point predictions of the future state of a system, but we absolutely make predictions about the effects of a policy change, contingent on uncertainties about parameters, structure and external disturbances. If we didn’t do that, what would be the point of the exercise? That’s precisely what Forrester is getting at here:

The emphasis on forecasting future events diverts attention from the kind of forecast that system dynamics can reliably make, that is, the forecasting of the kind of continuing effect that an enduring policy change might cause in the behavior of the system. We should not be advising people on the decision they should now make, but rather on how to change policies that will guide future decisions. A properly designed system dynamics model is effective in forecasting how different decision-making policies lead to different kinds of system behavior.
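A toy example may help show the difference between the two kinds of forecast. The sketch below is purely illustrative: the inventory model, the two adjustment times, the demand noise and the names `run_inventory` and `compare_policies` are all hypothetical. Each policy is run against the same random demand streams. The inventory level at any particular time varies a lot from one noise realization to the next, so a point forecast is poor, but the comparison between policies (here, which one yields the steadier inventory) comes out the same way in essentially every realization.

```python
import random
import statistics

def run_inventory(adjust_time, seed, steps=4000, dt=0.25,
                  target=200.0, base_demand=100.0, demand_sd=20.0):
    """Simulate one noise realization of a hypothetical inventory under one policy."""
    rng = random.Random(seed)   # same seed gives every policy the same demand stream
    inventory = target
    path = []
    for _ in range(steps):
        demand = base_demand + rng.gauss(0.0, demand_sd)
        # The policy: replace expected demand and close the inventory gap
        # over `adjust_time` time units.
        orders = base_demand + (target - inventory) / adjust_time
        inventory += dt * (orders - demand)
        path.append(inventory)
    return path

def compare_policies(n_runs=50, slow=8.0, fast=2.0):
    """Return final inventories under the slow policy, and the share of runs
    in which the fast policy has the lower inventory variability."""
    final_values = []
    fast_steadier = 0
    for seed in range(n_runs):
        slow_path = run_inventory(slow, seed)
        fast_path = run_inventory(fast, seed)
        final_values.append(slow_path[-1])
        if statistics.pstdev(fast_path) < statistics.pstdev(slow_path):
            fast_steadier += 1
    return final_values, fast_steadier / n_runs

if __name__ == "__main__":
    finals, share = compare_policies()
    print("spread of final inventory across runs:", round(statistics.pstdev(finals), 1))
    print("share of runs where the fast policy is steadier:", share)
```

Using common random numbers across policies is a design choice: it isolates the effect of the policy from the luck of the draw, which is the contingent kind of prediction discussed above.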

Thanks, Tom. Nice reminder of these questions, and answers. I refer to that graphic showing the area of future system uncertainty as “Forrester’s Dilemma.” So, in reality, it is not an area of unknown outcomes, but one of many possible patterns, each with some level of probability.