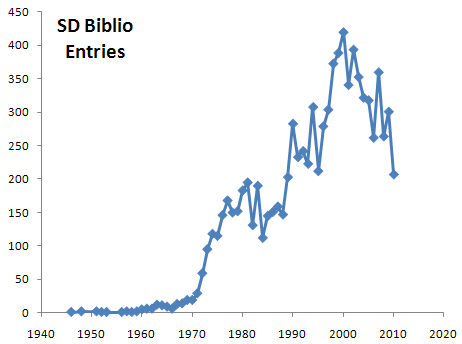

Here’s a time series of the number of entries in the system dynamics bibliography:

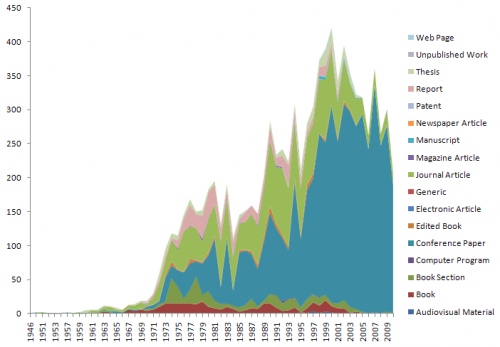

The peak was in 2000 with 420 entries. If you break out the types, it looks like the conference has saturated at about 250-300 papers, while journal, report and book publications have fallen off.

I suspect that some of the decline is explained by a long reporting lag, and some is “defection” of SD work into journals that aren’t captured in the bibliography (probably a good thing). It would be interesting to see a corrected series, to see what it says about the health of the field. The ideal way to do the correction would be to build a simple dynamic model of actual and measured publication rates, estimating the parameters from data (student project, anyone?).
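Here is a minimal Python sketch of the kind of model suggested above. It assumes a first-order (exponential) reporting delay with mean `tau` years, and a constant fraction `coverage` of SD work that ever reaches the bibliography. The function names, the flat output of 300 papers/year, and `tau = 3` are hypothetical illustrations, not estimates from the real bibliography. The last function shows one way the lag might be estimated from data. Given two snapshots of the bibliography taken a year apart, the growth in counts for each publication year depends only on `tau`, because true output and coverage cancel out.

```python
import math


def reported_fraction(pub_year, obs_year, tau):
    """Share of papers published in pub_year that are in the bibliography by
    the end of obs_year. Assumes (hypothetically) that papers appear mid-year and
    each waits an exponentially distributed time, mean tau years, to be entered."""
    elapsed = obs_year - pub_year + 0.5
    if elapsed <= 0:
        return 0.0
    return 1.0 - math.exp(-elapsed / tau)


def measured_counts(actual, obs_year, tau, coverage=1.0):
    """Entries visible at obs_year, given true output `actual` (year -> papers).
    `coverage` is the assumed share of SD work that is ever captured."""
    return {y: a * coverage * reported_fraction(y, obs_year, tau)
            for y, a in actual.items()}


def corrected_counts(measured, obs_year, tau, coverage=1.0):
    """Invert the lag by dividing each year's count by its expected reported share.
    Years with nothing reported yet (share 0) cannot be corrected and are skipped."""
    corrected = {}
    for y, m in measured.items():
        share = coverage * reported_fraction(y, obs_year, tau)
        if share > 0:
            corrected[y] = m / share
    return corrected


def estimate_tau(snap1, obs1, snap2, obs2, candidate_taus):
    """Grid-search tau from two snapshots (obs1 < obs2) of the same publication
    years. The ratio snap2/snap1 depends only on tau, since true output and
    coverage cancel, so tau can be estimated without knowing either."""
    best_tau, best_err = None, float("inf")
    for tau in candidate_taus:
        err = 0.0
        for y, early in snap1.items():
            f1 = reported_fraction(y, obs1, tau)
            if early > 0 and f1 > 0 and y in snap2:
                predicted = reported_fraction(y, obs2, tau) / f1
                err += (snap2[y] / early - predicted) ** 2
        if err < best_err:
            best_tau, best_err = tau, err
    return best_tau


if __name__ == "__main__":
    # Hypothetical: flat true output of 300 papers/year, 3-year mean reporting lag.
    actual = {y: 300.0 for y in range(1995, 2011)}
    snap_2010 = measured_counts(actual, obs_year=2010, tau=3.0)
    snap_2011 = measured_counts(actual, obs_year=2011, tau=3.0)
    tau_hat = estimate_tau(snap_2010, 2010, snap_2011, 2011,
                           [0.5 * k for k in range(1, 21)])
    corrected = corrected_counts(snap_2010, 2010, tau_hat)
    for y in sorted(actual):
        print(y, round(snap_2010[y]), round(corrected[y]))
```

Even with perfectly flat true output, the measured series in this sketch falls off toward the most recent years, which is the reporting-lag explanation in miniature. A constant `coverage` cannot separate "defection" from a real decline. Capturing that would need a time-varying coverage term and some outside data on where SD papers actually get published.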

Tom,

Does this really say anything meaningful about the health of the field? To me it says something about the SD Bibliography entries, but what meaningful implications beyond that can actually be substantiated?

be well,

Gene

I think it says we need to update the SD bibliography.

I tend to think it’s mostly a long reporting lag and increasing spillover into other publications that aren’t monitored. The conference is basically saturated with papers, as is the journal, so for those streams to increase, we’d have to somehow take a leap and create another venue (doubling throughput).

Maybe the question is how we keep up with good work. Perhaps we should encourage authors to self-report what they’re up to (and make it really easy to do so)?

Tom – Thanks for the charts. They make quite clear that there is a gap between academic research (and the papers) and its use in daily work. To my knowledge, and I have been active in this for five years now (since my first contact with SD back in 2006), there is no widely used and visible SD application.

Recently we again had rather high flooding of the Elbe, which flows through Dresden, and today’s paper asks why the predictions were so bad.

Days before the flooding, I asked on Facebook whether there were any publicly available “Bathtub”-like simulations for such events. The answer was, “We hope the experts have some!”

This makes the relevance of the field today quite clear (unlike in the early 70s, when SD was also to be found in schools, universities, and elsewhere).

Isn’t it time to gain broader acceptance now, in these fast-changing times? Jay’s vision from 2007 in Boston still holds, and it remains to be put into practice!

Cheers, Ralf

Interesting example of the Elbe flood … It seems like there must be big, detailed river basin models that could have been used. I wonder what went wrong? Perhaps the models are too cumbersome to use in real time, or the institutional arrangements isolate the modelers from the decision makers? Or, perhaps the combination of limited measurements and model uncertainty actually makes point prediction impossible? Either way, it seems like some simple metamodel simulation tools would be helpful for giving decision makers insights about how the system works, and how they ought to behave (even if they can’t predict specific flooding instances).
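To make “simple metamodel simulation tools” a bit more concrete, here is a minimal Python sketch of the “Bathtub”-like simulation asked about on Facebook. It models a single linear reservoir, where the water stored in a river reach drains at a rate proportional to storage. This is a textbook hydrology simplification chosen for illustration. It is not a model of the Elbe or of any operational forecasting system, and the function name, the residence time `k`, and the inflow pulse are all hypothetical.

```python
def simulate_linear_reservoir(inflows, k, storage0=0.0, dt=1.0):
    """Euler integration of a single bathtub: dS/dt = inflow - S/k.

    inflows: inflow rate for each time step (hypothetical units)
    k: mean residence time, in the same time units as dt; dt < k keeps the
       explicit Euler step stable and free of oscillation.
    Returns (outflow for each step, final storage)."""
    if not 0 < dt < k:
        raise ValueError("need 0 < dt < k for a stable Euler step")
    storage = storage0
    outflows = []
    for q_in in inflows:
        q_out = storage / k  # outflow proportional to the water stored
        storage += (q_in - q_out) * dt
        outflows.append(q_out)
    return outflows, storage


if __name__ == "__main__":
    # Hypothetical storm: three steps of heavy inflow, then nothing.
    pulse = [0.0] * 5 + [100.0] * 3 + [0.0] * 20
    out, final = simulate_linear_reservoir(pulse, k=4.0)
    print("inflow peak at step", pulse.index(max(pulse)), "size", max(pulse))
    print("outflow peak at step", out.index(max(out)), "size", round(max(out), 1))
```

Even this toy shows the kind of insight the comment has in mind: the outflow peak arrives later and lower than the inflow peak, and how much later and lower depends on `k`. It doesn't predict any specific flood, but it gives a feel for how the system behaves.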