Indirect Emissions from Biofuels: How Important?

Jerry M. Melillo,1,* John M. Reilly,2 David W. Kicklighter,1 Angelo C. Gurgel,2,3 Timothy W. Cronin,1,2 Sergey Paltsev,2 Benjamin S. Felzer,1,4 Xiaodong Wang,2,5 Andrei P. Sokolov,2 C. Adam Schlosser2

A global biofuels program will lead to intense pressures on land supply and can increase greenhouse gas emissions from land-use changes. Using linked economic and terrestrial biogeochemistry models, we examined direct and indirect effects of possible land-use changes from an expanded global cellulosic bioenergy program on greenhouse gas emissions over the 21st century. Our model predicts that indirect land use will be responsible for substantially more carbon loss (up to twice as much) than direct land use; however, because of predicted increases in fertilizer use, nitrous oxide emissions will be more important than carbon losses themselves in terms of warming potential. A global greenhouse gas emissions policy that protects forests and encourages best practices for nitrogen fertilizer use can dramatically reduce emissions associated with biofuels production.

1 The Ecosystems Center, Marine Biological Laboratory (MBL), 7 MBL Street, Woods Hole, MA 02543, USA.

2 Joint Program on the Science and Policy of Global Change, Massachusetts Institute of Technology (MIT), 77 Massachusetts Avenue, MIT E19-411, Cambridge, MA 02139-4307, USA.

3 Department of Economics, University of São Paulo, Ribeirão Preto 4EES, Brazil.

4 Department of Earth and Environmental Sciences, Lehigh University, 31 Williams Drive, Bethlehem, PA 18015, USA.

5 School of Public Administration, Zhejiang University, Hangzhou 310000, Zhejiang Province, People’s Republic of China (PRC).

* To whom correspondence should be addressed. E-mail: jmelillo@mbl.edu

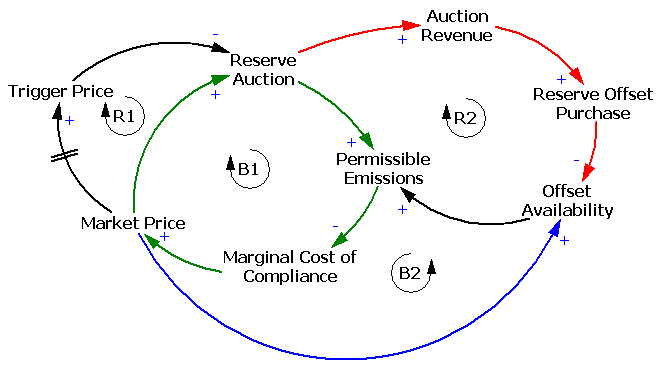

Expanded use of bioenergy causes land-use changes and increases in terrestrial carbon emissions (1, 2). Recognition of this has led to efforts to determine how much credit different forms of bioenergy should receive toward meeting low carbon fuel standards (LCFS), accounting both for direct land-use emissions and for emissions from land use indirectly related to bioenergy production (3, 4). Indirect emissions occur when biofuels production on agricultural land displaces agricultural production and causes additional land-use change that leads to an increase in net greenhouse gas (GHG) emissions (2, 4). If a cap-and-trade or tax policy to control GHGs were extended to include emissions (or credits for uptake) from land-use change, and were combined with monitoring of carbon stored in vegetation and soils and with enforcement, it would eliminate the need for such life-cycle accounting (5, 6). There are, however, a variety of concerns (5) about the practicality of including land-use change emissions in a system designed to reduce emissions from fossil fuels, which may explain why no major country has made a concrete proposal to do so. In this situation, fossil energy control programs (LCFS or carbon taxes) must determine how to treat the direct and indirect GHG emissions that contribute to the carbon intensity of biofuels.

The methods used to estimate indirect emissions remain controversial. Quantitative analyses to date have ignored these emissions (1), considered those associated with crop displacement from a limited area (2), confounded these emissions with direct or general land-use emissions (6–8), or developed estimates in a static framework of today’s economy (3). What these analyses lack is a full dynamic accounting of biofuel carbon intensity (CI), defined for energy as the GHG emissions per megajoule of energy produced (9). Such an accounting requires simultaneous consideration of the potential for net carbon uptake through enhanced management of poor or degraded lands; the nitrous oxide (N2O) emissions that would accompany increased use of fertilizer; environmental effects on terrestrial carbon storage [such as climate change, enhanced carbon dioxide (CO2) concentrations, and ozone pollution]; and the economics of land conversion. Estimating emissions related to global land-use change, both those on land devoted to biofuel crops (direct emissions) and those from indirect changes driven by increased demand for land for biofuel crops (indirect emissions), requires an approach that attributes effects to separate land uses.

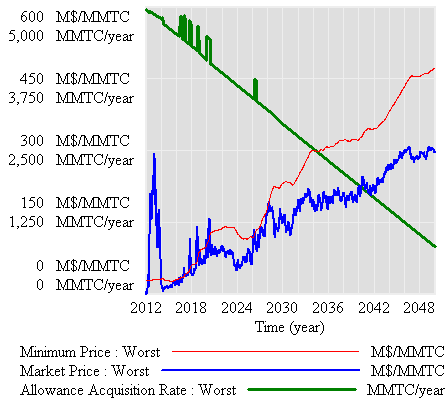

We applied an existing global modeling system that integrates land-use change as driven by multiple demands for land and that includes dynamic greenhouse gas accounting (10, 11). Our modeling system, which consists of a computable general equilibrium (CGE) model of the world economy (10, 12) combined with a process-based terrestrial biogeochemistry model (13, 14), was used to generate global land-use scenarios and explore some of the environmental consequences of an expanded global cellulosic biofuels program over the 21st century. The biofuels scenarios we focus on are linked to a global climate policy to control GHG emissions from industrial and fossil fuel sources that would, absent feedbacks from land-use change, stabilize the atmosphere’s CO2 concentration at 550 parts per million by volume (ppmv) (15). The climate policy makes the use of fossil fuels more expensive, speeds up the introduction of biofuels, and ultimately increases the size of the biofuel industry, with additional effects on land use, land prices, and food and forestry production and prices (16).

We considered two cases in order to explore future land-use scenarios: Case 1 allows the conversion of natural areas to meet increased demand for land, as long as the conversion is profitable; case 2 is driven by more intense use of existing managed land. To identify the total effects of biofuels, each of the above cases is compared with a scenario in which expanded biofuel use does not occur (16). In the scenarios with increased biofuels production, the direct effects (such as changes in carbon storage and N2O emissions) are estimated only in areas devoted to biofuels. Indirect effects are defined as the differences between the total effects and the direct effects.
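The attribution rule above reduces to two subtractions. The Python sketch below assumes each effect is a cumulative flux in Pg CO2eq with the sign convention of Fig. 2 (positive for sequestration, negative for emission); the function names are hypothetical and are not part of the authors' modeling system.

```python
# Sketch of the total/direct/indirect attribution (hypothetical helper names).
# Assumed sign convention: positive = carbon sequestration, negative = emission.


def total_effect(with_biofuels: float, without_biofuels: float) -> float:
    """Total effect: scenario with expanded biofuels minus the no-expansion scenario."""
    return with_biofuels - without_biofuels


def indirect_effect(total: float, direct: float) -> float:
    """Indirect effect: total effect minus the direct effect on biofuel land only."""
    return total - direct


# Case 1 at its peak loss (Fig. 2A): total -164 and direct -54 Pg CO2eq.
print(indirect_effect(-164.0, -54.0))  # -110.0
```

Because the indirect term is computed as a residual, any error in the direct estimate shows up, with opposite sign, in the indirect estimate.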

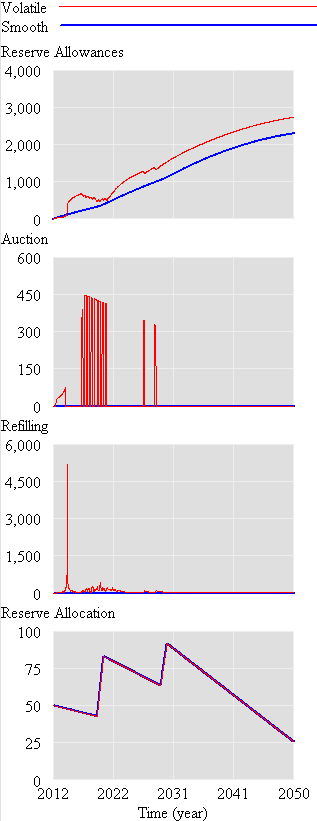

At the beginning of the 21st century, ~31.5% of the total land area (133 million km2) was in agriculture: 12.1% (16.1 million km2) in crops and 19.4% (25.8 million km2) in pasture (17). In both cases of increased biofuels use, land devoted to biofuels becomes greater than all area currently devoted to crops by the end of the 21st century, but in case 2 less forest land is converted (Fig. 1). Changes in net land fluxes are also associated with how land is allocated for biofuels production (Fig. 2). In case 1, there is a larger loss of carbon than in case 2, especially at mid-century. Indirect land use is responsible for substantially greater carbon losses than direct land use in both cases during the first half of the century. In both cases, there is carbon accumulation in the latter part of the century. The estimates include CO2 from burning and decay of vegetation and slower release of carbon as CO2 from disturbed soils. The estimates also take into account reduced carbon sequestration capacity of the cleared areas, including that which would have been stimulated by increased ambient CO2 levels. Smaller losses in the early years in case 2 are due to less deforestation and more use of pasture, shrubland, and savanna, which have lower carbon stocks than forests and, once under more intensive management, accumulate soil carbon. Much of the soil carbon accumulation is projected to occur in sub-Saharan Africa, an attractive area for growing biofuels in our economic analyses because the land is relatively inexpensive (10) and simple management interventions such as fertilizer additions can dramatically increase crop productivity (18).
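As a quick check of the baseline shares quoted above, the areas can be divided by the 133 million km2 total land area. This is plain arithmetic on the numbers in the text; nothing here comes from the underlying models.

```python
TOTAL_LAND_MKM2 = 133.0  # total land area, million km2 (from the text)

# Agricultural land at the start of the 21st century, million km2 (from the text)
baseline = {"crops": 16.1, "pasture": 25.8}


def share_percent(area_mkm2: float, total_mkm2: float = TOTAL_LAND_MKM2) -> float:
    """Share of total land area, in percent."""
    return 100.0 * area_mkm2 / total_mkm2


for use, area in baseline.items():
    print(f"{use}: {share_percent(area):.1f}%")
print(f"agriculture: {share_percent(sum(baseline.values())):.1f}%")
```

Running it prints crops: 12.1%, pasture: 19.4%, and agriculture: 31.5%, matching the percentages in the text.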

Fig. 1. Projected changes in global land cover for land-use case 1 (A) and case 2 (B). In either case, biofuels supply most of the world’s liquid fuel needs by 2100. In case 1, 365 EJ of biofuel is produced in 2100, using 16.2% (21.6 million km2) of the total land area; natural forest area declines from 34.4 to 15.1 million km2 (56%), and pasture area declines from 25.8 to 22.1 million km2 (14%). In case 2, 323 EJ of biofuels are produced in 2100, using 20.6 million km2 of land; pasture area decreases by 10.3 million km2 (40%), and forest area declines by 8.4 million km2 (24% of forest area). Simulations show that these major land-use changes will take place in the tropics and subtropics, especially in Africa and the Americas (fig. S2).
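The relative changes in the Fig. 1 caption follow from the stated areas. The sketch below recomputes them. For case 2 the caption gives only absolute losses, so the sketch assumes the same year-2000 baselines as case 1 (34.4 million km2 of natural forest and 25.8 million km2 of pasture); that baseline is an assumption, not a value stated for case 2.

```python
TOTAL_LAND_MKM2 = 133.0  # total land area, million km2


def percent_change(start: float, end: float) -> float:
    """Relative change in percent (negative values are declines)."""
    return 100.0 * (end - start) / start


# Case 1, from the Fig. 1 caption (million km2)
print(round(percent_change(34.4, 15.1)))         # natural forest: -56
print(round(percent_change(25.8, 22.1)))         # pasture: -14
print(round(100.0 * 21.6 / TOTAL_LAND_MKM2, 1))  # biofuel share of land: 16.2

# Case 2: absolute losses from the caption; baselines ASSUMED equal to case 1
print(round(percent_change(25.8, 25.8 - 10.3)))  # pasture: -40
print(round(percent_change(34.4, 34.4 - 8.4)))   # forest: -24
```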

Fig. 2. Partitioning of direct (dark gray) and indirect (light gray) effects on projected cumulative land carbon flux since the year 2000 (black line) from cellulosic biofuel production for land-use case 1 (A) and case 2 (B). Positive values represent carbon sequestration, whereas negative values represent carbon emissions by land ecosystems. In case 1, the cumulative loss is 92 Pg CO2eq by 2100; the maximum loss (164 Pg CO2eq, of which 110 Pg CO2eq is indirect and 54 Pg CO2eq is direct) occurs in the 2050 to 2055 time frame. In the second half of the century, there is a net accumulation of 72 Pg CO2eq, mostly in the soil in response to the use of nitrogen fertilizers. In case 2, land areas are projected to have a net accumulation of 75 Pg CO2eq as a result of biofuel production, with a maximum loss of 26 Pg CO2eq in the 2035 to 2040 time frame, followed by substantial accumulation.

Estimates of land devoted to biofuels in our two scenarios (15 to 16%) are well below the estimate of 50% in a recent analysis (6) that does not control land-use emissions. That higher estimate comes from an analysis that has a lower concentration target (450 ppmv CO2), does not account for price-induced intensification of land use, and does not explicitly consider concurrent changes in other environmental factors. In analyses that include land-use emissions as part of the policy (6–8), less area is estimated to be devoted to biofuels (3 to 8%). The carbon losses associated with the combined direct and indirect biofuel emissions estimated for our case 1 are similar to a previous estimate (7), although that estimate shows larger losses of carbon per unit area converted to biofuels production. These larger per-area losses result from a combination of factors, including a greater simulated response of plant productivity to changes in climate and atmospheric CO2 (15) and the lack of any negative effects of elevated tropospheric ozone on plant productivity (19, 20).


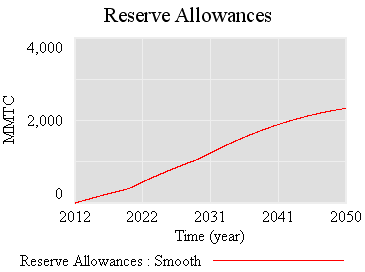

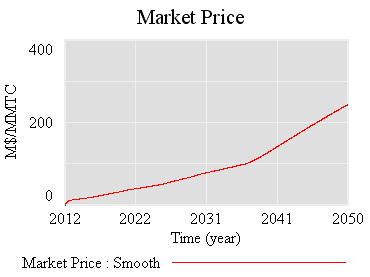

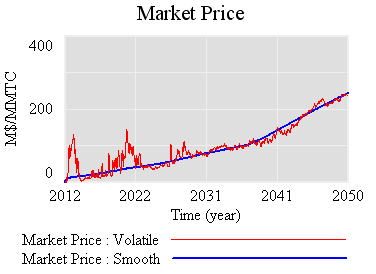

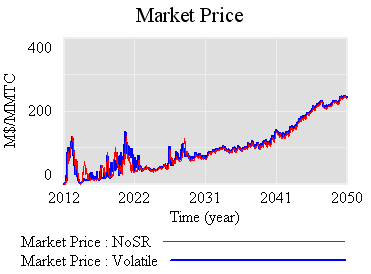

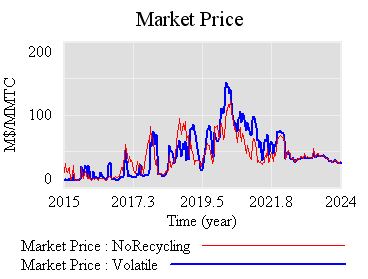

We also simulated the emissions of N2O from the additional fertilizer that would be required to grow biofuel crops. Over the century, the N2O emissions become larger in CO2 equivalent (CO2eq) than carbon emissions from land use (Fig. 3). The net GHG effect of biofuels also changes over time: for case 1, the net GHG balance is –90 Pg CO2eq through 2050 (a negative sign indicates a source; a positive sign indicates a sink), whereas it is +579 Pg CO2eq through 2100. For case 2, the net GHG balance is +57 Pg CO2eq through 2050 and +679 Pg CO2eq through 2100. We estimate that by the year 2100, biofuels production accounts for about 60% of the total annual N2O emissions from fertilizer application in both cases, where the total is 18.6 Tg N yr–1 for case 1 and 16.1 Tg N yr–1 for case 2. These total annual land-use N2O emissions are about 2.5 to 3.5 times higher than comparable estimates from an earlier study (8). Our larger estimates result from differences in the assumed proportion of nitrogen fertilizer lost as N2O (21), as well as from differences in the amount of land devoted to food and biofuel production. Best practices for the use of nitrogen fertilizer, such as synchronizing fertilizer application with plant demand (22), can reduce N2O emissions associated with biofuels production.
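The Tg N yr–1 totals above can be put on the same CO2eq footing as the carbon fluxes. The sketch below converts an N2O-N mass to N2O mass using the molar-mass ratio 44/28 and then applies a 100-year global warming potential (GWP) of 298, the IPCC Fourth Assessment value. The GWP is an assumption: the text does not state which value the study used, so the output is illustrative only.

```python
N2O_PER_N = 44.0 / 28.0  # g N2O per g N2O-N (molar masses 44 and 2 x 14 g/mol)
GWP100_N2O = 298.0       # ASSUMPTION: IPCC AR4 100-yr GWP; not stated in the text
TG_PER_PG = 1000.0


def n2o_n_to_pg_co2eq(tg_n2o_n: float, gwp: float = GWP100_N2O) -> float:
    """Convert an N2O flux expressed in Tg N to Pg CO2eq."""
    tg_n2o = tg_n2o_n * N2O_PER_N
    return tg_n2o * gwp / TG_PER_PG


# Case 1 total fertilizer N2O in 2100 (18.6 Tg N/yr), under the assumed GWP
print(round(n2o_n_to_pg_co2eq(18.6), 1))  # 8.7
```

A different GWP scales the result proportionally; passing `gwp=265` (the IPCC Fifth Assessment value), for example, lowers it by about 11%.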

Fig. 3. Partitioning of the greenhouse gas balance since the year 2000 (black line), as influenced by cellulosic biofuel production for land-use case 1 (A) and case 2 (B), among fossil fuel abatement (yellow), net land carbon flux (blue), and fertilizer N2O emissions (red). Positive values are abatement benefits, and negative values are emissions. Net land carbon flux is the same as in Fig. 2. For case 1, N2O emissions over the century are 286 Pg CO2eq; for case 2, N2O emissions are 238 Pg CO2eq.
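Fig. 3 splits the net balance into three components. If the components sum exactly to the black line (an assumption, since the published values are rounded), the fossil fuel abatement implied by the cumulative numbers in the text can be backed out as a residual. The function name is hypothetical.

```python
def implied_abatement(net_balance: float, land_flux: float, n2o: float) -> float:
    """Fossil fuel abatement implied by net = abatement + land_flux + n2o.

    Fig. 3 sign convention: positive = benefit (sink), negative = emission.
    All values are cumulative since 2000, in Pg CO2eq.
    """
    return net_balance - land_flux - n2o


# Cumulative values through 2100 quoted in the text (N2O entered as negative)
print(implied_abatement(579.0, -92.0, -286.0))  # case 1: 957.0
print(implied_abatement(679.0, 75.0, -238.0))   # case 2: 842.0
```

Under this assumption, the larger fossil fuel abatement of case 1 (which produces more biofuel) is more than offset by its larger land carbon and N2O losses, which is why its net balance ends below that of case 2.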

The CI of fuel was also calculated across three time periods (Table 1) so as to compare it with displaced fossil energy in an LCFS and to identify the GHG allowances that would be required for biofuels in a cap-and-trade program. Previous CI estimates for California gasoline (3) suggest that values less than ~96 g CO2eq MJ–1 indicate that blending cellulosic biofuels will help lower the carbon intensity of California fuel and therefore contribute to achieving the LCFS. Entries higher than 96 g CO2eq MJ–1 would raise the average carbon intensity of California fuel and thus be at odds with the LCFS. Therefore, the CI values for case 1 are favorable for biofuels only if the integration period extends into the second half of the century. For case 2, the CI values turn favorable for biofuels for integration periods ending somewhere between 2030 and 2050. In both cases, the CO2 flux has approached zero by the end of the century, when little or no further land conversion is occurring and emissions from decomposition approximately balance the carbon added to the soil from unharvested components of the vegetation (roots). Although the carbon accounting ends up as a nearly net neutral effect, N2O emissions continue. Annual estimates start high, are variable from year to year because they depend on climate, and generally decline over time.
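The 96 g CO2eq MJ–1 threshold works because the carbon intensity of a blend is the energy-weighted average of its components, so adding any fuel with a CI below that of gasoline lowers the average. The sketch below shows the unit conversion from cumulative Pg CO2eq and EJ to g CO2eq MJ–1, plus the blending rule. The input of 8 Pg CO2eq over 100 EJ is a made-up illustration, not a value from Table 1, and the function names are hypothetical.

```python
CA_GASOLINE_CI = 96.0  # g CO2eq/MJ, California gasoline estimate cited in the text


def ci_g_per_mj(emissions_pg_co2eq: float, energy_ej: float) -> float:
    """Carbon intensity from Pg CO2eq and EJ (1 Pg = 1e15 g, 1 EJ = 1e12 MJ)."""
    return emissions_pg_co2eq * 1e15 / (energy_ej * 1e12)


def blended_ci(ci_a: float, energy_a: float, ci_b: float, energy_b: float) -> float:
    """Energy-weighted average carbon intensity of a two-component blend."""
    return (ci_a * energy_a + ci_b * energy_b) / (energy_a + energy_b)


# Hypothetical biofuel: 8 Pg CO2eq attributed to 100 EJ of fuel
bio_ci = ci_g_per_mj(8.0, 100.0)
print(bio_ci)                                                     # 80.0
print(round(blended_ci(CA_GASOLINE_CI, 90.0, bio_ci, 10.0), 1))  # 94.4
print(bio_ci < CA_GASOLINE_CI)                                    # True
```

Replacing 10% of the gasoline energy with this hypothetical biofuel lowers the blend from 96 to 94.4 g CO2eq MJ–1; a biofuel CI above 96 would raise it instead.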
