Taking my own advice, I grabbed a simple project model and did a Monte Carlo experiment to see if project performance had a heavy tailed distribution in response to normal and uniform inputs.

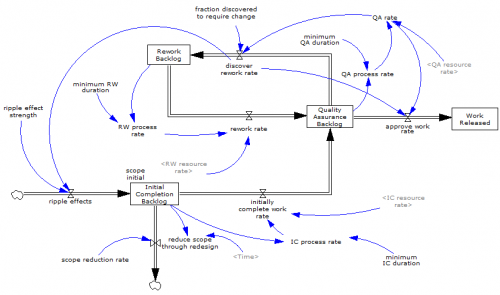

The model is the project tipping point model from Taylor, T. and Ford, D.N. Managing Tipping Point Dynamics in Complex Construction Projects ASCE Journal of Construction Engineering and Management. Vol. 134, No. 6, pp. 421-431. June, 2008, kindly supplied by David.

I made a few minor modifications to the model, to eliminate test inputs, and constructed a sensitivity input on a few parameters, similar to that described here. I used project completion time (the time at which 99% of work is done) as a performance metric. In this model, that’s perfectly correlated with cost, because the workforce is constant.

The core structure is the flow of tasks through the rework cycle to completion:

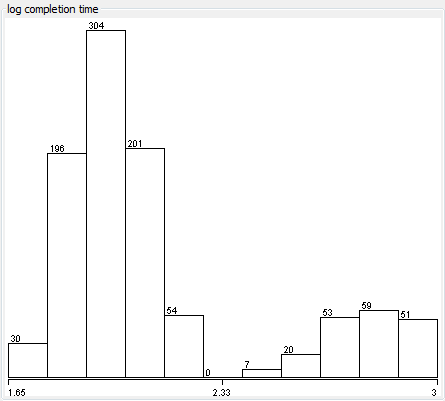

The initial results were baffling. The distribution of completion times was bimodal:

Worse, the bimodality didn’t appear to be correlated with any particular input:

Excerpt from a Weka scatterplot matrix of sensitivity inputs vs. log completion time.

Trying to understand these results with a purely black-box statistical approach is a hard road. The sensible thing is to actually look at the model to develop some insight into how the structure determines the behavior. So, I fired it up in Synthesim and did some exploration.

It turns out that there are (at least) two positive loops that cause projects to explode in this model. One is the rework cycle: work that is done wrong the first time has to be reworked – and it might be done wrong the second time, too. This is a positive loop with gain < 1, so the damage is bounded, but large if the error rate is high. A second, related loop is “ripple effects” – the collateral damage of rework.

My Monte Carlo experiment was, in some cases, pushing the model into a region with ripple+rework effects approaching 1, so that every task done creates an additional task. That causes the project to spiral into the right sub-distribution, where it is difficult or impossible to complete.

This is interesting, but more pathological than what I was interested in exploring. I moderated my parameter choices and eliminated a few test inputs in the model, and repeated the experiment.

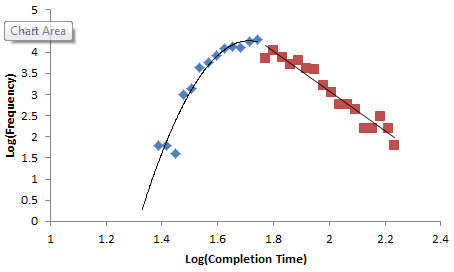

Voila:

Normal+uniformly-distributed uncertainty in project estimation, productivity and ripple/rework effects generates a lognormal-ish left tail (parabolic on the log-log axes above) and a heavy Power Law right tail.*

Normal+uniformly-distributed uncertainty in project estimation, productivity and ripple/rework effects generates a lognormal-ish left tail (parabolic on the log-log axes above) and a heavy Power Law right tail.*

The interesting thing about this is that conventional project estimation methods will completely miss it. There are no positive loops in the standard CPM/PERT/Gantt view of a project. This means that a team analyzing project uncertainty with Normal errors in will get Normal errors out, completely missing the potential for catastrophic Black Swans.

* This is statistically and dynamically fast & loose. Replicating my experiment (or failing to!), with more attention to model structure, experimental design, statistics and power law generating mechanism would make a cool paper.

1 thought on “A project power law experiment”