I recently worked on a fascinating project that combined Big Data and System Dynamics (SD) to good effect. Neither method could have stood on its own, but the outcome really emphasized some of the strategic limitations of the data-driven approach. Including SD in the project simultaneously lowered the total cost of analysis, by avoiding data processing for things that could be determined a priori, and increased its value by connecting the data to business context.

I can’t give a direct account of what we did, because it’s proprietary, but here’s my best shot at the generalizable insights. The context was health care for some conditions that particularly affect low-income and indigent populations. The patients are hard to track and hard to influence.

Two efforts worked in parallel: Big Data (led by another vendor) and System Dynamics (led by Ventana). I use the term “SD” loosely, because much of what we ultimately did was data-centric: agent-based modeling and estimation of individual-level nonlinear dynamic models in Vensim. The Big Data vendor’s budget was two orders of magnitude greater than ours, mostly due to some expensive system integration tasks, but partly, I suspect, due to the cachet of their brand and flashy approach.

Predict Patient Events

Big Data idea #1 was to use machine learning methods to predict imminent expensive events, so that patients could be treated preemptively, saving the cost of ER visits and other expensive procedures. This involved statistical analysis of extremely detailed individual patient histories. In parallel, we modeled the delivery system and aggregate patient trajectories through many different system states. (Google’s Verily is pursuing something similar.)

Ultimately, the Big Data approach didn’t pan out. I think the cause was largely data limitations. Patient records have severe quality issues, including (we hypothesized) unobserved feedback from providers gaming the system to work around health coverage limitations. More importantly, it’s still problematic to correlate well-observed aspects of patient care with other important states of the patient, like employment and social network support.

Some of the problems were not evident until we began looking at things from a stock-flow perspective. For example, it turned out that admission and release records were not conserving people. Test statistics on the data might have revealed this, but no one even thought to look until we created an operational description of the system and started trying to balance units and apply conservation laws. Machine learning algorithms were perfectly happy to consume the data uncritically.
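To make the conservation idea concrete, here is a minimal Python sketch of the kind of check that a stock-flow view suggests. It assumes a hypothetical record layout (a list of `(day, kind)` admission and release events plus a daily census count); real patient records won’t look this tidy. For each interval between census readings, it compares the recorded change in the stock with the net flow implied by the event records. Any nonzero imbalance means the records are creating or destroying people.

```python
from collections import Counter


def conservation_gaps(events, census):
    """Check that a stock (patients in care) is conserved by its recorded flows.

    events: sequence of (day, kind) pairs, kind "admit" or "release" (hypothetical schema;
            any other kind is ignored)
    census: dict mapping integer day -> recorded end-of-day patient count
    Returns (start_day, end_day, imbalance) for every census interval where the recorded
    change in the stock differs from the net recorded flow. A positive imbalance means
    the census gained people that no admission accounts for; negative means people vanished.
    """
    admits = Counter(day for day, kind in events if kind == "admit")
    releases = Counter(day for day, kind in events if kind == "release")
    days = sorted(census)
    gaps = []
    for start, end in zip(days, days[1:]):
        # Net flow over the interval (start, end]; stock(end) should equal stock(start) + net flow.
        net_flow = sum(admits[d] - releases[d] for d in range(start + 1, end + 1))
        imbalance = (census[end] - census[start]) - net_flow
        if imbalance != 0:
            gaps.append((start, end, imbalance))
    return gaps


events = [(1, "admit"), (1, "admit"), (2, "release"), (3, "release"), (3, "admit")]
census = {0: 10, 1: 12, 2: 11, 3: 12}
print(conservation_gaps(events, census))  # [(2, 3, 1)]: one patient appears from nowhere on day 3
```

The point is less the code than the habit: once you write down stock(t) = stock(t-1) + inflow - outflow, the check is almost free, whereas a machine learning pipeline has no reason to ask the question.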

Describing the system operationally in an SD model revealed a number of constraints that would have made implementation of the predictive approach difficult, even if data and algorithm constraints were eliminated. We also found that some of the insights from the Big Data approach were available from first principles in simple, aggregate “thinkpiece” models at a tiny fraction of the cost.

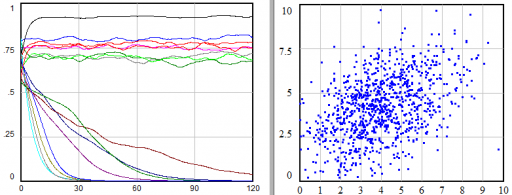

Things weren’t entirely rosy for the simulations, however. Building structural models is pretty quick, but calibrating them and testing alternative formulations is a slow process. Switching function calls to try a different machine learning algorithm is much easier. We really need hybrids that combine the best of both approaches.

An amusing aside: after data problems began to turn up, we found that we needed to understand more about patient histories. We made a movie that depicted a couple of years of events for tens of thousands of patients. It ran for almost an hour, with nothing but strips of colored dots. It has to be one of the most boring productions in the history of cinema, and yet it was absolutely riveting for anyone who understood the problem.

Segment Patient Populations & Buy Provider Risk

Big Data idea #2 was to abandon individual prediction and focus on population methods. The hope was to earn premium prices by improving on prevention in general, using that advantage to essentially sell reinsurance to providers through a patient performance contract.

The big data aspect involved a pharma marketing science favorite: segmentation. Cluster analysis and practical rules were used to identify “interesting” subpopulations of patients.

In parallel, we began to build agent and aggregate models of patient state trajectories, to see how the contract would actually work. Alarmingly, we discovered that the business rules of the proposal were not written down or fully specified anywhere. We made them up, but in the process discovered that there were many idiosyncratic cases that would need to be handled, like what to do with escrowed funds from patients whose performance history was incomplete at the time they churned out of a provider.

When we started to plug real numbers into the aggregate model, we immediately ran into a puzzle. We sampled costs from the (very large) full dataset, but we couldn’t replicate the relative costs of patients on and off the prevention protocol. After much digging, it turned out that there were two problems.

First, the distribution of patient costs is heavy-tailed (a power law). So, if clustering rules shift even a few high cost patients (out of millions) from one business category to another, the average cost can change significantly. Median costs are more stable, but the business doesn’t pay the median (this is essentially dictated by conservation of money).
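A small simulation shows why the mean is so fragile. This Python sketch assumes Pareto-distributed costs with an illustrative shape parameter; the segment size, cost scale and number of reclassified patients are made-up values, not figures from the project. It moves the few most expensive patients out of a segment and reports the relative change in that segment’s mean and median cost.

```python
import random
import statistics


def reclassification_effect(n=50_000, n_moved=20, alpha=1.2, seed=1):
    """Relative change in a segment's mean and median cost after the n_moved
    most expensive patients are reclassified into another segment."""
    rng = random.Random(seed)
    # Heavy-tailed (Pareto) costs; alpha and the 1,000 scale are illustrative assumptions.
    segment = [1_000 * rng.paretovariate(alpha) for _ in range(n)]
    remaining = sorted(segment)[:-n_moved]  # drop the n_moved largest costs
    mean_change = statistics.fmean(remaining) / statistics.fmean(segment) - 1
    median_change = statistics.median(remaining) / statistics.median(segment) - 1
    return mean_change, median_change


mean_change, median_change = reclassification_effect()
print(f"mean changes by {mean_change:.1%}, median by {median_change:.3%}")
```

With a tail this heavy, a few dozen patients out of tens of thousands can move the segment mean by a large fraction while the median barely budges, which is exactly the instability a contract priced on average cost inherits.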

Second, it turns out that clustering patients with short records is problematic, because it introduces selection and truncation biases into the determination of who’s at risk. The only valid fallback is to use each patient as their own control, with an errors-in-variables model. Once we refined the estimation techniques, we found that the short-term savings from prevention didn’t pencil out very well when combined with the reinsurance business rules. (Fortunately, that’s not a general insight – we have another project underway that focuses on bigger advantages from prevention in the long run.)
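One of these selection biases, regression to the mean, is easy to reproduce. The sketch below is an illustration under stated assumptions, not the errors-in-variables model mentioned above: each hypothetical patient has a latent risk, and each period’s observed cost is that risk plus independent noise. There is no prevention effect at all. Patients are flagged “at risk” from a single short window, as happens when a record is too short to separate the flagging window from the outcome window. Comparing later costs with that same window shows large phantom savings, while a simple within-patient comparison against an independent window does not.

```python
import random
import statistics


def phantom_savings(n=20_000, threshold=3.0, seed=7):
    """Return (naive_saving, self_control_saving, n_flagged) when the true effect is zero."""
    rng = random.Random(seed)
    naive, self_control = [], []
    for _ in range(n):
        risk = rng.gauss(1.0, 1.0)  # latent, unobserved risk (assumed normal)
        # Three observation windows for the same patient, each with independent noise.
        baseline, screen, follow_up = (risk + rng.gauss(0.0, 1.0) for _ in range(3))
        if screen > threshold:  # flagged "at risk" from one short window
            naive.append(screen - follow_up)  # reuses the selection window as the comparison
            self_control.append(baseline - follow_up)  # independent window, same patient
    return statistics.fmean(naive), statistics.fmean(self_control), len(naive)


naive, controlled, flagged = phantom_savings()
print(f"flagged {flagged} patients; naive saving {naive:.2f}, self-control saving {controlled:.2f}")
```

The naive comparison credits “prevention” with savings that are pure noise selection; the within-patient comparison correctly finds roughly nothing.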

Both of these issues should have been revealed by statistical red flags, poor confidence bounds, or failure to cross-validate. But for whatever reason, that didn’t happen until we put the estimates into practice. Fortunately, that happened in our simulation model, not an expensive real trial.

Insights

Looking back at this adventure, I think we learned some important things:

- There are no hard lines – we did SD with tens of thousands of agents, aggregate models, discrete event simulations, and large statistical estimates by brute-force simulation, while the Big Data approach did a lot of simple aggregation.

- Detailed and aggregate approaches are complementary.

- Big Data measurements contribute to SD or detailed simulations.

- SD modeling vets Big Data results in multiple ways.

- Statistical approaches need business context, in-place validation, and synthetic data from simulations.

- Anything you can do a priori, without data collection and processing, is an order of magnitude cheaper.

- Simulation lets you rule out a lot of things that don’t matter and design experiments to focus on data that does matter, before you spend a ton of money.

- SD raises questions you didn’t know to ask.

- Hybrid approaches that embed machine learning algorithms in the decisions in SD models, or automate cross-validated calibration with structural experiments on SD models, would be very powerful.
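As a small illustration of the synthetic-data point, here is a Python sketch that generates noisy data from a one-stock model with a known parameter and then checks that a calibration routine recovers it. The model structure (a hypothetical stock of adherent patients drained with mean residence time `tau`), the noise level and the grid search are all assumptions chosen for simplicity. It is plain least-squares calibration, not the cross-validated, structure-testing hybrid described in the last bullet, but it is the first test any estimation pipeline should pass before it sees real data.

```python
import random


def simulate_stock(tau, inflow=100.0, steps=60, dt=1.0):
    """Euler integration of a one-stock model, dS/dt = inflow - S / tau, starting from S = 0."""
    stock, path = 0.0, []
    for _ in range(steps):
        stock += dt * (inflow - stock / tau)
        path.append(stock)
    return path


def calibrate_tau(data, candidates):
    """Grid-search calibration: return the candidate tau with the smallest squared error."""
    def sse(tau):
        return sum((sim - obs) ** 2 for sim, obs in zip(simulate_stock(tau), data))
    return min(candidates, key=sse)


rng = random.Random(42)
true_tau = 8.0
# Synthetic "observations": the true trajectory with 5% multiplicative measurement noise.
synthetic = [x * (1 + rng.gauss(0.0, 0.05)) for x in simulate_stock(true_tau)]
grid = [k / 2 for k in range(4, 41)]  # candidate tau values 2.0, 2.5, ..., 20.0
estimate = calibrate_tau(synthetic, grid)
print(f"true tau {true_tau}, recovered tau {estimate}")
```

Because the right answer is known, a failure here points unambiguously at the estimation method rather than at the data or the model structure.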

Interestingly, the most enduring and interesting models, out of a dozen or so built over the course of the project, were some low-order SD models of patient life histories and adherence behavior. I hope to cover those in future posts.

Thanks for the post, Tom! This is very timely for me, as we are currently proposing a combined SD/Big Data/Machine Learning approach for a utility client of ours. This insight is great food for thought. Warm regards, Cory

Glad it’s helpful!

Nice piece, Tom. There were a couple of subtleties that I hadn’t thought about.

Reminded me of a couple of projects I did a while back. In one, we used a model to test which data not then being collected were the most important to begin collecting.

The other had to do with nursing home patients and their changing financial status. As we were gathering data for parameter initialization and model testing, one of us got nursing home data on the source of admissions (mostly hospitals) and I got hospital data on discharges to nursing homes. The two should have been at least approximately similar, right? Of course, they were not. The hospital discharge data showed about 150,000 discharges to nursing homes; the nursing home data showed about 200,000 patients admitted from hospitals. The nursing homes had a bigger stake in the accuracy of their data, so, not surprisingly, the model produced a vastly better picture of the system in equilibrium when we used the nursing home data.

Sounds familiar. These kinds of mismatches come up every time we start merging organizational data streams to get a handle on the big picture. If data hasn’t been used across the organization before, it’s almost always misunderstood, limited or just wrong in some way.