Colleagues just pointed out the Virgin Earth Challenge, “a US$25 million prize for an environmentally sustainable and economically viable way to remove greenhouse gases from the atmosphere.”

John Sterman writes:

I think it inevitable that we will see more and more interest in CO2 removal. And IF it can be done without undermining mitigation I’d be all for it. I do like biochar as a possibility; though I am very skeptical of direct air capture and CCS. But the IF in the prior sentence is clearly not true: if there were effective removal technology it would create moral hazard leading to less mitigation and more emissions.

Even more interesting, direct air capture is not thermodynamically favored; needs lots of energy. All the finalists claim that they will use renewable energy or “waste” heat from other processes to power their removal technology, but how about using those renewable sources and waste heat to directly offset fossil fuels and reduce emissions instead of using them to power less efficient removal processes? Clearly, any wind/solar/geothermal that is used to power a removal technology could have been used directly to reduce fossil emissions, and will be cheaper and offset more net emissions. Same for waste heat unless the waste heat is too low temp to be used to offset fossil fuels. Result: these capture schemes may increase net CO2 flux into the atmosphere.

Every business knows it’s always better to prevent the creation of a defect than to correct it after the fact. No responsible firm would say “our products are killing the customers; we know how to prevent that, but we think our money is best spent on settling lawsuits with their heirs.” (Oh: GM did exactly that, and look how it is damaging them). So why is it ok for people to say “fossil fuel use is killing us; we know how to prevent that, but we’ve decided to spend even more money to try to clean up the mess after the pollution is already in the air”?

To me, many of these schemes reflect a serious lack of systems thinking, and the desire for a technical solution that allows us to keep living the way we are living without any change in our behavior. Can’t work.

I agree with John, and I think there are some additional gaps in systemic thinking about these technologies. Here are some quick reflections, in pictures.

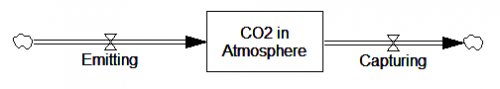

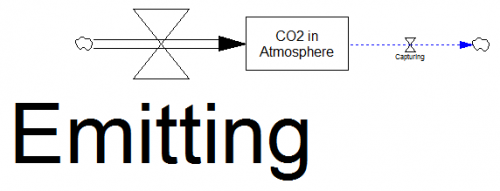

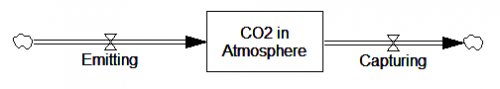

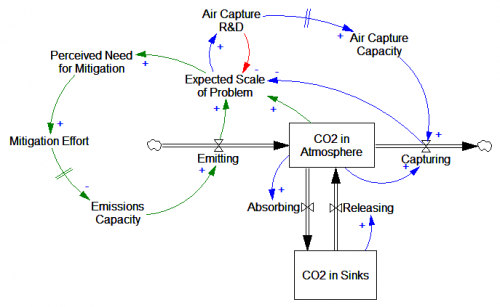

A basic point for any system is that you can lower the level of a stock (all else equal) by reducing the inflow or increasing the outflow. So the idea of capturing CO2 is not totally bonkers. In fact, it lets you do at least one thing that you can’t do by reducing emissions. When emissions fall to 0, there’s no leverage to reduce CO2 in the atmosphere further. But capture could actively draw down the CO2 stock. However, we are very far from 0 emissions, and this is harder than it seems:

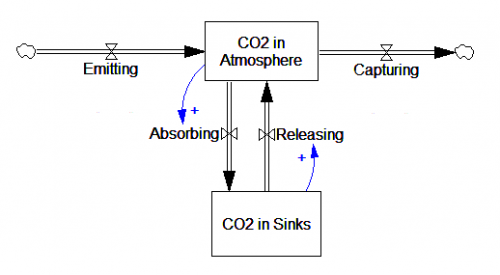

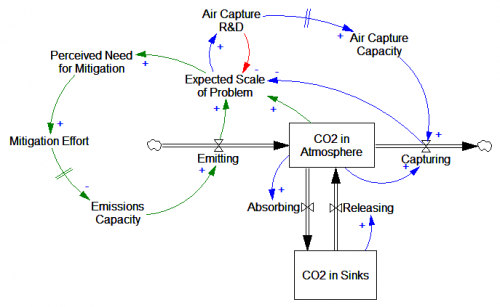

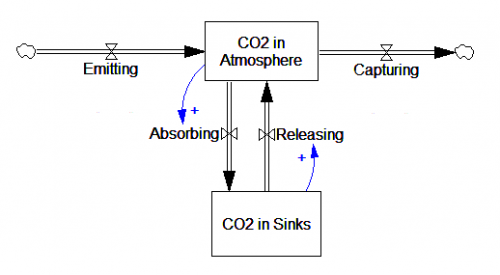

Natural sinks have been graciously absorbing roughly half of our CO2 emissions for a long time. If we reduce emissions dramatically, and begin capturing, nature will be happy to give us back that CO2, ton for ton. So, the capture problem is actually twice as big as you’d think from looking at the excess CO2 in the atmosphere.
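
Here's a minimal sketch of the sink giveback, under loud assumptions: a toy two-box model in which the atmosphere and a single lumped "natural sink" have equal capacity and exchange carbon at a made-up rate `k`. The function name `run`, the units, and every number are hypothetical; this is not a calibrated carbon cycle model.

```python
def run(atm, sink, emissions, capture, years, k=0.5, dt=0.01):
    """Advance a toy two-box carbon model with simple Euler steps.

    atm and sink are excess carbon (arbitrary units) in the atmosphere and
    in natural sinks. Both boxes are ASSUMED to have equal capacity, so the
    net flow between them is k * (atm - sink).
    """
    for _ in range(int(round(years / dt))):
        # Net flow atmosphere -> sink; a negative value means the sink
        # is giving carbon back to the atmosphere.
        uptake = k * (atm - sink)
        atm += (emissions - capture - uptake) * dt
        sink += uptake * dt
    return atm, sink


# Phase 1: emit 1 unit/year for 100 years, no capture (hypothetical numbers).
atm_peak, sink_peak = run(0.0, 0.0, emissions=1.0, capture=0.0, years=100)

# Phase 2: emissions stop; capture exactly the atmospheric excess over 50 years.
atm_end, sink_end = run(atm_peak, sink_peak, emissions=0.0,
                        capture=atm_peak / 50, years=50)

# Phase 3: no emissions, no capture; let the two boxes re-equilibrate.
atm_end, sink_end = run(atm_end, sink_end, emissions=0.0, capture=0.0, years=200)

print(f"atmospheric excess before capture: {atm_peak:.2f}")
print(f"atmospheric excess after capturing that amount: {atm_end:.2f}")
```

In this toy setup, capturing an amount equal to the whole atmospheric excess still leaves roughly half of that excess in the air once the sink hands its share back. Real sinks are neither equal in capacity nor this fast, so treat the factor as illustrative only.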

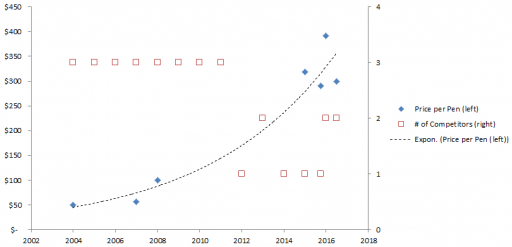

Currently, there’s also a problem of scale. Emissions are something like two orders of magnitude larger than potential markets for CO2, so there’s a looong way to go. And capture doesn’t scale like a service running on Amazon Elastic Cloud servers; it’s bricks and mortar.

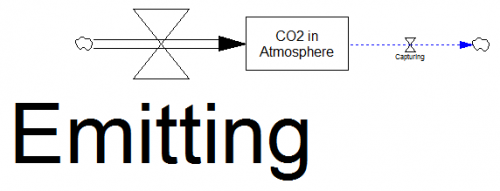

And where does that little cloud go, anyway? Several proposals gloss over this, as in:

The process involves a chemical solution (that naturally absorbs CO2) being brought into contact with the air. This solution, now containing the captured CO2, is sent to through a regeneration cycle which simultaneously extracts the CO2 as a high-pressure pipeline-quality product (ready to be put to numerous commercial uses) …

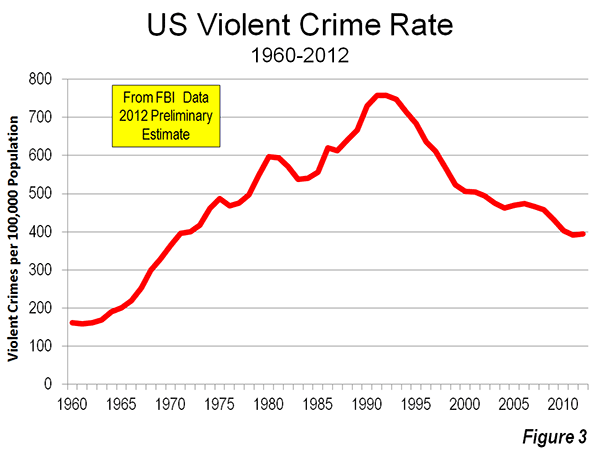

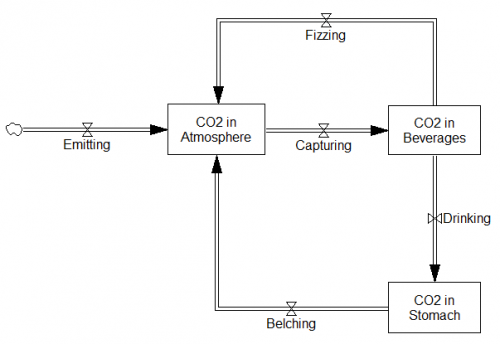

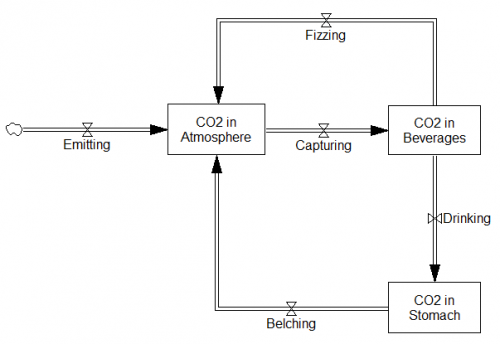

The biggest commercial uses I know of are beverage carbonation and enhanced oil recovery (EOR). Consider the beverage system:

CO2 sequestered in beverages doesn’t stay there very long! You’d have to start stockpiling vast quantities of Coke in salt mines to accumulate a significant quantity. This reminds me of Nike’s carbon-sucking golf ball. EOR is just as bad, because you put CO2 down a hole (hopefully it stays there), and oil and gas come back up, which are then burned … emitting more CO2. Fortunately the biochar solutions do not suffer so much from this problem.

Next up, delays and moral hazard:

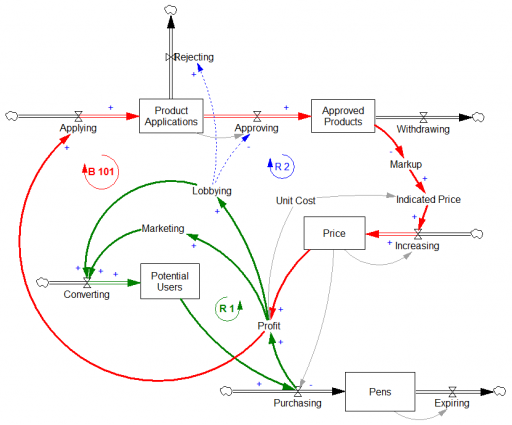

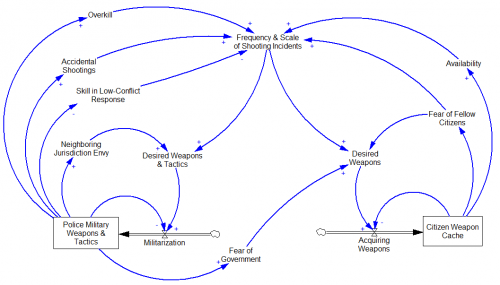

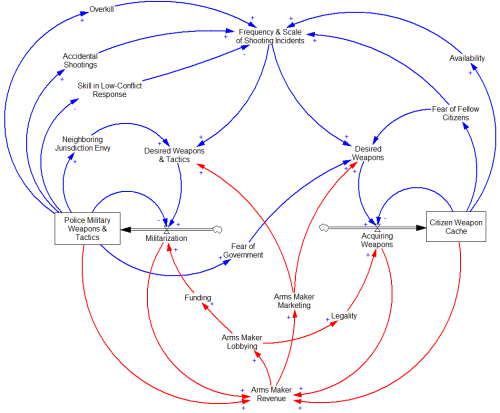

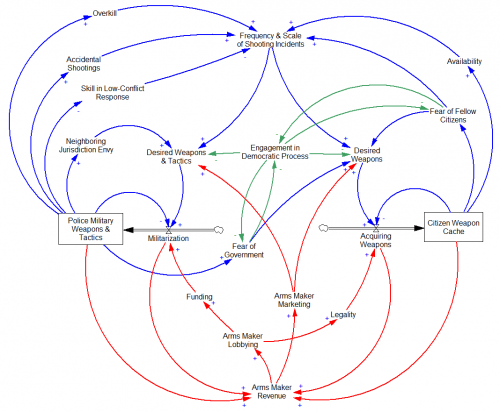

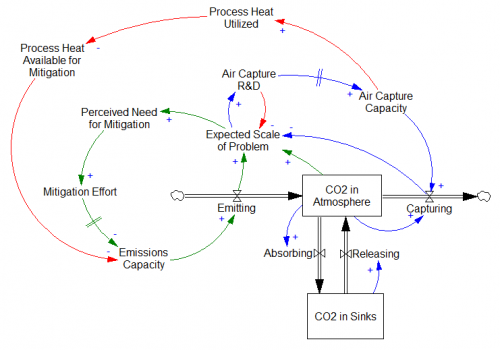

This is a cartoonish view of the control system driving mitigation and capture effort. The good news is that air capture gives us another negative loop (blue, top) by which we can reduce CO2 in the atmosphere. That’s good, especially if we mismanage the green loop. The moral hazard side effect is that the mere act of going through the motions of capture R&D reduces the perceived scale of the climate problem (red link), and therefore reduces mitigation, which actually makes the problem harder to solve.

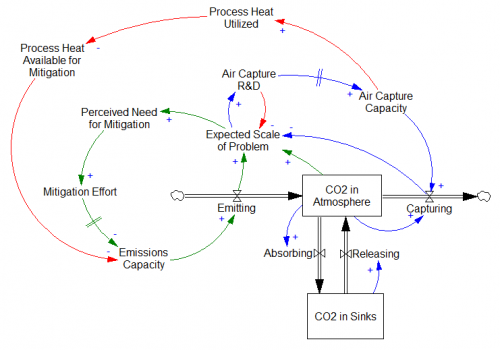

Capture also competes with mitigation for resources, as in John’s process heat example:

It’s even worse than that, because a lot of mitigation efforts have fairly rapid effects on emissions. There are certainly long-lived aspects of energy and infrastructure that must be considered, but behavior can change a lot of emissions quickly and with off-the-shelf technology. The delay between air capture R&D and actual capturing, on the other hand, is bound to be fairly long, because it’s in its infancy, and has to make it through multiple discover/develop/deploy hurdles.
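
A back-of-the-envelope sketch of why the delay matters, with every number invented for illustration: suppose a fixed effort budget can abate `max_abatement` units per year in total, split between mitigation (which kicks in after a short delay) and air capture (which kicks in after a long one). I'm assuming, generously, that both are equally effective per unit of effort once they're running.

```python
def cumulative_net_emissions(capture_share, horizon=50, baseline=10.0,
                             max_abatement=8.0, mitigation_delay=2,
                             capture_delay=20):
    """Sum net emissions over `horizon` years for a given effort split.

    All parameters are hypothetical. `capture_share` is the fraction of
    effort diverted from mitigation to air capture; each option delivers
    nothing until its own delay (in years) has passed.
    """
    total = 0.0
    for year in range(horizon):
        mitigation = ((1 - capture_share) * max_abatement
                      if year >= mitigation_delay else 0.0)
        capture = (capture_share * max_abatement
                   if year >= capture_delay else 0.0)
        total += baseline - mitigation - capture
    return total


all_mitigation = cumulative_net_emissions(0.0)
even_split = cumulative_net_emissions(0.5)
all_capture = cumulative_net_emissions(1.0)
print(all_mitigation, even_split, all_capture)
```

Even with capture given the benefit of the doubt on effectiveness, every bit of effort shifted toward it raises cumulative emissions over the horizon, purely because it shows up later.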

One of those hurdles is cost. Why would anyone bother to pay for air capture, especially in cases where it’s a sure loser in terms of thermodynamics and capital costs? Altruism is not a likely candidate, so it’ll take a policy driver. There are essentially two choices: standards and emissions pricing.

A standard might mandate (as the EPA and California have) that new power plants above a certain emissions intensity must employ some kind of offsetting capture. If coal wants to stay in business, it has to ante up. The silly thing about this, apart from inevitable complexity, is that any technology that meets the standard without capture, like combined cycle gas electricity currently, pays 0 for its emissions, even though they too are harmful.

Similarly, you could place a subsidy or bounty on tons of CO2 captured. That would be perverse, because taxpayers would then have to fund capture – not likely a popular measure. The obvious alternative would be to price emissions in general – positive for emissions, negative for capture. Then all sources and sinks would be on a level playing field. That’s the way to go, but of course we ought to do it now, so that mitigation starts working, and air capture joins in later if and when it’s a viable competitor.
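
The level playing field is easy to write down. A minimal sketch, assuming a single price per ton applied with opposite sign to emissions and capture; the price, the plant names, and the tonnages are all hypothetical:

```python
def carbon_charge(emitted_t, captured_t, price_per_t):
    """Net payment under a symmetric carbon price.

    Positive means the operator pays; negative means the operator is paid.
    """
    return price_per_t * (emitted_t - captured_t)


PRICE = 30.0  # hypothetical $/tCO2

# (tons emitted, tons captured) for three made-up operators
plants = {
    "coal": (100.0, 0.0),
    "gas": (40.0, 0.0),
    "air_capture": (0.0, 10.0),
}

charges = {name: carbon_charge(e, c, PRICE) for name, (e, c) in plants.items()}
for name, charge in charges.items():
    print(f"{name}: {charge:+.0f}")
```

Note that the gas plant pays for its emissions here, unlike under an intensity standard it happens to meet, and the capture operator is paid out of emitters' charges rather than by taxpayers.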

I think it’s fine if people work on carbon capture and sequestration, as long as they don’t pretend that it’s anywhere near a plausible scale, or even remotely possible without comprehensive changes in incentives. I won’t spend my own time on a speculative, low-leverage policy when there are more effective, immediate and cheaper mitigation alternatives. And I’ll certainly never advise anyone to pursue a geoengineered world, any more than I’d advise them to keep smoking but invest in cancer research.