After I wrote my last post, it occurred to me that perhaps I should cut Ellis some slack. I still don’t think most people who ponder limits think of them as fixed. But, as a kind of shorthand, we sometimes talk about them that way. Consider my slides from the latest SD conference, in which I reflected on World Dynamics:

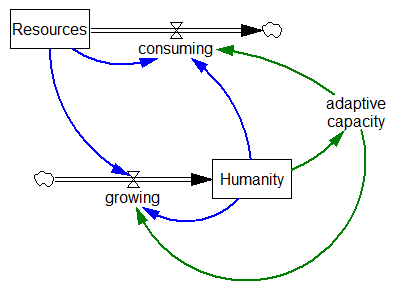

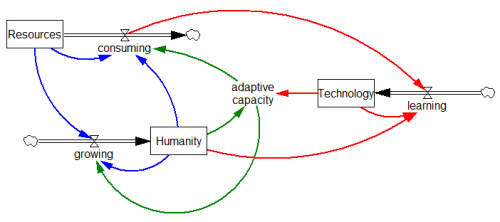

It would be easy to get the wrong impression here.


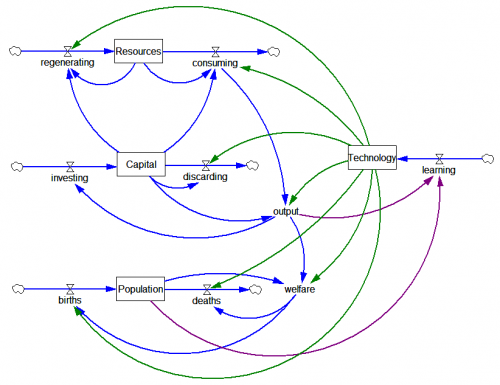

Of course, I was talking about World Dynamics, which doesn’t have an explicit technology stock – Forrester considered technology to be part of the capital accumulation process. That glosses over an important point, by fixing the ratios of economic activity to resource consumption and pollution. World3 shares this limitation, except in some specific technology experiments.

So, it’s really no wonder that, in 1973, it was hard to talk to economists, who were operating with exogenous technical progress (the Solow residual) and substitution along continuous production functions in mind.

Unlimited or exogenous technology doesn’t really make any more sense than no technology, so who’s right?

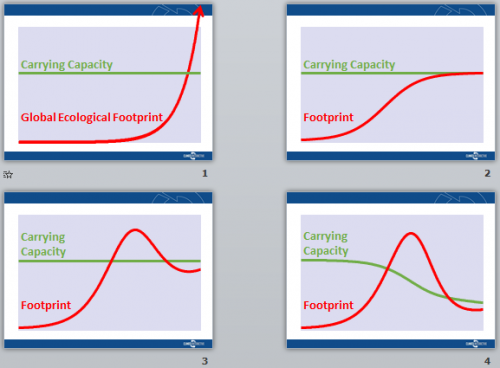

As I said last time, the answer boils down to whether technology proceeds faster than growth or not. That in turn depends on what you mean by “technology”. Narrowly, there’s fairly abundant evidence that the intensity of use (per capita or per unit of GDP) of a variety of materials is declining more slowly than the economy is growing. As a result, resource consumption (fossil fuels, metals, phosphorus, gravel, etc.) and persistent pollution (CO2, for example) are increasing steadily. By these metrics, sustainability requires reversing the relative magnitudes of the growth and technology trends.
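To make the arithmetic concrete, here is a minimal sketch that treats resource use as economic activity times resource intensity, with both following constant exponential trends. The function name `resource_use` and all of the rates are hypothetical, chosen only for illustration; they are not estimates from data and not a formulation taken from World Dynamics or World3.

```python
import math

def resource_use(years, growth_rate, intensity_decline_rate,
                 initial_activity=1.0, initial_intensity=1.0):
    """Return resource use for each year from 0 to `years`.

    Assumes resource use = activity * intensity, with activity growing
    exponentially and intensity declining exponentially (both assumptions).
    """
    path = []
    for t in range(years + 1):
        activity = initial_activity * math.exp(growth_rate * t)
        intensity = initial_intensity * math.exp(-intensity_decline_rate * t)
        path.append(activity * intensity)
    return path

# Illustrative rates only (fraction per year), not empirical estimates.
fixed_ratio = resource_use(50, growth_rate=0.03, intensity_decline_rate=0.0)
slow_tech = resource_use(50, growth_rate=0.03, intensity_decline_rate=0.01)
fast_tech = resource_use(50, growth_rate=0.03, intensity_decline_rate=0.04)

for label, path in [("fixed ratio", fixed_ratio),
                    ("tech slower than growth", slow_tech),
                    ("tech faster than growth", fast_tech)]:
    print(f"{label}: resource use after 50 years = {path[-1]:.2f}x initial")
```

Under these assumptions the net trend is just the difference between the two rates: resource use rises whenever growth outpaces the decline in intensity, and falls only when the ordering is reversed. The fixed-ratio case corresponds to a model with no explicit technology.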

But taking a broad view of technology, including product scope expansions and lifestyle, what does that mean? The consequences of these material trends don’t matter if we can upload ourselves into computers or escape to space fast enough. Space doesn’t look very exponential yet, and I haven’t really seen credible singularity metrics. This is really the problem with the Marchetti paper that Ellis links, describing a global carrying capacity of 1 trillion humans, with more room for nature than today, living in floating cities. The question we face is not, can we imagine some future global equilibrium with spectacular performance, but, can we get there from here?

Nriagu, Tales Told in Lead, Science

For the Romans, there was undoubtedly a more technologically advanced future state (modern Europe), but they failed to realize it, because social and environmental feedbacks bit first. So, while technology was important then as now, the possibility of a high tech future state does not guarantee its achievement.

For Ellis, I think this means that he has to specify much more clearly what he means by future technology and adaptive capacity. Will we geoengineer our way out of climate constraints, for example? For proponents of limits, I think we need to be clearer in our communication about the technical aspects of limits.

For all sides of the debate, models need to improve. Many aspects of technology remain inadequately formulated, and therefore many mysteries remain. Why does the diminishing adoption time for new technologies not translate to increasing GDP growth? What do technical trends look like when measured by welfare indices rather than GDP? To what extent does social IT change the game, vs. serving as the icing on a classical material cake?

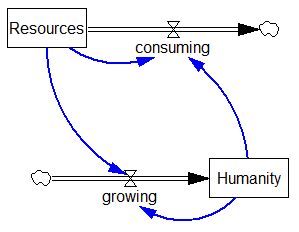

Of course, the fact that the green structure exists does not mean that the blue structure does not exist. It just means that there are multiple causes competing for dominance in this system.


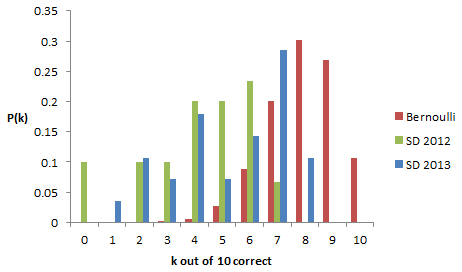

5 right is well above the typical performance of the public, but sadly this means that few among us are destined to be CEOs, who are often wildly overconfident (console yourself – abject failure on the quiz can make you a titan of industry).
