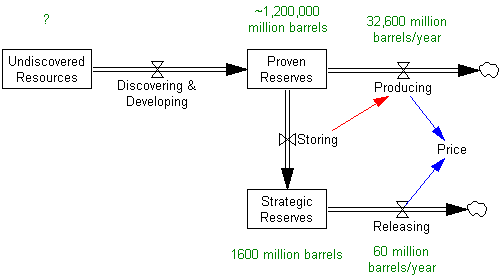

A coordinated release of emergency oil stockpiles is underway. It’s almost as foolish as that timeless chain email, the Great American Gasout (now migrated to Facebook, it seems), and for the same stock-flow reasons.

Like the Gasout, strategic reserve operations don’t do anything about demand; they just shuffle it around in time. Releasing oil does increase supply by augmenting production, which causes a short-term price break. But at some point you have to refill the reserve. All else equal, storing oil has to come at the expense of producing it for consumption, which means that price goes back up at some other time.
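For the stock-flow minded, here is a toy sketch of that release-then-refill bookkeeping. Everything in it is an illustrative assumption rather than a calibrated model: the flat production rate, the 30-day release and refill windows, the constant-elasticity price rule, and the elasticity value itself. The function name `simulate_release_and_refill` is made up for this example.

```python
def simulate_release_and_refill(
    production=88.0,      # assumed flat production, million barrels/day
    release_rate=2.0,     # assumed drawdown rate, million barrels/day
    release_days=30,      # assumed length of the release
    refill_days=30,       # assumed length of the later refill
    horizon=120,          # total days simulated
    elasticity=-0.1,      # assumed short-run price elasticity of demand
    base_price=100.0,     # arbitrary index price when supply == production
):
    """Track the reserve stock and a toy market price, day by day."""
    reserve_change = 0.0  # net change in the reserve stock since day 0
    rows = []
    for day in range(horizon):
        if day < release_days:
            flow = release_rate                                 # reserve -> market
        elif day >= horizon - refill_days:
            flow = -release_rate * release_days / refill_days   # market -> reserve
        else:
            flow = 0.0
        reserve_change -= flow
        supply = production + flow  # oil actually available for consumption
        # Constant-elasticity inverse demand: the price that clears this supply.
        price = base_price * (supply / production) ** (1.0 / elasticity)
        rows.append((day, supply, price))
    return rows, reserve_change


rows, reserve_change = simulate_release_and_refill()
total_supplied = sum(supply for _, supply, _ in rows)
print("net reserve change:", reserve_change)
print("total supplied to consumers:", total_supplied)
print("price during release:", round(rows[0][2], 1))
print("price during refill:", round(rows[-1][2], 1))
```

Once the reserve is back to its starting level, consumers have received exactly what was produced over the period, no more. The price dip during the release is paid back as a price bump during the refill, and the only thing that changed is the timing.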

The implicit mental model here is that governments are going to buy low and sell high, releasing oil at high prices when there’s a crisis, and storing it when peaceful market conditions return. I rather doubt that political entities are very good at such things, but more importantly, where are the prospects for cheap refills, given tight supplies, strategic behavior by OPEC, and (someday) global recovery? It’s not even clear that agencies were successful at keeping the release secret, so a few market players may have captured a hefty chunk of the benefits of the release.

Setting dynamics aside, the strategic reserve release is hardly big enough to matter – the 60 million barrels planned isn’t even a day of global production. It’s only 39 days of Libyan production. Even if you have extreme views on price elasticity, that’s not going to make a huge difference – unless the release is extended. But extending the release through the end of the year would consume almost a quarter of world strategic reserves, without any clear emergency at hand.
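A back-of-envelope check of those magnitudes is easy to run. Only the 60 million barrels and the 39-day figure come from the text above; the global production rate, the 30-day release window, the days remaining in the year, and the size of world strategic reserves are round-number assumptions, so treat the results as rough.

```python
# Figures taken from the text
RELEASE_BBL = 60e6   # planned release, barrels
LIBYA_DAYS = 39      # release expressed as days of Libyan production

# Assumptions (not from the text; round numbers for illustration)
GLOBAL_PROD_BBL_PER_DAY = 88e6   # assumed world oil production
RELEASE_WINDOW_DAYS = 30         # assumed duration of the planned release
DAYS_TO_YEAR_END = 190           # assumed days left if extended to year end
STRATEGIC_RESERVES_BBL = 1.6e9   # assumed world strategic reserves

# How many days of global production does the release cover?
days_of_global_production = RELEASE_BBL / GLOBAL_PROD_BBL_PER_DAY

# Libyan production rate implied by the 39-day figure
implied_libyan_prod = RELEASE_BBL / LIBYA_DAYS

# Extending the same daily rate through the end of the year
release_rate = RELEASE_BBL / RELEASE_WINDOW_DAYS
extended_release_bbl = release_rate * DAYS_TO_YEAR_END
share_of_reserves = extended_release_bbl / STRATEGIC_RESERVES_BBL

print(f"days of global production: {days_of_global_production:.2f}")
print(f"implied Libyan production: {implied_libyan_prod / 1e6:.2f} million bbl/day")
print(f"extended release: {extended_release_bbl / 1e6:.0f} million bbl")
print(f"share of strategic reserves: {share_of_reserves:.0%}")
```

Under these assumptions the release covers well under a day of global output, while an extension at the same rate would run to a few hundred million barrels, which is where the "almost a quarter" comes from.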

We should be saving those reserves for a real rainy day, and increasing the end-use price through taxes, to internalize environmental and security costs and recapture OPEC rents.