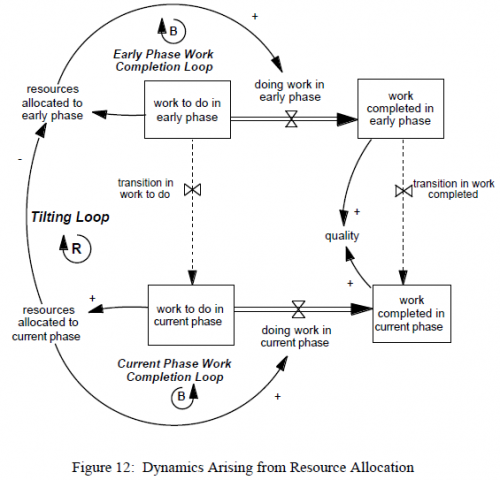

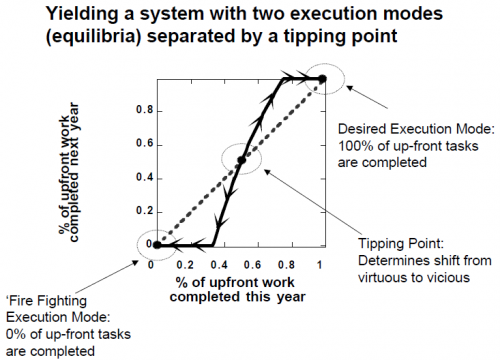

The tipping loop, a positive feedback that drives sequential or concurrent projects into permanent firefighting mode, is just one of several positive feedbacks that create project management traps. Here are some others:

- Rework – the rework cycle is central to project dynamics. Rework arises when things aren’t done right the first time. When errors are discovered, tasks have to be reworked, and there’s no guarantee that they’ll be done right the second time either. This creates a reinforcing loop that inflates the workload beyond what perfect execution would require.

- Brooks’ Law – adding resources to a late project makes it later. There are actually several feedback loops involved:

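  - Rookie effects: new resources take time to get up to speed. Until they do, they eat up the time of senior staff, decreasing output. They’re also likely to be more error-prone, creating more rework to be dealt with downstream.

  - Diseconomies of scale from communication overhead: the number of potential communication channels grows roughly with the square of team size, so coordination consumes a growing share of everyone’s time.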

- Burnout – under schedule pressure, it’s tempting to work harder and longer. That works briefly, but sustained overtime is likely to be counterproductive, because fatigue lowers productivity, raises error rates, and drives turnover.

- Congestion – in construction or assembly, a delay in early phases may not delay the arrival of materials from suppliers. Unused materials stack up, congesting the work site and slowing progress further.

- Dilution – trying to overcome stalled phases by tackling too many tasks in parallel thins resources to the point that overhead consumes all available time, and progress grinds to a halt.

- Hopelessness – death marches are no fun, and the mere fact that a project is going astray hurts morale, leading to decreased productivity and loss of resources as rats leave the sinking ship.
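Two of the mechanisms in the list above reduce to simple arithmetic. The sketch below is a minimal illustration under stated assumptions, not a model from the sources cited here: `total_work_with_rework` (a hypothetical helper) assumes every pass through a task has the same, independent chance of needing rework, so the total workload is a geometric series; `communication_channels` (also hypothetical) counts the pairwise communication paths in a team, the quantity behind Brooks’ communication-overhead argument.

```python
def total_work_with_rework(base_work: float, error_fraction: float) -> float:
    """Total work if each pass has the same chance `error_fraction` of needing rework.

    Assumption (hypothetical): errors are independent and every reworked
    task is as likely to be flawed as the original, so
    W * (1 + e + e^2 + ...) = W / (1 - e).
    """
    if not 0 <= error_fraction < 1:
        raise ValueError("error_fraction must be in [0, 1)")
    return base_work / (1 - error_fraction)


def communication_channels(team_size: int) -> int:
    """Pairwise communication paths among `team_size` people: n(n-1)/2."""
    if team_size < 0:
        raise ValueError("team_size must be non-negative")
    return team_size * (team_size - 1) // 2


if __name__ == "__main__":
    # 100 units of planned work with a 20% chance of rework on every pass
    print(total_work_with_rework(100, 0.2))
    # Channels for a team before and after doubling its size
    print(communication_channels(10), communication_channels(20))
```

With a 20% error rate, 100 units of planned work become 125, and doubling a team from 10 to 20 people raises the number of channels from 45 to 190. Real error rates are not constant, of course; schedule pressure pushes them up, which is what turns these loops into traps.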

Any number of things can contribute to schedule pressure that triggers these traps. Often the trigger is external, such as late-breaking change orders or regulatory mandates. However, it can also arise internally through scope creep. As long as a project appears to be on schedule (a supposition that’s likely to prove false in hindsight), it’s hard to resist additional feature requests or to suppress developers’ gold-plating urges.

Taylor & Ford integrate a number of these dynamics into a simple model of single-project tipping points. They generically characterize the “ripple effect” via a few parameters: one characterizes “the amount of impact that reworked portions of the project have on the total work required to complete the project” and another captures the effect of schedule pressure on generation of rework. They suggest a robust design approach that keeps projects out of trouble by ensuring that the vicious cycles created by these loops do not become dominant.
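To see how a tipping point can emerge from rework plus schedule pressure, here is a toy discrete-time simulation. It is a hypothetical sketch in the same spirit, not Taylor & Ford’s model: the function `simulate`, the linear error response, the fixed `time_allowed` window, and every parameter value are assumptions chosen for illustration. Each step, the team completes up to `capacity` units; a fraction `error` of that output is flawed and comes back as `ripple` units of new work per flawed unit, and `error` rises once the required completion rate exceeds capacity.

```python
def simulate(initial_work: float,
             capacity: float = 10.0,
             time_allowed: float = 10.0,
             base_error: float = 0.2,
             pressure_sensitivity: float = 0.5,
             ripple: float = 2.0,
             max_error: float = 0.95,
             done_threshold: float = 0.01,
             steps: int = 200) -> list[float]:
    """Work-remaining trajectory of a hypothetical rework/schedule-pressure model."""
    work = initial_work
    history = [work]
    for _ in range(steps):
        if work < done_threshold:
            break  # treat a tiny remainder as "project complete"
        # Schedule pressure: work remaining relative to what capacity can
        # finish in a fixed time window (a deliberate simplification).
        pressure = work / (capacity * time_allowed)
        # Hypothetical linear response: errors rise once pressure exceeds 1.
        error = min(max_error,
                    base_error + pressure_sensitivity * max(0.0, pressure - 1.0))
        completed = min(work, capacity)
        # Flawed output returns as rework, amplified by `ripple` units of new
        # work per flawed unit (a stand-in for knock-on "ripple" effects).
        work = work - completed + completed * error * ripple
        history.append(work)
    return history


if __name__ == "__main__":
    for start in (100, 200):
        trajectory = simulate(start)
        print(f"start={start}: {len(trajectory) - 1} steps, "
              f"final work remaining {trajectory[-1]:.3f}")
```

The loop gain is `error * ripple`; it reaches 1 at a pressure of 1.6, so with these made-up numbers a project that starts below roughly 160 units of work drains to completion, while one that starts above it grows without bound. `simulate(100)` finishes well within the horizon, whereas `simulate(200)` gains work every step. A robust design, in this toy framing, keeps the loop gain comfortably below 1 even under plausible pressure.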

Because projects are complicated nests of feedback, it’s not surprising that we manage them poorly. Cognitive biases and learned heuristics can exacerbate the effect of vicious cycles arising from the structure of the work itself. For example,

> … many organizations reward and promote engineers based on their ability to save troubled projects. Consider, for example, one senior manager’s reflection on how developers in his organizations were rewarded:
>
> > Occasionally there is a superstar of an engineer or a manager that can take one of these late changes and run through the gauntlet of all the possible ways that it could screw up and make it a success. And then we make a hero out of that person. And everybody else who wants to be a hero says “Oh, that is what is valued around here.” It is not valued to do the routine work months in advance and do the testing and eliminate all the problems before they become problems. …
>
> … allowing managers to “save” troubled projects, and therefore receive accolades and benefits, creates a situation in which, for those interested in advancement, there is little incentive to execute a project properly from start to finish. While allowing such heroics may help in the short run, the long run health of the development system is better served by not rewarding them.

– Repenning, Gonçalves & Black (2001) CMR

> … much of the complexity of concurrent development—and the implementation failures that plague many organizations—arises from interactions between the technical and behavioral dimensions. We use a dynamic project model that explicitly represents these interactions to investigate how a “Liar’s Club”—concealing known rework requirements from managers and colleagues—can aggravate the “90% syndrome,” a common form of schedule failure, and disproportionately degrade schedule performance and project quality.

– Sterman & Ford (2003) Concurrent Engineering

> Once caught in a downward spiral, managers must make some attribution of cause. The psychology literature also contains ample evidence suggesting that managers are more likely to attribute the cause of low performance to the attitudes and dispositions of people working within the process rather than to the structure of the process itself …. Thus, as performance begins to decline due to the downward spiral of fire fighting, managers are not only unlikely to learn to manage the system better, they are also likely to blame participants in the process. To make matters even worse, the system provides little evidence to discredit this hypothesis. Once fire fighting starts, system performance continues to decline even if the workload returns to its initial level. Further, managers will observe engineers spending a decreasing fraction of their time on up-front activities like concept development, providing powerful evidence confirming the managers’ mistaken belief that engineers are to blame for the declining performance.
>
> Finally, having blamed the cause of low performance on those who work within the process, what actions do managers then take? Two are likely. First, managers may be tempted to increase their control over the process via additional surveillance, more detailed reporting requirements, and increasingly bureaucratic procedures. Second, managers may increase the demands on the development process in the hope of forcing the staff to be more efficient. The insidious feature of these actions is that each amounts to increasing resource utilization and makes the system more prone to the downward spiral. Thus, if managers incorrectly attribute the cause of low performance, the actions they take both confirm their faulty attribution and make the situation worse rather than better. The end result of this dynamic is a management team that becomes increasingly frustrated with an engineering staff that they perceive as lazy, undisciplined, and unwilling to follow a pre-specified development process, and an engineering staff that becomes increasingly frustrated with managers that they feel do not understand the realities of the system and, consequently, set unachievable objectives.
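Why does “increasing resource utilization” make a system more fragile? One standard way to see it, swapped in here purely as an illustration and not taken from the quoted source, is the M/M/1 queue: assuming random (Poisson) arrivals and exponential service times, the mean number of jobs in the system is ρ/(1 − ρ), which explodes as utilization ρ approaches 1. The helper name `mm1_items_in_system` is hypothetical.

```python
def mm1_items_in_system(utilization: float) -> float:
    """Mean number of jobs in an M/M/1 queue: rho / (1 - rho).

    Assumes Poisson arrivals, exponential service, one server, and a
    stable queue (utilization strictly below 1).
    """
    if not 0 <= utilization < 1:
        raise ValueError("utilization must be in [0, 1)")
    return utilization / (1 - utilization)


if __name__ == "__main__":
    for rho in (0.5, 0.8, 0.9, 0.95):
        print(f"utilization {rho:.2f}: {mm1_items_in_system(rho):.1f} jobs in system")
```

Going from 80% to 95% utilization takes the average backlog from 4 jobs to 19. Development organizations are not M/M/1 queues, but the qualitative lesson carries over: squeezing out the last bits of slack leaves no capacity to absorb surprises, so small disturbances grow into fires.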

There’s a long history of using system dynamics (SD) models to solve these problems, or to resolve conflicts over attribution after the fact.