Box’s famous comment, that “all models are wrong,” gets repeated ad nauseam (even by me). I think it’s essential to be aware of this in the sloppy sciences, but it does a disservice to modeling and simulation in general.

As far as I’m concerned, a lot of models are basically right. I recently worked with some kids on an air track experiment in physics. We timed the acceleration of a sled released from various heights, and plotted the data. Then we used a quadratic fit, based on a simple dynamic model, to predict the next point. We were within a hundredth of a second, confirmed by video analysis.

Sure, we omitted lots of things, notably air resistance and relativity. But so what? There’s no useful sense in which the model was “wrong” anywhere near the conditions of the experiment. (Not surprisingly, you can find a few cranks who contest Newton’s laws anyway.)
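For the curious, here is roughly what that kind of fit looks like in code. This is a minimal sketch, not our actual classroom analysis: I'm assuming the fitted relationship is distance traveled versus elapsed time, d = c·t², for a sled starting from rest under constant acceleration. The data are synthetic and noise-free, generated from a made-up acceleration (`ASSUMED_ACCEL`), and the function names are hypothetical.

```python
import math


def fit_quadratic_through_origin(times, distances):
    """Least-squares estimate of c in d = c * t**2 (no linear or constant term)."""
    numerator = sum(d * t**2 for t, d in zip(times, distances))
    denominator = sum(t**4 for t in times)
    return numerator / denominator


def predict_time(c, distance):
    """Invert d = c * t**2 to get the time needed to cover `distance`."""
    return math.sqrt(distance / c)


# Synthetic stand-in for measurements: NOT real data from the experiment.
ASSUMED_ACCEL = 0.5  # m/s^2, a hypothetical value chosen for illustration
distances = [0.2, 0.4, 0.6, 0.8]  # m, hypothetical release distances
times = [math.sqrt(2 * d / ASSUMED_ACCEL) for d in distances]  # from d = a*t^2/2

c = fit_quadratic_through_origin(times, distances)
next_time = predict_time(c, 1.0)  # predict the "next point" at 1.0 m

print(f"fitted c = {c:.4f} m/s^2, implied acceleration = {2 * c:.4f} m/s^2")
print(f"predicted time for 1.0 m = {next_time:.3f} s")
```

With real stopwatch data the fit would not be exact, but the point stands: a one-parameter model with air resistance and relativity left out is plenty to predict the next run.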

I think a lot of uncertain phenomena in social sciences operate on a backbone of the same kind of “physics.” The future behavior of the government is quite unpredictable, but there isn’t much uncertainty about accounting, e.g., that increasing the deficit increases the debt.

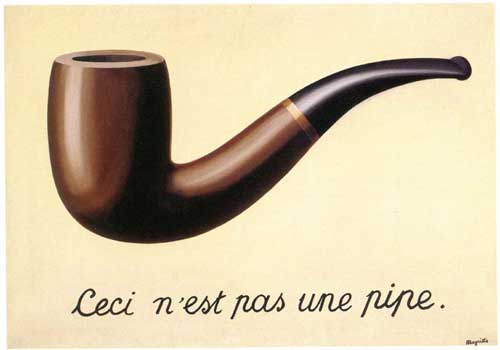
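The accounting backbone is simple enough to write down directly. Here is a minimal sketch of the stock-flow identity, debt next period = debt this period + deficit, ignoring valuation effects and other adjustments. The function name and the numbers are hypothetical, chosen only to illustrate the bookkeeping.

```python
def project_debt(initial_debt, deficits):
    """Accumulate a debt stock from a sequence of deficit flows.

    A positive deficit raises the debt; a negative one (a surplus) lowers it.
    Returns the debt level at the start and after each period.
    """
    debt = initial_debt
    path = [debt]
    for deficit in deficits:
        debt += deficit  # the stock changes only through the flow
        path.append(debt)
    return path


# Hypothetical numbers, in arbitrary currency units.
path = project_debt(100.0, [10.0, 12.0, -5.0])
print(path)
```

Which deficits a government will actually run is the uncertain part. Given the deficits, the debt path follows with no uncertainty at all.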

The domain of wrong but useful models remains large (within an even bigger sea of simple ignorance), but I think more and more things are falling into the category of models that are basically right. The trick is to be able to spot the difference. Some people clearly can’t:

> A&G provide no formal method to distinguish between situations in which models yield useful or spurious forecasts. In an earlier paper, they claimed rather broadly,
>
> ‘To our knowledge, there is no empirical evidence to suggest that presenting opinions in mathematical terms rather than in words will contribute to forecast accuracy.’ (page 1002)
>
> This statement may be true in some settings, but obviously not in general. There are many situations in which mathematical models have good predictive power and outperform informal judgments by a wide margin.

I wonder how well one could do with verbal predictions of a simple physical system? Score one for the models.