File under “this would be funny if it weren’t frightening.”

HOUSE BILL No. 2366

By Committee on Energy and Environment

(a) No public funds may be used, either directly or indirectly, to promote, support, mandate, require, order, incentivize, advocate, plan for, participate in or implement sustainable development.

(2) “sustainable development” means a mode of human development in which resource use aims to meet human needs while preserving the environment so that these needs can be met not only in the present, but also for generations to come, but not to include the idea, principle or practice of conservation or conservationism.

Surely it’s not the “resource use aims to meet human needs” part that the authors find objectionable, so it must be the “preserving the environment so that these needs can be met … for generations to come” that they reject. The courts are going to have a ball developing a legal test separating that from conservation. I guess they’ll have to draw a line that distinguishes “present” from “generations to come” and declares that conservation is for something other than the future. Presumably this means that Kansas must immediately abandon all environment and resource projects with a payback time of more than a year or so.

But why stop with environment and resource projects? Kansas could simply set its discount rate for public projects to 100%, thereby terminating all but the most “present” of its investments in infrastructure, education, R&D and other power grabs by generations to come.
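To see why a 100% discount rate would do the job, here is a minimal sketch of the arithmetic. The function names and the example project (cost 100 now, benefit 30 per year for 30 years) are illustrative assumptions, not anything from the bill; the discounting formula is the standard one with annual compounding.

```python
def present_value(cash_flow: float, years: int, rate: float) -> float:
    """Discount a single cash flow received `years` from now back to today."""
    return cash_flow / (1.0 + rate) ** years


def npv(cost_now: float, annual_benefit: float, horizon_years: int, rate: float) -> float:
    """Net present value: upfront cost now, equal benefits at the end of each year."""
    benefits = sum(
        present_value(annual_benefit, t, rate) for t in range(1, horizon_years + 1)
    )
    return benefits - cost_now


# Hypothetical project: costs 100 today, returns 30 a year for 30 years.
for rate in (0.03, 1.00):
    print(f"rate={rate:.0%}  NPV={npv(100, 30, 30, rate):.1f}")
```

At a 100% rate, each year's benefit is worth half of the previous year's, so even an endless benefit stream is worth no more than one year's benefit today. A project passes only if it pays for itself within about a year, which is the "payback time of more than a year or so" cutoff taken to its conclusion.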

Another amusing contradiction:

(b) Nothing in this section shall be construed to prohibit the use of public funds outside the context of sustainable development: (1) For planning the use, development or extension of public services or resources; (2) to support, promote, advocate for, plan for, enforce, use, teach, participate in or implement the ideas, principles or practices of planning, conservation, conservationism, fiscal responsibility, free market capitalism, limited government, federalism, national and state sovereignty, individual freedom and liberty, individual responsibility or the protection of personal property rights;

So, what happens if Kansas decides to pursue conservation the libertarian way, by allocating resource property rights to create markets that are now missing? Is that sustainable development, or promotion of free market capitalism? More fun for the courts.

Perhaps this is all just a misguided attempt to make the Montana legislature look sane by comparison.

h/t Bloomberg via George Richardson

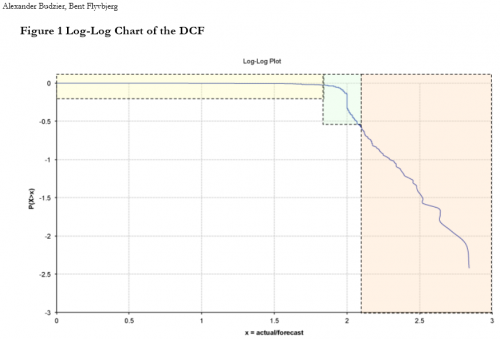

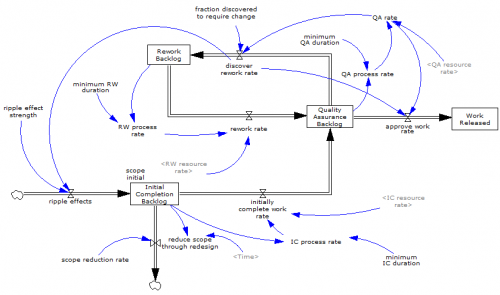

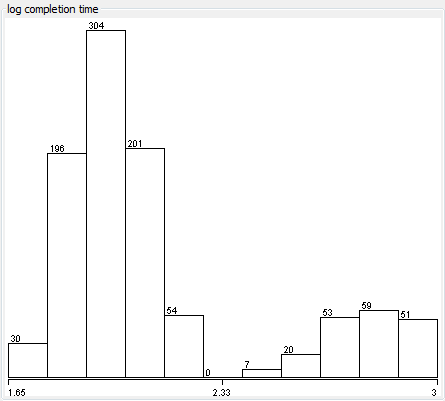

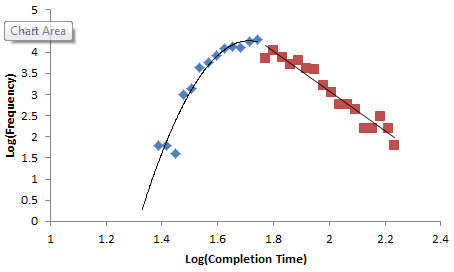

Normal+uniformly-distributed uncertainty in project estimation, productivity and ripple/rework effects generates a lognormal-ish left tail (parabolic on the log-log axes above) and a heavy power-law right tail.
