I’ve been watching the debate over AI with some amusement, as if it were some other planet at risk. The Musk-Zuckerberg kerfuffle is the latest installment. Ars Technica thinks they’re both wrong:

> At this point, these debates are largely semantic.

I don’t see how anyone could live through the last few years and fail to notice that networking and automation have enabled an explosion of fake news, filter bubbles and other information pathologies. These are absolutely policy relevant, and smarter AI is poised to deliver more of what we need least. The problem is here now, not from some impending future singularity.

Ars gets one point sort of right:

> Plus, computer scientists have demonstrated repeatedly that AI is no better than its datasets, and the datasets that humans produce are full of errors and biases. Whatever AI we produce will be as flawed and confused as humans are.

I don’t think the data itself is really the problem; what’s really problematic is the assumptions the data is treated with, and the context in which that happens. In any case, automating flawed aspects of ourselves is not benign!

Here’s what I think is going on:

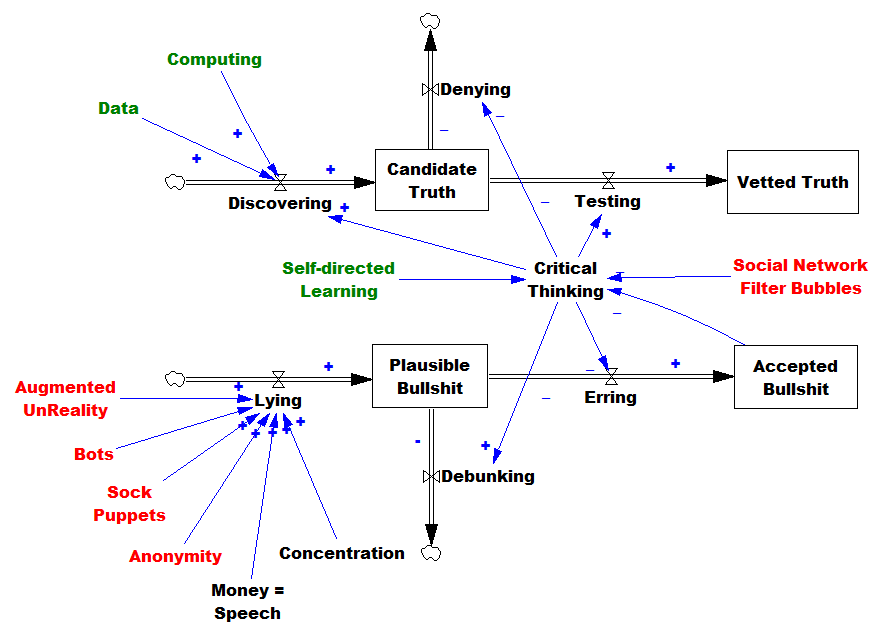

AI, and more generally computing and networks, are doing some good things. More data and computing power accelerate the discovery of truth. But truth is still elusive and expensive. On the other hand, AI is making bullsh!t really cheap (pardon the technical jargon). There are many mechanisms by which this occurs:

- CGI and digital editing make it possible to fake anything (“augmented unreality” above).

- Bots that can pass the crude Turing test of social media streams produce disinformation far faster than humans can.

- Sockpuppet automation platforms assist bad actors at disinforming.

- Anonymity limits the effectiveness of reputation.

- Social networks make it easy for people to coalesce into tribes, rejecting information that might disconfirm their biases.

These amplifiers of disinformation serve increasingly concentrated wealth and power elites that are isolated from the negative consequences and benefit from fueling the process. We wind up wallowing in a sea of information pollution (the deadliest among the sins of managing complex systems).

As BS becomes more prevalent, various reinforcing mechanisms start kicking in. Accepted falsehoods erode critical thinking abilities, and promote the rejection of ideas like empiricism that were the foundation of the Enlightenment. The proliferation of BS requires more debunking, taking time away from discovery. A general erosion of trust makes it harder to solve problems, opening the door for opportunistic rent-seeking non-solutions.

I think it’s a matter of survival for us to do better at critical thinking, so we can shift the balance between truth and BS. That might be one area where AI could safely assist. We have other assets as well, like the explosion of online learning opportunities. But I think we also need some cultural solutions, like better management of trust and anonymity, brakes on concentration, sanctions for lying, rewards for prediction, and more time for reflection.

Right on the money, Tom! Moreover, we are still far from understanding our own cognition, let alone making machines that outsmart humans. This is nothing but hype energized by deep learning, Watson, etc. We are still far from AI that comes close to our capabilities. Nevertheless, many rent seekers are surfing on this wave and spreading much BS. That is dangerous!

Exactly – we’re mechanizing rent seeking and our own cognitive biases.

Like any technology, it can be used for good or bad purposes. The problem so far is that AI has few legitimate uses in social/political discourse (analysis and creative media being the interesting exceptions), but it’s really great for generating disinformation.

Put this in combo with this: http://www.motherjones.com/politics/2017/08/trump-nonstop-lies/

We’re on a dark road.

The Economist has a new related article, “Fake news: you ain’t seen nothing yet”: https://www.economist.com/news/science-and-technology/21724370-generating-convincing-audio-and-video-fake-events-fake-news-you-aint-seen

Sounds like people are afraid to see both sides.

I find it amusing that a forum like this is so ill-informed about how much AI is already running the planet, doing things faster and at a far greater scale than a normal being can.

Ignorance is always bliss.

I find it amusing that at least one AI cheerleader is willing to make thoughtless attributions but lacks the courage to use a real name.