I think the many chapters of health care changes in the Ryan proposal are actually a distraction from the primary change. It’s this:

- Provides individual income tax payers a choice of how to pay their taxes – through existing law, or through a highly simplified code …

- Simplifies tax rates to 10 percent on income up to $100,000 for joint filers, and $50,000 for single filers; and 25 percent on taxable income above these amounts. … [A minor quibble: it’s stupid to have a stepwise tax rate, especially with a huge jump from 10 to 25%. Why can’t Congress get a grip on simple ideas like piecewise linearity?]

- Eliminates the alternative minimum tax [AMT].

- Promotes saving by eliminating taxes on interest, capital gains, and dividends; also eliminates the death tax.

- Replaces the corporate income tax – currently the second highest in the industrialized world – with a border-adjustable business consumption tax of 8.5 percent. …

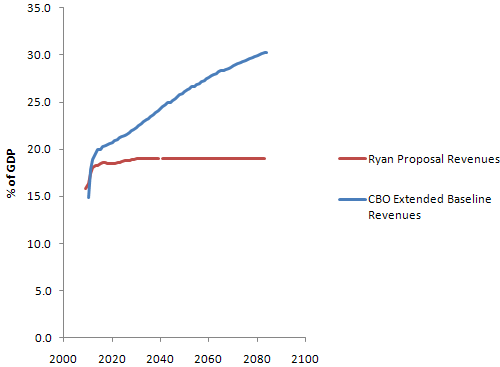

This ostensibly results in a revenue trajectory that rises to a little less than 19% of GDP, roughly the postwar average. The CBO didn’t analyze this; it used a trajectory from Ryan’s staff. The numbers appear to me to be delusional.

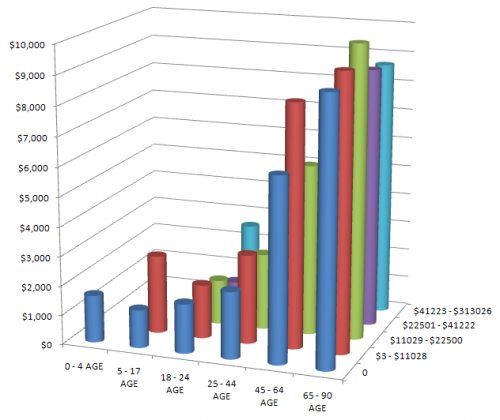

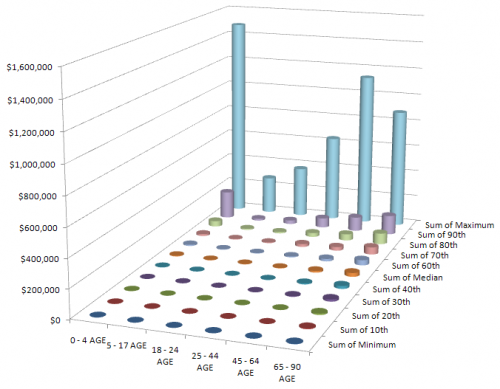

For sub-$50k returns in the new 10% bracket, this does not appear to be a break. Over two-thirds of those returns currently pay less than a 5% average tax rate. It’s not clear what the distribution of income is within this bracket, but it appears that an individual would only have to make about $25k to be worse off than the median earner. The same appears to be true in the $100k-200k bracket. A $150k return with a $39k exemption for a family of four would pay 18.5% on average, while the current median is 10-15%. This is certainly not a benefit to wage earners, though the net effect is ambiguous (to me at least) because of the change in treatment of asset income.
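As a rough check on the $150k example, here is a minimal sketch of the simplified schedule under two readings. The function names are hypothetical, and which reading the bill intends is an assumption either way. Applying 25% to all $111k of taxable income reproduces the 18.5% average rate quoted above. Reading the rates as marginal (10% up to the threshold, 25% only on the excess) gives a lower average rate, and the result then depends on whether the $100k joint or $50k single threshold applies.

```python
def marginal_tax(taxable, threshold, low=0.10, high=0.25):
    """Tax if 10% applies up to the threshold and 25% only to the excess."""
    below = min(taxable, threshold)
    above = max(taxable - threshold, 0.0)
    return low * below + high * above


def flat_high_tax(taxable, high=0.25):
    """Tax if 25% is applied to every dollar of taxable income."""
    return high * taxable


gross = 150_000.0
exemption = 39_000.0           # family of four, figure from the text
taxable = gross - exemption    # 111,000

# Average rates are expressed relative to gross income, as in the text.
rate_flat = flat_high_tax(taxable) / gross
rate_joint = marginal_tax(taxable, 100_000.0) / gross
rate_single = marginal_tax(taxable, 50_000.0) / gross

print(f"25% on all taxable income:     {rate_flat:.1%}")
print(f"marginal, $100k joint cutoff:  {rate_joint:.1%}")
print(f"marginal, $50k single cutoff:  {rate_single:.1%}")
```

The comparison with the current 10-15% median is sensitive to this choice, so it is worth pinning down before drawing conclusions about the $100k-200k bracket.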

The elimination of tax on interest, dividends and capital gains is really the big story here. For returns over $200k, wages are less than 42% of AGI. Interest, dividends and gains are over 35%. The termination of asset taxes means that taxes fall by about a third on high income returns (the elimination of the mortgage interest deduction does little to change that). The flat 25% marginal rate can’t possibly make up for this, because it’s not different enough from the ~20% median effective tax rate in that bracket. For the top 400 returns in the US, exemption of asset income would reduce the income basis by 70%, and reduce the marginal tax rate from the ballpark of 35% to 25%.
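The base-and-rate arithmetic in this paragraph can be sketched in a few lines. This is a crude approximation, not a distributional model: it assumes tax is simply rate times base, treats the quoted marginal rates as if they were average rates, and ignores deductions. The helper name `tax_ratio` is hypothetical.

```python
def tax_ratio(base_cut, old_rate, new_rate):
    """New tax as a fraction of old tax when the taxable base shrinks
    by `base_cut` and the rate moves from `old_rate` to `new_rate`."""
    return (1.0 - base_cut) * new_rate / old_rate


# Returns over $200k: roughly 35% of AGI is interest, dividends and gains.
# Holding the ~20% effective rate fixed isolates the effect of the base.
over_200k = tax_ratio(base_cut=0.35, old_rate=0.20, new_rate=0.20)

# Top 400 returns: base down 70%, rate from roughly 35% to 25%.
top_400 = tax_ratio(base_cut=0.70, old_rate=0.35, new_rate=0.25)

print(f"over $200k: tax falls by about {1 - over_200k:.0%}")
print(f"top 400:    tax falls by about {1 - top_400:.0%}")
```

Under these assumptions the cut for returns over $200k is about a third, matching the text, while the top 400 would keep only about a fifth of their current liability.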

It seems utterly delusional to imagine that this somehow returns to something resembling the postwar average tax burden, unless setting taxes on assets to zero is accompanied by a net increase in other taxes (i.e. wages, which constitute about 70% of total income). That in turn implies a tax increase for the lower brackets, a substantial cut on returns over $200k, and a ginormous cut for the very highest earners.

This is all exacerbated by the simultaneous elimination of corporate taxes, which are already historically low and presumably have roughly the same incidence as individual asset income, making the cut another gift to the top decile. Rates fall from 35% at the margin to 8.5% on “consumption” (a misnomer – the title calls it a “business consumption tax” but the language actually taxes “gross profits”, which is in turn a misnomer because investment is treated as a current-year expense). The repeal of the estate tax, of which 80% is currently collected on estates over $5 million (essentially 0% below $2 million), has a similar distributional effect.

I think it’s reasonable to discuss cutting corporate taxes, which do appear to be high cross-sectionally (that is, relative to other industrialized countries). But if you’re going to do that, you need to somehow maintain the distributional characteristics of the tax system, or come up with a rational reason not to, in the face of increasing inequity of wealth.

I can’t help wondering whether there’s any analysis behind these numbers, or if they were just pulled from a hat by lawyers and lobbyists. This simply isn’t a serious proposal, except for people who are serious about top-bracket tax cuts and drowning the government in a bathtub.

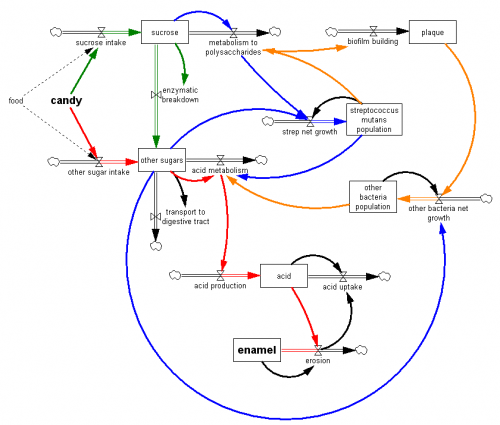

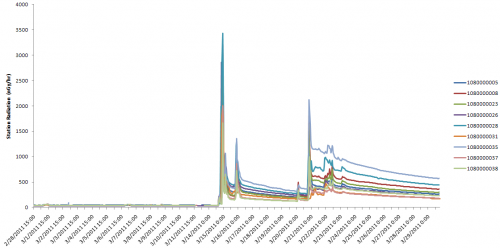

Given that the IRS knows the distribution of individual income in exquisite detail, and that much of the aggregate data needed to analyze proposals like those above is readily available on the web, it’s hard to fathom why anyone would even entertain the idea of discussing a complex revenue proposal like Ryan’s without some serious analytic support and visualization. This isn’t rocket science, or even bathtub dynamics. It’s just basic accounting – perfect stuff for a spreadsheet. So why are we reviewing this proposal with 19th-century tools – an overwhelming legal text surrounded by a stew of bogus rhetoric?

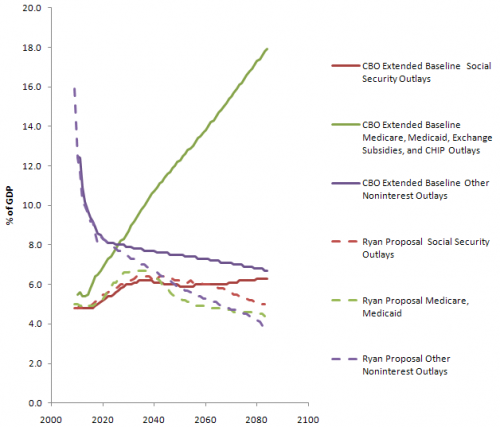

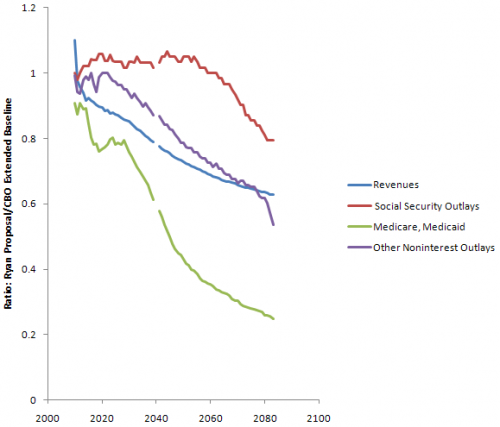

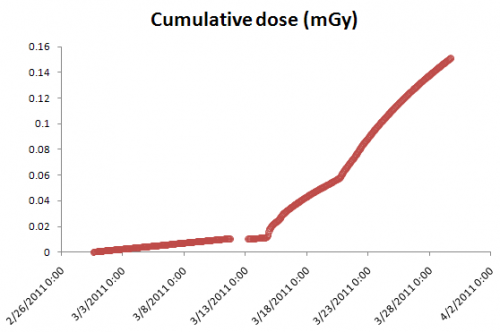

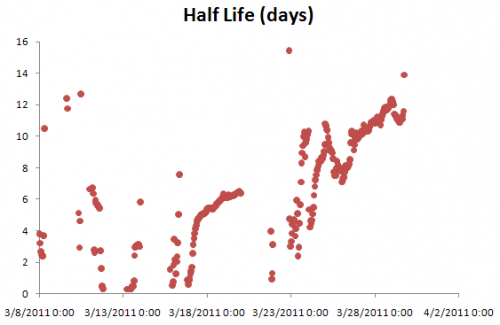

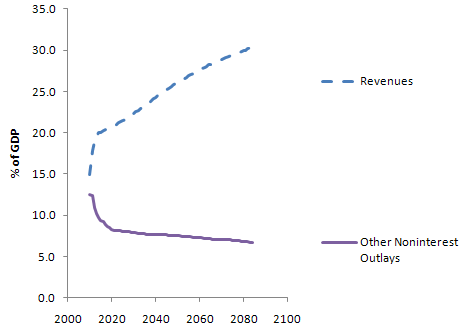

There’s a huge transient in each, due to the current financial mess. (Actually this behavior is to some extent deliberately Keynesian – the loss of revenue in a recession is amplified over the contraction of GDP, because people fall into lower tax brackets and profits are more volatile than gross activity. Increased borrowing automatically takes up the slack, maintaining more stable spending.) The transient makes it tough to sort out what’s real change, and what is merely the shifting sands of the GDP denominator. This graph also points out another irritation: there’s no history. Is this plausible, or unprecedented behavior?
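The denominator effect described here can be illustrated with a toy calculation. It assumes revenue responds to GDP with a constant elasticity greater than one. The 18% starting share, the 3% contraction and the elasticity of 1.5 are purely illustrative values, not estimates, and the function name is hypothetical.

```python
def revenue_share_after_shock(share, gdp_change, elasticity):
    """Revenue as a share of GDP after a one-off change in GDP,
    assuming revenue changes by elasticity * gdp_change."""
    revenue_change = elasticity * gdp_change
    return share * (1.0 + revenue_change) / (1.0 + gdp_change)


share_before = 0.18    # illustrative starting revenue/GDP ratio
gdp_change = -0.03     # illustrative 3% contraction

# Revenue falls faster than GDP, so the ratio itself drops.
amplified = revenue_share_after_shock(share_before, gdp_change, elasticity=1.5)
# With unit elasticity, revenue and GDP move together and the ratio is flat.
proportional = revenue_share_after_shock(share_before, gdp_change, elasticity=1.0)

print(f"elasticity 1.5: {amplified:.2%} of GDP")
print(f"elasticity 1.0: {proportional:.2%} of GDP")
```

The point is only that a revenue-to-GDP chart mixes two moving quantities, so a dip in the ratio during a recession does not by itself indicate a policy change.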

It’s not clear how the 19% revenue level arises; the CBO used a trajectory from Ryan’s staff, not its own analysis. Ryan’s proposal