Fred Krupp, President of EDF, has an op-ed on climate policy in the WSJ. I have to give him credit for breaking into a venue that is staunchly ignorant of the realities of climate change. An excerpt:

> If both sides can now begin to agree on some basic propositions, maybe we can restart the discussion. Here are two:
>
> The first will be uncomfortable for skeptics, but it is unfortunately true: Dramatic alterations to the climate are here and likely to get worse—with profound damage to the economy—unless sustained action is taken. As the Economist recently editorialized about the melting Arctic: “It is a stunning illustration of global warming, the cause of the melt. It also contains grave warnings of its dangers. The world would be mad to ignore them.”
>
> The second proposition will be uncomfortable for supporters of climate action, but it is also true: Some proposed climate solutions, if not well designed or thoughtfully implemented, could damage the economy and stifle short-term growth. As much as environmentalists feel a justifiable urgency to solve this problem, we cannot ignore the economic impact of any proposed action, especially on those at the bottom of the pyramid. For any policy to succeed, it must work with the market, not against it.
>
> If enough members of the two warring climate camps can acknowledge these basic truths, we can get on with the hard work of forging a bipartisan, multi-stakeholder plan of action to safeguard the natural systems on which our economic future depends.

I wonder, though, if the price of admission was too high. Krupp equates two risks: climate impacts, and policy side effects. But this is a form of false balance – these risks are not in the same league.

Policy side effects are certainly real – I’ve warned against inefficient policies multiple times (e.g., overuse of standards). But the effects of a policy are readily visible to well-defined constituencies, mostly short term, and diverse across jurisdictions with different implementations. This makes it easy to learn what’s working and to stop doing what’s not working (and there’s never a shortage of advocates for the latter), without suffering large cumulative effects. Most of the inefficient approaches (like banning the bulb) are economically minuscule.

Climate risk, on the other hand, accrues largely to people in faraway places, many of whom aren’t even born yet. It’s subject to reinforcing feedbacks (like civil unrest) and to big uncertainties, known and unknown, that lend it a heavy tail of bad outcomes, and those outcomes are not economically marginal.

The net balance of these different problem characteristics is that there’s little chance of catastrophic harm from climate policy, but a substantial chance from failure to have a climate policy. There’s also almost no chance that we’ll implement a too-stringent climate policy, or that it would stick if we did.

The ultimate irony is that EDF’s preferred policy is cap & trade, which trades illusory environmental certainty for considerable economic inefficiency.

Does this kind of argument reach a wavering middle ground? Or does it fail to convince skeptics, while weakening the position of climate policy proponents by conceding straw-man growth arguments?

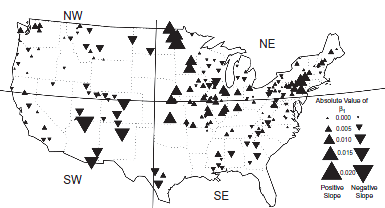

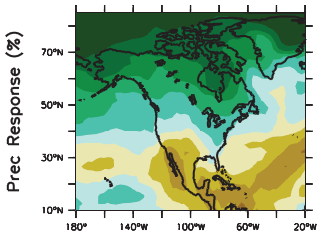

The pattern looks suspiciously like the paper’s spatial distribution of regression coefficients: