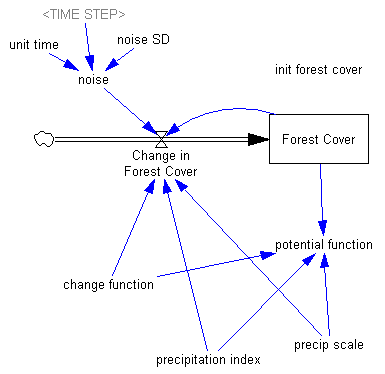

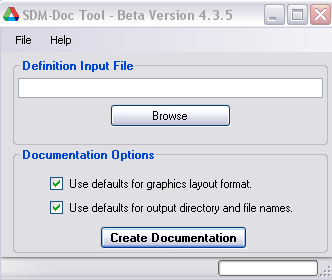

This is a logistic growth model for Facebook’s user base, with a very simple financial projection attached. It’s inspired by:

Quis pendit ipsa pretia: facebook valuation and diagnostic of a bubble based on nonlinear demographic dynamics

Peter Cauwels, Didier Sornette

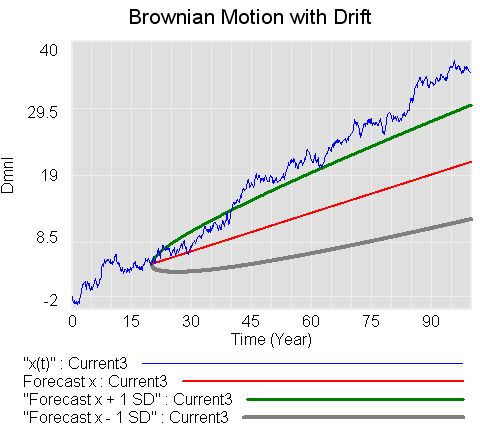

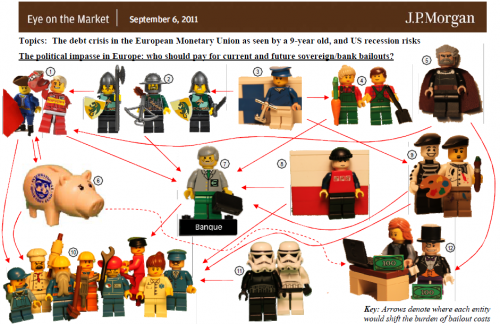

We present a novel methodology to determine the fundamental value of firms in the social-networking sector based on two ingredients: (i) revenues and profits are inherently linked to its user basis through a direct channel that has no equivalent in other sectors; (ii) the growth of the number of users can be calibrated with standard logistic growth models and allows for reliable extrapolations of the size of the business at long time horizons. We illustrate the methodology with a detailed analysis of facebook, one of the biggest of the social-media giants. There is a clear signature of a change of regime that occurred in 2010 on the growth of the number of users, from a pure exponential behavior (a paradigm for unlimited growth) to a logistic function with asymptotic plateau (a paradigm for growth in competition). We consider three different scenarios, a base case, a high growth and an extreme growth scenario. Using a discount factor of 5%, a profit margin of 29% and 3.5 USD of revenues per user per year yields a value of facebook of 15.3 billion USD in the base case scenario, 20.2 billion USD in the high growth scenario and 32.9 billion USD in the extreme growth scenario. According to our methodology, this would imply that facebook would need to increase its profit per user before the IPO by a factor of 3 to 6 in the base case scenario, 2.5 to 5 in the high growth scenario and 1.5 to 3 in the extreme growth scenario in order to meet the current, widespread, high expectations. …

(via the arXiv blog)

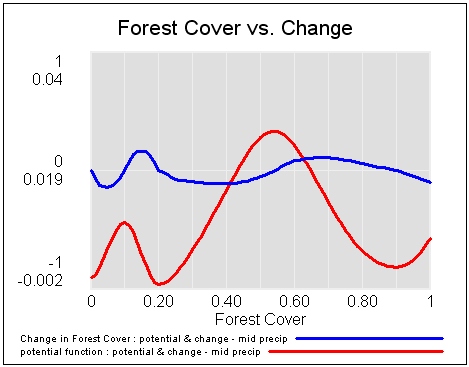

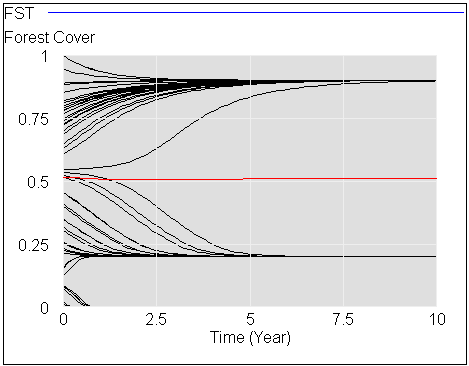

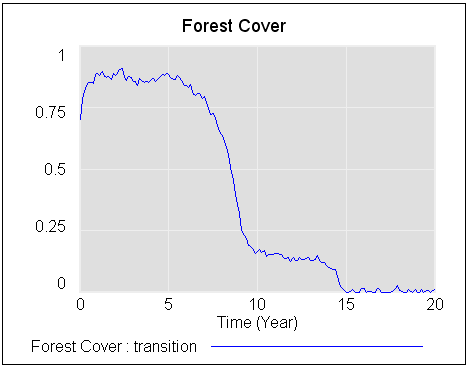

This is not an exact replication of the model (though you can plug in the parameters from C&S’ paper to replicate their results). I used slightly different estimation methods, a generalization of the logistic (for saturation exponent <> 1), and variable revenues and interest rates in the projections (also optional).

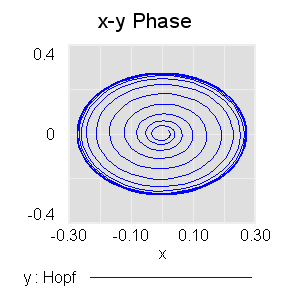

This is a good illustration of how calibration payoffs work. The payoff in this model is actually a policy payoff, because the weighted sum-squared-error is calculated explicitly in the model. That makes it possible to generate Monte Carlo samples and filter them by SSE, and also makes it easier to estimate the scale and variation in the standard error of user base reports.

The model is connected to input data in a spreadsheet. Most is drawn from the paper, but I updated users and revenues with the latest estimates I could find.

A command script replicates optimization runs that fit the model to data for various values of the user carrying capacity K.

Note that there are two views, one for users, and one for financial projections.

See my accompanying blog post for some reflections on the outcome.

This model requires Vensim DSS, Pro, or the Model Reader. facebook 3.vpm or facebook3.zip (The .zip is probably easier if you have DSS or Pro and want to work with the supplementary control files.)

Update: I’ve added another set of models for Groupon: groupon 1.vpm, groupon 2.vpm and groupon.zip groupon3.zip

See my latest blog post for details.