The device designed to cut the oil flow after BP’s oil rig exploded was faulty, the head of a congressional committee said on Wednesday … the rig’s underwater blowout preventer had a leak in its hydraulic system and the device was not powerful enough to cut through joints to seal the drill pipe. …

Markey joked about BP’s proposal to stuff the blowout preventer with golf balls, old tires “and other junk” to block the spewing oil.

“When we heard the best minds were on the case, we expected MIT, not the PGA,” said Markey, referring to the professional golfing group. “We already have one hole in the ground and now their solution is to shoot a hole in one?”

Month: May 2010

Kerry-Lieberman "American Power Act" leaked

I think it’s a second-best policy, but perhaps the most we can hope for, and better than nothing.

Climate Progress has a first analysis and links to the leaked draft legislation outline and short summary of the Kerry-Lieberman American Power Act. [Update: there’s now a nice summary table.] For me, the bottom line is, what are the emissions and price trajectories, what emissions are covered, and where does the money go?

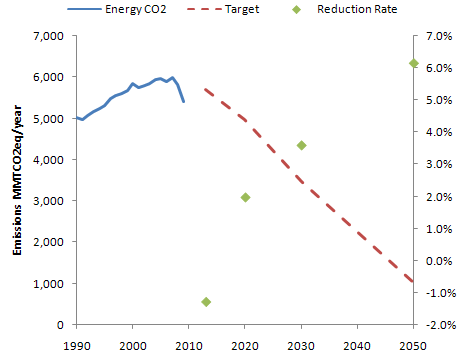

The target is 95.25% of 2005 by 2013, 83% by 2020, 58% by 2030, and 17% by 2050, with six Kyoto gases covered. Entities over 25 MTCO2eq/year are covered. Sector coverage is unclear; the summary refers to “the three major emitting sectors, power plants, heavy industry, and transportation” which is actually a rather incomplete list. Presumably the implication is that a lot of residential, commercial, and manufacturing emissions get picked up upstream, but the mechanics aren’t clear.

The target looks like this [Update: ignoring minor gases]:

This is not much different from ACES or CLEAR, and like them it’s backwards. Emissions reductions are back-loaded. The rate of reduction (green dots) from 2030 to 2050, 6.1%/year, is hardly plausible without massive retrofit or abandonment of existing capital (or negative economic growth). Given that the easiest reductions are likely to be the first, not the last, more aggressive action should be happening up front. (Actually there are a multitude of reasons for front-loading reductions as much as reasonable price stability allows).
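As a rough check on the back-loading, the implied rate of decline between successive targets can be computed directly. This is a minimal sketch, assuming a continuous (logarithmic) rate between target years, which reproduces the 6.1%/year figure; the function name is mine, and minor gases are ignored as above.

```python
import math

# Targets as a fraction of 2005 emissions, taken from the text above.
TARGETS = {2013: 0.9525, 2020: 0.83, 2030: 0.58, 2050: 0.17}


def decline_rates(targets):
    """Continuous annual decline rate between each pair of adjacent targets.

    Assumes emissions fall exponentially between target years, so that
    rate = ln(E0 / E1) / (t1 - t0).
    """
    years = sorted(targets)
    rates = {}
    for y0, y1 in zip(years, years[1:]):
        rates[(y0, y1)] = math.log(targets[y0] / targets[y1]) / (y1 - y0)
    return rates


if __name__ == "__main__":
    for (y0, y1), rate in decline_rates(TARGETS).items():
        print(f"{y0}-{y1}: {100 * rate:.1f}%/year")
```

Under these assumptions the required rate roughly triples from the first interval to the last, which is the sense in which the reductions are back-loaded.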

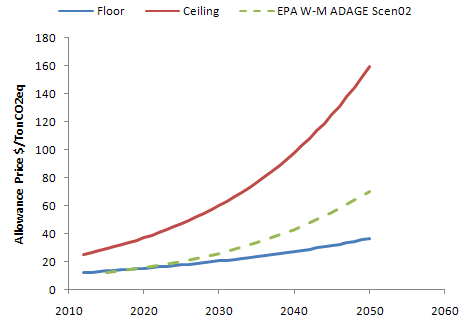

There’s also a price collar:

These mechanisms provide a predictable price corridor, with the expected prices of the EPA Waxman-Markey analysis (dashed green) running right up the middle. The silly strategic reserve is gone. Still, I think this arrangement is backwards, in a different sense from the target. The right way to manage the uncertainty in the long run emissions trajectory needed to stabilize climate without triggering short run economic dislocation is with a mechanism that yields stable prices over the short to medium term, while providing for adaptive adjustment of the long term price trajectory to achieve emissions stability. A cap and trade with no safety valve is essentially the opposite of that: short run volatility with long run rigidity, and therefore a poor choice. The price collar bounds the short term volatility to 2:1 (early) to 4:1 (late) price movements, but it doesn’t do anything to provide for adaptation of the emissions target or price collar if emissions reductions turn out to be unexpectedly hard, easy, important, etc. It’s likely that the target and collar will be regarded as property rights and hard to change later in the game.

I think we should expect the unexpected. My personal guess is that the EPA allowance price estimates are way too low. In that case, we’ll find ourselves stuck on the price ceiling, with targets unmet. 83% reductions in emissions at an emissions price corresponding with under $1/gallon for fuel just strike me as unlikely, unless we’re very lucky technologically. My preference would be an adaptive carbon price, starting at a substantially higher level (high enough to prevent investment in new carbon intensive capital, but not so high initially as to strand those assets – maybe $50/TonCO2). By default, the price should rise at some modest rate, with an explicit adjustment process taking place at longish intervals so that new information can be incorporated. Essentially the goal is to implement feedback control that stabilizes long term climate without short term volatility (as here or here and here).
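To relate an emissions price to a fuel price, a back-of-envelope conversion helps. This sketch assumes roughly 8.9 kg of CO2 per gallon of gasoline burned (a commonly cited EPA figure, not a number from the bill) and metric tons; the names are illustrative only.

```python
# Assumption: about 8.887 kg CO2 is emitted per gallon of gasoline burned.
KG_CO2_PER_GALLON = 8.887
KG_PER_TON = 1000.0  # metric ton


def price_per_gallon(price_per_ton_co2):
    """Fuel price increment ($/gallon) implied by an emissions price ($/ton CO2)."""
    return price_per_ton_co2 * KG_CO2_PER_GALLON / KG_PER_TON


def price_per_ton(price_per_gallon_fuel):
    """Emissions price ($/ton CO2) implied by a fuel price increment ($/gallon)."""
    return price_per_gallon_fuel * KG_PER_TON / KG_CO2_PER_GALLON


if __name__ == "__main__":
    print(f"$50/ton CO2 adds about ${price_per_gallon(50):.2f}/gallon")
    print(f"$1/gallon corresponds to about ${price_per_ton(1):.0f}/ton CO2")
```

On these assumptions, even a $50/ton starting price adds well under $1/gallon at the pump.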

Some other gut reactions:

Good:

- Clean energy R&D funding.

- Allowance distribution by auction.

- Border adjustments (I can only find these in the summary, not the draft outline).

Bad:

- More subsidies, guarantees and other support for nuclear power plants. Why not let the first round play out first? Is this really a good use of resources or a level playing field?

- Subsidized CCS deployment. There are good reasons for subsidizing R&D, but deployment should be primarily motivated by the economic incentive of the emissions price.

- Other deployment incentives. Let the price do the work!

- Rebates through utilities. There’s good evidence that total bills are more salient to consumers than marginal costs, so this at least partially defeats the price signal. At least it’s temporary (though transient measures have a way of becoming entitlements).

Indifferent:

- Preemption of state cap & trade schemes. Sorry, RGGI, AB32, and WCI. This probably has to happen.

- Green jobs claims. In the long run, employment is controlled by a bunch of negative feedback loops, so it’s not likely to change a lot. The current effects of the housing bust/financial crisis and eventual effects of big twin deficits are likely to overwhelm any climate policy signal. The real issue is how to create wealth without borrowing it from the future (e.g., by filling up the atmospheric bathtub with GHGs) and sustaining vulnerability to oil shocks, and on that score this is a good move.

- State preemption of offshore oil leasing within 75 miles of its shoreline. Is this anything more than an illusion of protection?

- Banking, borrowing and offsets allowed.

Unclear:

- Performance standards for coal plants.

- Transportation efficiency measures.

- Industry rebates to prevent leakage (does this defeat the price signal?).

Dynamics on the iPhone

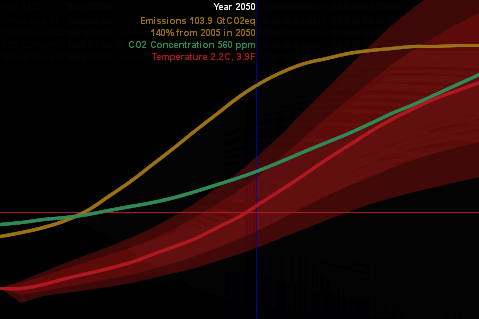

Scott Johnson asks about C-LITE, an ultra-simple version of C-ROADS, built in Processing – a cool visually-oriented language.

With this experiment, I was striving for a couple things:

- A reduced-form version of the climate model, with “good enough” accuracy and interactive speed, as in Vensim’s Synthesim mode (no client-server latency).

- Tufte-like simplicity of the UI (no grids or axis labels to waste electrons). Moving the mouse around changes the emissions trajectory, and sweeps an indicator line that gives the scale of input and outputs.

- Pervasive representation of uncertainty (indicated by shading on temperature as a start).

This is just a prototype, but it’s already more fun than models with traditional interfaces.

I wanted to run it on the iPhone, but was stymied by problems translating the model to Processing.js (javascript) and had to set it aside. Recently Travis Franck stepped in and did a manual translation, proving the concept, so I took another look at the problem. In the meantime, a neat export tool has made it easy. It turns out that my code problem was as simple as replacing “float []” with “float[]” so now I have a javascript version here. It runs well in Firefox, but there are a few glitches on Safari and iPhones – text doesn’t render properly, and I don’t quite understand the event model. Still, it’s cool that modest dynamic models can run realtime on the iPhone. [Update: forgot to mention that I used Michael Schieben’s touchmove function modification to processing.js.]

The learning curve for all of this is remarkably short. If you’re familiar with Java, it’s very easy to pick up Processing (it’s probably easy coming from other languages as well). I spent just a few days fooling around before I had the hang of building this app. The core model is just standard Euler ODE code:

initialize parameters

initialize levels

do while time < final time

compute rates & auxiliaries

compute levels

The only hassle is that equations have to be ordered manually. I built a Vensim prototype of the model halfway through, in order to stay clear on the structure as I flew seat-of-the-pants.
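For readers who want something runnable, here is a minimal sketch of the same Euler loop. It is written in Python rather than Processing, and it uses a hypothetical one-stock decay model as a stand-in, not the actual C-LITE equations.

```python
import math


def simulate(initial_level, rate_fn, final_time, dt):
    """Fixed-step Euler integration of a single stock.

    rate_fn(time, level) returns the net flow into the stock.
    Rates are computed from the current level before the level is updated,
    which is the manual equation ordering mentioned above.
    """
    time = 0.0
    level = initial_level                # initialize levels
    history = [(time, level)]
    steps = int(round(final_time / dt))
    for _ in range(steps):               # do while time < final time
        rate = rate_fn(time, level)      # compute rates & auxiliaries
        level += rate * dt               # compute levels
        time += dt
        history.append((time, level))
    return history


# Illustrative parameter: first-order decay with a 10-year time constant.
TAU = 10.0


def decay(time, level):
    return -level / TAU


if __name__ == "__main__":
    final_time, final_level = simulate(100.0, decay, 50.0, 0.125)[-1]
    print(final_time, final_level, 100.0 * math.exp(-50.0 / TAU))
```

Comparing the last two printed values shows how close the Euler result is to the analytic solution at this step size; shrinking dt narrows the gap.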

With the latest Processing.js tools, it’s very easy to port to javascript, which runs on nearly everything. Getting it running on the iPhone (almost) was just a matter of discovering viewport meta tags and a line of CSS to set zero margins. The total codebase for my most complicated version so far is only 500 lines. I think there’s a lot of potential for sharing model insights through simple, appealing browser tools and handheld platforms.

As an aside, I always wondered why javascript didn’t seem to have much to do with Java. The answer is in this funny programming timeline. It’s basically false advertising.

Complexity is not the enemy

Following its misguided attack on complex CLDs, a few of us wrote a letter to the NYTimes. Since they didn’t publish, here it is:

Dear Editors,

Systemic Spaghetti Slide Snookers Scribe. Powerpoint Pleases Policy Players

“We Have Met the Enemy and He Is PowerPoint” clearly struck a deep vein of resentment against mindless presentations. However, the lead “spaghetti” image, while undoubtedly too much to absorb quickly, is in fact packed with meaning for those who understand its visual lingo. If we can’t digest a mere slide depicting complexity, how can we successfully confront the underlying problem?

The diagram was not created in Powerpoint. It is a “causal loop diagram,” one of several ways to describe relationships that influence the evolution of messy problems like the war in the Middle East. It’s a perfect illustration of General McMaster’s observation that, “Some problems in the world are not bullet-izable.” Diagrams like this may not be intended for public consumption; instead they serve as a map that facilitates communication within a group. Creating such diagrams allows groups to capture and improve their understanding of very complex systems by sharing their mental models and making them open to challenge and modification. Such diagrams, and the formal computer models that often support them, help groups to develop a more robust understanding of the dynamics of a problem and to develop effective and elegant solutions to vexing challenges.

It’s ironic that so many call for a return to pure verbal communication as an antidote for Powerpoint. We might get a few great speeches from that approach, but words are ill-suited to describe some data and systems. More likely, a return to unaided words would bring us a forgettable barrage of five-pagers filled with laundry-list thinking and unidirectional causality.

The excess supply of bad presentations does not exist in a vacuum. If we want better presentations, then we should determine why organizational pressures demand meaningless propaganda, rather than blaming our tools.

Tom Fiddaman of Ventana Systems, Inc. & Dave Packer, Kristina Wile, and Rebecca Niles Peretz of The Systems Thinking Collaborative

Other responses of note:

We have met an ally and he is Storytelling (Chris Soderquist)

Why We Should be Suspect of Bullet Points and Laundry Lists (Linda Booth Sweeney)

Java Vensim helper

MIT’s Climate Collaboratorium has posted java code that it used to wrap C-LEARN as a web service using the multicontext .dll. If you’re doing something similar, you may find the code useful, particularly the VensimHelper class. The liberal MIT license applies. However, be aware that you’ll need a license for the Vensim multicontext .dll to go with it.

Copenhagen – the breaking point

Der Spiegel has obtained audio of the heads of state negotiating in the final hours of COP15. It’s fascinating stuff. The headline reads, How China and India Sabotaged the UN Climate Summit. This point was actually raised back in December by Mark Lynas at the Guardian (there’s a nice discussion and event timeline at Inside-Out China). On the surface the video supports the view that China and India were the material obstacle to agreement on a -50% by 2050 target. However, I think it’s still hard to make attributions about motive. We don’t know, for example, whether China is opposed because it anticipates raising emissions to levels that would make 50% cuts physically impossible, or because it sees the discussion of cuts as fundamentally linked to the unaddressed question of responsibility, as hinted by He Yafei near the end of the video. Was the absence of Wen Jiabao obstruction or a defensive tactic? We have even less information about India, merely that it objected to “prejudging options,” whatever that means.

What the headline omits is the observation in the final pages of the article, that the de facto US position may not have been so different from China’s:

Part 3: Obama Stabs the Europeans in the Back

…

But then Obama stabbed the Europeans in the back, saying that it would be best to shelve the concrete reduction targets for the time being. “We will try to give some opportunities for its resolution outside of this multilateral setting … And I am saying that, confident that, I think China still is as desirous of an agreement, as we are.”

‘Other Business to Attend To’

At the end of his little speech, which lasted 3 minutes and 42 seconds, Obama even downplayed the importance of the climate conference, saying “Nicolas, we are not staying until tomorrow. I’m just letting you know. Because all of us obviously have extraordinarily important other business to attend to.”

Some in the room felt queasy. Exactly which side was Obama on? He couldn’t score any domestic political points with the climate issue. The general consensus was that he was unwilling to make any legally binding commitments, because they would be used against him in the US Congress. Was he merely interested in leaving Copenhagen looking like an assertive statesman?

It was now clear that Obama and the Chinese were in fact in the same boat, and that the Europeans were about to drown.

This article and video almost make up for Spiegel’s terrible coverage of the climate email debacle.

Related analysis of developed-developing emissions trajectories:

Cumulative Normal Distribution

Vensim doesn’t have a function for the cumulative normal distribution, but it’s easy to implement via a macro. I used to use a polynomial cited in Numerical Recipes (error function, Ch. 6.2):

:MACRO: NCDF(x)

NCDF = 1-Complementary Normal CDF

~ dmnl

~ |

Complementary Normal CDF= ERFCy/2 ~ dmnl ~ |

ERFCy = IF THEN ELSE(y>=0,ans,2-ans) ~ dmnl ~ http://www.library.cornell.edu/nr/bookcpdf/c6-2.pdf |

y = x/sqrt(2) ~ dmnl ~ |

ans=t*exp(-z*z-1.26551+t*(1.00002+t*(0.374092+t*(0.0967842+ t*(-0.186288+t*(0.278868+t*(-1.1352+t*(1.48852+ t*(-0.822152+t*0.170873))))))))) ~ dmnl ~ |

t=1/(1+0.5*z) ~ dmnl ~ |

z = ABS(y) ~ dmnl ~ |

:END OF MACRO:

I recently discovered a better approximation here, from algorithm 26.2.17 in Abramowitz and Stegun, Handbook of Mathematical Functions:

:MACRO: NCDF2(x)

NCDF2 = IF THEN ELSE(x >= 0,

(1 - c * exp( -x * x / 2 ) * t *

( t *( t * ( t * ( t * b5 + b4 ) + b3 ) + b2 ) + b1 )),

( c * exp( -x * x / 2 ) * t *

( t *( t * ( t * ( t * b5 + b4 ) + b3 ) + b2 ) + b1 ))

)

~ dmnl

~ From http://www.sitmo.com/doc/Calculating_the_Cumulative_Normal_Distribution

Implements algorithm 26.2.17 from Abramowitz and Stegun, Handbook of Mathematical

Functions. It has a maximum absolute error of 7.5e-8.

http://www.math.sfu.ca/

|

c = 0.398942

~ dmnl

~ |

t = IF THEN ELSE( x >= 0, 1/(1+p*x), 1/(1-p*x))

~ dmnl

~ |

b5 = 1.33027

~ dmnl

~ |

b4 = -1.82126

~ dmnl

~ |

b3 = 1.78148

~ dmnl

~ |

b2 = -0.356564

~ dmnl

~ |

b1 = 0.319382

~ dmnl

~ |

p = 0.231642

~ dmnl

~ |

:END OF MACRO:

In advanced Vensim versions, paste the macro into the header of your model (View>As Text). Otherwise, you can implement the equations inside the macro directly in your model.
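To sanity-check either macro outside Vensim, the same equations can be transcribed and compared against a reference. The sketch below is a Python transcription (not Vensim code) that uses the rounded constants exactly as printed in the macros above and takes `math.erfc` as the reference; the function names are mine. Because the constants are rounded to six digits, the accuracy obtained this way may be somewhat worse than the published bound for the full-precision coefficients.

```python
import math


def ncdf_reference(x):
    """Reference cumulative normal, via the standard library's erfc."""
    return 0.5 * math.erfc(-x / math.sqrt(2.0))


def ncdf(x):
    """Transcription of the NCDF macro (Numerical Recipes erfc polynomial)."""
    y = x / math.sqrt(2.0)
    z = abs(y)
    t = 1.0 / (1.0 + 0.5 * z)
    ans = t * math.exp(
        -z * z - 1.26551 + t * (1.00002 + t * (0.374092 + t * (0.0967842
        + t * (-0.186288 + t * (0.278868 + t * (-1.1352 + t * (1.48852
        + t * (-0.822152 + t * 0.170873)))))))))
    erfc_y = ans if y >= 0 else 2.0 - ans
    return 1.0 - erfc_y / 2.0


def ncdf2(x):
    """Transcription of the NCDF2 macro (Abramowitz and Stegun 26.2.17)."""
    c, p = 0.398942, 0.231642
    b1, b2, b3, b4, b5 = 0.319382, -0.356564, 1.78148, -1.82126, 1.33027
    t = 1.0 / (1.0 + p * abs(x))
    tail = c * math.exp(-x * x / 2.0) * t * (
        t * (t * (t * (t * b5 + b4) + b3) + b2) + b1)
    return 1.0 - tail if x >= 0 else tail


def max_abs_error(approx, lo=-6.0, hi=6.0, n=2401):
    """Largest absolute deviation from the reference on an evenly spaced grid."""
    xs = [lo + (hi - lo) * i / (n - 1) for i in range(n)]
    return max(abs(approx(x) - ncdf_reference(x)) for x in xs)


if __name__ == "__main__":
    print("NCDF  max abs error:", max_abs_error(ncdf))
    print("NCDF2 max abs error:", max_abs_error(ncdf2))
```

If the printed errors matter for your application, substituting the full-precision coefficients from the cited sources is the obvious next step.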

Stop talking, start studying?

Roger Pielke Jr. poses a carbon price paradox:

The carbon price paradox is that any politically conceivable price on carbon can do little more than have a marginal effect on the modern energy economy. A price that would be high enough to induce transformational change is just not in the cards. Thus, carbon pricing alone cannot lead to a transformation of the energy economy.

Advocates for a response to climate change based on increasing the costs of carbon-based energy skate around the fact that people react very negatively to higher prices by promising that action won’t really cost that much. … If action on climate change is indeed “not costly” then it would logically follow the only reasons for anyone to question a strategy based on increasing the costs of energy are complete ignorance and/or a crass willingness to destroy the planet for private gain. … There is another view. Specifically that the current ranges of actions at the forefront of the climate debate focused on putting a price on carbon in order to motivate action are misguided and cannot succeed. This argument goes as follows: In order for action to occur costs must be significant enough to change incentives and thus behavior. Without the sugarcoating, pricing carbon (whether via cap-and-trade or a direct tax) is designed to be costly. In this basic principle lies the seed of failure. Policy makers will do (and have done) everything they can to avoid imposing higher costs of energy on their constituents via dodgy offsets, overly generous allowances, safety valves, hot air, and whatever other gimmick they can come up with.

His prescription (and that of the Breakthrough Institute) is low carbon taxes, reinvested in R&D:

We believe that soon-to-be-president Obama’s proposal to spend $150 billion over the next 10 years on developing carbon-free energy technologies and infrastructure is the right first step. … a $5 charge on each ton of carbon dioxide produced in the use of fossil fuel energy would raise $30 billion a year. This is more than enough to finance the Obama plan twice over.

… We would like to create the conditions for a virtuous cycle, whereby a small, politically acceptable charge for the use of carbon emitting energy, is used to invest immediately in the development and subsequent deployment of technologies that will accelerate the decarbonization of the U.S. economy.

…

Stop talking, start solving

As the nation begins to rely less and less on fossil fuels, the political atmosphere will be more favorable to gradually raising the charge on carbon, as it will have less of an impact on businesses and consumers, this in turn will ensure that there is a steady, perhaps even growing source of funds to support a process of continuous technological innovation.

This approach reminds me of an old joke:

Lenin, Stalin, Khrushchev and Brezhnev are travelling together on a train. Unexpectedly the train stops. Lenin suggests: “Perhaps, we should call a subbotnik, so that workers and peasants fix the problem.” Khrushchev suggests rehabilitating the engineers, and leaves for a while, but nothing happens. Stalin, fed up, steps out to intervene. Rifle shots are heard, but when he returns there is still no motion. Brezhnev reaches over, pulls the curtain, and says, “Comrades, let’s pretend we’re moving.”

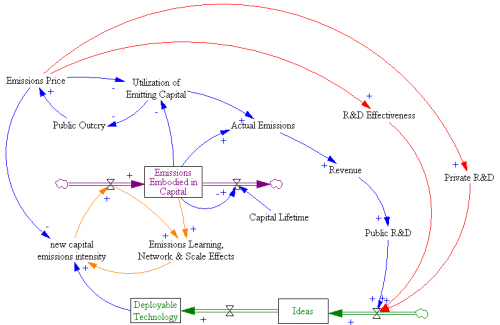

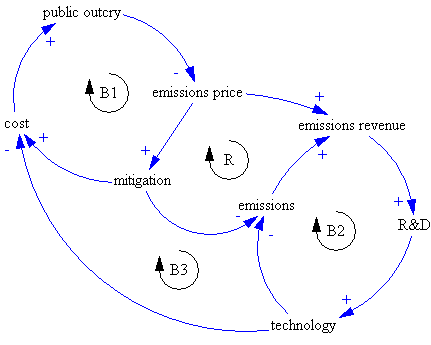

I translate the structure of Pielke’s argument like this:

Implementation of a high emissions price now would be undone politically (B1). A low emissions price triggers a virtuous cycle (R), as revenue reinvested in technology lowers the cost of future mitigation, minimizing public outcry and enabling the emissions price to go up. Note that this structure implies two other balancing loops (B2 & B3) that serve to weaken the R&D effect, because revenues fall as emissions fall.

If you elaborate on the diagram a bit, you can see why the technology-led strategy is unlikely to work:

First, there’s a huge delay between R&D investment and emergence of deployable technology (green stock-flow chain). R&D funded now by an emissions price could take decades to emerge. Second, there’s another huge delay from the slow turnover of the existing capital stock (purple) – even if we had cars that ran on water tomorrow, it would take 15 years or more to turn over the fleet. Buildings and infrastructure last much longer. Together, those delays greatly weaken the near-term effect of R&D on emissions, and therefore also prevent the virtuous cycle of reduced public outcry due to greater opportunities from getting going. As long as emissions prices remain low, the accumulation of commitments to high-emissions capital grows, increasing public resistance to a later change in direction. Continue reading “Stop talking, start studying?”
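The effect of stacking these two delays can be illustrated with a toy calculation. This is only a sketch, not the model in the diagrams: it assumes first-order (exponential) delays, a hypothetical 20-year R&D delay, a 15-year capital lifetime, and that new capital is clean in proportion to technology readiness. All names and parameter values are illustrative.

```python
def clean_share_path(rd_delay, capital_lifetime, final_time=50.0, dt=0.25):
    """Euler simulation of the clean share of the capital stock over time.

    rd_delay: years for R&D to yield deployable technology (0 means the
              technology is available immediately).
    capital_lifetime: average life of existing capital, in years.
    Returns a dict mapping time (years) to clean share (0..1).
    """
    readiness = 1.0 if rd_delay == 0 else 0.0
    clean_share = 0.0
    path = {}
    steps = int(round(final_time / dt))
    for step in range(steps + 1):
        path[step * dt] = clean_share
        # Rates: readiness closes its gap over rd_delay; retiring capital
        # is replaced by a mix matching the current readiness.
        d_readiness = 0.0 if rd_delay == 0 else (1.0 - readiness) / rd_delay
        d_clean = (readiness - clean_share) / capital_lifetime
        readiness += d_readiness * dt
        clean_share += d_clean * dt
    return path


if __name__ == "__main__":
    turnover_only = clean_share_path(rd_delay=0, capital_lifetime=15.0)
    with_rd_delay = clean_share_path(rd_delay=20.0, capital_lifetime=15.0)
    for year in (15.0, 30.0):
        print(year, round(turnover_only[year], 2), round(with_rd_delay[year], 2))
```

Even with clean technology available on day one, a large part of the old stock is still in service after one lifetime in this sketch; adding the R&D delay pushes the transition out considerably further.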

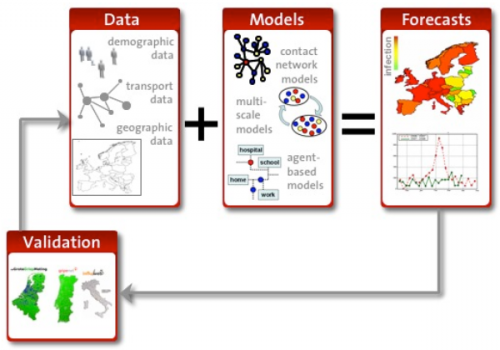

The model that ate Europe

arXiv covers modeling on an epic scale in Europe’s Plan to Simulate the Entire Earth: a billion dollar plan to build a huge infrastructure for global multiagent models. The core is a massive exaflop “Living Earth Simulator” – essentially the socioeconomic version of the Earth Simulator.

I admire the audacity of this proposal, and there are many good ideas captured in one place:

- The goal is to take on emergent phenomena like financial crises (getting away from the paradigm of incremental optimization of stable systems).

- It embraces uncertainty and robustness through scenario analysis and Monte Carlo simulation.

- It mixes modeling with data mining and visualization.

- The general emphasis is on networks and multiagent simulations.

I have no doubt that there might be many interesting spinoffs from such a project. However, I suspect that the core goal of creating a realistic global model will be an epic failure, for three reasons. Continue reading “The model that ate Europe”

John Sterman on solving our biggest problems

The key message is that climate, health, and other big messy problems don’t have purely technical fixes. Therefore Manhattan Project approaches to solving them won’t work. Creating and deploying solutions to these problems requires public involvement and widespread change with distributed leadership. The challenge is to get public understanding of climate to carry the same sense of urgency that drove the civil rights movement. From a series at the IBM Almaden Institute conference.