My confidence bounds on the future behavior of the epidemic are still pretty wide. While there’s good reason to be optimistic about a lot of locations, there are also big uncertainties looming. No matter how things shake out, I’m confident in this:

The antiscience crowd will be out in force. They’ll cherry-pick the early model projections of an uncontrolled epidemic, use them to claim that modelers predicted a catastrophe that didn’t happen, and then conclude that there was never a problem. This is the Cassandra’s curse of all successful modeling interventions. (See Nobody Ever Gets Credit for Fixing Problems that Never Happened for a similar situation.)

But it won’t stop there. A lot of people don’t really care what the modelers actually said. They’ll just make stuff up. Just today I saw a comment at the Bozeman Chronicle to the effect of, “if this was as bad as they said, we’d all be dead.” Of course that was never in the cards, or the models, but that doesn’t matter in Dunning Krugerland.

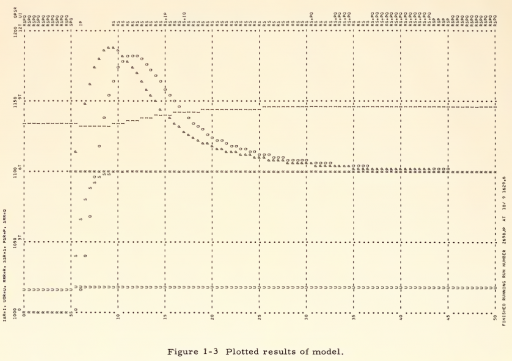

Modelers, be prepared for a lot more of this. I think we need to be thinking more about defensive measures, like forecast archiving and presentation of results only with confidence bounds attached. However, it’s hard to do that and to produce model results at a pace that keeps up with the evolution of the epidemic. That’s something we need more infrastructure for.
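To make the archiving idea a little more concrete, here is a minimal sketch of what a forecast archive might look like. Everything in it is an assumption for illustration: the function names `summarize` and `archive_forecast`, the JSON Lines file format, the 5-95% interval, and the idea of storing a SHA-256 digest so a record can later be checked for tampering. It uses only the Python standard library and is not tied to any particular model.

```python
import hashlib
import json
from datetime import datetime, timezone
from statistics import quantiles


def summarize(samples, lower_pct=5, upper_pct=95):
    """Reduce ensemble samples to a median plus a percentile interval.

    Hypothetical helper: the 5-95% default is an illustrative choice.
    """
    # n=100 yields 99 cut points; cuts[k - 1] is the k-th percentile.
    cuts = quantiles(samples, n=100, method="inclusive")
    return {
        "median": cuts[49],
        "lower": cuts[lower_pct - 1],
        "upper": cuts[upper_pct - 1],
        "interval": f"{lower_pct}-{upper_pct}%",
    }


def archive_forecast(path, model_name, target, samples, issued_at=None):
    """Append one timestamped forecast, bounds included, to an archive file.

    Hypothetical helper: the record layout is an assumption, not a standard.
    """
    issued_at = issued_at or datetime.now(timezone.utc).isoformat()
    record = {
        "model": model_name,
        "target": target,
        "issued_at": issued_at,
        **summarize(samples),
    }
    # Hash the record before adding the digest, so the digest can be
    # recomputed later from the other fields.
    payload = json.dumps(record, sort_keys=True)
    record["sha256"] = hashlib.sha256(payload.encode("utf-8")).hexdigest()
    # Append-only: earlier forecasts are never overwritten.
    with open(path, "a", encoding="utf-8") as f:
        f.write(json.dumps(record, sort_keys=True) + "\n")
    return record
```

Calling something like `archive_forecast("forecasts.jsonl", "my_model", "cumulative cases", samples)` each time results are published would leave a dated record of what was actually projected, with the bounds that went with it. The point estimate never gets stored without its interval, which is the habit that matters here.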