I was flipping through the SD Discussion List archive and ran across this gem from George Richardson, responding to Bernadette O’Regan’s query about critiques of SD:

The significant or well-known criticisms of system dynamics include:

William Nordhaus, Measurement without Data (The Economic Journal, 83, 332; Dec. 1973).

[Nordhaus objects to the fact that Forrester seriously proposes a world model fit to essentially only two data points. He simplifies the model to help him analyze it, carries through some investigations that cause him to doubt the model, and makes the mistake of critiquing a univariate relation (the effect of material standard of living on births) using multivariate real-world data: the real-world data has all the other influences in the system at work, while Nordhaus wants to pull out just the effect of standard of living. Sadly, a very influential critique in the economics literature.]

See Forrester’s response in Jay W. Forrester, Gilbert W. Low, and Nathaniel J. Mass, The Debate on World Dynamics: a Response to Nordhaus (Policy Sciences 5, 1974).

Joseph Weizenbaum, Computer Power and Human Reason (W.H. Freeman, 1976).

[Weizenbaum, a professor of computer science at MIT, was the author of the natural-language processing program ELIZA. He became very distressed at what people were proposing we could do with computers (e.g., use ELIZA seriously to counsel emotionally disturbed people), and wrote this impassioned book about what, in his view, computers can do well and what they can’t. It contains sections on system dynamics in various places and finds Forrester’s claims for the approach to be too broad and, like Herbert Simon’s, “very simple.”]

Robert Boyd, World Dynamics: A Note (Science, 177, August 11, 1972).

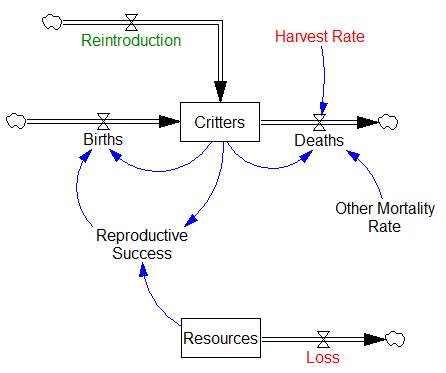

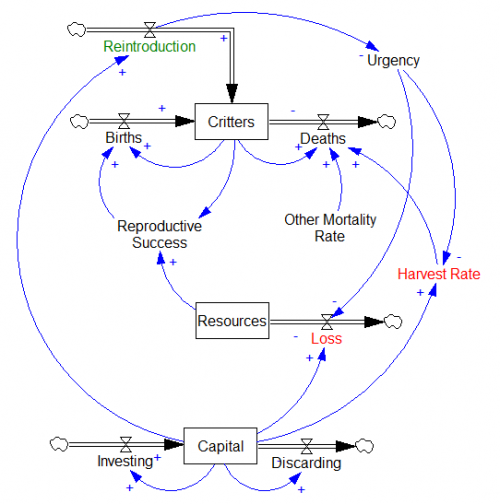

[Boyd’s very original and interesting critique of World Dynamics tries to use Forrester’s model itself to argue that World Dynamics did not solve the essential question about limits to growth: whether technology can avert the limits explicitly assumed in the World Dynamics and Limits to Growth models. Boyd adds a Technology level to World Dynamics, incorporates four effects on things like pollution generated per capita, and finds that one can incorporate assumptions in the model that make the problem go away. Unfortunately for his argument, Boyd’s additions are extremely sensitive to particular parameter values, and he unrealistically assumes that things like the second law of thermodynamics don’t apply. We used to give this as an exercise: step 1, build Boyd’s additions into Forrester’s model and investigate; step 2, incorporate Boyd’s assumptions in Forrester’s original model just by changing parameters; step 3, reflect on what you’ve learned. Still a great exercise.]

Robert M. Solow, Notes on Doomsday Models (Proceedings of the National Academy of Sciences 69, 12, pp. 3832-3833, Dec. 1972).

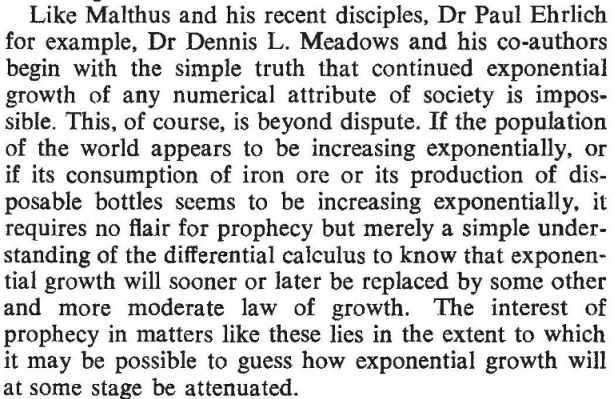

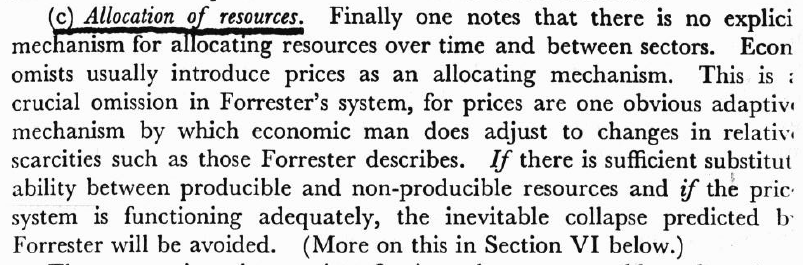

[Solow, an Institute Professor at MIT, critiqued the World Dynamics and Limits to Growth models on their structure (saying their conclusions were built in), the absence of a price system, and a poor-to-nonexistent empirical foundation. The differences between an econometric approach and a system dynamics approach are quite vivid in this critique.]

H. Igor Ansoff and Dennis Slevin, An Appreciation of Industrial Dynamics (Management Science, 14, 7, March 1968).

[Unfortunately, I no longer have a copy of this critique, so I can’t summarize it, but it’s worth finding in a library. See also Forrester’s “A Response to Ansoff and Slevin,” which also appeared in Management Science (vol. 14, 9, May 1968) and is reprinted in Forrester’s Collected Papers, available from Productivity Press.]

These are all rather ancient, “classical” critiques. I am not really familiar with current critiques, either because they exist but have not come to my attention or because they are few and far between. If the latter, that could be because we are doing less controversial work these days or because the critics think we’re not really a threat anymore.

I hope we’re still a threat.

…GPR

George P. Richardson

Rockefeller College of Public Affairs and Policy, SUNY, Albany

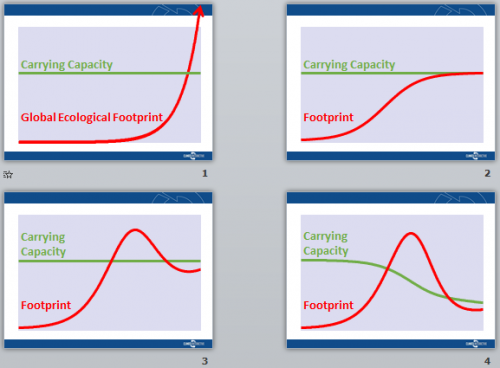

I’ll add a few more when I get a chance. These critiques really concern World Dynamics and the Limits to Growth rather than SD per se, but many have used them to throw out the SD baby with the World Dynamics bathwater. Some of these critiques have not aged well, but some still hold. For example, Solow’s critique of World Dynamics starts with the absence of a price system, and Boyd’s critique centers on the absence of technology. There are lots of SD models with prices and technology in them, but there isn’t really a successor to World Dynamics or World3 that does a good job of addressing these critiques. At the same time, I think it’s now obvious that neither prices nor technology has brought stability to the environment and resources.