Democrats have pulled the plug on a sweeping energy bill this year. There is no heir apparent. This is not cause for panic. In climate, as in education, there are no emergencies.

However, the underlying reasons may be cause for panic. It seems that voters are unwilling to accept any policy that will significantly raise the price of emissions. Given that price is the predominant carrier of information in our economy, other policies are unlikely to work efficiently without a price signal. That leaves us in a bit of a pickle. What to do?

If you don’t want to buck public opinion, advise the people to invest in (then pray for) a technological miracle. Ask yourself, “Do I feel lucky?” It might even work.

Alternatively, you might conclude that the public hasn’t quite grasped the nature of the problem – that wait and see is not a good policy in systems with long delays. But then you’d be accused of scientism, for doing the equivalent of challenging the efficient market hypothesis or the notion that the customer is always right. That’s rather puzzling, given that there’s direct evidence that people don’t intuitively appreciate the dynamics of accumulation, and that snowstorms in the East cause half of Americans to question the reality of climate change.

The anti-scientism, pro-technology crowd takes opposition to meaningful mitigation policy as a sure sign that the public is on to something. The wisdom of crowds is powerful when there’s diverse information and rapid feedback, as in price discovery through a market. But it has a pretty disastrous history in the run-up to bubbles and other catastrophes, as we’ve recently seen. Surely there are some legitimate worries about current climate proposals (I’ve expressed a number here), but it doesn’t follow that pricing emissions is a bad decision.

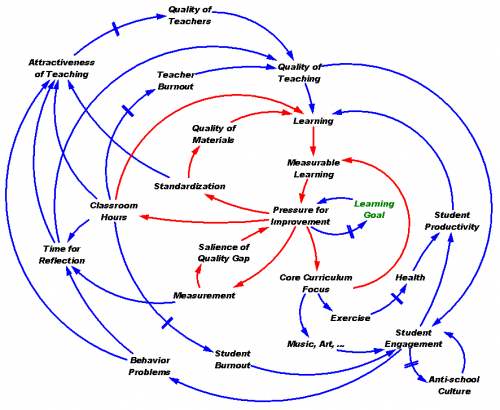

So, what’s a modeler to do? Opening up political debates is a good idea, though not quite in the way that I think proponents intend. We already have plenty of political debates. The problem is that they tend to lack ready access to scientific or other information that can be agreed upon or at least presented in a way that permits testing of hypotheses against data or evaluation of decisions against contingencies. That means that questions of values and distribution of benefits (which politics is rightfully about) get mixed up with muddled thinking about science, economics, and social system dynamics.

The solution typically proposed is to open up science and models to more public scrutiny. That’s a good idea for a variety of reasons, but by itself it’s a losing proposition for scientists: they get all the criticism, and the public process doesn’t assimilate many of their insights. What’s needed is a fair exchange, where everyone shows their hands. Scientists make their stuff accessible, and in return participants in policy debates actually use it, and also submit to formalization of their arguments to facilitate shared understanding and testing.

Coming back to cap & trade, I don’t see that the major political players are willing to do that. Following a successful round of multi-stakeholder workshops in California, funded by the petroleum industry, that brought a systems perspective to conversations about climate policy, we spent a fair amount of time marketing the idea of a model-assisted deliberation process targeted at shared design of federal climate policy. Lobbyists at some of the big stakeholders told us very forthrightly that they were unwilling to engage in any process with an outcome that they couldn’t predict and control.

In an environment where everyone’s happy with their own entrenched position, there isn’t much hope for a good solution to emerge. The only solution I see is to make an end run around the big players and go straight to the public with better information, in order to expand the set of things they’ll accept. I hope there’s time for that to work.