Roger Pielke Jr., Tom Wigley, and Christopher Green have a nice commentary in this week’s Nature. It argues that current scenarios are dangerously reliant on business-as-usual technical improvement to reduce greenhouse gas intensity:

Here we show that two-thirds or more of all the energy efficiency improvements and decarbonization of energy supply required to stabilize greenhouse gases is already built into the IPCC reference scenarios. This is because the scenarios assume a certain amount of spontaneous technological change and related decarbonization. Thus, the IPCC implicitly assumes that the bulk of the challenge of reducing future emissions will occur in the absence of climate policies. We believe that these assumptions are optimistic at best and unachievable at worst, potentially seriously underestimating the scale of the technological challenge associated with stabilizing greenhouse-gas concentrations.

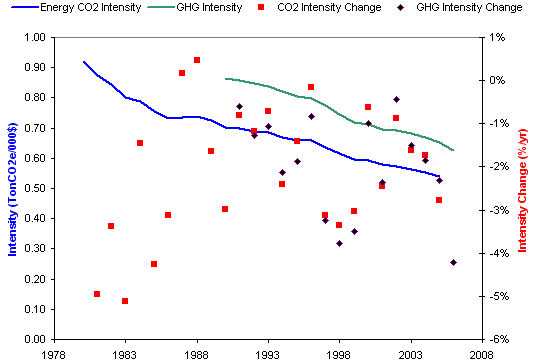

They note that assumed rates of decarbonization exceed reality:

The IPCC scenarios include a wide range of possibilities for the future evolution of energy and carbon intensities. Many of the scenarios are arguably unrealistic and some are likely to be unachievable. For instance, the IPCC assumptions for decarbonization in the short term (2000–2010) are already inconsistent with the recent evolution of the global economy (Fig. 2). All scenarios predict decreases in energy intensity, and in most cases carbon intensity, during 2000 to 2010. But in recent years, both global energy intensity and carbon intensity have risen, reversing the trend of previous decades.

In an accompanying news article, several commenters object to the notion of a trend reversal:

Energy efficiency has in the past improved without climate policy, and the same is very likely to happen in the future. Including unprompted technological change in the baseline is thus logical. It is not very helpful to discredit emission scenarios on the sole basis of their being at odds with the most recent economic trends in China. Chinese statistics are not always reliable. Moreover, the period in question is too short to signify a global trend-break. (Detlef van Vuuren)

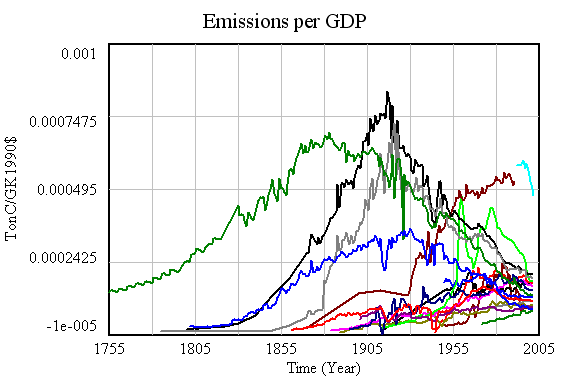

Having seen several trend breaks evaporate, including the dot-com productivity miracle and the Chinese emissions reductions coincident with the Asian crisis, I’m inclined to agree that gloom may be premature. On the other hand, Pielke, Wigley, and Green are conservative in that they don’t consider the possible pressure for recarbonization created by a transition from conventional oil and gas to coal and tar sands. A look at the long term is helpful:

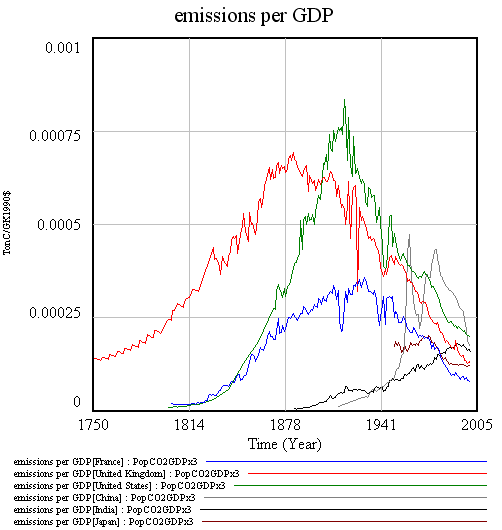

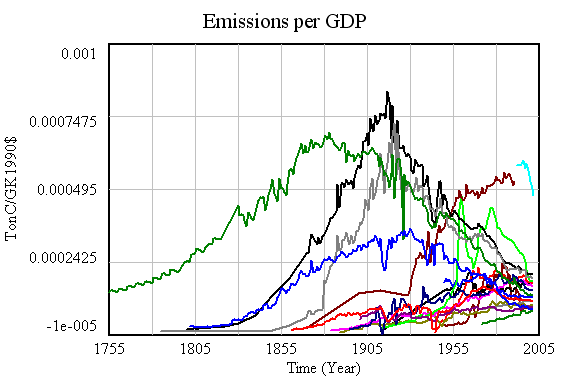

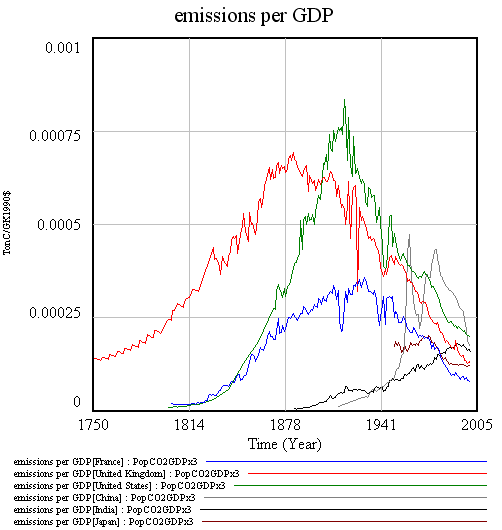

Emissions intensity of GDP for 18 major emitters. Notice the convergence in intensity, with high-intensity nations falling, and low-intensity nations (generally less-developed) rising.

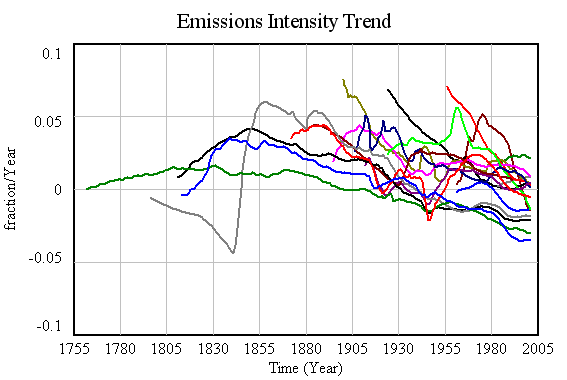

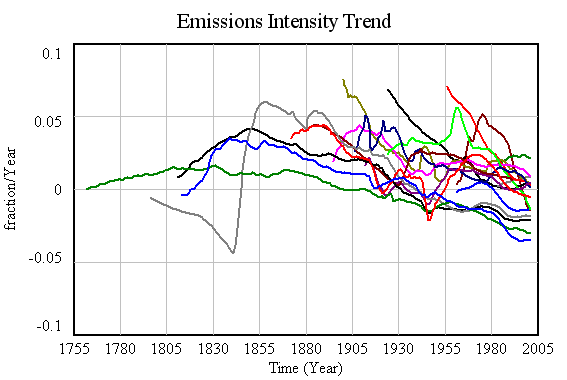

Corresponding decadal trends in emissions intensity. Over the long haul, there’s some indication that emissions intensity is falling faster in developed nations – a reason for hope. But there’s also a lot of diversity, and many nations have positive trends in intensity. More importantly, even with major wars and depressions, no major emitter has achieved the kind of intensity trend (about -7%/yr) needed to achieve 80% emissions reductions by 2050 while sustaining 3%/yr GDP growth. That suggests that achieving aggressive goals may require more than technology, including – gasp – lifestyle changes.
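The -7%/yr figure can be checked with a little arithmetic. Here is a minimal sketch, assuming a 2008 starting year (my assumption; the text above does not name a base year) and continuously compounded rates. The function name and parameters are illustrative only.

```python
import math


def required_intensity_trend(emissions_cut, gdp_growth, years):
    """Continuous annual rate of change in emissions intensity (emissions/GDP)
    needed to cut emissions by `emissions_cut` over `years` while GDP grows
    at `gdp_growth` per year."""
    # Emissions must fall to (1 - cut) of today's level, so the average
    # continuous rate of change in emissions is ln(1 - cut) / years.
    emissions_rate = math.log(1.0 - emissions_cut) / years
    # Intensity = emissions / GDP, so its growth rate is the difference
    # between the emissions rate and the GDP growth rate.
    return emissions_rate - gdp_growth


# Assumed base year: 2008 (hypothetical; not stated in the text).
rate = required_intensity_trend(emissions_cut=0.80, gdp_growth=0.03,
                                years=2050 - 2008)
print(f"{rate:.1%} per year")  # -6.8% per year
```

Starting a few years earlier or later, or compounding annually rather than continuously, moves the answer by a few tenths of a percent, so "about -7%/yr" holds up under these assumptions.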

A closer look at intensity for 6 major emitters. Notice intensity rising in China and India until recently, and that Chinese data is indeed suspect.

Pielke, Wigley, and Green wrap up:

There is no question about whether technological innovation is necessary – it is. The question is, to what degree should policy focus directly on motivating such innovation? The IPCC plays a risky game in assuming that spontaneous advances in technological innovation will carry most of the burden of achieving future emissions reductions, rather than focusing on creating the conditions for such innovations to occur.

There’s a second risky game afoot, which is assuming that “creating the conditions for such innovations to occur” means investing in R&D, exclusive of other measures. To achieve material reductions in emissions, “occur” must mean “be adopted” not just “be invented.” Absent market signals and institutional changes, it is unlikely that technologies like carbon sequestration will ever be adopted. Others, like vehicle and lighting efficiency, could easily see their gains eroded by increased consumption of energy services, which become cheaper as technology improves productivity.
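To make the erosion of efficiency gains concrete, here is a rough sketch of the rebound effect under a deliberately simple assumption: demand for an energy service (miles driven, lumens of light) has a constant price elasticity, and the energy price itself does not change. The function name, the 20% efficiency gain, and the elasticity of 0.5 are all hypothetical values chosen for illustration, not estimates.

```python
def energy_use_ratio(efficiency_gain, elasticity):
    """Ratio of energy use after an efficiency improvement to energy use before.

    efficiency_gain: new efficiency / old efficiency (1.2 means 20% better).
    elasticity: magnitude of the price elasticity of demand for the service.

    Assumes a constant energy price, so the cost per unit of service falls
    by a factor of 1 / efficiency_gain, and constant-elasticity demand.
    """
    # Service demand rises as the service gets cheaper.
    service_ratio = efficiency_gain ** elasticity
    # Energy use = service demand / efficiency.
    return service_ratio / efficiency_gain


gain = 1.2         # hypothetical 20% efficiency improvement
elasticity = 0.5   # hypothetical demand elasticity

naive_savings = 1.0 - energy_use_ratio(gain, 0.0)      # no behavioral response
actual_savings = 1.0 - energy_use_ratio(gain, elasticity)
rebound = 1.0 - actual_savings / naive_savings

print(f"engineering estimate of savings: {naive_savings:.1%}")
print(f"savings after rebound:           {actual_savings:.1%}")
print(f"share of savings eroded:         {rebound:.1%}")
```

With an elasticity of zero the full engineering savings are realized; with an elasticity of one they vanish entirely. A price signal that keeps the cost of the service from falling is one way to limit the erosion.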