Econbrowser has an interesting article on the Billion Prices Project, which tracks daily price movements of items sold across the web. Unlike hedonic CPI measures, the resulting price index makes no quality-adjustment assumptions, but it introduces other issues because there is no control over the changing portfolio of measured items.

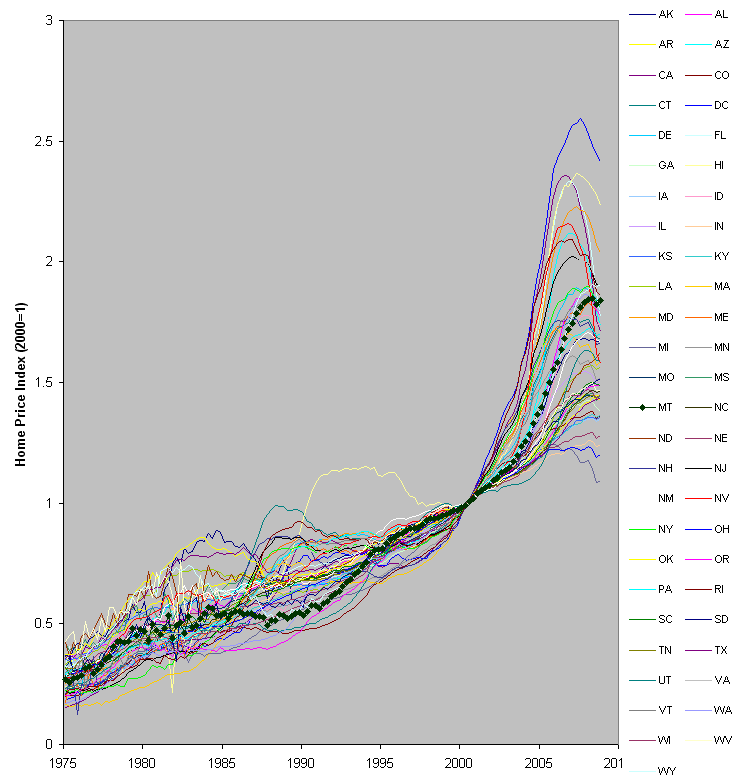

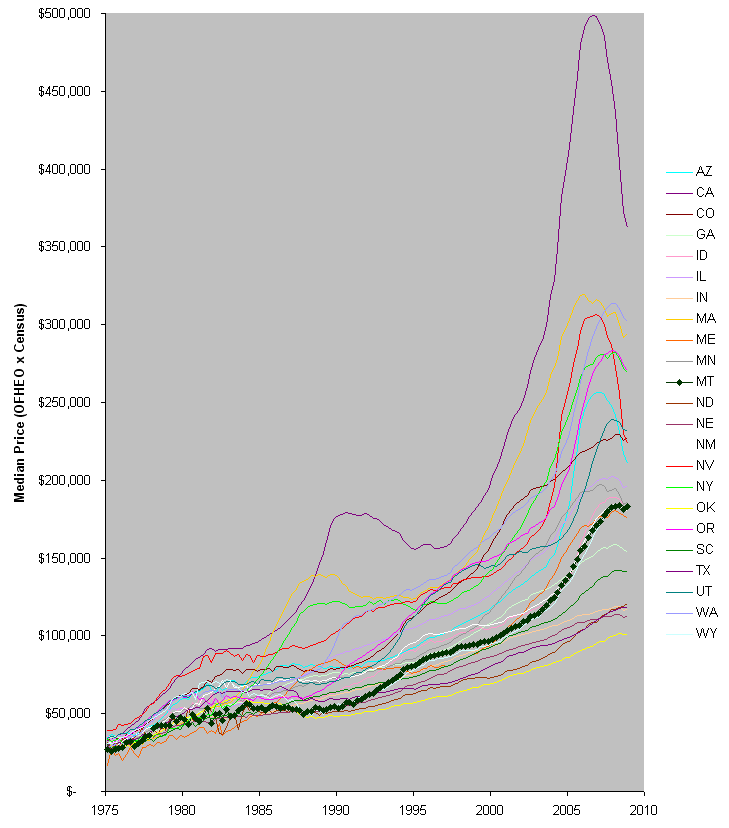

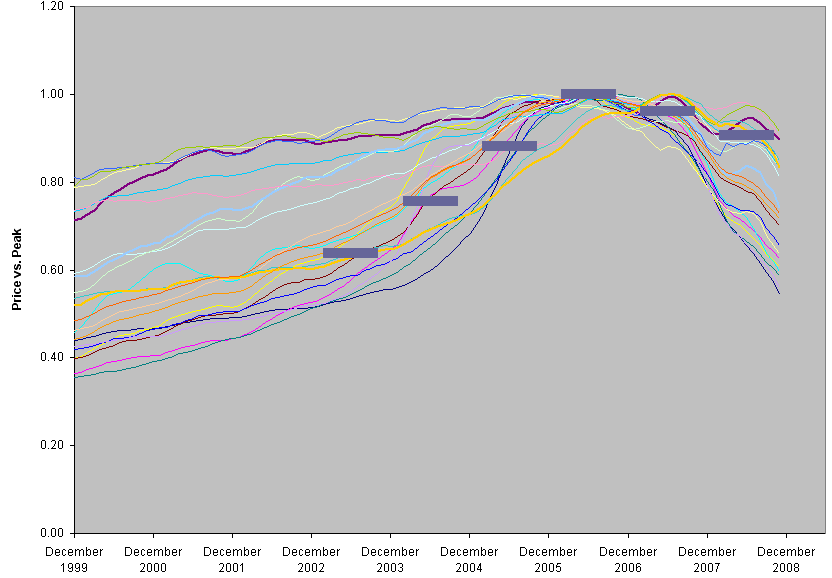

A couple of years ago we built the analytics behind the RPX index of residential real estate prices, and grappled with many of the same problems. The competition was the CSI (the Case-Shiller index), which uses the repeat-sales method. With that approach, every house serves as its own control, so changes in neighborhoods or other quality aspects wash out. However, the clever statistical control introduces some problems of its own. First, it shrinks the sample of usable data points, since only homes that have sold at least twice contribute, necessitating a reporting lag three times longer. Second, the processing steps reduce transparency. Third, one step in particular downweights homes with (possibly implausibly) large price movements, which may have the side effect of reducing sensitivity to real extreme events. Fourth, users may actually want to see the effects of a changing sales portfolio, which the method is designed to remove.
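To make the repeat-sales idea concrete, here is a minimal sketch of the basic (unweighted) repeat-sales regression, assuming each input record is a pair of sales of the same house given as hypothetical `(first_period, first_price, second_period, second_price)` tuples. The log price ratio of each pair is regressed on period dummies (+1 for the second sale's period, -1 for the first's), with period 0 fixed as the base. This is not the Case-Shiller production method: it omits the interval-dependent reweighting and the downweighting of outlying pairs mentioned above.

```python
import numpy as np


def repeat_sales_index(pairs, n_periods):
    """Basic repeat-sales index from pairs of sales of the same house.

    pairs: iterable of (first_period, first_price, second_period, second_price).
    Returns an array of length n_periods with the base period (0) set to 1.0.
    Unweighted sketch only: no reweighting or outlier downweighting.
    """
    rows, y = [], []
    for s, p1, t, p2 in pairs:
        if s == t:
            continue  # a same-period resale says nothing about index movement
        row = np.zeros(n_periods - 1)  # one column per non-base period
        if t > 0:
            row[t - 1] += 1.0  # period of the second sale
        if s > 0:
            row[s - 1] -= 1.0  # period of the first sale
        rows.append(row)
        y.append(np.log(p2 / p1))  # each house acts as its own control
    if not rows:
        raise ValueError("need at least one pair of sales in different periods")
    # Least squares for the log index; if some period is never touched by a
    # pair, lstsq returns the minimum-norm solution for that column.
    beta, *_ = np.linalg.lstsq(np.array(rows), np.array(y), rcond=None)
    return np.exp(np.concatenate([[0.0], beta]))
```

Only homes that appear in `pairs`, that is, homes with at least two sales, enter the estimate at all. That is the sample-size cost, and the reason for the longer lag, described above.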

For the RPX, we chose instead a triple power law (TPL) estimate, ignoring quality and mix issues entirely. The TPL is basically a robust measure of the central tendency of prices. It’s not too different from the median, except that the shape of the distribution's tails provides diagnostics for data-quality problems. The payoff is a much more responsive index, which can be reported daily with a short lag. We spent a lot of time comparing the RPX to the CSI, and found that, while changes in quality and mix of sales could matter in principle, in practice the two approaches yield essentially the same answer, even over periods of years. My (biased) inclination, therefore, is to prefer the RPX approach. Your mileage may vary.
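The TPL fit itself isn't described here, so the sketch below does not reproduce it. As a plainly labeled stand-in for "a robust central tendency plus tail diagnostics," it summarizes one period's sale prices by their median and by a Hill estimate of the upper-tail power-law exponent. The function names and the choice of `k` (how many of the largest sales feed the tail estimate) are hypothetical. A sudden drop in the estimated exponent, meaning a fatter upper tail, could flag data errors or an unusual mix of sales worth inspecting.

```python
import numpy as np


def hill_tail_index(values, k):
    """Hill estimator of the upper-tail power-law exponent from the k largest values."""
    x = np.sort(np.asarray(values, dtype=float))[::-1]  # descending order
    if not 0 < k < len(x):
        raise ValueError("k must satisfy 0 < k < len(values)")
    threshold = x[k]  # the (k+1)-th largest value anchors the tail
    return k / np.sum(np.log(x[:k] / threshold))


def robust_period_summary(prices, k=50):
    """Stand-in daily summary (not the TPL): median price plus a tail diagnostic."""
    prices = np.asarray(prices, dtype=float)
    return {
        "median": float(np.median(prices)),
        "upper_tail_index": float(hill_tail_index(prices, k)),
    }
```

Because each period's summary uses only that period's sales, every sale counts and no second sale is needed. That is what allows daily reporting with a short lag.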

One interesting lesson for me from the RPX project was that traders don’t want models. We went in thinking that sophisticated dynamics coupled to data would be a winner. Maybe it is a winner, but people wanted their own sophisticated dynamics. They asked us to provide only a data stream that maximized robustness and transparency and minimized lag. Those are sensible design principles. But I have to wonder whether a little dynamic insight would have been useful as well since, after all, many data consumers evidently did not have an adequate model of the housing market.