Discounting has long been controversial in climate integrated assessment models (IAMs), with prevailing assumptions less than favorable to future generations.

The evidence in favor of aggressive discounting has generally been macro in nature – observed market returns appear to be consistent with discounting of welfare, so the argument goes that we should discount welfare too. To swallow this, you have to believe that markets faithfully reveal preferences and that only on-market returns count. Even then, there’s still the problem that time preference is confounded with inequality aversion. Given that this perspective is contradicted by micro behavior, i.e. actually asking people what they want, it’s hard to see a reason other than convenience for its upper hand in decision making. Ultimately, the situation is neatly self-fulfilling. We observe inflated returns consistent with myopia, so we set myopic hurdles for social decisions, yielding inflated short-term returns.
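One common way to see the confounding is the Ramsey rule, r = rho + eta * g, which this post does not state but which underlies DICE-style models. The sketch below is only an illustration: the growth rate and the three parameter pairs are hypothetical values I picked so that each reproduces the same observed return, which is why the return alone can't tell you how impatient anyone is.

```python
def ramsey_rate(rho, eta, g):
    """Consumption discount rate implied by the Ramsey rule r = rho + eta * g."""
    return rho + eta * g

g = 0.02  # assumed per-capita consumption growth rate (hypothetical)

# Hypothetical pairs: (pure time preference rho, elasticity of marginal utility eta).
# eta doubles as inequality aversion, which is the source of the confounding.
candidates = {
    "impatient, low inequality aversion": (0.035, 1.0),
    "moderate on both": (0.015, 2.0),
    "patient, high inequality aversion": (0.001, 2.7),
}

rates = {name: ramsey_rate(rho, eta, g) for name, (rho, eta) in candidates.items()}

for name, r in rates.items():
    print(f"{name}: r = {r:.3f}")
```

All three stories imply the same market return, yet they carry very different ethical commitments toward future generations.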

It gets worse.

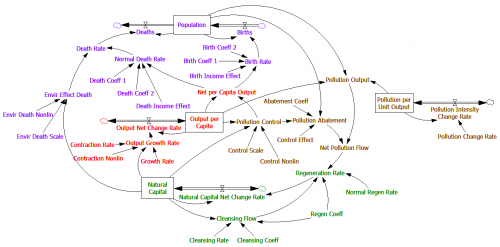

Back in 1997, I attended a talk on an early version of the RICE model, a regional version of DICE. In an optimization model with uniform utility functions, there’s an immediate drive to level incomes across all the regions, because a dollar adds more welfare in a poor region than in a rich one. That’s obviously contrary to the observed global income distribution. A “solution” is to use Negishi weights, which weight each region’s welfare in proportion to the inverse of the marginal utility of consumption there. That prevents income leveling, by explicitly assuming that the rich are rich because they deserve it.
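To make the mechanics concrete, here is a minimal sketch of Negishi weighting for two regions. It is not the RICE implementation; the CRRA utility form, the function names, and the consumption figures are all my own assumptions for illustration.

```python
import numpy as np

def marginal_utility(c, eta=1.0):
    """Marginal utility of consumption for CRRA utility: u'(c) = c**(-eta).
    eta = 1 corresponds to log utility (an assumption for this sketch)."""
    return c ** (-eta)

def negishi_weights(c, eta=1.0):
    """Weights proportional to 1 / u'(c), normalized to sum to 1."""
    w = 1.0 / marginal_utility(c, eta)
    return w / w.sum()

# Hypothetical per-capita consumption (thousand $/yr): rich region, poor region
c = np.array([30.0, 3.0])

w = negishi_weights(c)

# Welfare gain from one more unit of consumption in each region
weighted_mu = w * marginal_utility(c)    # under Negishi weights
equal_mu = 0.5 * marginal_utility(c)     # under equal weights

print("Negishi weights:", w)
print("Weighted marginal utility (Negishi):", weighted_mu)
print("Weighted marginal utility (equal):", equal_mu)
```

With equal weights, the marginal unit is worth more in the poor region, so the optimizer wants to shift consumption there. With Negishi weights, the weighted marginal utilities are identical, so the optimizer sees nothing to gain from a transfer.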

This is a reasonable practical choice if you don’t think you can do anything about income distribution, and you’re not worried that it confounds equity with human capital differences. But when you use the same weights to identify an optimal emissions trajectory, you’re baking the inequity of the current market order into climate policy. In other words, the welfare of people in developed countries is treated as worth 10x more than the welfare of people in developing countries.
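Here is a rough sketch of where a figure like 10x comes from. It assumes log utility and a hypothetical 10:1 ratio of per-capita consumption between a rich and a poor region; neither number is taken from RICE itself.

```python
import math

# Hypothetical per-capita consumption; the 10:1 ratio is chosen to match the 10x figure
c_rich, c_poor = 30.0, 3.0

# With log utility, u'(c) = 1/c, so Negishi weights are proportional to c itself
total = c_rich + c_poor
w_rich, w_poor = c_rich / total, c_poor / total

# Per-person utility change from losing 1% of consumption to climate damages.
# Under log utility this is the same in both regions: ln(0.99c) - ln(c) = ln(0.99).
du = math.log(0.99)

# How much more the objective function cares about the rich person's loss
loss_ratio_negishi = (w_rich * du) / (w_poor * du)
loss_ratio_equal = (0.5 * du) / (0.5 * du)

print(f"Negishi weights: rich loss counts {loss_ratio_negishi:.1f}x the poor loss")
print(f"Equal weights:   rich loss counts {loss_ratio_equal:.1f}x the poor loss")
```

The same proportional harm to a person counts ten times as much in the objective when it lands in the rich region, which is exactly the valuation that then gets carried into the "optimal" emissions path.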

Way back when, I didn’t have the words at hand to gracefully ask why it was a good idea to model things this way, but I sure wish I’d had the courage to forge ahead anyway.

The silly thing is that there’s no need to make such inequitable assumptions to model this problem. Elizabeth Stanton analyzes Negishi weighting and suggests alternatives. Richard Tol explored alternative frameworks some time before. And there are still more options, I think.

In the intertemporal optimization framework, one could treat the situation as a game between self-interested regions (with Negishi weights) and an equitable regulator (with equal weights to welfare). In that setting, mitigation by the rich might look like a form of foreign aid that couldn’t be squandered by the elites of poor regions, and thus I would expect deep emissions cuts.

Better still, dump notions of equilibrium and explore the problem with behavioral models, reserving optimization for policy analysis with fair objectives.

Thanks to Ramon Bueno for passing along the Stanton article.