There’s been a long-running debate over whether wolf reintroduction led to a trophic cascade in Yellowstone. There’s a nice summary here:

Yesterday, June initiated an in-depth discussion of the benefits of wolves in Yellowstone, in the form of a trophic cascade, with the video How Wolves Change the River:

This was predicted by some, and has been studied by William Ripple and Robert Beschta: “Trophic Cascades in Yellowstone: The first fifteen years after wolf reintroduction”, http://www.cof.orst.edu/leopold/papers/RippleBeschtaYellowstone_BioConserv.pdf

Shannon, Roger, and Mike voiced caution that the verdict was still out.

I would like to caution that many of the reported “positive” impacts wolves have had on the environment after coming back to Yellowstone remain unproven or are at least controversial. This is still a hotly debated topic in science but in the popular media the idea that wolves can create a Utopian environment all too often appears to be readily accepted. If anyone is interested, I think Dave Mech wrote a very interesting article about this (attached). As he puts it “the wolf is neither a saint nor a sinner except to those who want to make it so”.

Mech: Is Science in Danger of Sanctifying Wolves

Roger added:

I see 2 points of caution regarding reports of wolves having “positive” impacts in Yellowstone. One is that understanding cause and effect is always hard, nigh onto impossible, when faced with changes that occur in one place at one time. We know that conditions along rivers and streams have changed in Yellowstone but how much “cause” can be attributed to wolves is impossible to determine.

Perhaps even more important is that evaluations of whether changes are “positive” or “negative” are completely human value judgements and have no basis in science, in this case in the science of ecology.

-Ely Field Naturalists

Of course, in a forum discussion, this becomes:

Wolves changed rivers.

No they didn’t.

Yes they did.

(iterate ad nauseam)

Prove it.

… with “prove it” roughly understood to mean establishing that river = a + b*wolves, rejecting the null hypothesis that b=0 at some level of statistical significance.

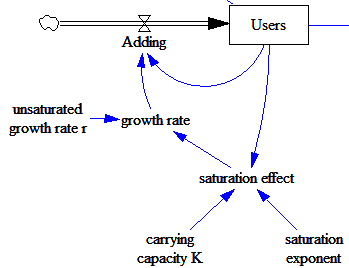

I would submit that this is a poor framing of the problem. Given what we know about nonlinear dynamics in networks like an ecosystem, it’s almost inconceivable that there would not be trophic cascades. Moreover, it’s well known that simple correlation often fails to detect such cascades, even when they are present.
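One way correlation can miss a real effect is when one variable drives the rate of change of another rather than its level. The sketch below is a toy, not a model of Yellowstone: `wolves` is a hypothetical cyclic index, `river` is a hypothetical stock that accumulates `b * wolves`, and the coefficient `b` and step sizes are arbitrary assumptions. By construction, wolves fully determine the river variable, yet the level-to-level correlation comes out near zero.

```python
import math

def pearson(xs, ys):
    """Plain Pearson correlation coefficient for two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / math.sqrt(vx * vy)

def simulate(b=0.5, periods=10, steps_per_period=200):
    """Toy stock-flow system (all values are illustrative assumptions)."""
    dt = 2 * math.pi / steps_per_period
    wolves, river = [], []
    r = 0.0
    for i in range(periods * steps_per_period):
        w = math.sin(i * dt)   # hypothetical cyclic wolf index
        wolves.append(w)
        river.append(r)        # record the current river state
        r += b * w * dt        # wolves drive the river's rate of change
    return wolves, river

wolves, river = simulate()

# Correlation between the two levels: what "river = a + b*wolves" tests.
r_level = pearson(wolves, river)

# Correlation between wolves and the change in the river state.
delta = [river[i + 1] - river[i] for i in range(len(river) - 1)]
r_rate = pearson(wolves[:-1], delta)

print(f"level vs level: {r_level:.3f}")
print(f"level vs rate:  {r_rate:.3f}")
```

A regression of `river` on `wolves` would fail to reject b=0 here, even though the structure of the toy system makes the causal link certain. The relationship only shows up when the analysis respects how the system actually accumulates change.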

A “no effect” default in other situations seems equally naive. Is it really plausible that a disturbance to a project would not have any knock-on effects? That stressing a person’s endocrine system would not cause a path-dependent response? I don’t think so. Somehow we need ordinary conversations to employ more sophisticated notions about models and evidence in complex systems. I think at least two ideas are useful:

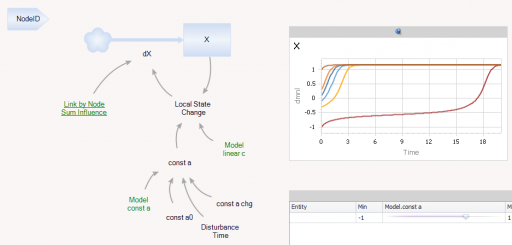

- The idea that macro behavior emerges from micro structure. The appropriate level of description of an ecosystem, or a project, is not a few time series for key populations, but an operational, physical description of how species reproduce and interact with one another, or how tasks get done.

- A Bayesian approach to model selection, in which our belief in a particular representation of a system is proportional to the degree to which it explains the evidence, relative to various alternative formulations, not just a naive null hypothesis.

In both cases, it’s important to recognize that the formal, numerical data is not the only data applicable to the system. It’s also crucial to respect conservation laws, units of measure, extreme conditions tests and other Reality Checks that essentially constitute free data points in parts of the parameter space that are otherwise unexplored.
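The Bayesian model-selection idea can be sketched in a few lines. This is a minimal illustration under loud assumptions: the data are synthetic (generated inside the code from a saturating curve plus a fixed perturbation, not measurements of anything), the three candidate models and their parameters are hypothetical, the noise level `SIGMA` is assumed known, and the priors are equal. A fuller treatment would integrate over each model's uncertain parameters rather than fixing them.

```python
import math

SIGMA = 0.1  # assumed measurement noise (illustrative)

def log_likelihood(observed, predicted, sigma=SIGMA):
    """Gaussian log-likelihood of the observations given model predictions."""
    return sum(
        -0.5 * math.log(2 * math.pi * sigma ** 2)
        - (o - p) ** 2 / (2 * sigma ** 2)
        for o, p in zip(observed, predicted)
    )

def posterior(log_liks, priors):
    """Posterior model probabilities: prior times likelihood, normalized."""
    log_post = {m: math.log(priors[m]) + ll for m, ll in log_liks.items()}
    top = max(log_post.values())  # subtract the max for numerical stability
    weights = {m: math.exp(v - top) for m, v in log_post.items()}
    total = sum(weights.values())
    return {m: w / total for m, w in weights.items()}

# Synthetic data: a saturating response plus a fixed, made-up perturbation.
x = list(range(10))
perturbation = [0.05, -0.04, 0.02, -0.06, 0.03, 0.01, -0.02, 0.04, -0.05, 0.02]
observed = [1 - math.exp(-0.5 * xi) + e for xi, e in zip(x, perturbation)]

# Hypothetical candidate structures, not just "effect" versus "no effect".
models = {
    "no_effect": lambda xi: 0.6,
    "linear": lambda xi: 0.1 * xi,
    "saturating": lambda xi: 1 - math.exp(-0.5 * xi),
}

priors = {name: 1 / len(models) for name in models}
log_liks = {
    name: log_likelihood(observed, [f(xi) for xi in x])
    for name, f in models.items()
}
post = posterior(log_liks, priors)

for name, p in sorted(post.items(), key=lambda kv: -kv[1]):
    print(f"{name:>10}: {p:.4f}")
```

The point of the design is that the null is just one entry in `models`, weighed against structurally different alternatives. Reality Checks could enter the same way, either as extra pseudo-observations at extreme conditions or as priors that rule out formulations violating conservation or units.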

The way we think and talk about these systems guides the way we act. Whether or not we can prove in specific instances that Yellowstone had a trophic cascade, or that the Chunnel project had unintended consequences, we need to manage these systems as if such effects could occur. Complexity needs to be the default assumption.