I’ve been sniffing around for more information on the dynamics of boiling water reactors, particularly in extreme conditions. Here’s what I can glean (caveat: I’m not a nuclear engineer).

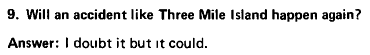

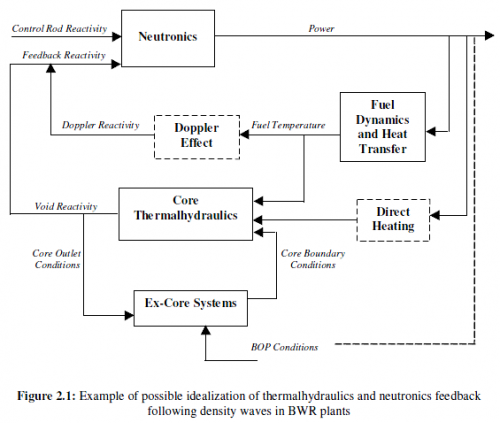

It turns out that there’s quite a bit of literature on reduced-form models of reactor operations. Most of this, though, is focused on operational issues that arise from nonlinear dynamics, on a time scale of less than a second or so. (Update: I’ve posted an example of such a model here.)

Source: Instability in BWR NPPs – F. Maggini 2004

Those are important – it was exactly those kinds of fast dynamics that led to disaster when operators took the Chernobyl plant into unsafe territory. (Fortunately, the Chernobyl design is not widespread.)

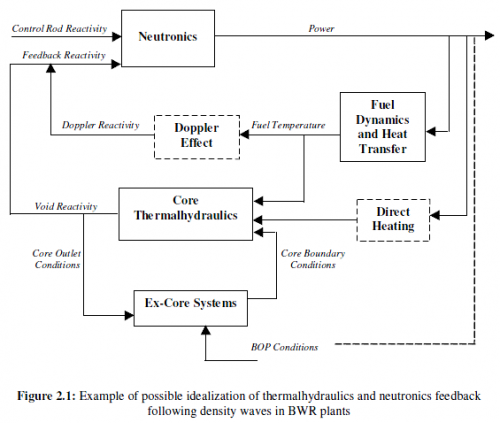

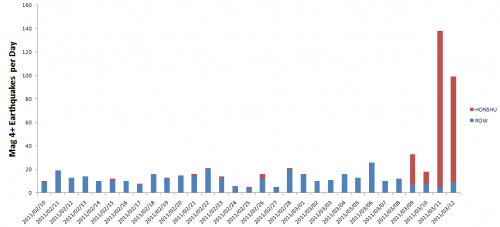

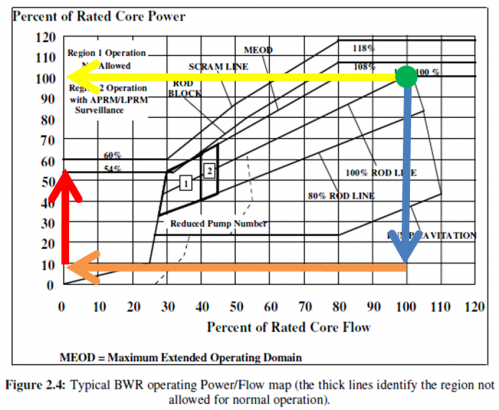

However, I don’t think those are the issues of interest right now. The Japanese reactors are far from their normal operating point, and the dynamics that matter have time scales of hours, not seconds. Here’s a map of the territory:

Source: Instability in BWR NPPs – F. Maggini 2004; colored annotations by me.

The horizontal axis is coolant flow through the core, and the vertical axis is core power – i.e. the rate of heat generation. The green dot shows normal full-power operation. The upper left part of the diagram, above the diagonal, is the danger zone, where high power output and low coolant flow create the danger of a meltdown – like driving your car over a mountain pass with nothing in the radiator.

It’s important to realize that there are constraints on how you move around this diagram. You can quickly turn off the nuclear chain reaction in a reactor by inserting the control rods, but it takes a while for the power output to come down, because radioactive decay of the fission products keeps generating heat after the chain reaction stops.
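To put rough numbers on "it takes a while", here is a minimal sketch using the Way-Wigner approximation for decay heat. This is a textbook rule of thumb, not something taken from the Maggini paper or from plant data. The function name and the one-year operating time are my own assumptions, and the result should be read as an order-of-magnitude estimate only.

```python
def decay_heat_fraction(t_s, t_operating_s=3.15e7):
    """Way-Wigner estimate of decay power as a fraction of pre-shutdown power.

    t_s            -- seconds since the chain reaction stopped (must be > 0)
    t_operating_s  -- seconds the reactor ran at power before shutdown
                      (ASSUMPTION: about one year; not from the original post)
    """
    if t_s <= 0:
        raise ValueError("t_s must be positive")
    return 0.0622 * (t_s ** -0.2 - (t_s + t_operating_s) ** -0.2)


if __name__ == "__main__":
    times = [
        ("1 second", 1),
        ("1 minute", 60),
        ("1 hour", 3600),
        ("1 day", 86400),
        ("1 week", 604800),
    ]
    for label, t in times:
        pct = 100 * decay_heat_fraction(t)
        print(f"{label:>9}: {pct:.2f}% of full power")
```

Under these assumptions, decay heat starts at a few percent of full power right after shutdown and falls to roughly one percent after an hour, then declines much more slowly. That long tail is why a shut-down reactor still needs cooling for days.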

On the other hand, you can turn off the coolant flow pretty fast – turn off the electricity to the pumps, and the flow will stop as soon as the momentum of the fluid is dissipated. If you were crazy enough to turn off the cooling without turning down the power (yellow line), you’d have an immediate catastrophe on your hands.

In an orderly shutdown, you turn off the chain reaction, then wait patiently for the power to come down, while maintaining coolant flow. That’s initially what happened at the Fukushima reactors (blue line). Seismic sensors shut down the reactors, and an orderly cool-down process began.

About an hour later, things went wrong when the tsunami swamped the backup generators. The reactor then followed the orange line to a state with near-zero coolant flow (whatever convection provides) and nontrivial power output from the decay products. At that point, things started heating up. The process takes a while, because there’s a lot of thermal mass in the reactor, so if cooling is quickly restored, no harm is done.
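For a feel of how thermal mass buys time, here is a back-of-envelope sketch that asks how long decay heat takes to boil off a given amount of water once cooling is lost. Everything numeric in it is an assumption for illustration: the decay curve is the Way-Wigner rule of thumb, the latent heat is the atmospheric-pressure value (it is lower at reactor pressure), and the power and water mass in the usage line are hypothetical round numbers, not data for any Fukushima unit. It also ignores the time to heat the water to boiling, heat stored in metal, and any makeup water.

```python
def decay_heat_fraction(t_s, t_operating_s=3.15e7):
    """Way-Wigner rule of thumb; operating time of ~1 year is an ASSUMPTION."""
    return 0.0622 * (t_s ** -0.2 - (t_s + t_operating_s) ** -0.2)


def hours_to_boil_off(full_power_w, water_kg, cooling_lost_s,
                      latent_heat_j_per_kg=2.26e6, dt_s=10.0):
    """Hours after cooling is lost until decay heat has boiled off water_kg.

    full_power_w         -- thermal power before shutdown, in watts
    water_kg             -- water inventory available to boil (hypothetical)
    cooling_lost_s       -- seconds after shutdown at which cooling stops
    latent_heat_j_per_kg -- ASSUMPTION: value at atmospheric pressure
    dt_s                 -- time step for the simple Euler integration
    """
    energy_needed_j = water_kg * latent_heat_j_per_kg
    t = cooling_lost_s
    energy_j = 0.0
    while energy_j < energy_needed_j:
        # Accumulate the decay energy released during this time step.
        energy_j += full_power_w * decay_heat_fraction(t) * dt_s
        t += dt_s
    return (t - cooling_lost_s) / 3600.0


if __name__ == "__main__":
    # Hypothetical round numbers: 1.4 GW thermal, 200 tonnes of water,
    # cooling lost one hour after shutdown.
    hours = hours_to_boil_off(1.4e9, 2.0e5, 3600)
    print(f"Roughly {hours:.0f} hours to boil off the assumed inventory")
```

The point is the shape of the answer, not the number: more water or a later loss of cooling both lengthen the grace period, because the heat source keeps shrinking.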

If cooling isn’t restored, a number of positive feedbacks (nasty vicious cycles) can set in. Boiling in the reactor vessel necessitates venting (releasing small amounts of mostly short-lived radioactive materials); if venting fails, the reactor vessel can fail from overpressure. Boiling reduces the water level in the reactor and makes heat transfer less efficient; fuel rods that boil dry heat up much faster. As fuel rods overheat, their zirconium cladding reacts with water to make hydrogen – which can explode when vented into the reactor building, as we apparently saw at reactors 1 & 3. That can cause collateral damage to systems or people, making it harder to restore cooling.

Things get worse as heat continues to accumulate. Melting fuel rods dump debris in the reactor, obstructing coolant flow, again making it harder to restore cooling. Ultimately, melted fuel could concentrate in the bottom of the reactor vessel, away from the control rods, making power output go back up (following the red line). At that point, it’s likely that the fuel is going to end up in a puddle on the floor of the containment building. Presumably, at that point negative feedback reasserts dominance, as fuel is dispersed over a large area, and can cool passively. I haven’t seen any convincing descriptions of this endgame, but nuclear engineers seem to think it benign – at least compared to Chernobyl. At Chernobyl, there was one less balancing feedback loop (ineffective containment) and an additional reinforcing feedback: graphite in the reactor, which caught fire.

So, the ultimate story here is a race against time. The bad news is that if the core is dry and melting, time is not on your side as you progress faster and faster up the red line. The good news is that, as long as that hasn’t happened yet, time is on the side of the operators – the longer they can hold things together with duct tape and seawater, the less decay heat they have to contend with. Unfortunately, it sounds like we’re not out of the woods yet.

But those are balanced by pronouncements like this:
