Just for fun, I asked Grok for a list of critiques, and it came up with a pretty good one. [I’ve added a few notes and references in brackets.]

System Dynamics (SD) modeling, while powerful for analyzing complex systems, faces several critiques:

- Oversimplification: SD models often simplify complex systems, potentially missing critical details or interactions, leading to inaccurate predictions or insights. [One example of this is the Axtell critique of aggregation, which is a bit of a straw man.]

- Subjectivity in Model Design: Model boundaries, assumptions, and relationships are defined by modelers, which can introduce bias or limit the model’s applicability. [See Dana Meadows’ “The Unavoidable A Priori.”]

- Data Dependency: SD relies heavily on accurate data for calibration and validation. Poor-quality or incomplete data can undermine model reliability. [This is ironic, because SD historically did not rely heavily on formal data, and the usual critique is the opposite. But SD provides excellent ways to improve reliability by combining data with sensible structure.]

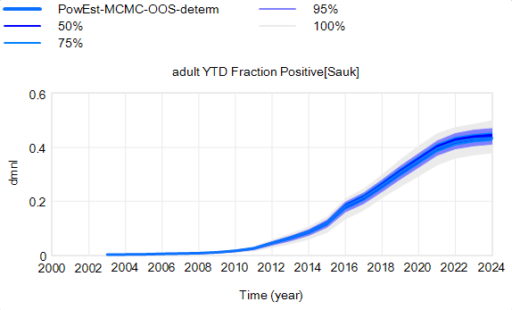

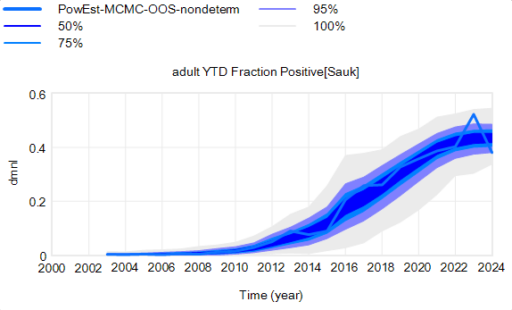

- Difficulty in Validation: Validating SD models is challenging, especially for long-term predictions, as real-world systems evolve unpredictably, and historical data may not suffice. [What method doesn’t have this problem, other than trivial ones that are easy to reject?]

- Computational Complexity: Large SD models can be computationally intensive, making them difficult to scale or analyze in real-time scenarios. [Yep.]

- Limited Predictive Power: SD is better suited for understanding system behavior than precise forecasting, as it struggles with chaotic or highly stochastic systems. [Nope.]

- Misinterpretation Risk: Non-experts may misinterpret model outputs, assuming deterministic results rather than recognizing them as scenarios or trends. [True, but hardly unique to SD. See “Communicating uncertainty and policy sensitivity.”]

- Neglect of Discrete Events: SD focuses on continuous processes, which may inadequately capture discrete events or abrupt changes in systems. [Common, but not a requirement, even in the early days of SD.]

- Resistance to Interdisciplinary Integration: Some critics argue SD struggles to integrate with other modeling approaches (e.g., agent-based modeling), limiting its flexibility. [It might be a struggle, but I don’t think deliberate resistance is the issue.]

- Overemphasis on Feedback Loops: While feedback loops are central to SD, overfocusing on them can downplay external drivers or one-off events impacting the system. [Consider George Richardson’s endogenous point of view.]
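To make the point about discrete events concrete, here is a minimal Python sketch. It is not drawn from any particular SD package, and all names and parameter values are hypothetical. It shows a single stock governed by a balancing feedback loop, integrated with Euler's method as many SD tools do by default, with a one-off discrete shock injected partway through the run. The continuous feedback structure and the abrupt event coexist without difficulty.

```python
def simulate(t_end=20.0, dt=0.25, shock_time=10.0, shock_size=30.0):
    """Euler-integrate one stock with a goal-seeking (balancing) inflow,
    a constant outflow, and a single discrete, one-off shock.
    All parameters are hypothetical illustration values."""
    stock = 100.0        # initial stock level (arbitrary units)
    target = 100.0       # goal the balancing loop adjusts toward
    adjust_time = 4.0    # time constant of the balancing loop
    outflow = 5.0        # constant drain per unit time
    shock_applied = False
    history = []
    steps = int(round(t_end / dt))
    for i in range(steps + 1):
        t = i * dt
        # Discrete event: an instantaneous removal from the stock,
        # applied once, the first time the clock reaches shock_time.
        if not shock_applied and t >= shock_time:
            stock -= shock_size
            shock_applied = True
        history.append((t, stock))
        # Continuous part: the inflow replaces the outflow and closes
        # the gap to the target over adjust_time (balancing feedback).
        inflow = outflow + (target - stock) / adjust_time
        stock += (inflow - outflow) * dt
    return history


if __name__ == "__main__":
    for t, s in simulate()[::8]:
        print(f"t={t:5.2f}  stock={s:7.2f}")
```

The stock holds at its target until the shock, drops abruptly, and then recovers along the exponential path set by the balancing loop. A threshold-triggered decision (for example, placing an order when the stock falls below some level) could be added in the same way, as a conditional inside the time loop.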

These critiques highlight the need for careful model design, validation, and clear communication of limitations when using SD. Would you like me to dive deeper into any specific critique?