Lots of golf.

I couldn’t resist a ClimateDesk article mocking carbon-sucking golf balls, so I took a look at the patent.

I immediately started wondering about the golf ball’s mass balance. There are rules about these things. But the clever Nike engineers thought of everything:

> Generally, a salt may be formed as a result of the reaction between the carbon dioxide absorbent and the atmospheric carbon dioxide. The presence of this salt may cause the golf ball to increase in weight. This increase in weight may be largely negligible, or the increase in weight may be sufficient to be measurable and affect the play characteristics of the golf ball. The United States Golf Association (USGA) official Rules of Golf require that a regulation golf ball weigh no more than 45.93 grams. Therefore, a golf ball in accordance with this disclosure may be manufactured to weigh some amount less than 45.93, so that the golf ball may increase in weight as atmospheric carbon dioxide is absorbed. For example, a finished golf ball manufactured in accordance with this disclosure may weigh 45.5 grams before absorbing any significant amount of atmospheric carbon dioxide.

Let’s pretend that 0.43 grams of CO2 is “significant” and do the math here. World energy CO2 emissions were about 32.6 billion metric tons in 2011. That’s 32.6 gigatons, or petagrams, so you’d need about 76 petaballs per year to absorb it. That’s 76,000,000,000,000,000 balls per year.
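For anyone who wants to check the arithmetic, here is a minimal Python sketch. It uses only the figures quoted above (the 45.93 g limit, the 45.5 g ball, and 32.6 gigatons of CO2) and assumes, generously, that every ball soaks up the full 0.43 g. The function and constant names are mine, not the patent's.

```python
# Figures taken from the post and the patent excerpt.
USGA_LIMIT_G = 45.93   # maximum legal ball weight, grams
BALL_MASS_G = 45.5     # as-manufactured ball weight, grams
EMISSIONS_GT = 32.6    # 2011 energy CO2 emissions, gigatons

GRAMS_PER_GT = 1e15    # 1 gigaton = 1e9 metric tons = 1e15 g = 1 petagram


def balls_needed(emissions_gt=EMISSIONS_GT):
    """Balls per year, assuming each one absorbs the full weight headroom."""
    uptake_g = USGA_LIMIT_G - BALL_MASS_G  # about 0.43 g of CO2 per ball
    return emissions_gt * GRAMS_PER_GT / uptake_g


if __name__ == "__main__":
    print(f"{balls_needed() / 1e15:.1f} petaballs per year")
```

The result lands a little under 76 petaballs, which matches the rounded figure.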

It doesn’t sound so bad if you think of it as 11 million balls per capita per year. Think of the fun you could have with 11 million golf balls! Plus, you’d have 22 million next year, except for the ones you whacked into a water trap.

Because the conversion efficiency is so low (less than half a gram of CO2 uptake per 45-gram ball, i.e., about 1%), you need 100 grams of ball per gram of CO2. This means that the mass flow of golf balls would have to exceed the total mass flow of food, fuels, minerals and construction materials on the planet, by a factor of 50.
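The ratio can be sketched the same way. The ball figures come from the patent excerpt. The 70 gigatons per year for global material flows is my own assumption, a round number in the range usually quoted for total material extraction, so treat the final ratio as an order-of-magnitude check only.

```python
BALL_MASS_G = 45.5            # as-manufactured ball, grams (from the patent)
CO2_UPTAKE_G = 45.93 - 45.5   # weight headroom, grams of CO2 per ball
EMISSIONS_GT = 32.6           # 2011 energy CO2 emissions, gigatons

# ASSUMPTION: rough global flow of food, fuels, minerals and construction
# materials, in gigatons per year. Not a figure from the post.
MATERIAL_FLOW_GT = 70.0


def ball_mass_per_gram_co2():
    """Grams of ball that must be manufactured per gram of CO2 absorbed."""
    return BALL_MASS_G / CO2_UPTAKE_G


def ball_mass_flow_gt():
    """Gigatons of golf balls per year needed to absorb all emissions."""
    return EMISSIONS_GT * ball_mass_per_gram_co2()


def flow_ratio(material_flow_gt=MATERIAL_FLOW_GT):
    """Ball mass flow relative to the assumed global material flow."""
    return ball_mass_flow_gt() / material_flow_gt


if __name__ == "__main__":
    print(f"{ball_mass_per_gram_co2():.0f} g of ball per g of CO2")
    print(f"{ball_mass_flow_gt():.0f} Gt of balls per year")
    print(f"about {flow_ratio():.0f}x the assumed material flow")
```

Under that assumption the ratio comes out close to 50.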

76 petaballs take up about 4850 cubic kilometers, so we’d soon have to decide where to put them. I think Scotland would be appropriate. We’d only have to add a 60-meter layer of balls to the country each year.
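Here is a rough sketch of the volume estimate. The ball diameter is the USGA minimum (1.680 inches, or 42.67 mm). The packing fraction of 0.64 (random close packing of spheres) and Scotland's area of about 78,000 square kilometers are my assumptions, so the depth is only approximate.

```python
import math

BALLS_PER_YEAR = 32.6e15 / (45.93 - 45.5)  # about 76 petaballs
BALL_DIAMETER_M = 0.04267                  # USGA minimum diameter, 42.67 mm

# ASSUMPTIONS, not figures from the post:
PACKING_FRACTION = 0.64      # random close packing of equal spheres
SCOTLAND_AREA_KM2 = 78_000   # approximate land area


def pile_volume_km3(balls=BALLS_PER_YEAR, packing=PACKING_FRACTION):
    """Bulk volume of a loose pile of balls, in cubic kilometers."""
    ball_volume_m3 = (4.0 / 3.0) * math.pi * (BALL_DIAMETER_M / 2) ** 3
    bulk_m3 = balls * ball_volume_m3 / packing  # include the air gaps
    return bulk_m3 / 1e9                        # 1 km^3 = 1e9 m^3


def layer_depth_m(area_km2=SCOTLAND_AREA_KM2):
    """Depth in meters if the pile is spread evenly over the given area."""
    return pile_volume_km3() / area_km2 * 1000.0


if __name__ == "__main__":
    print(f"{pile_volume_km3():.0f} km^3 per year")
    print(f"{layer_depth_m():.0f} m deep over Scotland")
```

With these assumptions the pile comes to a bit under 5,000 cubic kilometers and the layer to roughly 60 meters, in line with the numbers above.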

A train bringing 10,000 tons of coal to a power plant (three days of fuel for 500 MW) would have to make a lot more trips to carry away the 1,000,000 tons of balls needed to offset its emissions. That’s a lot of rail traffic, so it might make sense to equip plants with an array of 820 rotary cannon retrofitted to fire balls into the surrounding countryside. That’s only 90,000 balls per second, after all. Perhaps that’s what analysts mean when they say that there are no silver bullets, only silver buckshot. In any case, the meaning of “climate impacts” would suddenly be very palpable.
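The firing rate follows from the numbers in that paragraph. This sketch assumes metric tons, a 45.5 g ball, and that the 1,000,000 tons must leave the plant over the same three days the coal lasts. The per-cannon rate is simply the total divided evenly across 820 cannon.

```python
BALL_MASS_G = 45.5           # as-manufactured ball, grams
BALL_TONS = 1_000_000        # tons of balls per coal delivery (from the post)
DAYS = 3                     # days of fuel per delivery (from the post)
CANNON_COUNT = 820           # size of the cannon array (from the post)

GRAMS_PER_TON = 1e6          # ASSUMPTION: metric tons
SECONDS_PER_DAY = 86_400


def balls_per_second():
    """Total balls the plant must dispose of each second."""
    total_balls = BALL_TONS * GRAMS_PER_TON / BALL_MASS_G
    return total_balls / (DAYS * SECONDS_PER_DAY)


def rounds_per_minute_per_cannon(cannon=CANNON_COUNT):
    """Firing rate each cannon must sustain, in rounds per minute."""
    return balls_per_second() / cannon * 60.0


if __name__ == "__main__":
    print(f"{balls_per_second():,.0f} balls per second in total")
    print(f"{rounds_per_minute_per_cannon():,.0f} rounds per minute per cannon")
```

This gives somewhere between 80,000 and 90,000 balls per second, close to the rounded 90,000, and a few thousand rounds per minute per cannon.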

Dealing with this enormous mass flow would be tough, but there would be some silver linings. For starters, the earth’s entire fossil fuel output would be diverted to making plastics, so emissions would plummet, and the whole scale of the problem would shrink to manageable proportions. Golf balls are pretty tough, so those avoided emissions could be sequestered for decades. In addition, golf balls float, and they’re white, so we could release them in the arctic to replace melting sea ice.

Who knows what other creative uses of petaballs the free market will invent?

Update, courtesy of Jonathan Altman:

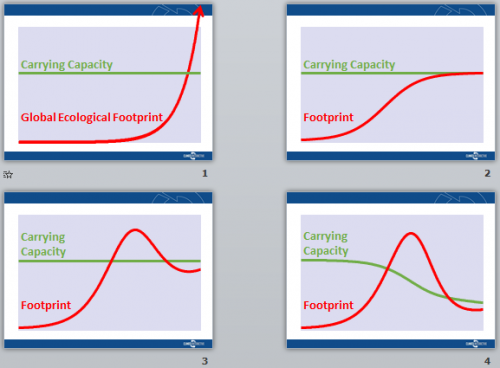

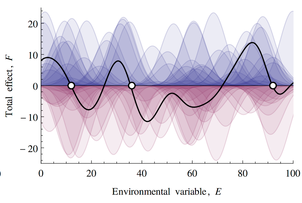

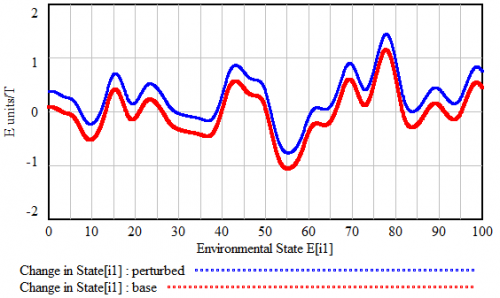

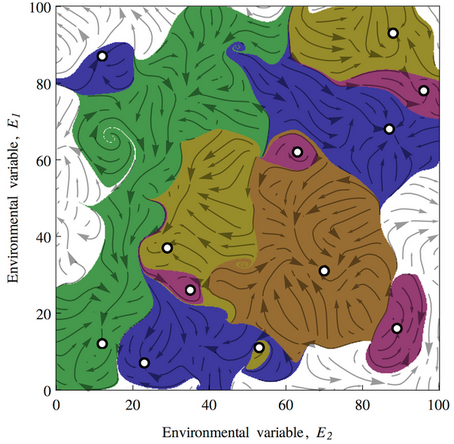

If this looks familiar, there’s a reason. What’s happening along the E dimension is a lot like what happens along the time dimension in

This leads to a variety of conclusions about ecological stability, for which I encourage you to have a look at the full paper. It’s interesting to ponder the applicability and implications of this conceptual model for social systems.
