Yet another systems thinking classic is the riddle of the French farmer and the lily pads. This requires reasoning about exponential growth.

This may be too easy without the typical complex setup of the riddle, but I’d say the AI nailed it again.
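The exponential arithmetic behind the riddle is easy to check by brute force. This is a minimal sketch that assumes the common phrasing: the lily pads double every day and cover the pond on day 30. The day count and the names `coverage` and `first_day_reaching` are illustrative assumptions, not taken from the conversation above.

```python
# Lily pad riddle: a minimal sketch of exponential doubling.
# Assumption (illustrative, not from the post): coverage doubles every day
# and the pond is completely covered on day 30.
FULL_DAY = 30


def coverage(day: int, full_day: int = FULL_DAY) -> float:
    """Fraction of the pond covered on a given day (1.0 on full_day)."""
    return 2.0 ** (day - full_day)


def first_day_reaching(fraction: float, full_day: int = FULL_DAY) -> int:
    """First whole day on which coverage is at least `fraction`."""
    day = 0
    while coverage(day, full_day) < fraction:
        day += 1
    return day


if __name__ == "__main__":
    print(first_day_reaching(0.5))  # → 29: half covered only one day before the end
    for day in range(25, 31):
        print(day, f"{coverage(day):.1%}")
```

Printing the last few days shows why the riddle is a systems classic: five days before the end, the pond still looks nearly empty.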

Continuing with the theme, I tried the department store problem out on ChatGPT. This is a common test of stock-flow reasoning, in which participants assess the peak stock of people in a store from data on the inflow and outflow.

I posed a simplified version of the problem:

Interestingly, I had intended to have 6 people enter at 8am, but I made a typo. ChatGPT did a remarkable job of organizing my data into exactly the form I’d doodled in my notebook, but then happily integrated to wind up with -2 people in the store at the end.

This is pretty cool, but it’s interesting that ChatGPT was happy to correct the number of people in the room, without making the corresponding correction to people leaving. That makes the table inconsistent.

We got there in the end, but I think ChatGPT’s enthusiasm for reality checks may be a little weak. Overall, though, I’d still say this is a pretty good demonstration of stock-flow reasoning. I’d be curious how humans would perform on the same problem.
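The integration ChatGPT performed amounts to accumulating net flow, and the missing reality check is a one-line test on the stock. A minimal sketch, using hypothetical hourly counts (the numbers from my actual prompt are not reproduced here), with `integrate_store` as an illustrative name:

```python
# Department-store stock-flow sketch with HYPOTHETICAL hourly counts
# (the post's actual numbers are not reproduced here).
HOURS = ["8am", "9am", "10am", "11am", "12pm"]
ENTERING = [6, 4, 7, 3, 1]
LEAVING = [0, 2, 3, 6, 10]


def integrate_store(entering, leaving, initial=0):
    """Accumulate net flow hour by hour; return the stock at the end of each hour.

    Raises ValueError if the stock ever goes negative, the kind of reality
    check that catches a typo like the one described above.
    """
    if len(entering) != len(leaving):
        raise ValueError("inflow and outflow series must have the same length")
    stock = initial
    history = []
    for inflow, outflow in zip(entering, leaving):
        stock += inflow - outflow
        if stock < 0:
            raise ValueError(f"stock went negative ({stock}); the data are inconsistent")
        history.append(stock)
    return history


if __name__ == "__main__":
    people = integrate_store(ENTERING, LEAVING)
    peak = max(people)
    print("people in store:", dict(zip(HOURS, people)))
    print("peak:", peak, "at the end of the", HOURS[people.index(peak)], "hour")
```

With these made-up numbers the stock peaks at the end of the last hour in which more people enter than leave, which is the key insight the department store task is designed to probe.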

Research indicates that insights about stock-flow management don’t necessarily generalize from one situation to another. People can fill their bathtubs without comprehending the federal debt or COVID prevalence.

ChatGPT struggles a bit with the climate bathtub, so I wondered if it could reason successfully about real bathtubs.

The last sentence is a little tricky, but I think ChatGPT is assuming that the drain might not be at the bottom of the tub. Overall, I’d say the AI nailed this one.

Following up on our earlier foray into AI conversations about dynamics, I decided to probe ChatGPT’s understanding of bathtub dynamics further. First I repeated our earlier question about climate:

This is close, but note that it’s suggesting that a decrease in emissions corresponds with a decrease in concentration. This is not necessarily true in general: the concentration falls only if emissions drop below removals, so what matters is the level of emissions relative to removals, not merely the direction of change. ChatGPT seems to recognize the issue, but fails to account for it completely in its answer. My parameter choice turned out to be a little unfortunate, because a 50% reduction in CO2 emissions is fairly close to the boundary between rising and falling CO2 concentrations in the future.

I asked again with a smaller reduction in emissions. This should have an unambiguous effect: emissions would remain above removals, so the CO2 concentration would continue to rise, but at a slower rate.

This time the answer is a little better, but it’s not clear whether “lead to a reduction in the concentration of CO2 in the atmosphere” means a reduction relative to what would have happened otherwise, or relative to today’s concentration. Interestingly, ChatGPT does get that the emissions reduction doesn’t reduce temperature directly; it just slows the rate of increase.
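The bathtub logic can be made concrete with a toy integration of dC/dt = emissions − removals. This is a sketch in illustrative units only: the numbers are hypothetical, chosen so that removals are half of current emissions, which puts a 50% cut exactly on the boundary; removals are also held constant, although in reality they depend on the concentration itself.

```python
# Climate "bathtub" sketch in ILLUSTRATIVE units: these are not real
# emissions or removal figures, and removals are held constant for simplicity
# (in reality they depend on the concentration itself).
EMISSIONS = 10.0  # hypothetical current emissions, units/year
REMOVALS = 5.0    # hypothetical natural removals, units/year


def concentration_path(emissions, removals, years, initial=100.0):
    """Euler-integrate dC/dt = emissions - removals with a one-year step."""
    c = initial
    path = [c]
    for _ in range(years):
        c += emissions - removals
        path.append(c)
    return path


if __name__ == "__main__":
    for cut in (0.0, 0.2, 0.5, 0.6):
        e = EMISSIONS * (1 - cut)
        path = concentration_path(e, REMOVALS, years=10)
        print(f"{cut:.0%} cut: net inflow {e - REMOVALS:+.1f}/yr, "
              f"concentration after 10 years = {path[-1]:.1f}")
```

A 20% cut still leaves the tub filling, just more slowly; only cuts that push emissions below removals make the level fall.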

A couple weeks ago my wife started probing ChatGPT’s abilities. An early foray suggested that it didn’t entirely appreciate climate bathtub dynamics. She decided to start with a less controversial topic:

If there was a hole that went through the center of the moon, and I jumped in, how long would it take for me to come out the other side?

Initially, it’s spectacularly wrong. It gets the distance formula for constant acceleration right, but misapplies it. The answer is wrong by orders of magnitude, so it must be making a unit error or something. To us, the error is obvious: the moon is thousands of kilometers across, so how could you possibly traverse it in seconds, with only the moon’s tiny gravity to accelerate you?

At the end here, we ask for the moon’s diameter, because we had started a race: I was building a Vensim model, and my son was writing down the equations by hand, looking for a closed-form solution and, when the integral looked ugly, repeating the calculation in Matlab. ChatGPT proved to be a very quick way to look up things like the diameter of the moon, faster even than googling up the Wikipedia page.

Since it was clear that constant acceleration was wrong, we tried to get it to correct itself. We hoped it would come up with F = m(me)*a = G*m(moon)*m(me)/R^2 and solve that.

Ahh … so the gigantic scale error is from assuming a generic 100-meter hole, rather than a hole all the way through to the other side. Also, 9.8 m/s^2 is Earth’s surface gravity.
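The scale of that error is easy to reproduce with the same constant-acceleration formula, t = sqrt(2d/a). This sketch contrasts the mistaken inputs with a crude estimate that at least uses the right distance and the Moon's own surface gravity: fall to the center at constant lunar g, then decelerate symmetrically. The second number is still not the answer (gravity weakens toward the center), but it lands in tens of minutes rather than seconds. The lunar radius and surface gravity are standard values; the function and constant names are illustrative.

```python
import math


def fall_time_constant_accel(distance_m, accel_m_s2):
    """Time to cover a distance from rest at constant acceleration (d = a*t**2/2)."""
    return math.sqrt(2.0 * distance_m / accel_m_s2)


# The mistaken setup: a generic 100 m hole with Earth's surface gravity.
T_WRONG = fall_time_constant_accel(100.0, 9.8)

# A crude but correctly scaled estimate: fall to the center at the Moon's
# surface gravity, then decelerate symmetrically on the way out.
MOON_RADIUS_M = 1.7374e6   # mean lunar radius, m
MOON_SURFACE_G = 1.62      # approximate lunar surface gravity, m/s^2
T_CRUDE = 2.0 * fall_time_constant_accel(MOON_RADIUS_M, MOON_SURFACE_G)

if __name__ == "__main__":
    print(f"100 m hole at 9.8 m/s^2: {T_WRONG:.1f} s")
    print(f"whole Moon at constant lunar g: {T_CRUDE / 60:.0f} min")
```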

Finally, it has arrived at the key concept needed to solve the problem: nonconstant acceleration, a = G*M(moon)/R^2 (where R varies with the jumper’s position in the hole).

Disappointingly, it crashed right at the crucial endpoint, but it’s already done most of the work to lay out the equations and collect the mass, radius and gravitational constant needed. It’s still stubbornly applying the constant acceleration formula at the end, but I must say that we were pretty impressed at this point.

In the meantime, the Vensim model was nearly done, with a bit of assistance on the input numbers from ChatGPT. There were initially a few glitches, like forgetting to reverse the sign of the gravitational force at the center of the moon. But once it worked, it was easily extensible to variations in planet size, starting above or below the surface, etc. Puzzlingly, the hand calculation was yielding a different answer (some kind of trivial hand computation error), but Matlab agreed with Vensim. Matlab was faster to code, but less interactive, and less safe because it didn’t permit checking units.
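For readers who want to try the race themselves, here is a small simulation in the same spirit. It is not our Vensim or Matlab model; it's a sketch under one extra assumption, that the Moon has uniform density. By the shell theorem, only the mass inside the jumper's radius then pulls, so a = −G·M·r/R³, the sign flips automatically at the center, and the motion is simple harmonic with a closed-form transit time of π·sqrt(R³/(G·M)). The Moon's real mass distribution is not quite uniform, so treat the result as approximate.

```python
import math

G = 6.674e-11            # gravitational constant, m^3 kg^-1 s^-2
MOON_MASS = 7.342e22     # kg
MOON_RADIUS = 1.7374e6   # mean radius, m


def accel(r):
    """Acceleration at signed position r along the tunnel (r = 0 at the center).

    Assumes uniform density: inside, only the enclosed mass pulls, so
    a = -G*M*r/R^3 (the sign reverses automatically at the center).
    Outside the surface it reverts to inverse-square gravity.
    """
    if abs(r) <= MOON_RADIUS:
        return -G * MOON_MASS * r / MOON_RADIUS**3
    return -G * MOON_MASS * math.copysign(1.0, r) / r**2


def transit_time(dt=0.1, max_steps=10_000_000):
    """Semi-implicit Euler from rest at one surface until the jumper stops on the far side."""
    r, v, t = MOON_RADIUS, 0.0, 0.0
    for _ in range(max_steps):
        v += accel(r) * dt   # update velocity first...
        r += v * dt          # ...then position with the new velocity
        t += dt
        if r < 0 and v >= 0:  # velocity has reversed past the center: far-side turning point
            return t
    raise RuntimeError("did not reach the far side; check dt")


CLOSED_FORM = math.pi * math.sqrt(MOON_RADIUS**3 / (G * MOON_MASS))

if __name__ == "__main__":
    print(f"simulated:   {transit_time() / 60:.1f} min")
    print(f"closed form: {CLOSED_FORM / 60:.1f} min")
```

Agreement between the numerical integration and the closed form plays the same role Matlab did for us: an independent check on the model.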

I’d hesitate to call this a success for the AI. It was a useful adjunct to a modeler who knew what they were doing. It was impressively fast at laying out the structure of the problem. But it was even faster at blurting out the wrong answer with an air of confidence. I would not want to fly in a plane designed by ChatGPT yet. To be fair, the system isn’t really designed to do physics, but a lot of reasoning about things like the economy or COVID requires some skills that it apparently doesn’t yet have.

There are lots of interesting developments in machine learning for time series modeling, but can an AI write about systems sensibly? I decided to try a few free online AI content generators.

Using system dynamics modeling for project delay and disruption

1. System Dynamics Modeling (SDM)

System dynamics modeling is a mathematical model that helps us understand how systems work and how they interact with each other. SDM is useful for understanding complex systems and their behavior over time. In addition, it can help predict future outcomes based on current conditions.

2. Project Delay & Disruption

Project delays and disruptions occur frequently in projects. These events cause significant problems for organizations and individuals alike. A project delay occurs when a project is delayed past its original completion date. A project disruption occurs when a project is disrupted before it reaches its original completion date. Both types of project delays and disruptions can have negative effects on the success of a project.

3. Causes of Project Delays & Disruptions

There are many causes of project delays and disruptions. Some of these causes include:

• Poor planning

• Unclear requirements

• Lack of communication

• Poor management

• Miscommunication

• Poor leadership

• Poor team coordination

• Poor resource allocation

Well, we didn’t get the rework cycle or any feedback effects, but not an entirely useless effort.
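For contrast, here is roughly what the missing rework cycle looks like as a model. This is a minimal textbook-style sketch with hypothetical structure and parameters, not a model from any project: work flows out of the backlog, a fraction is flawed, and the flaws sit undiscovered for a while before flowing back into the backlog, so the tail of the project stretches out well beyond what the initial scope and staffing suggest.

```python
def project_duration(scope=1000.0, capacity=50.0, quality=0.8,
                     discovery_time=4.0, dt=0.25, max_weeks=500.0):
    """Weeks until 99% of the scope is genuinely done, in a minimal rework cycle.

    Hypothetical structure: work is drawn from the backlog at up to `capacity`
    tasks/week. A fraction `quality` is done correctly; the rest becomes
    undiscovered rework, which is found after an average delay of
    `discovery_time` weeks and flows back into the backlog.
    """
    to_do, correct, undiscovered = scope, 0.0, 0.0
    t = 0.0
    while correct < 0.99 * scope:
        if t > max_weeks:
            raise RuntimeError("project never converged; check parameters")
        work_rate = min(capacity, to_do / dt)      # can't work faster than the backlog allows
        discovery = undiscovered / discovery_time  # first-order discovery delay
        to_do += (discovery - work_rate) * dt
        correct += quality * work_rate * dt
        undiscovered += ((1.0 - quality) * work_rate - discovery) * dt
        t += dt
    return t


if __name__ == "__main__":
    print("no rework (quality 1.0):", project_duration(quality=1.0), "weeks")
    print("with rework (quality 0.8):", project_duration(quality=0.8), "weeks")
```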

Do climate tipping points signal the onset of Limits to Growth?

Climate change is a serious human problem that causes concern among many people. Some believe that climate change is caused by human activity and that it will bring about a catastrophic future. However, others believe that it is natural and that there are ways to prevent it from causing further damage. Regardless of whether climate change is caused by human activity or not, it has the potential to cause major damage to the planet. Many believe that we are approaching climate tipping points, which could have catastrophic consequences for humanity.

One reason why climate tipping points signal the onset of limits to growth is because they are a harbinger of future environmental disasters. These disasters would occur because of the way we have altered the climate and what we have done to the planet. For example, the Larsen B ice shelf in Antarctica broke away in 2002 and disintegrated within four years due to global warming. Climate tipping points signal the onset of limits to growth because they are a harbinger of future environmental disasters. Governments and environmental groups should prepare for future environmental disasters by investing in renewable resources and making changes in agriculture to reduce food insecurity.

Another reason why climate tipping points signal the onset of limits to growth is because they are a harbinger of future economic problems. Scientists have warned us about manmade climate change for years; however, most people ignore their warnings and keep on using fossil fuels anyway. This is causing major problems for our planet, including droughts, rising sea levels and more frequent natural disasters. Unfortunately, many people are paying little attention to the warnings; they think they can ignore them and avoid problems later. Governments and environmental groups should prepare for future economic problems by investing in renewable resources and making changes in agriculture to reduce food insecurity.

I have to say, the AI is making more sense than the South Dakota legislature.

This is a case of a deer that had been found dead by an early hunter, then found by another hunter on the same day. These hunters were in the woods for several days, hunting deer. Then, for a week or more, the deer had eaten enough grass to cover itself, leaving the deer lying in its own feces. In the United States, this is called wasting. Chronic wasting is an infectious disease that, in humans, is caused by bacteria. When a person is infected with chronic wasting, they begin losing weight and become weak. They begin to smell bad, have sores on their body, lose their appetite, feel tired, listless and weak, having trouble standing up. Some people lose consciousness. Their physical…

I guess we still need modelers for some things.

I’m skeptical of the idea that machine learning and big data will automatically lead to some kind of technological nirvana, a Star Trek future in which machines quickly learn all the physics needed for us to live happily ever after.

First, every other human technology has been a mixed bag, with improvements in welfare coming along with some collateral damage. It just seems naive to think that this one will be different.

These are not the primary problem.

Second, I think there are some good reasons to think that problems will get harder at the same rate that machines get smarter. The big successes I’ve seen are localized point prediction problems, not integrated systems with a lot of feedback. As soon as cause and effect are separated in time and space by complex mechanisms, you’re into sloppy systems territory, where data may constrain only a few parameters at a time. Making progress in such systems will increasingly require integration of multiple theories and data from multiple sources.

People in domains that have made heavy use of big data increasingly recognize this.
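A toy example shows what "sloppy" means in practice. In this sketch (an illustration I've chosen, not drawn from any particular study), the model is a sum of two decaying exponentials with similar rates, y(t) = exp(−k1·t) + exp(−k2·t). The eigenvalues of JᵀJ, which is the Fisher information up to the noise variance, measure how well the data pin down each parameter direction: the sum of the rates is well constrained, while their difference is barely constrained at all.

```python
import math


def sensitivity_matrix(k1, k2, times):
    """Entries (a, b, c) of J^T J = [[a, b], [b, c]] for y(t) = exp(-k1 t) + exp(-k2 t)."""
    a = b = c = 0.0
    for t in times:
        d1 = -t * math.exp(-k1 * t)  # dy/dk1
        d2 = -t * math.exp(-k2 * t)  # dy/dk2
        a += d1 * d1
        b += d1 * d2
        c += d2 * d2
    return a, b, c


def eigenvalues_2x2(a, b, c):
    """Eigenvalues of the symmetric matrix [[a, b], [b, c]], largest first."""
    mean = (a + c) / 2.0
    spread = math.sqrt(((a - c) / 2.0) ** 2 + b * b)
    return mean + spread, mean - spread


TIMES = [0.5 * i for i in range(1, 11)]  # hypothetical sampling times 0.5 .. 5.0
BIG, SMALL = eigenvalues_2x2(*sensitivity_matrix(1.0, 1.1, TIMES))

if __name__ == "__main__":
    print(f"stiff direction: {BIG:.4f}, sloppy direction: {SMALL:.6f}, ratio: {BIG / SMALL:.0f}")
```

Real feedback-rich models have many more parameters, and the spread between well- and poorly-constrained directions typically grows with them.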

DARPA put out a request for a BS detector for science. I responded with a strategy for combining the results of multiple models (using Rahmandad, Jalali & Paynabar’s generalized meta-analysis with some supporting infrastructure like data archiving) to establish whether new findings are consistent with an existing body of knowledge.
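The core question, whether a new finding is consistent with an existing body of evidence, can be illustrated with far simpler machinery than generalized meta-analysis, which is considerably more general than this. The sketch below swaps in a plain fixed-effect, inverse-variance pooled estimate and a z-score for the gap between a new result and the pool. All numbers and function names are hypothetical.

```python
import math


def pooled_estimate(estimates, std_errors):
    """Fixed-effect inverse-variance pooling: returns (mean, standard error)."""
    weights = [1.0 / se**2 for se in std_errors]
    total = sum(weights)
    mean = sum(w * x for w, x in zip(weights, estimates)) / total
    return mean, math.sqrt(1.0 / total)


def consistency_z(new_estimate, new_se, pooled_mean, pooled_se):
    """z-score of the gap between a new finding and the pooled prior evidence."""
    return (new_estimate - pooled_mean) / math.sqrt(new_se**2 + pooled_se**2)


# Hypothetical prior studies of the same effect (made-up numbers).
PRIOR = [0.30, 0.25, 0.35, 0.28]
PRIOR_SE = [0.05, 0.08, 0.10, 0.06]

if __name__ == "__main__":
    mean, se = pooled_estimate(PRIOR, PRIOR_SE)
    for claim in (0.32, 0.90):
        z = consistency_z(claim, 0.10, mean, se)
        verdict = "inconsistent" if abs(z) > 1.96 else "consistent"  # ~5% two-sided
        print(f"new estimate {claim:.2f}: z = {z:+.2f} ({verdict} with prior evidence)")
```

A flagged result isn't necessarily BS, of course; it may be a discovery. The point is that the check draws on quantitative prior results, not just the text of the claim.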

DARPA didn’t bite. I have no idea why, but could speculate from the RFC that they had in mind something more like a big data approach that would use text analysis to evaluate claims. Hopefully not, because a text-only approach will have limited power. Here’s why.

Here’s a nice example of how AI is killing us now. I won’t dignify this with a link, but I found it posted by a LinkedIn user.

I’d call this an example of artificial stupidity, not AI. The article starts off sounding plausible, but quickly degenerates into complete nonsense that’s either automatically generated or translated, with catastrophic results. But it was good enough to make it past someone’s cognitive filters.

For years, corporations have targeted on World Health Organization to indicate ads to and once to indicate the ads. AI permits marketers to, instead, specialize in what messages to indicate the audience, therefore, brands will produce powerful ads specific to the target market. With programmatic accounting for 67% of all international show ads in 2017, AI is required quite ever to make sure the inflated volume of ads doesn’t have an effect on the standard of ads.

One style of AI that’s showing important promise during this space is tongue process (NLP). informatics could be a psychological feature machine learning technology which will realize trends in behavior and traffic an equivalent method an individual’s brain will. mistreatment informatics during this method can match ads with people supported context, compared to only keywords within the past, thus considerably increasing click rates and conversions.

I’ve been watching the debate over AI with some amusement, as if it were some other planet at risk. The Musk-Zuckerberg kerfuffle is the latest installment. Ars Technica thinks they’re both wrong:

At this point, these debates are largely semantic.

I don’t see how anyone could live through the last few years and fail to notice that networking and automation have enabled an explosion of fake news, filter bubbles and other information pathologies. These are absolutely policy relevant, and smarter AI is poised to deliver more of what we need least. The problem is here now, not from some impending future singularity.

Ars gets one point sort of right:

Plus, computer scientists have demonstrated repeatedly that AI is no better than its datasets, and the datasets that humans produce are full of errors and biases. Whatever AI we produce will be as flawed and confused as humans are.

I don’t think the data is really the problem; it’s the assumptions applied to the data, and the context in which that happens, that are really problematic. In any case, automating flawed aspects of ourselves is not benign!

Here’s what I think is going on:

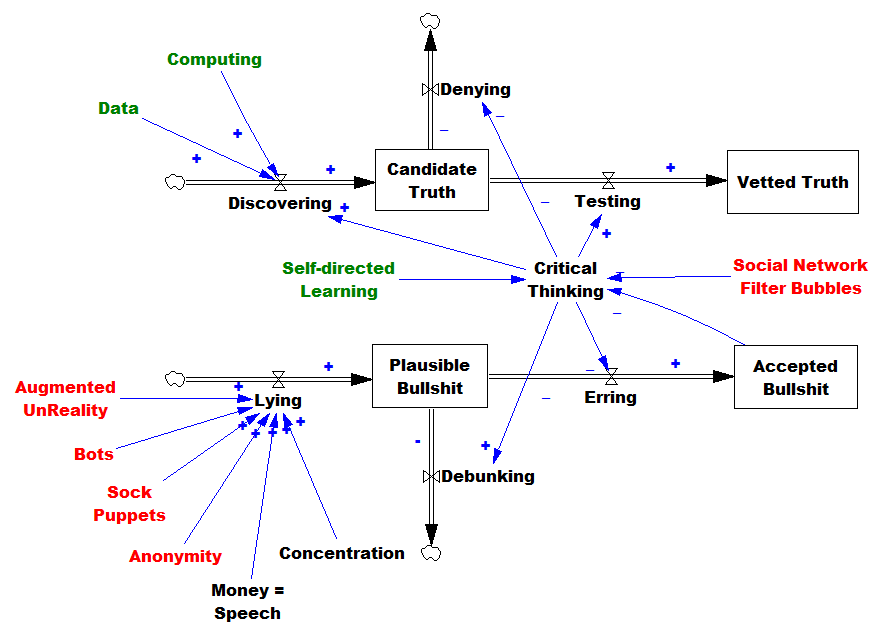

AI, and more generally computing and networks, are doing some good things. More data and computing power accelerate the discovery of truth. But truth is still elusive and expensive. On the other hand, AI is making bullsh!t really cheap (pardon the technical jargon). There are many mechanisms by which this occurs:

These amplifiers of disinformation serve increasingly concentrated wealth and power elites that are isolated from their negative consequences, and benefit from fueling the process. We wind up wallowing in a sea of information pollution (the deadliest among the sins of managing complex systems).

As BS becomes more prevalent, various reinforcing mechanisms start kicking in. Accepted falsehoods erode critical thinking abilities, and promote the rejection of ideas like empiricism that were the foundation of the Enlightenment. The proliferation of BS requires more debunking, taking time away from discovery. A general erosion of trust makes it harder to solve problems, opening the door for opportunistic rent-seeking non-solutions.

I think it’s a matter of survival for us to do better at critical thinking, so we can shift the balance between truth and BS. That might be one area where AI could safely assist. We have other assets as well, like the explosion of online learning opportunities. But I think we also need some cultural solutions, like better management of trust and anonymity, brakes on concentration, sanctions for lying, rewards for prediction, and more time for reflection.