The NIPCC critique is, ironically, a compelling argument in favor of the IPCC assessment. Why? Well, science is about evaluation of competing hypotheses. The NIPCC report collects a bunch of alternatives to mainstream climate science in one place, where it’s easy to see how pathetic they are. If this is the best climate skeptics can muster, their science must be exceedingly weak.

The NIPCC (Nongovernmental International Panel on Climate Change, a.k.a. Not IPCC) is the Heartland Institute’s rebuttal of the IPCC assessments. Apparently the latest NIPCC report has been mailed to zillions of teachers. As a homeschooling dad, I’m disappointed that I didn’t get mine. Well, not really.

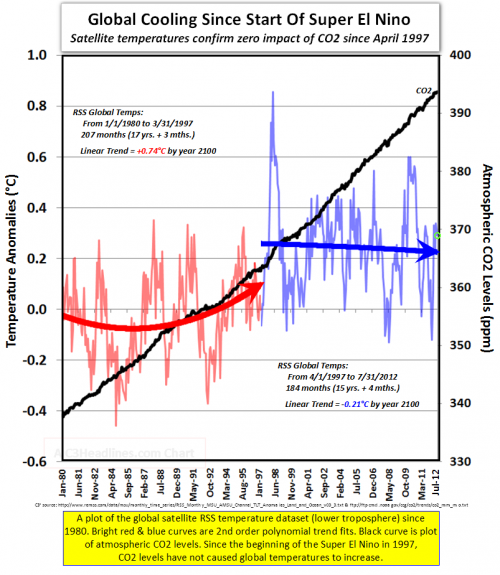

It would probably take more pages to debunk the NIPCC report than it occupies, but others are chipping away at it. Debunking some aspects, like the temperature cherry-picking, is like shooting fish in a barrel.

The NIPCC Summary for Policymakers (SPM), and presumably the entire report that it summarizes, seems to labor under the misapprehension that the IPCC is itself a body that conducts science. In fact, the IPCC assessments are basically a giant literature review. So, when the Heartland panel writes,

> In contradiction of the scientific method, the IPCC assumes its implicit hypothesis is correct and that its only duty is to collect evidence and make plausible arguments in the hypothesis’s favor.

we must remember that “the IPCC” is shorthand for a vast conspiracy of scientists, coordinated by an invisible hand.

The report organizes the IPCC argument into three categories: “Global Climate Model (GCM) projections,” “postulates,” and “circumstantial evidence.” This is a fairly ridiculous caricature of the actual body of work. Most of what is dismissed as postulates could better be described as “things we’re too lazy to explore properly,” for example. But my eye strays straight to the report’s misconceptions about modeling.

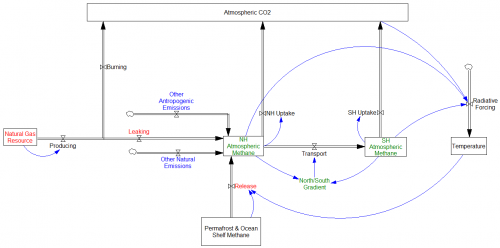

First, the NIPCC seems to have missed the fact that GCMs are not the only models in use. There are EMICs (Earth system models of intermediate complexity) and low-order energy balance models (EBMs) as well.

The NIPCC has taken George Box’s “all models are wrong, some are useful” and run with it:

> … Global climate models produce meaningful results only if we assume we already know perfectly how the global climate works, and most climate scientists say we do not (Bray and von Storch, 2010).

How are we to read this … all models are useless, unless they’re perfect? Of course, no models are perfect, therefore all models are useless. Now that’s science!

NIPCC trots out a von Neumann quote that’s almost as tired as Box:

> with four parameters I can fit an elephant, and with five I can make him wiggle his trunk

In models with lots of reality checks available (i.e., laws of physics), it just isn’t that easy. And the Earth is a very big elephant, which means that there’s a rather vast array of data to be fit.

The NIPCC seems to be aware of only a few temperature series, but the AR5 report devotes 200 pages (Chapter 9) to model evaluation, with results against a wide variety of spatial and temporal distributions of physical quantities. Models are obviously far from perfect, but a lot of the results look good, in ways that exceed the wildest dreams of social system modelers.

NIPCC doesn’t seem to understand how this whole “fit” thing works.

> Model calibration is faulty as it assumes all temperature rise since the start of the industrial revolution has resulted from human CO2 emissions.

This is blatantly false, not only because it contradicts the actual practice of attribution, but because there is no such parameter as “fraction of temp rise due to anthro CO2.” One can’t assume the answer to the attribution question without passing through a lot of intermediate checks, like conforming to physics and to data other than global temperature. In complex models, the contribution of any individual parameter to the outcome is likely to be unknown to the modeler, and the model is too big to calibrate by brute force. The vast majority of parameters must therefore be established bottom up, from physics or submodels, which makes it extremely difficult for the modeler to impose preconceptions on the complete model.

Similarly,

> IPCC models stress the importance of positive feedback from increasing water vapor and thereby project warming of ~3-6°C, whereas empirical data indicate an order of magnitude less warming of ~0.3-1.0°C.

Data by itself doesn’t “indicate” anything. Data only speaks insofar as it refutes (or fails to refute) a model. So where is the NIPCC model that fits available data and yields very low climate sensitivity?

The bottom line is that, if it were really true that models have little predictive power and admit many alternative calibrations (à la the elephant), it should be easy for skeptics to show model runs that fit the data as well as mainstream results, with assumptions that are consistent with low climate sensitivity. They wouldn’t necessarily need a GCM and a supercomputer; modest EBMs or EMICs should suffice. This they have utterly failed to demonstrate.
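
To give a sense of how small such a test could be, here is a rough sketch of a one-box energy balance model in Python. Everything specific in it is my own illustrative assumption rather than anything taken from the NIPCC or IPCC reports: the function names `run_ebm` and `rmse`, the effective heat capacity of 8 W yr m^-2 K^-1, the instant-doubling scenario, and the use of the common simplified logarithmic formula for CO2 forcing. It is a toy, not a calibrated model.

```python
import math

# Forcing from doubled CO2 (W/m^2), using the common simplified
# logarithmic approximation F = 5.35 * ln(C / C0).
F_2X = 5.35 * math.log(2.0)


def run_ebm(forcing, sensitivity, heat_capacity=8.0, dt=1.0):
    """Integrate C * dT/dt = F(t) - lam * T with explicit Euler steps.

    forcing       : list of radiative forcings, one per time step (W/m^2)
    sensitivity   : equilibrium warming per CO2 doubling (K)
    heat_capacity : effective heat capacity (W yr m^-2 K^-1), assumed value
    dt            : time step (years)
    """
    lam = F_2X / sensitivity  # feedback parameter (W m^-2 K^-1)
    temp, temps = 0.0, []
    for f in forcing:
        temp += dt * (f - lam * temp) / heat_capacity
        temps.append(temp)
    return temps


def rmse(model, observed):
    """Root-mean-square misfit between a model run and a data series."""
    pairs = list(zip(model, observed))
    return math.sqrt(sum((m - o) ** 2 for m, o in pairs) / len(pairs))


# Hypothetical scenario: CO2 doubles instantly and is held for 500 years.
step_forcing = [F_2X] * 500
mainstream = run_ebm(step_forcing, sensitivity=3.0)
low = run_ebm(step_forcing, sensitivity=0.5)
print(round(mainstream[-1], 2), round(low[-1], 2))
```

In this idealized scenario each run simply settles at its assumed sensitivity, which proves nothing by itself. The real exercise would be to replace `step_forcing` with a historical forcing series, compute `rmse` against observed temperatures (and other data) for both settings, and show that the low-sensitivity run fits at least as well. I have not included any data here, so the sketch only shows the shape of the test, not its result.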