It’s a good idea to read things you criticize; checking your sources doesn’t hurt either. One of the most frequent targets of uninformed criticism, passed down from teacher to student with nary a reference to the actual text, must be The Limits to Growth. In writing my recent review of Green & Armstrong (2007), I ran across this tidbit:

> Complex models (those involving nonlinearities and interactions) harm accuracy because their errors multiply. Ascher (1978), refers to the Club of Rome’s 1972 forecasts where, unaware of the research on forecasting, the developers proudly proclaimed, “in our model about 100,000 relationships are stored in the computer.” (page 999)

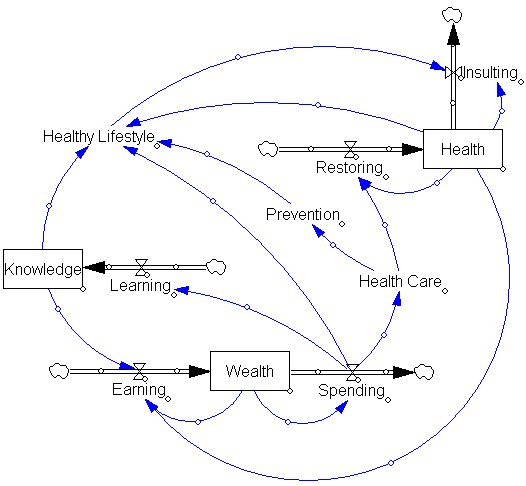

Setting aside the erroneous attributions about complexity, I found the statement that the MIT world models contained 100,000 relationships surprising, as both can be diagrammed on a single large page. I looked up electronic copies of World Dynamics and World3, which have 123 and 373 equations respectively. A third or more of those are inconsequential coefficients or switches for policy experiments. So how did Ascher, or Ascher’s source, get to 100,000? Perhaps by multiplying the number of equations by the number of time steps over the 200-year simulation period, which is hardly a relevant measure of complexity.
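As a rough sanity check on that guess, here is a minimal sketch of the arithmetic. The equation counts and the 200-year period come from the text above; the step sizes are purely hypothetical assumptions for illustration, and the helper name `equation_evaluations` is made up for this example.

```python
# Equation counts quoted in the post.
EQUATION_COUNTS = {"World Dynamics": 123, "World3": 373}

SIMULATION_YEARS = 200  # simulation period mentioned in the post

# ASSUMPTION: hypothetical integration step sizes in years.
# The post does not say which step size either model used.
ASSUMED_STEP_SIZES = (1.0, 0.5, 0.25)


def equation_evaluations(n_equations: int, years: float, dt: float) -> int:
    """Count equation evaluations if every equation is computed once per step."""
    steps = round(years / dt)
    return n_equations * steps


if __name__ == "__main__":
    for model, n in EQUATION_COUNTS.items():
        for dt in ASSUMED_STEP_SIZES:
            total = equation_evaluations(n, SIMULATION_YEARS, dt)
            print(f"{model}: dt={dt} yr -> {total:,} evaluations")
```

Under these assumed step sizes, the products land in the tens to hundreds of thousands, so the multiplication story is at least plausible in order of magnitude. It remains a guess about where the figure came from, not a reconstruction of Ascher's source.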

Meadows et al. tried to steer the reader away from focusing on point forecasts. The introduction to the simulation results reads,

> Each of these variables is plotted on a different vertical scale. We have deliberately omitted the vertical scales and we have made the horizontal time scale somewhat vague because we want to emphasize the general behavior modes of these computer outputs, not the numerical values, which are only approximately known. (page 123)

Many critics have blithely ignored such admonitions, and other comments to the effect of, “this is a choice, not a forecast” or “more study is needed.” Often, critics don’t even refer to the World3 runs, which are inconvenient in that none reaches overshoot in the 20th century, making it hard to establish that “LTG predicted the end of the world in year XXXX, and it didn’t happen.” Instead, critics choose the year XXXX from a table of resource lifetime indices in the chapter on nonrenewable resources (page 56), which were not forecasts at all.