This is a spinoff of our work with C-ROADS: a shareable tool that presents the outcome of current climate commitments in a simple way.

Author: Tom

Dynamics of financial guarantee programs

Ever since the housing market fell apart, I’ve been meaning to write about some excellent work on federal financial guarantee programs, by colleagues Jim Hines (of TUI fame) and Jim Thompson.

Designing Programs that Work.

This document is part of a series reporting on a study of federal financial guarantee programs. The study is concerned with how to design future guarantee programs so that they will be more robust, less prone to problems. Our focus has been on internal (that is, endogenous) weaknesses that might inadvertently be designed into new programs. Such weaknesses may be described in terms of causal loops. Consequently, the study is concerned with (a) identifying the causal loops that can give rise to problematic behavior patterns over time, and (b) considering how those loops might be better controlled.

Their research dates back to 1993, when I was a naive first-year PhD student, but it’s not a bit dated. Rather, it’s prescient. It considers a series of design issues that arise with the creation of government-backed entities (GBEs). From today’s perspective, many of the features identified were the seeds of the current crisis. Jim^2 identify a number of structural innovations that control the undesirable behaviors of the system. It’s evident that many of these were not implemented, and from what I can see won’t be this time around either.

There’s a sophisticated model beneath all of this work, but the presentation is a nice example of a nontechnical narrative. The story, in text and pictures, is compelling because the modeling provided internal consistency and insights that would not have been available through debate or navel rumination alone.

I don’t have time to comment too deeply, so I’ll just provide some juicy excerpts, and you can read the report for details:

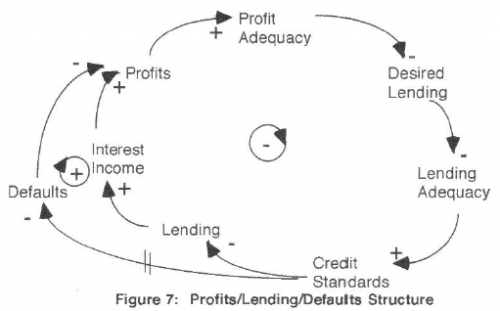

The profit-lending-default spiral

The situation described here is one in which an intended corrective process is weakened or reversed by an unintended self-reinforcing process. The corrective process is one in which inadequate profits are corrected by rising income on an increasing portfolio. The unintended self-reinforcing process is one in which inadequate profits are met with reduced credit standards which cause higher defaults and a further deterioration in profits. Because the fee and interest income from a loan begins to be received immediately, it may appear at first that the corrective process dominates, even if the self-reinforcing is actually dominant. Managers or regulators initially may be encouraged by the results of credit loosening and portfolio building, only to be surprised later by a rising tide of bad news.

As is typical, some well-intentioned policies that could mitigate the problem behavior have unpleasant side-effects. For example, adding risk-based premiums for guarantees worsens the short-term pressure on profits when standards erode, creating a positive loop that could further drive erosion.
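The timing argument in the excerpt (income arrives immediately, defaults arrive later) can be illustrated with a toy simulation. This is a minimal sketch and not the Hines and Thompson model: the function name, the first-order repayment assumption, and every parameter value are hypothetical, chosen only so that looser standards look better at first and worse later.

```python
def simulate_profit(issuance, default_fraction, periods=60, fee_rate=0.05,
                    loan_life=10.0, default_delay=5, loss_per_default=1.0):
    """Toy guarantee portfolio: fee income starts at once, defaults lag behind.

    All parameters are illustrative assumptions, not values from the report.
    """
    portfolio = 0.0
    # Queue of loans already destined to default, one slot per period of delay.
    pending_defaults = [0.0] * default_delay
    profits = []
    for _ in range(periods):
        repayments = portfolio / loan_life        # first-order repayment of principal
        portfolio += issuance - repayments
        pending_defaults.append(issuance * default_fraction)
        defaults = pending_defaults.pop(0)        # loans issued default_delay periods ago
        portfolio -= defaults
        profits.append(fee_rate * portfolio - loss_per_default * defaults)
    return profits


# Tight standards: less lending, few defaults. Loose standards: more lending, more defaults.
tight = simulate_profit(issuance=100.0, default_fraction=0.02)
loose = simulate_profit(issuance=150.0, default_fraction=0.20)

# First period in which the loosened portfolio earns less than the tight one.
crossover = next(t for t, (lo, ti) in enumerate(zip(loose, tight)) if lo < ti)

print("first-period profit, tight vs loose:", tight[0], loose[0])
print("final-period profit, tight vs loose:", round(tight[-1], 1), round(loose[-1], 1))
print("loose falls behind tight in period:", crossover)
```

With these made-up numbers, loosening looks like a success until the first cohort of defaults arrives, which is the "rising tide of bad news" pattern described above.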

Individuals matter after all

From arXiv:

From bird flocks to fish schools, animal groups often seem to react to environmental perturbations as if of one mind. Most studies in collective animal behaviour have aimed to understand how a globally ordered state may emerge from simple behavioural rules. Less effort has been devoted to understanding the origin of collective response, namely the way the group as a whole reacts to its environment. Yet collective response is the adaptive key to survivor, especially when strong predatory pressure is present. Here we argue that collective response in animal groups is achieved through scale-free behavioural correlations. By reconstructing the three-dimensional position and velocity of individual birds in large flocks of starlings, we measured to what extent the velocity fluctuations of different birds are correlated to each other. We found that the range of such spatial correlation does not have a constant value, but it scales with the linear size of the flock. This result indicates that behavioural correlations are scale-free: the change in the behavioural state of one animal affects and is affected by that of all other animals in the group, no matter how large the group is. Scale-free correlations extend maximally the effective perception range of the individuals, thus compensating for the short-range nature of the direct inter-individual interaction and enhancing global response to perturbations. Our results suggest that flocks behave as critical systems, poised to respond maximally to environmental perturbations.

The Obscure Art of Datamodeling in Vensim

There are lots of good reasons for building models without data. However, if you want to measure something (i.e. estimate model parameters), produce results that are closely calibrated to history, or drive your model with historical inputs, you need data. Most statistical modeling you’ll see involves static or dynamically simple models and well-behaved datasets: nice flat files with uniform time steps, units matching (or, alarmingly, ignored), and no missing points. Things are generally much messier with a system dynamics model, which typically has broad scope and (one would hope) lots of dynamics. The diversity of data needed to accompany a model presents several challenges:

- disagreement among sources

- missing data points

- non-uniform time intervals

- variable quality of measurements

- diverse source formats (spreadsheets, text files, databases)

The mathematics for handling the technical estimation problems were developed by Fred Schweppe and others at MIT decades ago. David Peterson’s thesis lays out the details for SD-type models, and most of the functionality described is built into Vensim. It’s also possible, of course, to go a simpler route; even hand calibration is often effective and reasonably quick when coupled with Synthesim.

Either way, you have to get your data corralled first. For a simple model, I’ll build the data right into the dynamic model. But for complicated models, I usually don’t want the main model bogged down with units conversions and links to a zillion files. In that case, I first build a separate datamodel, which does all the integration and passes cleaned-up series to the main model as a fast binary file (an ordinary Vensim .vdf). In creating the data infrastructure, I try to maximize three things:

- Replicability. Minimize the number of manual steps in the process by making the data model do everything. Connect the datamodel directly to primary sources, in formats as close as possible to the original. Automate multiple steps with command scripts. Never use hand calculations scribbled on a piece of paper, unless you’re scrupulous about lab notebooks, or note the details in equations’ documentation field.

- Transparency. Often this means “don’t do complex calculations in spreadsheets.” Spreadsheets are very good at some things, like serving as a data container that gives good visibility. However, spreadsheet calculations are error-prone and hard to audit. So, I try to do everything, from units conversions to interpolation, in Vensim.

- Quality. Replicability and transparency already go a long way toward ensuring quality. However, it’s possible to go further. First, actually look at the data. Take time to build a panel of on-screen graphs so that problems are instantly visible. Use a statistics or visualization package to explore it. Lately, I’ve been going a step further, by writing Reality Checks to automatically test for discontinuities and other undesirable properties of spliced time series. This works well when the data is simply too voluminous to check manually.

This can be quite a bit of work up front, but the payoff is large: less model rework later, easy updates, and higher quality. It’s also easier to generate graphics or statistics that help others to gain confidence in the model, though it’s sometimes important to help them recognize that goodness of fit is a weak test of quality.

It’s good to build the data infrastructure before you start modeling, because that way your drivers and quality control checks are in place as you build structure, so you avoid the pitfalls of an end-of-pipe inspection process. A frequent finding in our corporate work has been that cherished data is in fact rubbish, or means something quite different than what users have historically assumed. Ventana colleague Bill Arthur argues that modern IT practices are making the situation worse, not better, because firms aren’t retaining data as long (perhaps a misplaced side effect of a mania for freshness).
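The automated discontinuity test mentioned under Quality can be sketched outside Vensim. The following is a Python stand-in, not Reality Check syntax: the function name, the median-based rule, and the threshold are all assumptions made for illustration. It compares each rate of change with the typical rate of change, so it tolerates non-uniform time intervals.

```python
from statistics import median


def find_discontinuities(times, values, threshold=5.0):
    """Return the times at which a series jumps far more than it usually moves.

    The threshold (multiples of the median absolute rate of change) is an
    arbitrary illustrative choice, not a recommended setting.
    """
    # Rates of change between consecutive points; dividing by the time step
    # keeps unevenly spaced observations comparable.
    abs_rates = [
        abs((values[i + 1] - values[i]) / (times[i + 1] - times[i]))
        for i in range(len(values) - 1)
    ]
    typical = median(abs_rates)
    return [times[i + 1] for i, rate in enumerate(abs_rates)
            if rate > threshold * typical]


# Hypothetical spliced series: two sources that each grow by 1 per year,
# but the second source starts 20 units too high in 2000.
years = list(range(1990, 2010))
series = [100 + (y - 1990) if y < 2000 else 130 + (y - 2000) for y in years]

flagged = find_discontinuities(years, series)
print("suspicious splice points:", flagged)
```

A check like this only flags candidates. Whether a jump is a splice error or a real event still takes a look at the source.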


Fizzle

Hackers have stolen zillions of emails from CRU. The climate skeptic world is in such a froth that the climateaudit servers have slowed to a crawl. Patrick Michaels has declared it a “mushroom cloud.”

I rather think that this will prove to be a dud. We’ll find out that a few scientists are human, and lots of things will be taken out of context. At the end of the day, climate science will still rest on diverse data from more than a single research center. We won’t suddenly discover that it’s all a hoax and climate sensitivity is Lindzen’s 0.5C, nor will we know any better whether it’s 1.5 or 6C.

We’ll still be searching for a strategy that works either way.

GAMS Rant

I’ve just been looking into replicating the DICE-2007 model in Vensim (as I’ve previously done with DICE and RICE). As usual, it’s in GAMS, which is very powerful for optimization and general equilibrium work. However, it has to be the most horrible language I’ve ever seen for specifying dynamic models – worse than Excel, BASIC, you name it. The only contender for the title of time series horror show I can think of is SQL. I was recently amused when a GAMS user in China, working with a complex, unfinished Vensim model, heavy on arrays and interface detail, 50x the size of DICE, exclaimed, “it’s so easy!” I’d rather go to the dentist than plow through yet another pile of GAMS code to figure out what gsig(T)=gsigma*EXP(-dsig*10*(ORD(T)-1)-dsig2*10*((ord(t)-1)**2));sigma(“1”)=sig0;LOOP(T,sigma(T+1)=(sigma(T)/((1-gsig(T+1))));); means. End rant.
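For what it’s worth, here is one reading of that GAMS fragment as a plain loop in Python. It is a sketch under assumptions: the function name is made up, the parameter values are placeholders rather than the DICE-2007 calibration, and the interpretation (a growth rate `gsig` that decays over 10-year periods, driving a ratio `sigma` recursively from its initial value `sig0`) is inferred from the fragment alone.

```python
import math


def sigma_path(n_periods, sig0, gsigma, dsig, dsig2):
    """Recursive sigma series, following the GAMS fragment term by term."""
    # GAMS ORD(T) is 1-based, so period T contributes (T - 1) to the exponent.
    gsig = [
        gsigma * math.exp(-dsig * 10 * (t - 1) - dsig2 * 10 * (t - 1) ** 2)
        for t in range(1, n_periods + 1)
    ]
    sigma = [sig0]                       # sigma("1") = sig0
    for t in range(1, n_periods):        # t plays the role of GAMS T
        # sigma(T+1) = sigma(T) / (1 - gsig(T+1)); gsig[t] is gsig(T+1) in 0-based terms.
        sigma.append(sigma[-1] / (1 - gsig[t]))
    return gsig, sigma


# Placeholder values for illustration only.
gsig, sigma = sigma_path(n_periods=6, sig0=0.13, gsigma=-0.07, dsig=0.003, dsig2=0.0)
for period, (g, s) in enumerate(zip(gsig, sigma), start=1):
    print(period, round(g, 5), round(s, 5))
```

The GAMS loop also runs on the last period, where the assignment to `sigma(T+1)` falls outside the set and has no effect, so the Python loop simply stops one period earlier.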

National Geographic takes a bath

The 2009 World Energy Outlook

Following up on Carlos Ferreira’s comment, I looked up the new IEA WEO, unveiled today. A few excerpts from the executive summary:

- The financial crisis has cast a shadow over whether all the energy investment needed to meet growing energy needs can be mobilised.

- Continuing on today’s energy path, without any change in government policy, would mean rapidly increasing dependence on fossil fuels, with alarming consequences for climate change and energy security.

- Non-OECD countries account for all of the projected growth in energy-related CO2 emissions to 2030.

- The reductions in energy-related CO2 emissions required in the 450 Scenario (relative to the Reference Scenario) by 2020 — just a decade away — are formidable, but the financial crisis offers what may be a unique opportunity to take the necessary steps as the political mood shifts.

- With a new international climate policy agreement, a comprehensive and rapid transformation in the way we produce, transport and use energy — a veritable low-carbon revolution — could put the world onto this 450-ppm trajectory.

- Energy efficiency offers the biggest scope for cutting emissions

- The 450 Scenario entails $10.5 trillion more investment in energy infrastructure and energy-related capital stock globally than in the Reference Scenario through to the end of the projection period.

- The cost of the additional investments needed to put the world onto a 450-ppm path is at least partly offset by economic, health and energy-security benefits.

- In the 450 Scenario, world primary gas demand grows by 17% between 2007 and 2030, but is 17% lower in 2030 compared with the Reference Scenario.

- The world’s remaining resources of natural gas are easily large enough to cover any conceivable rate of increase in demand through to 2030 and well beyond, though the cost of developing new resources is set to rise over the long term.

- A glut of gas is looming

This is pretty striking language, especially if you recall the much more business-as-usual tone of WEOs in the 90s.

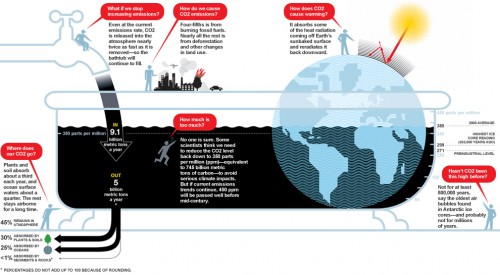

The other bathtubs – population

I’ve written quite a bit about bathtub dynamics here. I got the term from “Cloudy Skies” and other work by John Sterman and Linda Booth Sweeney.

We report experiments assessing people’s intuitive understanding of climate change. We presented highly educated graduate students with descriptions of greenhouse warming drawn from the IPCC’s nontechnical reports. Subjects were then asked to identify the likely response to various scenarios for CO2 emissions or concentrations. The tasks require no mathematics, only an understanding of stocks and flows and basic facts about climate change. Overall performance was poor. Subjects often select trajectories that violate conservation of matter. Many believe temperature responds immediately to changes in CO2 emissions or concentrations. Still more believe that stabilizing emissions near current rates would stabilize the climate, when in fact emissions would continue to exceed removal, increasing GHG concentrations and radiative forcing. Such beliefs support wait and see policies, but violate basic laws of physics.

The climate bathtubs are really a chain of stock processes: accumulation of CO2 in the atmosphere, accumulation of heat in the global system, and accumulation of meltwater in the oceans. How we respond to those, i.e. our emissions trajectory, is conditioned by some additional bathtubs: population, capital, and technology. This post is a quick look at the first.
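The first bathtub in that chain can be sketched in a few lines. This is a deliberately crude, single-stock illustration of the point in the abstract (stable emissions do not mean stable concentrations), not a carbon cycle model: the function name, the first-order removal rule, and all numbers are assumptions chosen for illustration.

```python
def concentration_path(emissions, removal_fraction, start, preindustrial, years):
    """One-stock bathtub: the stock rises whenever inflow exceeds outflow."""
    concentration = start
    path = [concentration]
    for _ in range(years):
        # Outflow assumed proportional to the excess over a preindustrial level.
        removal = removal_fraction * (concentration - preindustrial)
        concentration += emissions - removal
        path.append(concentration)
    return path


# Illustrative values only (roughly ppm and ppm/year), with emissions held constant.
path = concentration_path(emissions=4.0, removal_fraction=0.02,
                          start=390.0, preindustrial=280.0, years=100)
print("start:", path[0], "after 50 years:", round(path[50], 1),
      "after 100 years:", round(path[100], 1))
```

Emissions never change in this run, yet the stock keeps rising, because the inflow exceeds the outflow for the whole century.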

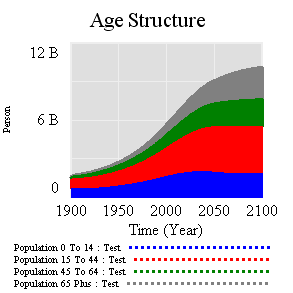

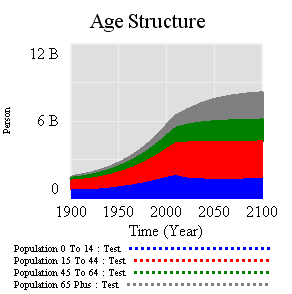

I’ve grabbed the population sector from the World3 model. Regardless of what you think of World3’s economics, there’s not much to complain about in the population sector. It looks like this:

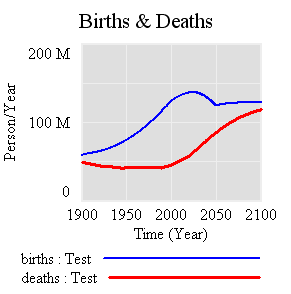

People are categorized into young, reproductive age, working age, and older groups. This 4th order structure doesn’t really capture the low dispersion of the true calendar aging process, but it’s more than enough for understanding the momentum of a population. If you think of the population in aggregate (the sum of the four boxes), it’s a bathtub that fills as long as births exceed deaths. Roughly tuned to history and projections, the bathtub fills until the end of the century, but at a diminishing rate as the gap between births and deaths closes:

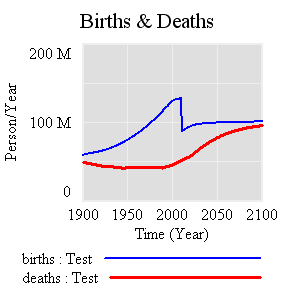

Notice that the young (blue) peak in 2030 or so, long before the older groups come into near-equilibrium. An aging chain like this has a lot of momentum. A simple experiment makes that momentum visible. Suppose that, as of 2010, fertility suddenly falls to slightly below replacement levels, about 2.1 children per couple. (This is implemented by changing the total fertility lookup). That requires a dramatic shift in birth rates:

However, that doesn’t translate to an immediate equilibrium in population. Instead, population still grows to the end of the century, but reaches a lower level. Growth continues because the aging chain is internally out of equilibrium (there’s also a small contribution from ongoing extension of life expectancy, but it’s not important here). Because growth has been ongoing, the demographic pyramid is skewed toward the young. So, while fertility is constant per person of child-bearing age, the population of prospective parents grows for a while as the young grow up, and thus births continue to increase. Also, at the time of the experiment, the elderly population has not reached equilibrium given rising life expectancy and growth down the chain.

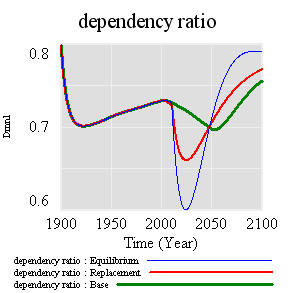
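The momentum argument can be reproduced with a stripped-down aging chain. This is a sketch, not the World3 sector: the residence times, the initial stocks, and the assumption of no mortality before old age are all hypothetical simplifications. With no early mortality, replacement fertility in this toy is exactly 2.0 rather than about 2.1.

```python
def simulate_aging_chain(years=100, total_fertility=2.0):
    """Four-stock aging chain, Euler-integrated with a one-year time step."""
    # Initial stocks are skewed toward the young, as after a period of growth.
    young, reproductive, working, older = 30.0, 30.0, 12.0, 5.0
    totals, births_history = [], []
    for _ in range(years + 1):
        totals.append(young + reproductive + working + older)
        # Half of the reproductive stock bears children, spread over 30 years.
        births = total_fertility * (reproductive / 2.0) / 30.0
        births_history.append(births)
        maturing = young / 15.0                  # young -> reproductive age
        leaving_reproductive = reproductive / 30.0
        retiring = working / 20.0                # working age -> older
        deaths = older / 15.0                    # mortality only in the last stock
        young += births - maturing
        reproductive += maturing - leaving_reproductive
        working += leaving_reproductive - retiring
        older += retiring - deaths
    return totals, births_history


totals, births_history = simulate_aging_chain()
print("initial population:", totals[0])
print("population after 100 years:", round(totals[-1], 1))
print("births per year, start vs end:", births_history[0], round(births_history[-1], 2))
```

Even at replacement fertility from the first year, the total keeps rising for decades in this toy, because the large young cohort moves into the reproductive stock and the older stocks are still filling.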

Achieving immediate equilibrium in population would require a much more radical fall in fertility, in order to bring births immediately in line with deaths. Implementing such a change would require shifting yet another bathtub – culture – in a way that seems unlikely to happen quickly. It would also have economic side effects. Often, you hear calls for more population growth, so that there will be more kids to pay social security and care for the elderly. However, that’s not the first effect of accelerated declines in fertility. If you look at the dependency ratio (the ratio of the very young and old to everyone else), the first effect of declining fertility is actually a net benefit (except to the extent that young children are intrinsically valued, or working in sweatshops making fake Gucci wallets):

The bottom line of all this is that, like other bathtubs, it’s hard to change population quickly, partly because of the physics of accumulation of people, and partly because it’s hard to even talk about the culture of fertility (and the economic factors that influence it). Population isn’t likely to contribute much to meeting 2020 emissions targets, but it’s part of the long game. If you want to win the long game, you have to anticipate long delays, which means getting started now.

The model (Vensim binary, text, and published formats): World3 Population.vmf World3-Population.mdl World3 Population.vpm

Hope is not a method

My dad pointed me to this interesting Paul Romer interview on BBC Global Business. The BBC describes Romer as an optimist in a dismal science. I think of Paul Romer as one of the economists who founded the endogenous growth movement, though he’s probably done lots of other interesting things. I personally find the models typically employed in the endogenous growth literature to be silly, because they retain assumptions like perfect foresight (we all know that hard optimal control math is the essence of a theory of behavior, right?). In spite of their faults, those models are a huge leap over earlier work (exogenous technology) and subsequent probing around the edges has sparked a very productive train of thought.

About 20 minutes in, Romer succumbs to the urge to bash the Club of Rome (economists lose their union card if they don’t do this once in a while). His reasoning is part spurious, and part interesting. The spurious part is his blanket condemnation, that simply everything about it was wrong. That’s hard to accept or refute, because it’s not clear if he means Limits to Growth, or the general tenor of the discussion at the time. If he means Limits, he’s making the usual straw man mistake. To be fair, the interviewer does prime him by (incorrectly) summarizing the Club of Rome argument as “running out of raw materials.” But Romer takes the bait, and adds, “… they were saying the problem is we wouldn’t have enough carbon resources, … the problem is we have way too much carbon resources and are going to burn too much of it and damage the environment….” If you read Limits, this was actually one of the central points – you may not know which limit is binding first, but if you dodge one limit, exponential growth will quickly carry you to the next.

Interestingly, Romer’s solution to sustainability challenges arises from a more micro, evolutionary perspective rather than the macro single-agent perspective in most of the growth literature. He argues against top-down control and for structures (like his charter cities) that promote greater diversity and experimentation, in order to facilitate the discovery of new ways of doing things. He also talks a lot about rules as a moderator for technology – for example, that it’s bad to invent a technology that permits greater harvest of a resource, unless you also invent rules that ensure the harvest remains sustainable. I think he and I and the authors of Limits would actually agree on many real-world policy prescriptions.

However, I think Romer’s bottom-up search for solutions to human problems through evolutionary innovation is important but will, in the end, fail in one important way. Evolutionary pressures within cities, countries, or firms will tend to solve short-term, local problems. However, it’s not clear how they’re going to solve problems larger in scale than those entities, or longer in tenure than the agents running them. Consider resource depletion: if you imagine for a moment that there is some optimal depletion path, a country can consume its resources faster or slower than that. Too fast, and they get-rich-quick but have a crisis later. Too slow, and they’re self-deprived now. But there are other options: a country can consume its resources quickly now, build weapons, and seize the resources of the slow countries. Also, rapid extraction in some regions drives down prices, creating an impression of abundance, and discouraging other regions from managing the resource more cautiously. The result may be a race for the bottom, rather than evolution of creative long term solutions. Consider also climate: even large emitters have little incentive to reduce, if only their own damages matter. To align self-interest with mitigation, there has to be some kind of external incentive, either imposed top-down or emerging from some mix of bottom-up cooperation and coercion.

If you propose to solve long term global problems only through diversity, evolution, and innovation, you are in effect hoping that those measures will unleash a torrent of innovation that will solve the big problems coincidentally, or that we’ll stay perpetually ahead in the race between growth and side-effects. That could happen, but as the Dartmouth clinic used to say about contraception, “hope is not a method.”