In a recent conversation about data requirements for future Vensim, a colleague observed that the availability of ready access to ‘big data’ in corporations has had curious side effects. One might have hoped for a flowering of model-driven conversations about the firm. Instead, ubiquitous access to data has led managers to spend less time contemplating what data might actually be important. Crucial data for model calibration are often harder to get than they were in the bad old days, because:

- The perceived time scale of relevance is shorter than ever; there are no enduring generic structures, only transient details, so old data gets tossed or ignored.

- Prevalent databases are still lousy at constructing aggregate time series.

- Zombie managerial instincts for hoarding data still walk the earth.

- Users are riveted by slick graphics which conceal quality issues in the underlying data.

Perhaps this is a consequence of the fact that data collection has become incredibly cheap. In the short run, business is about execution of essentially fixed strategies, and raw data is pretty darn useful for that. The problem is that the long run challenge of formulating strategies requires an investment of time to turn data into models (mental or formal), but modeling hasn’t experienced the same productivity revolution. This could leave companies more strategically blind than ever, and therefore accelerate the process of inadvertently walking off a cliff.

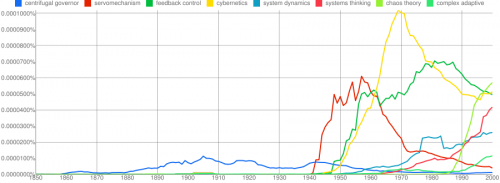

Around the same time, I ran into this Wired article about the power of feedback to change behavior. It details a variety of interesting innovations, from radar speed signs to brainwave headbands. I’ve experimented with similar stuff, like Daytum (found here, clever, but soon abandoned) and the Kill-a-watt (still used occasionally).

In the past two or three years, the plunging price of sensors has begun to foster a feedback-loop revolution. …

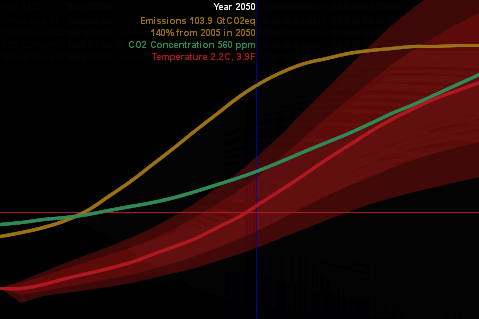

And today, their promise couldn’t be greater. The intransigence of human behavior has emerged as the root of most of the world’s biggest challenges. Witness the rise in obesity, the persistence of smoking, the soaring number of people who have one or more chronic diseases. Consider our problems with carbon emissions, where managing personal energy consumption could be the difference between a climate under control and one beyond help. And feedback loops aren’t just about solving problems. They could create opportunities. Feedback loops can improve how companies motivate and empower their employees, allowing workers to monitor their own productivity and set their own schedules. They could lead to lower consumption of precious resources and more productive use of what we do consume. They could allow people to set and achieve better-defined, more ambitious goals and curb destructive behaviors, replacing them with positive actions. Used in organizations or communities, they can help groups work together to take on more daunting challenges. In short, the feedback loop is an age-old strategy revitalized by state-of-the-art technology. As such, it is perhaps the most promising tool for behavioral change to have come along in decades.

But the applications don’t quite live up to these big ambitions:

… The GreenGoose concept starts with a sheet of stickers, each containing an accelerometer labeled with a cartoon icon of a familiar household object—a refrigerator handle, a water bottle, a toothbrush, a yard rake. But the secret to GreenGoose isn’t the accelerometer; that’s a less-than-a-dollar commodity. The key is the algorithm that Krejcarek’s team has coded into the chip next to the accelerometer that recognizes a particular pattern of movement. For a toothbrush, it’s a rapid back-and-forth that indicates somebody is brushing their teeth. … In essence, GreenGoose uses sensors to spray feedback loops like atomized perfume throughout our daily life—in our homes, our vehicles, our backyards. “Sensors are these little eyes and ears on whatever we do and how we do it,” Krejcarek says. “If a behavior has a pattern, if we can calculate a desired duration and intensity, we can create a system that rewards that behavior and encourages more of it.” Thus the first component of a feedback loop: data gathering.

Then comes the second step: relevance. GreenGoose converts the data into points, with a certain amount of action translating into a certain number of points, say 30 seconds of teeth brushing for two points. And here Krejcarek gets noticeably excited. “The points can be used in games on our website,” he says. “Think FarmVille but with live data.” Krejcarek plans to open the platform to game developers, who he hopes will create games that are simple, easy, and sticky. A few hours of raking leaves might build up points that can be used in a gardening game. And the games induce people to earn more points, which means repeating good behaviors. The idea, Krejcarek says, is to “create a bridge between the real world and the virtual world. This has all got to be fun.”

This strikes me as a rehash of the corporate experience: use cheap data to solve execution problems, but leave the big strategic questions unaddressed. The torrent of the measurable might even push the crucial intangibles – love, justice, happiness, wisdom – further toward the unmanaged margins of our existence.

My guess is that these technologies can help us solve our universal personal problems, particularly in areas like health and fitness where rewards are proximate in time and space. There might even be beneficial spillovers from healthier, happier personal lifestyles to reduced resource demand and

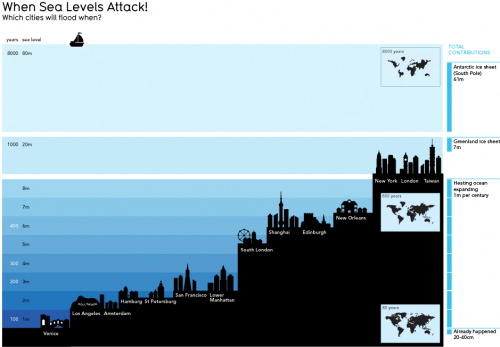

But I don’t see them doing much to solve global environmental problems, or even large-scale universal problems like urban decay and poverty. Those problems exist, not for lack of data, but for lack of feedback that is compelling to the same degree as the pressures of markets and other financial and social systems, which aren’t all about fun. In the US, we’re not even willing to entertain the idea of creating climate feedback loops. I suspect that the solutions to our biggest problems awaits some other technology that makes us much more productive at devising good strategies based on shared mental models.

Click to enlarge.

Click to enlarge.