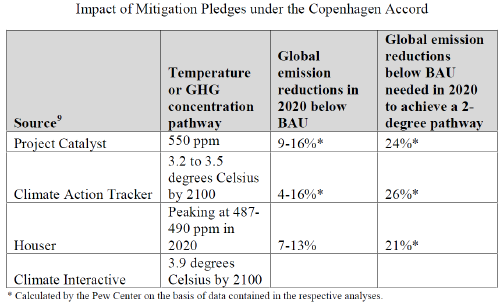

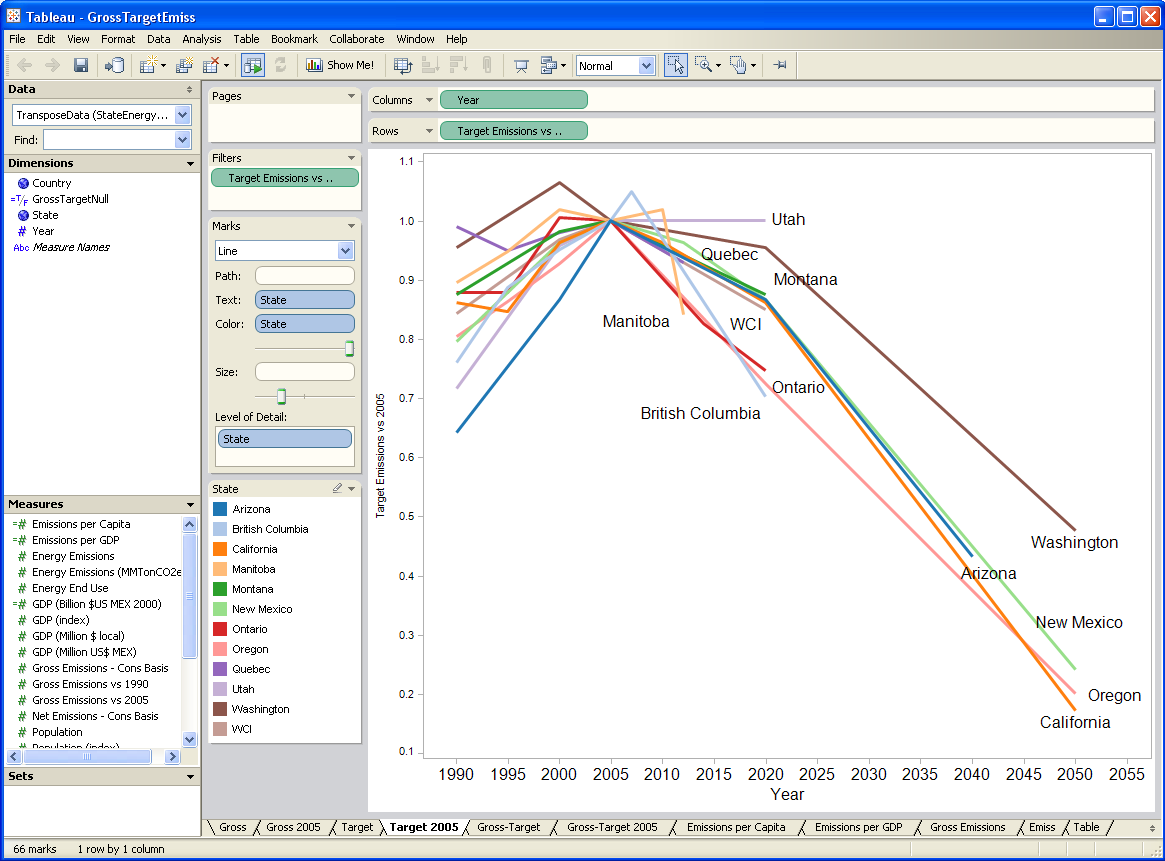

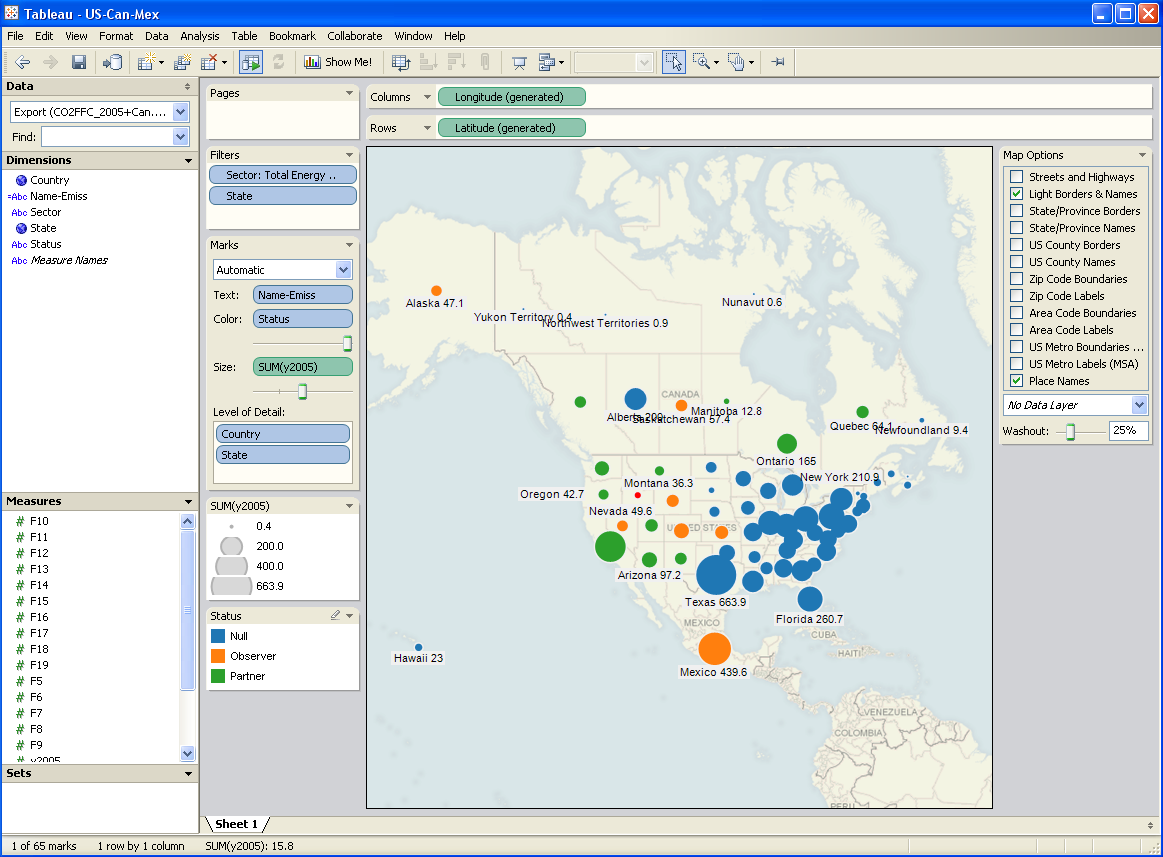

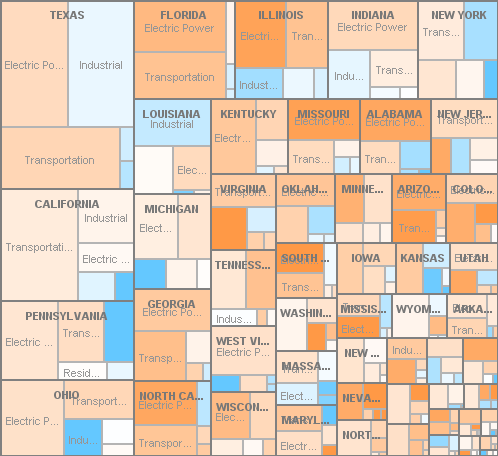

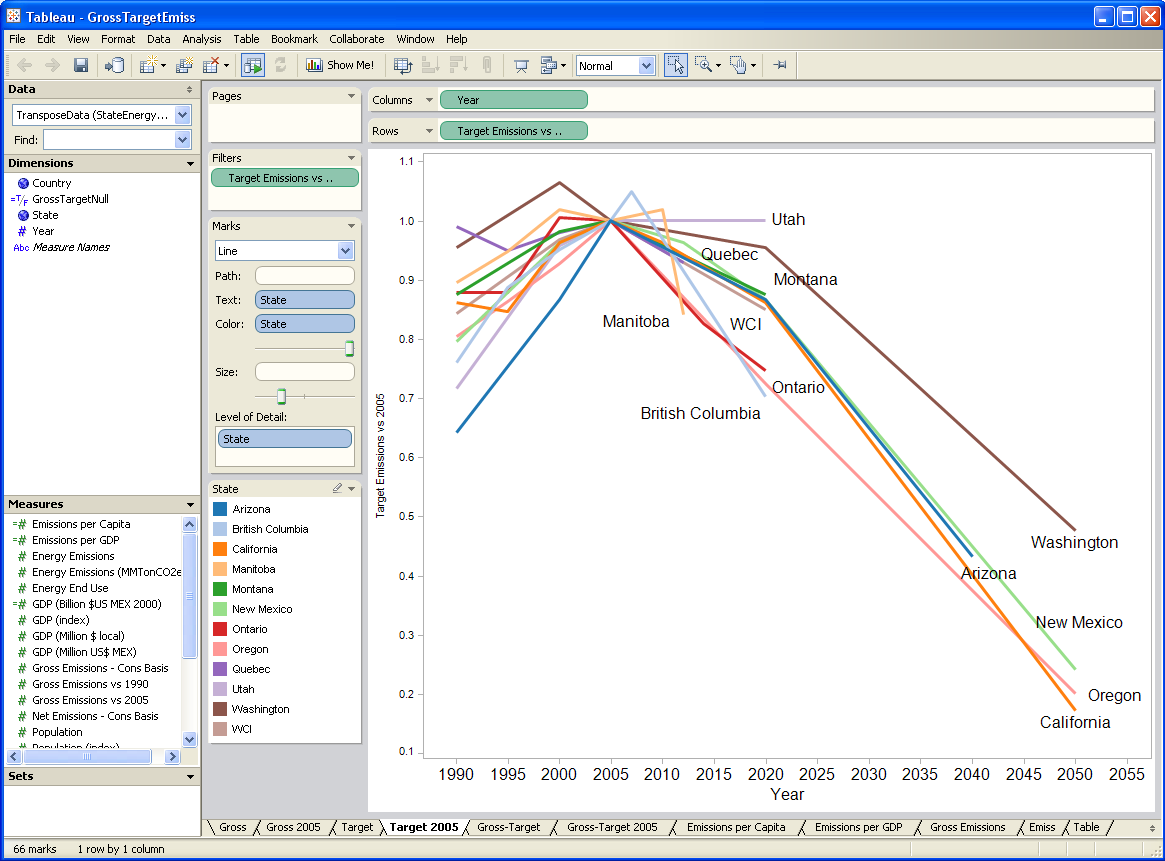

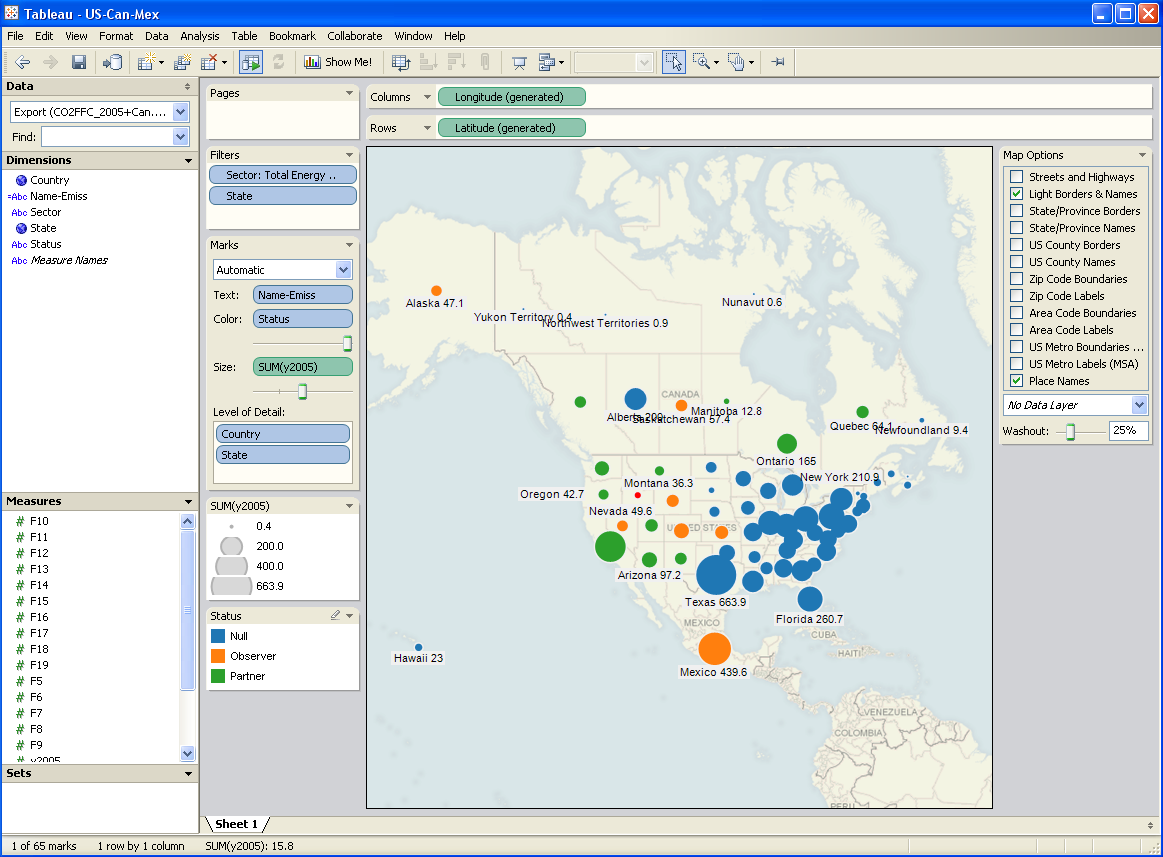

I’ve been testing a data mining and visualization tool called Tableau. It seems to be a hot topic in that world, and I can see why. It’s a very elegant way to access large database servers, slicing and dicing the data many different ways via a clean interface. It works equally well on small datasets in Excel. It’s very user-friendly, though it helps a lot to understand the relational or multidimensional data model you’re using. Plus it just looks good. I tried it out on some graphics I wanted to generate for a collaborative workshop on the Western Climate Initiative. Two examples:

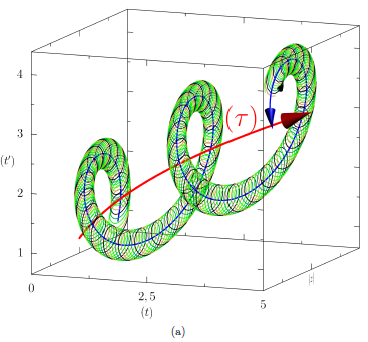

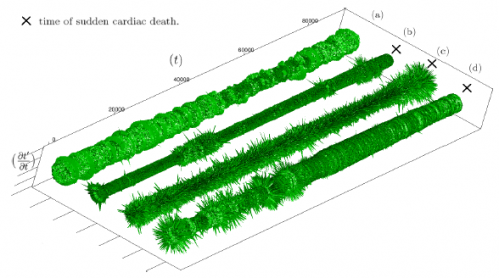

A year or two back, I created a tool, based on VisAD, that uses the Vensim .dll to do multidimensional visualization of model output. It’s much cruder, but cooler in one way: it does interactive 3D. Anyway, I hoped that Tableau, used with Vensim, would be a good replacement for my unfinished tool.

After some experimentation, I think there’s a lot of potential, but it’s not going to be the match made in heaven that I hoped for. Cycle time is one obstacle. Data can be exported from Vensim in .tab, .xls, or a relational table format (known as “data list” in the export dialog). If you go the text route (.tab), you have to pass through Excel to convert it to .csv, which Tableau reads. If you go the .xls route, you don’t need to pass through Excel, but you may need to close and reopen the Tableau workspace to avoid file lock collisions. The relational format works, but it yields a fundamentally different description of the data, which may be harder to work with.
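The Excel detour on the .tab route could probably be scripted away. Here is a minimal sketch using only Python's standard `csv` module. It assumes the Vensim .tab export is plain tab-delimited text in UTF-8, which I haven't verified for every export option. The function name `tab_to_csv` and the file names in the usage note are made up for illustration.

```python
import csv


def tab_to_csv(tab_path, csv_path, encoding="utf-8"):
    """Copy a tab-delimited text file to a comma-separated file.

    Assumption: the input is plain tab-delimited text. The encoding is a
    guess; change it if the export uses something else.
    """
    with open(tab_path, newline="", encoding=encoding) as src, \
         open(csv_path, "w", newline="", encoding=encoding) as dst:
        reader = csv.reader(src, delimiter="\t")
        writer = csv.writer(dst)  # quotes any field that contains a comma
        for row in reader:
            writer.writerow(row)
```

Calling `tab_to_csv("run.tab", "run.csv")` after each simulation would produce a file Tableau can read directly. Going through the `csv` module rather than a blind find-and-replace of tabs matters if variable names contain commas (subscripted names, for instance), since those fields get quoted instead of being split into extra columns.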

I think where the pairing might really shine is with model output exported to a database server via Vensim’s ODBC features. I’m lukewarm on doing that with relational databases, because they just don’t get time series. A multidimensional database would be much better, but unfortunately I don’t have time to try at the moment.

Whether it works with models or not, Tableau is a nice tool, and I’d recommend a test drive.

http://www.ssec.wisc.edu/~billh/visad.html