Hoisted from a comment by Luzi on Vi Hart on positive feedback driving polarization:

Can you find the point where the many positive feedback loops can be balanced or broken?

Don't just do something, stand there! Reflections on the counterintuitive behavior of complex systems, seen through the eyes of System Dynamics, Systems Thinking and simulation.

Every couple of years, an article comes out reviewing the performance of the World3 model against data, or constructing an alternative, extended model based on World3. Here’s the latest:

Abstract

This study investigates the notion of limits to socioeconomic growth with a specific focus on the role of climate change and the declining quality of fossil fuel reserves. A new system dynamics model has been created. The World Energy Model (WEM) is based on the World3 model (The Limits to Growth, Meadows et al., 2004) with climate change and energy production replacing generic pollution and resources factors. WEM also tracks global population, food production and industrial output out to the year 2100. This paper presents a series of WEM’s projections; each of which represent broad sweeps of what the future may bring. All scenarios project that global industrial output will continue growing until 2100. Scenarios based on current energy trends lead to a 50% increase in the average cost of energy production and 2.4–2.7 °C of global warming by 2100. WEM projects that limiting global warming to 2 °C will reduce the industrial output growth rate by 0.1–0.2%. However, WEM also plots industrial decline by 2150 for cases of uncontrolled climate change or increased population growth. The general behaviour of WEM is far more stable than World3 but its results still support the call for a managed decline in society’s ecological footprint.

The new paper puts economic collapse about a century later than it occurred in Limits. But that presumes that the substitution described in the abstract, “climate change and energy production replacing generic pollution and resources factors,” is a legitimate simplification: GHGs are the only pollutant, and energy the only resource, that matters. Are we really past the point of concern over PCBs, heavy metals, etc., with all future chemical and genetic technologies free of risk? Well, maybe … (Note that climate integrated assessment models generally indulge in the same assumption.)

But quibbling over dates is to miss a key point of Limits to Growth: the model, and the book, are not about point prediction of collapse in year 20xx. The central message is about a persistent overshoot behavior mode in a system with long delays and finite boundaries, when driven by exponential growth.

We have deliberately omitted the vertical scales and we have made the horizontal time scale somewhat vague because we want to emphasize the general behavior modes of these computer outputs, not the numerical values, which are only approximately known.
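To make the idea of a behavior mode concrete, here is a minimal sketch of overshoot and decline. It is a two-stock toy, not World3 or WEM: the function name, the parameter values, and the assumption that capital growth scales with the remaining resource fraction are all invented for illustration. In the spirit of the quote above, only the shape of the output matters, not the numbers.

```python
# Toy overshoot model (illustrative only; not World3 and not WEM).
# Capital grows in proportion to the remaining resource fraction and
# depletes a finite, nonrenewable resource as it operates.

def simulate_overshoot(growth=0.10, decay=0.06, use_per_capital=0.02,
                       capital0=1.0, resource0=100.0,
                       horizon=400.0, dt=0.25):
    """Euler-integrate the two stocks; return lists of time, capital, resource."""
    capital, resource = capital0, resource0
    times, capitals, resources = [0.0], [capital], [resource]
    steps = round(horizon / dt)
    for i in range(steps):
        # Net growth is positive while the resource is abundant, negative later.
        net_growth_rate = growth * resource / resource0 - decay
        depletion = use_per_capital * capital
        capital += dt * capital * net_growth_rate
        resource = max(resource - dt * depletion, 0.0)
        times.append((i + 1) * dt)
        capitals.append(capital)
        resources.append(resource)
    return times, capitals, resources

times, capitals, resources = simulate_overshoot()
peak_index = max(range(len(capitals)), key=capitals.__getitem__)
print(f"capital peaks near t = {times[peak_index]:.0f}, "
      f"then falls to {capitals[-1] / capitals[peak_index]:.0%} of its peak")
```

Changing the hypothetical parameters moves the date and height of the peak, but the growth, peak, and decline pattern persists as long as the decay rate is positive and the resource is finite.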

There are two ways to go about building a model.

Ideally, both approaches converge to the same point.

Plan B is attractive, for several reasons. It helps you to explore a wide range of ideas. It gives a satisfying illusion of rapid progress. And, most importantly, it’s satisfying for stakeholders, who typically have a voracious appetite for detail and a limited appreciation of dynamics.

The trouble is, Plan B does not really exist. When you build a lot of structure quickly, the sacrifice you have to make is ignoring lots of potential interactions, consistency checks, and other relationships between components. You’re creating a large backlog of undiscovered rework, which the extensive SD literature on projects tells us is fatal. So, you’re really on Path C, which leads to disaster: a large, incomprehensible, low-quality model.

In addition, you rarely have as much time as you think you do. When your work gets cut short, only Path A gives you an end product that you can be proud of.

So, resist pressures to include every detail. Embrace elegant simplicity and rich feedback. Check your units regularly, test often, and “always be done” (as Jim Hines puts it). Your life will be easier, and you’ll solve more problems in the long run.

Related: How to critique a model (and build a model that withstands critique)

My award for dumbest headline of the week goes to The Atlantic:

Climate Change Can Be Stopped by Turning Air Into Gasoline

A team of scientists from Harvard University and the company Carbon Engineering announced on Thursday that they have found a method to cheaply and directly pull carbon-dioxide pollution out of the atmosphere.

If their technique is successfully implemented at scale, it could transform how humanity thinks about the problem of climate change. It could give people a decisive new tool in the race against a warming planet, but could also unsettle the issue’s delicate politics, making it all the harder for society to adapt.

Their research seems almost to smuggle technologies out of the realm of science fiction and into the real. It suggests that people will soon be able to produce gasoline and jet fuel from little more than limestone, hydrogen, and air. It hints at the eventual construction of a vast, industrial-scale network of carbon scrubbers, capable of removing greenhouse gases directly from the atmosphere.

The underlying article that triggered the story has nothing to do with turning CO2 into gasoline. It’s purely about lower-cost direct capture of CO2 from the air (DAC). Even if we assume that the article’s right, and DAC is now cheaper, that in no way means “climate change can be stopped.” There are several huge problems with that notion:

First, if you capture CO2 from the air, make a liquid fuel out of it, and burn that in vehicles, you’re putting the CO2 back in the air. This doesn’t reduce CO2 in the atmosphere; it just reduces the growth rate of CO2 in the atmosphere by displacing the fossil carbon that would otherwise be used. With constant radiative forcing from elevated CO2, temperature will continue to rise for a long time. You might get around this by burning the fuel in stationary plants and sequestering the CO2, but there are huge problems with that as well. There are serious sink constraint problems, and lots of additional costs.

Second, just how do you turn all that CO2 into fuel? The additional step is not free, nor is it conventional Fischer-Tropsch technology, which starts with syngas from coal or gas. You need relatively vast amounts of energy and hydrogen to do it on the necessary gigatons/year scale. One estimate puts the cost of such fuels at $3.80-9.20 a gallon (some of the costs overlap, but it’ll be more at the pump, after refining and marketing).

Third, who the heck is going to pay for all of this? If you want to just offset global emissions of ~40 gigatons CO2/year at the most optimistic cost of $100/ton, with free fuel conversion, that’s $4 trillion a year. If you’re going to cough up that kind of money, there are a lot of other things you could do first, but no one has an incentive to do it when the price of emissions is approximately zero.
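The arithmetic behind that figure is easy to check. This snippet uses only the two numbers quoted above; the variable names are mine.

```python
# Back-of-envelope check of the offset cost quoted above.
emissions_tons_per_year = 40e9   # ~40 gigatons CO2/year (figure from the text)
capture_cost_per_ton = 100.0     # $/ton, the optimistic cost from the text

annual_cost_dollars = emissions_tons_per_year * capture_cost_per_ton
print(f"${annual_cost_dollars / 1e12:.0f} trillion per year")
```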

Ironically, the Carbon Engineering team seems to be aware of these problems:

Keith said it was important to still stop emitting carbon-dioxide pollution where feasible. “My view is we should stick to trying to cut emissions first. As a voter, my view is it’s cheaper not to emit a ton of [carbon dioxide] than it is to emit it and recapture it.”

I think there are two bottom lines here:

Climate skeptics seem to have a thing for contests and bets. For example, there’s Armstrong’s proposed bet, baiting Al Gore. Amusingly (for data nerds anyway), the bet, which pitted a null forecast against the taker’s chosen climate model, could have been beaten easily by either a low-order climate model or a less-naive null forecast. And, of course, it completely fails to understand that climate science is not about fitting a curve to the global temperature record.

Another instance of such foolishness recently came to my attention. It doesn’t have a name that I know of, but here’s the basic idea:

Each series has length 135: the same length as that of the most commonly studied series of global temperatures (which span 1880–2014). The 1000 series were generated as follows. First, 1000 random series were obtained (for more details, see below). Then, some of those series were randomly selected and had a trend added to them. Each added trend was either 1°C/century or −1°C/century. For comparison, a trend of 1°C/century is greater than the trend that is claimed for global temperatures.

The best challenger managed to identify 860 series, so the prize went unclaimed. But only two challenges are described, so I have to wonder how many serious attempts were made. Had I known about the contest in advance, I would not have tried it. I know plenty about fitting dynamic models to data, though abstract statistical methods aren’t really my thing. But I still have to ask myself some questions:

For me, the statistical properties of the contest make it an obvious non-starter. But does it have any redeeming social value? For example, is it an interesting puzzle that has something to do with actual science? Sadly, no.

The hidden assumption of the contest is that climate science is about estimating the trend of the global temperature time series. Yes, people do that. But it’s a tiny fraction of climate science, and it’s a diagnostic of models and data, not a real model in itself. Science in general is not about such things. It’s about getting a good model, not a good fit. In some places the author talks about real physics, but ultimately seems clueless about this – he’s content with unphysical models:

Moreover, the Contest model was never asserted to be realistic.

…

Are ARIMA models truly appropriate for climatic time series? I do not have an opinion. There seem to be no persuasive arguments for or against using ARIMA models. Rather, studying such models for climatic series seems to be a worthy area of research.

…

Liljegren’s argument against ARIMA is that ARIMA models have a certain property that the climate system does not have. Specifically, for ARIMA time series, the variance becomes arbitrarily large, over long enough time, whereas for the climate system, the variance does not become arbitrarily large. It is easy to understand why Liljegren’s argument fails.

It is a common aphorism in statistics that “all models are wrong”. In other words, when we consider any statistical model, we will find something wrong with the model. Thus, when considering a model, the question is not whether the model is wrong—because the model is certain to be wrong. Rather, the question is whether the model is useful, for a particular application. This is a fundamental issue that is commonly taught to undergraduates in statistics. Yet Liljegren ignores it.

As an illustration, consider a straight line (with noise) as a model of global temperatures. Such a line will become arbitrarily high, over long enough time: e.g. higher than the temperature at the center of the sun. Global temperatures, however, will not become arbitrarily high. Hence, the model is wrong. And so—by an argument essentially the same as Liljegren’s—we should not use a straight line as a model of temperatures.

In fact, a straight line is commonly used for temperatures, because everyone understands that it is to be used only over a finite time (e.g. a few centuries). Over a finite time, the line cannot become arbitrarily high; so, the argument against using a straight line fails. Similarly, over a finite time, the variance of an ARIMA time series cannot become arbitrarily large; so, Liljegren’s argument fails.

Actually, no one in climate science uses straight lines to predict future temperatures, because forcing is rising, and therefore warming will accelerate. But that’s a minor quibble, compared to the real problem here. If your model is:

global temperature = f( time )

you’ve just thrown away 99.999% of the information available for studying the climate. (Ironically, the author’s entire point is that annual global temperatures don’t contain a lot of information.)

No matter how fancy your ARIMA model is, it knows nothing about conservation laws, robustness in extreme conditions, dimensional consistency, or real physical processes like heat transfer. In other words, it fails every reality check a dynamic modeler would normally apply, except the weakest – fit to data. Even its fit to data is near-meaningless, because it ignores all other series (forcings, ocean heat, precipitation, etc.) and has nothing to say about replication of spatial and seasonal patterns. That’s why this contest has almost nothing to do with actual climate science.
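For readers who want to see the variance property that the quoted exchange turns on, here is a small sketch. It assumes the simplest integrated case, a random walk (ARIMA(0,1,0)), and compares it with a stationary AR(1) process; the function name, ensemble size, and coefficient 0.9 are arbitrary choices of mine, not anything from the contest.

```python
import random

def ensemble_variance(phi, n_series=1000, length=400, checkpoints=(100, 400), seed=1):
    """Variance across an ensemble of x[t] = phi * x[t-1] + noise at given times.

    phi = 1.0 gives a random walk (ARIMA(0,1,0)); |phi| < 1 gives a
    stationary AR(1) process.
    """
    rng = random.Random(seed)
    samples = {t: [] for t in checkpoints}
    for _ in range(n_series):
        x = 0.0
        for t in range(1, length + 1):
            x = phi * x + rng.gauss(0.0, 1.0)
            if t in samples:
                samples[t].append(x)
    variances = {}
    for t, values in samples.items():
        mean = sum(values) / len(values)
        variances[t] = sum((v - mean) ** 2 for v in values) / (len(values) - 1)
    return variances

random_walk = ensemble_variance(phi=1.0)
stationary = ensemble_variance(phi=0.9)
print("random walk:", random_walk)
print("AR(1), phi=0.9:", stationary)
```

The random walk’s ensemble variance keeps growing roughly in proportion to elapsed time, while the stationary process settles at a bounded level. Neither process knows anything about energy balance, which is the larger point here.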

This is also why data-driven machine learning approaches have a long way to go before they can handle general problems. It’s comparatively easy to learn to recognize the cats in a database of photos, because the data spans everything there is to know about the problem. That’s not true for systemic problems, where you need a web of data and structural information at multiple scales in order to understand the situation.

Today I was looking for DYNAMO documentation of the TRND macro. Lo and behold, archive.org has the second edition of the DYNAMO User Guide online. It reminds me that I was lucky to have missed the punch card era:

… but not quite lucky enough to miss timesharing and the teletype:

The computer under my desk today would have been the fastest in the world the year I finished my dissertation. We’ve come a long way.

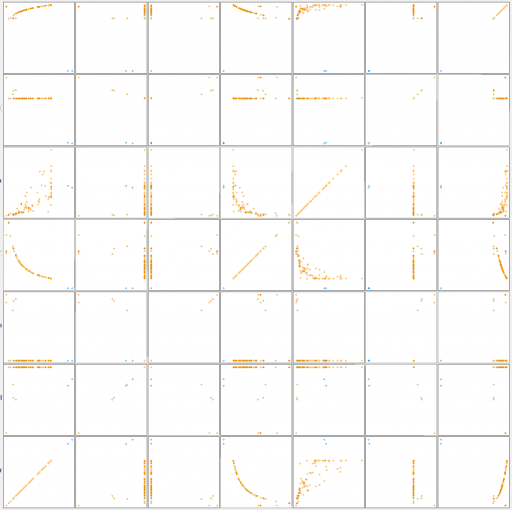

A colleague sent me a model that was yielding puzzling results in policy optimization. The model has multiple optima (not uncommon), so one question of interest is, how many peaks are there, and where? The parameter space is six-dimensional, so this is not practical to work out by intuition (especially for me, with no familiarity with the model).

One way to count the peaks is to use hill climbing optimization from multiple random starting points (Vensim’s Powell method, with multiple start). Then you look for clusters of endpoints that are presumably within a small tolerance of the maximum.
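The procedure can be sketched in a few lines. This is not Vensim’s Powell implementation: it is a hand-rolled axis-by-axis search on a hypothetical two-dimensional payoff with two well-behaved peaks, and the function names and rounding-based clustering are my own simplifications.

```python
import math
import random

def payoff(x, y):
    """Hypothetical two-peak surface: maxima near (1, 1) and (-1, -1)."""
    return (math.exp(-((x - 1) ** 2 + (y - 1) ** 2))
            + 0.8 * math.exp(-((x + 1) ** 2 + (y + 1) ** 2)))

def hill_climb(point, step=0.5, tolerance=1e-4):
    """Axis-by-axis search: move while any axis step improves, else halve the step."""
    point = list(point)
    best = payoff(*point)
    while step > tolerance:
        improved = False
        for axis in range(len(point)):
            for direction in (step, -step):
                trial = list(point)
                trial[axis] += direction
                value = payoff(*trial)
                if value > best:
                    point, best, improved = trial, value, True
        if not improved:
            step /= 2
    return point, best

rng = random.Random(0)
starts = [(rng.uniform(-3, 3), rng.uniform(-3, 3)) for _ in range(30)]
endpoints = [hill_climb(start)[0] for start in starts]

# Cluster by rounding: endpoints that agree to one decimal count as one peak.
clusters = {}
for x, y in endpoints:
    key = (round(x, 1), round(y, 1))
    clusters[key] = clusters.get(key, 0) + 1
print(clusters)
```

On a surface this tidy, every start lands on one of the two peaks and the clusters are unambiguous. That clean outcome is what you would hope to see in the real six-dimensional problem.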

Interestingly, that doesn’t work out very well. After a lot of simulation, it appears that the model has two local optima. But in a large ensemble of simulations, about a third of the results make it to each of the peaks. The remaining third wind up strung out over the parameter space, seemingly at random.

Simple clustering algorithms like kmeans fail to discover the regularity of the results, so they indicate that there are more like half a dozen optima. But if you look at a scatter plot matrix of the solutions across the six dimensions, you quickly see it:

Scatter plot for results along the 6 parameter dimensions, plus the payoff.

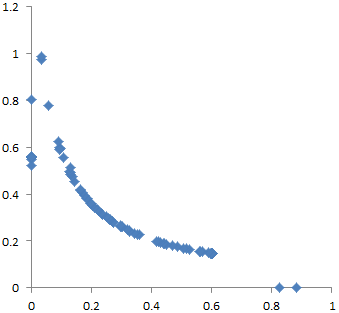

Endpoints for 2 of the 6 parameters

Why does this happen? I think there are two reasons. First, the model is somewhat sloppy – two of the dimensions dominate the payoff, and the rest have small effects, and therefore are more difficult to traverse numerically. Second, along the minor dimensions, tradeoffs create a curving valley. Imagine a parabola in the z dimension, extruded along the hyperbolic x-y curve above. The upper surface of a banana is a pretty good model for this in 3D.

The basic idea of direction set optimization methods is to build up a set of “good” search directions, based on earlier searches along the principal axes. This works well if the surface is basically elliptical, like the Easter egg. But it doesn’t work on the banana, because there is no consistent good direction.

Suppose you arrive at a point on the ridge via a series of searches of the axes, with net direction given by the green arrow above. The projection of that net direction (orange) is not a good search direction, because it immediately begins to fall off the side of the ridge. Returning to the original principal axes yields the same problem. In fact, almost any set of directions, other than the lucky one that proceeds along the ridge, is likely to get stuck at an apparent local optimum. I’m pretty sure a gradient method will have the same problem (plus other numerical problems in more general cases).

What to do about this? I think there are several options.

If you know the hyperbolic valley is there, you can transform the dimensions to get rid of it. For example, if you take the logs of the axes, a hyperbola becomes a line. That makes the valley tractable for a direction set search. But it’s unlikely that you know about the curving valley a priori. Resource allocation tradeoffs are likely to create such features, but it’s tough to know when and where. So this is not a very general, or convenient, solution.
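A quick numerical check of the log transform, assuming the simplest hyperbola x·y = c (the constant and sample points are arbitrary): in log coordinates the points satisfy log y = log c − log x, a straight line with slope −1.

```python
import math

c = 4.0                                   # hypothetical constant for x * y = c
xs = [0.5, 1.0, 2.0, 4.0, 8.0]
points = [(x, c / x) for x in xs]         # points on the hyperbola

# In log space the same points should be collinear with slope -1.
logs = [(math.log(x), math.log(y)) for x, y in points]
slopes = [(v1 - v0) / (u1 - u0)
          for (u0, v0), (u1, v1) in zip(logs, logs[1:])]
print(slopes)
```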

Another option is to forget about direction sets and go to a stochastic method. The differential evolution MCMC in Vensim also works as a simulated annealing optimizer. Essentially, you’re taking a motivated random walk on the payoff surface. There’s some willingness to take uphill steps, which prevents you from getting stuck in the curving valley. This approach works pretty well in this case. However, when hill-climbing works, it’s a lot more efficient than evolutionary methods.

I think there’s a third option, which you might call a 2nd order direction set. The basic idea is to estimate not just the progress, but the curvature of progress, over multiple iterations through a set of directions. This makes it possible to guess where the curvy ridge is heading. In general, as soon as you look for higher-order approximations of things, you become more susceptible to noise and numerical pathologies. That might make this a waste of time in some cases.

However, I’m experimenting with this in the context of a new parallel optimization code in Vensim, and it turns out to be computationally cheap to explore a few extra directions on the side, in the hope of getting lucky. So far, results are encouraging. The improvement from making iterative algorithms parallel in Vensim is already massive, and the 2nd-order “banana-killer” seems to add a further 50% improvement in progress along curvy ridges.

All this talk of fruit is making me hungry, so that’s it for now, but there’s much more to come on this frontier.

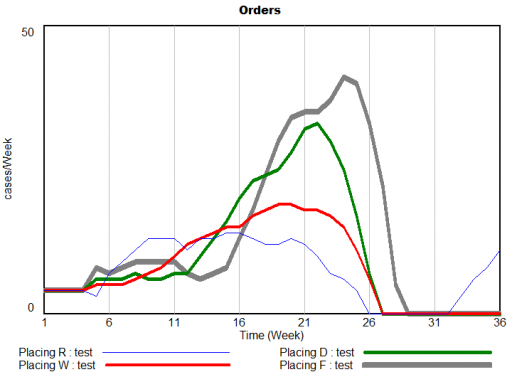

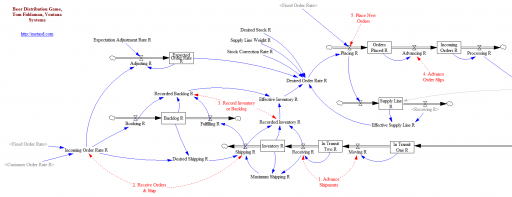

The Beer Game is the classic business game in system dynamics, demonstrating just how tricky it can be to manage a seemingly simple system with delays and feedback. It’s a great icebreaker for teams, because it makes it immediately clear that catastrophes happen endogenously and finger-pointing is useless.

The system demonstrates amplification, aka the bullwhip effect, in supply chains. John Sterman analyzes the physical and behavioral origins of underperformance in the game in this Management Science paper. Steve Graves has some nice technical observations about similar systems in this MSOM paper.

Here are two versions that are close to the actual board game and the Sterman article:

Beer Game Fiddaman NoSubscripts.zip

This version doesn’t use arrays, and therefore should be usable in Vensim PLE. It includes a bunch of .cin files that implement the (calibrated) decision heuristics of real teams of the past, as well as some sensitivity and optimization control files.

This version does use arrays to represent the levels of the supply chain. That makes it a little harder to grasp, but much easier to modify if you want to add or remove levels from the system or conduct optimization experiments. It requires Vensim Pro or DSS, or the Model Reader.

An integrated market model is a hungry beast. It wants data from a variety of areas of a firm’s business, often from a variety of sources. As I said in my previous post, typically these data streams have never been considered together before, and therefore they’re full of contradictions and quality issues. Here’s a real world example, from the pharma business. The details are proprietary, and I’ve stylized the data, but the story is pretty simple.

Suppose you have a product with two different indications. One is short term (for injuries, a 4 month treatment), and one is long term (for a chronic condition, over 24 months). It’s of obvious interest to understand the two markets individually, to enable allocation of resources to distinct marketing efforts for each set of doctors and patients.

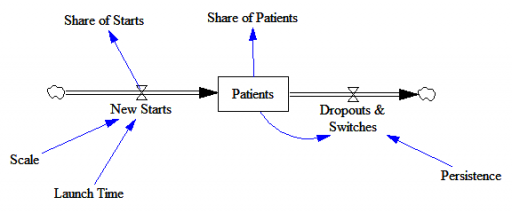

Here’s the structure of the market:

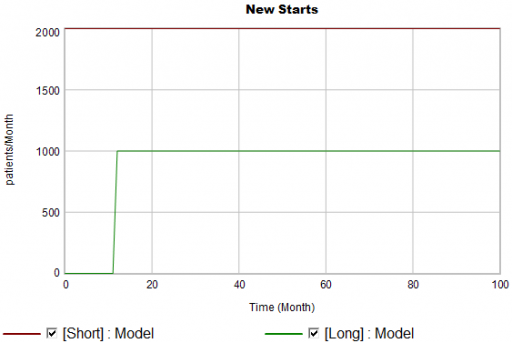

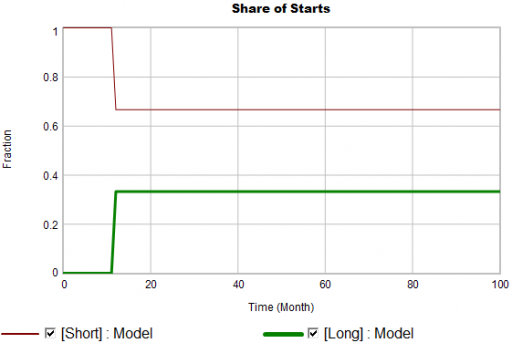

New patients are started on therapy. They remain in the stock of Patients for some time, before they drop out of therapy or switch to another drug. Initially, just the short term indication is approved; the long term indication gets approved a year into the simulation:

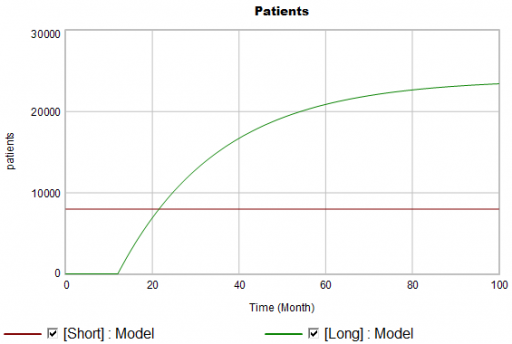

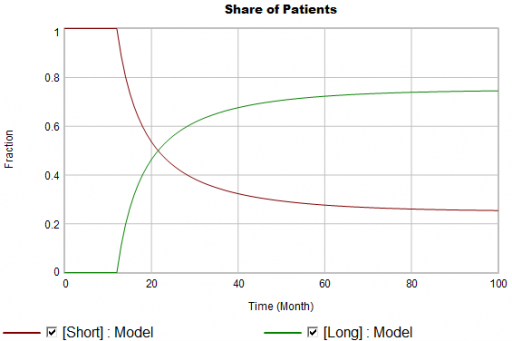

There are twice as many short term starts, but the long term patients stick around 6 times as long, so ultimately there are a lot more of them:

Notice that this is simple first-order goal seeking behavior. The long term patient population is rising toward an equilibrium of (1000 patients/month) × (24 months persistence) = 24,000 patients, over a time scale of 24 months.
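The goal-seeking behavior is easy to reproduce. This is a minimal Euler-integration sketch of a single stock, using the stylized numbers above; the function name, time step, and 240-month horizon are my assumptions.

```python
def simulate_patients(starts_per_month=1000.0, persistence_months=24.0,
                      launch_month=12.0, horizon_months=240.0, dt=0.25):
    """First-order stock: inflow steps up at launch, outflow = stock / persistence.

    Returns the stock history; history[k] is the stock at time k * dt.
    """
    patients = 0.0
    history = [patients]
    for i in range(round(horizon_months / dt)):
        t = i * dt
        starts = starts_per_month if t >= launch_month else 0.0
        dropouts = patients / persistence_months
        patients += dt * (starts - dropouts)
        history.append(patients)
    return history

dt = 0.25
history = simulate_patients(dt=dt)
one_time_constant = history[round(36 / dt)]   # 24 months after launch
print(f"month 36: {one_time_constant:,.0f} patients")
print(f"month 240: {history[-1]:,.0f} patients")
```

One time constant after launch, the stock has covered about 63% of the distance to equilibrium, and it never exceeds 24,000. That is the signature to compare against the data.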

Puzzle #1

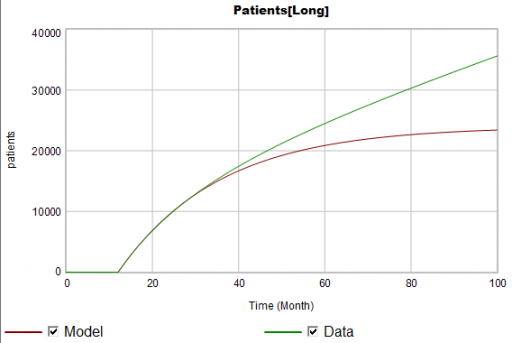

Suppose the data for the long term patients is doing something different (note that the colors now refer to model and data):

The model is goal-seeking, but the patient population data keeps rising. Bathtub dynamics says that it’s impossible for the step in the inflow of starts to integrate to this pattern when the outflow of dropouts is first order. You’d have to conclude that the model can’t fit the data, without invoking some additional assumptions. For example, the persistence of the long term patients might be increasing as doctors gain experience or the composition of the patient population changes.

But what if I told you that the driving data, new starts, isn’t a “real” measurement? First, new prescriptions aren’t easy to distinguish from refills, and there’s a certain amount of overcounting when patients switch pharmacies or otherwise drop out of the data, then reappear. Second, the short term and long term patients take the same drug, and prescription records don’t say why. So, the data vendor infers the split from dosages, prescriber specialties, and the phase of the moon. The inference happens in an undocumented black box algorithm and there’s no way to establish the ground truth of its performance.

Now, do you trust the algorithm, or doctors who say they know the duration of treatment – but might be missing something too?

Puzzle #2

Even in the presence of algorithmic uncertainty, you’d expect certain dynamic reality checks to pass. Consider the share of long term patients in the market. For new starts, it’s a step function, rising from 0 to 1/3 at launch in month 12:

Again, from the bathtub, we know that the patient population can’t instantly mimic the step in starts. If the system is first order with constant persistence, the long term share of patients should rise gradually to 3/4 (1000*24/(1000*24+2000*4)). If persistence is increasing, per puzzle #1, it might go higher on a longer time scale, but it can’t go faster.
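Here is a sketch of that reality check, again with the stylized numbers from the text. It assumes both stocks start empty and that short term starts begin at time zero; the function name and time step are mine.

```python
def simulate_share(horizon_months=240.0, dt=0.25, launch_month=12.0):
    """Return (times, long-term share of the patient stock) for two first-order stocks."""
    short_starts, short_persistence = 2000.0, 4.0    # patients/month, months
    long_starts, long_persistence = 1000.0, 24.0
    short_patients = long_patients = 0.0
    times, shares = [], []
    for i in range(round(horizon_months / dt)):
        t = i * dt
        long_inflow = long_starts if t >= launch_month else 0.0
        short_patients += dt * (short_starts - short_patients / short_persistence)
        long_patients += dt * (long_inflow - long_patients / long_persistence)
        times.append(t + dt)
        shares.append(long_patients / (short_patients + long_patients))
    return times, shares

times, shares = simulate_share()
share_at = dict(zip(times, shares))
print(f"share of starts after launch: {1000 / (1000 + 2000):.2f}")
print(f"share of patients, month 13:  {share_at[13.0]:.2f}")
print(f"share of patients, month 240: {shares[-1]:.2f}")
```

A month after launch, the long term share of the patient stock is still well below the 1/3 share of new starts. It only approaches 3/4 after several multiples of the 24-month persistence.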

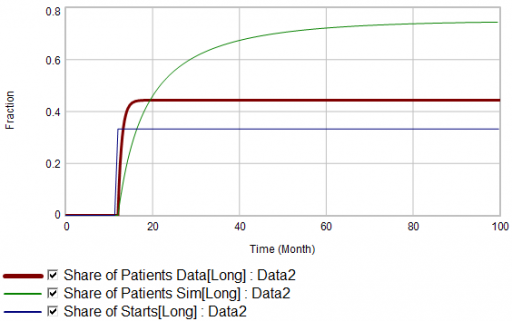

Now, suppose the data does something unexpected:

Here, the patient population share data mimics the share of new starts with a time constant that’s very short compared to the persistence of therapy. This should be dynamically impossible in a simple system. But, as always, you could start invoking time varying inputs or parameters to explain what the data shows. (And remember that the real data is noisy, making it harder to be sure about anything.)

But I think there’s another, simpler explanation. The data vendor could be using the same or similar algorithms to classify new starts and existing patients. It could be wrong about the inflow split, or wrong about the stocks, or both. And, it could be reclassifying existing patients from short to long and back with a time constant much faster than the persistence of therapy permits.

Conclusion

It turns out that, in spite of having lots of data about this system, we don’t actually know much. This is a problem for model calibration, because we don’t know which source to trust. Uncertainty in the calibration propagates into decision making. It’s awkward for people in the firm to revise the stories they’ve used to justify past actions. It ought to be awkward for the data vendor to provide flaky information, but luckily they have a near-monopoly.

But we still have options:

A real challenge for modelers is that model consumers typically have science tastes on a propaganda budget. People are used to seeing data that looks precise, full of enticing detail, with conclusions that sound plausible, but are little more than superstition. It’s cheap to make nice graphics and long, figure-rich PowerPoint decks.

Really sorting out what’s going on in situations like this is hard, but it can have great strategic value. For example, in this case, if persistence is increasing, it’s more critical than ever to win the long term patients. If market shares could differ dramatically from what measurements report, competitive threats and opportunities could go unnoticed. Anyone who can use models to discover the fog of data and see through it will have a real competitive edge.